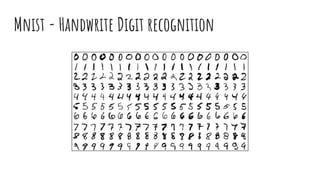

The document provides an overview of deep learning and its applications, focusing on various neural network architectures and frameworks like Caffe. It details the author's background, including their work in web development, teaching, and research in image classification using CNNs. Additionally, it discusses practical implementations, comparisons between CPU and GPU performance, and segmentation algorithms for image processing.

![Pycaffe - Example of use - Building transformer](https://image.slidesharecdn.com/deeplearning-theconf2018-181106005751/85/Deep-learning-the-conf-br-2018-48-320.jpg)

```python
>>> mu = np.load(caffe_root +
...              'python/caffe/imagenet/ilsvrc_2012_mean.npy')
>>> mu = mu.mean(1).mean(1)  # average over pixels -> one mean value per (BGR) channel
>>> transformer = caffe.io.Transformer({'data':
...                                     net.blobs['data'].data.shape})
>>> transformer.set_transpose('data', (2,0,1))      # H x W x C -> C x H x W
>>> transformer.set_mean('data', mu)                # subtract the per-channel mean
>>> transformer.set_raw_scale('data', 255)          # rescale from [0, 1] to [0, 255]
>>> transformer.set_channel_swap('data', (2,1,0))   # swap channels from RGB to BGR
```
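For readers without a Caffe install, the transformer settings above can be approximated in plain NumPy. This is a minimal sketch, not pycaffe's code: it assumes the image is already the network's input size (pycaffe's resize step is omitted), that it arrives as an H×W×3 RGB float array in [0, 1] like `caffe.io.load_image` returns, and it applies the steps in the order pycaffe's `Transformer.preprocess` appears to use them (transpose, channel swap, raw scale, mean subtraction). The helper name `preprocess_like_caffe` and the synthetic mean file are hypothetical.

```python
import numpy as np


def preprocess_like_caffe(image, mean_bgr):
    """Hypothetical helper mimicking the transformer configured above.

    image: H x W x 3 RGB array with values in [0, 1] (no resizing is done here).
    mean_bgr: length-3 per-channel mean in BGR order.
    Returns a 3 x H x W BGR float32 array in the [0, 255] range, minus the mean.
    """
    out = image.astype(np.float32)
    out = out.transpose(2, 0, 1)   # like set_transpose((2, 0, 1)): HxWxC -> CxHxW
    out = out[[2, 1, 0], :, :]     # like set_channel_swap((2, 1, 0)): RGB -> BGR
    out = out * 255.0              # like set_raw_scale(255): [0, 1] -> [0, 255]
    # like set_mean: subtract one value per channel, broadcast over H and W
    out = out - np.asarray(mean_bgr, dtype=np.float32)[:, None, None]
    return out


# Synthetic stand-in for the (3, 256, 256) mean file: averaging over axis 1
# twice collapses height, then width, leaving one value per channel.
rng = np.random.default_rng(0)
fake_mean_file = rng.uniform(0, 255, size=(3, 256, 256))
mu = fake_mean_file.mean(1).mean(1)
print(mu.shape)    # (3,)

red_image = np.zeros((227, 227, 3))
red_image[..., 0] = 1.0            # pure red in RGB, values in [0, 1]
blob = preprocess_like_caffe(red_image, mu)
print(blob.shape)  # (3, 227, 227)
```

After the swap, red sits in channel index 2, so `blob[2]` holds `255 - mu[2]` everywhere while `blob[0]` holds `-mu[0]`.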

![Transpose an image](https://image.slidesharecdn.com/deeplearning-theconf2018-181106005751/85/Deep-learning-the-conf-br-2018-49-320.jpg)

Original red pixel = `[[[255, 0, 0]]]` (shape = 1, 1, 3)

Transposed red pixel = `[[[255]], [[0]], [[0]]]` (shape = 3, 1, 1)

BGR transposed = `[[[0]], [[0]], [[255]]]` (shape = 3, 1, 1)
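The red-pixel example can be reproduced with plain NumPy arrays (no pycaffe involved). Here `transpose(2, 0, 1)` and the index list `[2, 1, 0]` play the roles of the `set_transpose` and `set_channel_swap` arguments used on the earlier slide.

```python
import numpy as np

# One red pixel in H x W x C layout (RGB), shape (1, 1, 3)
pixel = np.array([[[255, 0, 0]]])
print(pixel.shape)            # (1, 1, 3)

# Channels first (C x H x W), as set_transpose('data', (2, 0, 1)) does
transposed = pixel.transpose(2, 0, 1)
print(transposed.shape)       # (3, 1, 1)
print(transposed.tolist())    # [[[255]], [[0]], [[0]]]

# Reorder channels RGB -> BGR, as set_channel_swap('data', (2, 1, 0)) does
bgr = transposed[[2, 1, 0], :, :]
print(bgr.tolist())           # [[[0]], [[0]], [[255]]]
```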

![Pycaffe - Example of use - Preparing data](https://image.slidesharecdn.com/deeplearning-theconf2018-181106005751/85/Deep-learning-the-conf-br-2018-50-320.jpg)

```python
>>> image = caffe.io.load_image(caffe_root + 'examples/images/cat.jpg')
>>> transformed_image = transformer.preprocess('data', image)
>>> net.blobs['data'].reshape(1, 3, 227, 227)
>>> net.blobs['data'].data[...] = transformed_image
>>> output = net.forward()
>>> output_prob = output['prob'][0]
```
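The line `net.blobs['data'].data[...] = transformed_image` copies the image into the existing array instead of binding a new one, which is how the input reaches the blob that `net.forward()` reads. The sketch below shows that NumPy behaviour in isolation; `blob_memory` and `data_view` are hypothetical stand-ins, and treating pycaffe's `.data` as a view over the blob's memory is an assumption made for illustration.

```python
import numpy as np

# Stand-in for the blob's memory after reshape(1, 3, 227, 227)
blob_memory = np.zeros((1, 3, 227, 227), dtype=np.float32)
data_view = blob_memory[:]          # a view sharing the same memory (assumed analogue of .data)

transformed_image = np.ones((3, 227, 227), dtype=np.float32)

# In-place copy: broadcasts (3, 227, 227) into (1, 3, 227, 227) and writes
# into the shared memory, so anything reading blob_memory sees the new input.
data_view[...] = transformed_image
print(blob_memory.sum())            # 154587.0

# Plain assignment only rebinds the name data_view; the memory is untouched.
data_view = np.full((1, 3, 227, 227), 7.0, dtype=np.float32)
print(blob_memory.max())            # 1.0
```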

![Pycaffe - Example of use - Discovering the class](https://image.slidesharecdn.com/deeplearning-theconf2018-181106005751/85/Deep-learning-the-conf-br-2018-51-320.jpg)

```python
>>> print('predicted class is:', output_prob.argmax())
predicted class is: 281
>>> labels_file = caffe_root + 'data/ilsvrc12/synset_words.txt'
>>> labels = np.loadtxt(labels_file, str, delimiter='\t')
>>> print('output label:', labels[output_prob.argmax()])
output label: n02123045 tabby, tabby cat
```
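The label lookup relies on `np.loadtxt(..., str, delimiter='\t')`: the label lines contain no tab, so each line stays one string holding the WordNet ID plus the class names, as the output above shows. The sketch reproduces the lookup on a hypothetical three-line excerpt in that format and a made-up probability vector; only the file format follows the slide, not the numbers.

```python
import io

import numpy as np

# Hypothetical excerpt in the synset_words.txt format: "<wnid> <names>" per line
labels_text = io.StringIO(
    "n02123045 tabby, tabby cat\n"
    "n02123159 tiger cat\n"
    "n02124075 Egyptian cat\n"
)
# No tabs in the lines, so each whole line becomes one array element
labels = np.loadtxt(labels_text, str, delimiter='\t')

# Made-up class probabilities, one per label
output_prob = np.array([0.2, 0.7, 0.1])
predicted = output_prob.argmax()
print('predicted class is:', predicted)      # predicted class is: 1
print('output label:', labels[predicted])    # output label: n02123159 tiger cat
```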

![Pycaffe - Example of use - Top N classes](https://image.slidesharecdn.com/deeplearning-theconf2018-181106005751/85/Deep-learning-the-conf-br-2018-52-320.jpg)

```python
>>> top_inds = output_prob.argsort()[::-1][:5]  # indices of the 5 highest probabilities
>>> print('probabilities and labels:')
probabilities and labels:
>>> list(zip(output_prob[top_inds], labels[top_inds]))
[(0.31243637, 'n02123045 tabby, tabby cat'),
 (0.2379719, 'n02123159 tiger cat'),
 (0.12387239, 'n02124075 Egyptian cat'),
 (0.10075711, 'n02119022 red fox, Vulpes vulpes'),
 (0.070957087, 'n02127052 lynx, catamount')]
```
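`argsort()[::-1][:k]` sorts every score, reverses to descending order and keeps the first k indices. The sketch below wraps that idiom and, for comparison, `np.argpartition`, a NumPy alternative not used on the slide that avoids a full sort; its k winners come back in arbitrary order, so they are sorted afterwards. The probability vector is made up.

```python
import numpy as np


def top_k(probs, k):
    """Indices of the k largest probabilities, highest first (full sort, as on the slide)."""
    return probs.argsort()[::-1][:k]


def top_k_partition(probs, k):
    """Same result via a partial sort, then ordering only the k winners."""
    idx = np.argpartition(probs, -k)[-k:]       # the k largest, in arbitrary order
    return idx[np.argsort(probs[idx])[::-1]]    # order them highest first


probs = np.array([0.05, 0.31, 0.02, 0.24, 0.12, 0.10, 0.07, 0.09])
print(top_k(probs, 3))              # [1 3 4]
print(top_k_partition(probs, 3))    # [1 3 4]
```

With 1000 ImageNet classes either version is fast; the partition form matters only when the score vector is very large.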

![Or you can compare images using distance](https://image.slidesharecdn.com/deeplearning-theconf2018-181106005751/85/Deep-learning-the-conf-br-2018-53-320.jpg)

```python
# Load images
>>> image_1 = caffe.io.load_image(my_image_1_path)
>>> image_2 = caffe.io.load_image(my_image_2_path)
# Transform images
>>> transformed_image_1 = transformer.preprocess('data', image_1)
>>> transformed_image_2 = transformer.preprocess('data', image_2)
# Reshape the net to a batch of two images and load them
>>> net.blobs['data'].reshape(2, 3, 227, 227)
>>> net.blobs['data'].data[0, ...] = transformed_image_1
>>> net.blobs['data'].data[1, ...] = transformed_image_2
```

![Or you can compare images using distance](https://image.slidesharecdn.com/deeplearning-theconf2018-181106005751/85/Deep-learning-the-conf-br-2018-54-320.jpg)

```python
>>> output = net.forward()
>>> image_1_features = net.blobs['fc7'].data[0]
>>> image_2_features = net.blobs['fc7'].data[1]
>>> from scipy.spatial import distance
>>> image_1_2_dist = distance.euclidean(image_1_features,
...                                     image_2_features)
# The closer image_1_2_dist is to 0, the more similar the two images are
```
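`distance.euclidean` returns the square root of the summed squared differences between the two fc7 feature vectors. The NumPy sketch below computes the same quantity without SciPy; the short made-up vectors stand in for real fc7 activations, which are much longer.

```python
import numpy as np


def euclidean(a, b):
    """Euclidean distance: square root of the sum of squared differences."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return float(np.sqrt(np.sum((a - b) ** 2)))


# Made-up feature vectors standing in for fc7 activations
features_cat_1 = np.array([0.0, 3.0, 1.0, 0.0])
features_cat_2 = np.array([0.0, 3.0, 1.0, 0.5])
features_car = np.array([4.0, 0.0, 1.0, 0.0])

print(euclidean(features_cat_1, features_cat_2))  # 0.5
print(euclidean(features_cat_1, features_car))    # 5.0
```

The smaller distance between the two "cat" vectors is what the slide's comment relies on: similar images should produce nearby feature vectors.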

![Using OpenCV](https://image.slidesharecdn.com/deeplearning-theconf2018-181106005751/85/Deep-learning-the-conf-br-2018-57-320.jpg)

```python
>>> import cv2  # cv2.ximgproc comes with the opencv-contrib-python package
>>> image = cv2.imread(image_path)
>>> selective_search = cv2.ximgproc.segmentation.createSelectiveSearchSegmentation()
>>> selective_search.setBaseImage(image)
>>> selective_search.switchToSelectiveSearchFast()  # pick a preset (Fast or Quality) before process()
>>> segments = selective_search.process()
>>> x, y, w, h = segments[0]
>>> cropped_image = image[y:(y+h), x:(x+w)]
```
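`process()` returns an array of (x, y, w, h) rectangles, often a large number of them, while the slide crops only the first. A minimal sketch of cropping several proposals, assuming that rectangle format: the helper `crop_proposals` and its `max_regions`/`min_side` filters are hypothetical, and a synthetic image and rectangle array replace the OpenCV output so the snippet runs without opencv-contrib.

```python
import numpy as np


def crop_proposals(image, rects, max_regions=10, min_side=20):
    """Hypothetical helper: crop up to max_regions (x, y, w, h) proposals from image.

    Rectangles narrower or shorter than min_side pixels are skipped
    (an illustrative filter, not something OpenCV does for you).
    """
    crops = []
    for x, y, w, h in rects:
        if w < min_side or h < min_side:
            continue
        # Rows are indexed by y and columns by x, the same slicing as on the slide
        crops.append(image[y:y + h, x:x + w])
        if len(crops) == max_regions:
            break
    return crops


# Synthetic 100 x 200 BGR image and proposals standing in for process() output
image = np.zeros((100, 200, 3), dtype=np.uint8)
rects = np.array([[10, 5, 50, 40], [0, 0, 5, 5], [120, 30, 60, 60]])
crops = crop_proposals(image, rects)
print([c.shape for c in crops])  # [(40, 50, 3), (60, 60, 3)]
```

Each crop could then go through `transformer.preprocess` and the network like any other image.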