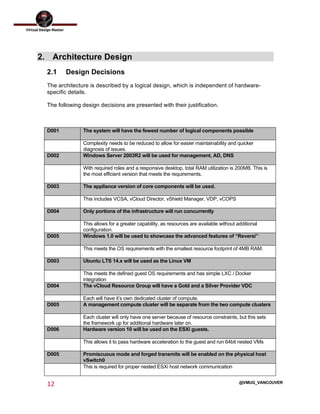

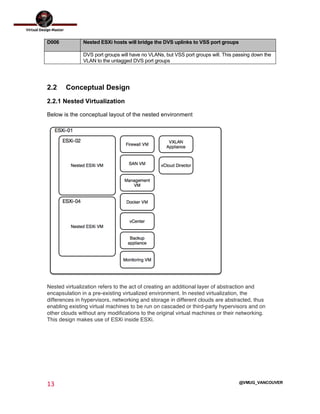

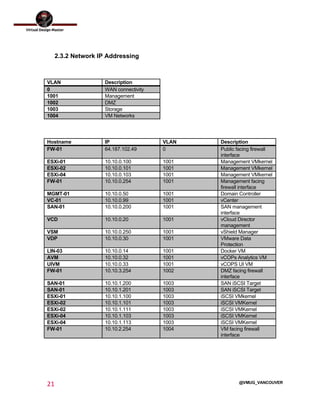

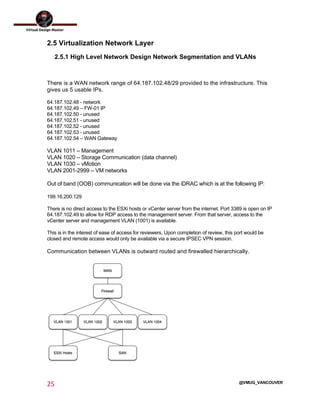

This document outlines the architecture design for a system supporting human evacuation from Earth to Mars, detailing technical requirements, design decisions, and deployment strategies. The design uses a nested virtualization setup built on VMware components and targets scalability, reliability, and maintainability within strict resource constraints. It also identifies key stakeholders and technical team members, with an emphasis on collaborative preparation for future infrastructure expansion.