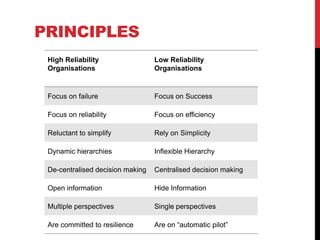

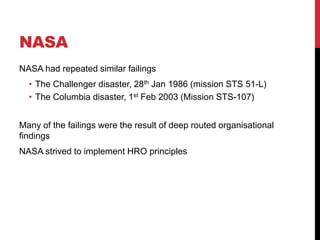

This document discusses the qualities of high reliability organizations (HROs) and how they differ from low reliability organizations based on five key principles:

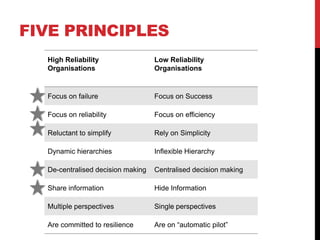

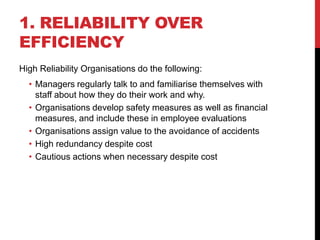

1. HROs prioritize reliability over efficiency while low reliability orgs prioritize efficiency.

2. HROs are preoccupied with failure while low reliability orgs focus on success.

3. HROs ensure everyone understands the big picture while low reliability orgs rely on narrow focus.

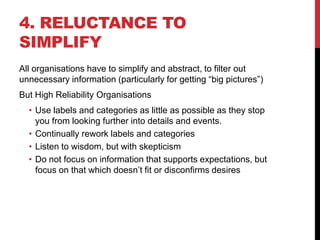

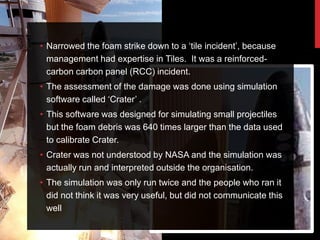

4. HROs are reluctant to oversimplify while low reliability orgs rely on simplicity.

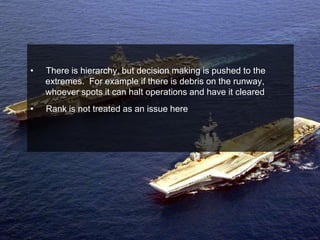

5. HROs decentralize decision making while low reliability orgs centralize decisions.

It provides examples of nuclear aircraft carriers demonstrating HRO