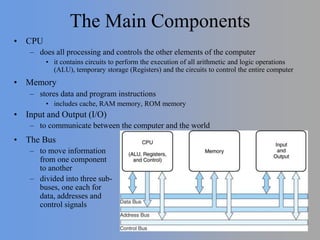

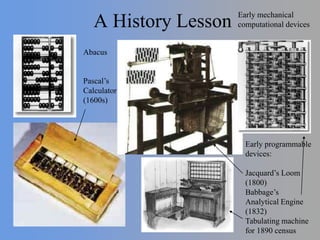

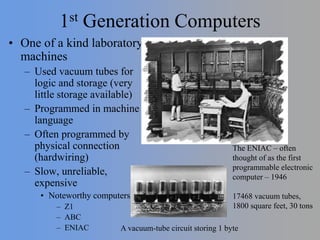

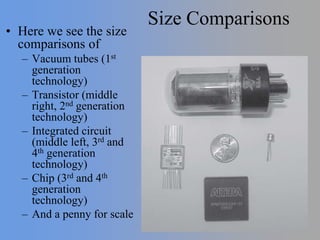

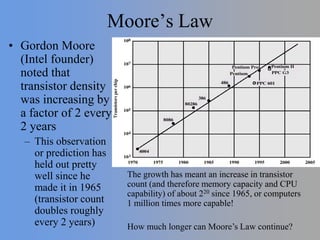

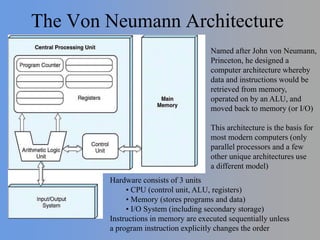

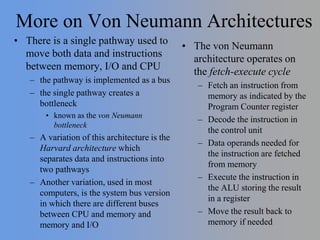

This chapter introduces the components of a computer system and the evolution of computer hardware. It discusses the typical structure of a computer, known as the von Neumann architecture, in which a central processing unit (CPU) is connected to memory and input/output devices via a bus, and in which programs and data share the same memory. The chapter then covers the history of computers, from early mechanical devices to modern systems built on integrated circuits. It describes how each generation became smaller, cheaper, more reliable, and more capable through new technologies such as transistors, integrated circuits, and microprocessors.
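The von Neumann structure summarized above can be illustrated with a minimal sketch. It is a toy model, not something taken from the chapter: the instruction names (`LOAD`, `ADD`, `STORE`, `OUT`, `HALT`), the single accumulator, and the `run` function are all assumptions made for illustration. A single Python list plays the role of memory and holds both the program and its data, and each list access stands in for a transfer over the bus.

```python
# Toy von Neumann machine: one memory holds both instructions and data.
# The instruction set below is hypothetical and exists only for this sketch.

def run(memory):
    """Execute the program stored in `memory`; return the values sent to output."""
    acc = 0        # accumulator register inside the CPU
    pc = 0         # program counter: address of the next instruction
    output = []    # stands in for an output device

    while True:
        op, arg = memory[pc]       # fetch the instruction from memory
        pc += 1                    # advance to the next instruction
        if op == "LOAD":           # copy a memory cell into the accumulator
            acc = memory[arg]
        elif op == "ADD":          # add a memory cell to the accumulator
            acc += memory[arg]
        elif op == "STORE":        # write the accumulator back to memory
            memory[arg] = acc
        elif op == "OUT":          # send the accumulator to the output device
            output.append(acc)
        elif op == "HALT":         # stop the machine
            return output
        else:
            raise ValueError(f"unknown opcode: {op}")


# Addresses 0-4 hold instructions, addresses 6-8 hold data (5 is unused).
program = [
    ("LOAD", 6),     # 0: acc = memory[6]
    ("ADD", 7),      # 1: acc = acc + memory[7]
    ("STORE", 8),    # 2: memory[8] = acc
    ("OUT", None),   # 3: output acc
    ("HALT", None),  # 4: stop
    None,            # 5: unused
    2,               # 6: first operand
    3,               # 7: second operand
    0,               # 8: result cell
]

result = run(program)
print(result)  # [5]
```

Because instructions and data live in the same list, the `STORE` instruction could just as easily overwrite an instruction as a data cell, which is the defining property of a stored-program machine.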