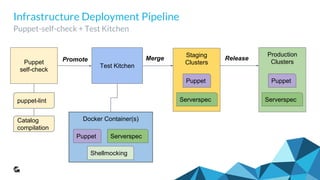

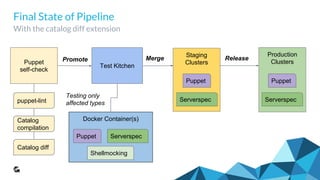

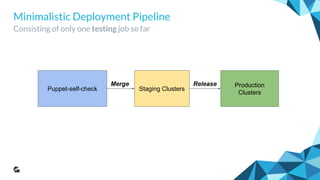

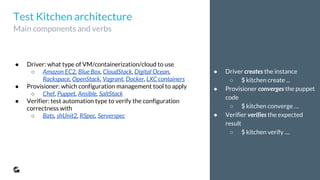

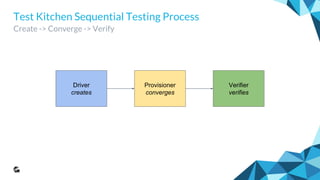

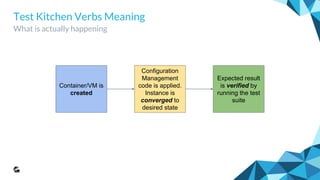

This document outlines the evolution and implementation of test-driven infrastructure at GoodData using Puppet, Docker, Test Kitchen, and Serverspec. It emphasizes the challenges of managing a complex distributed system, the importance of tracking changes through Puppet code, and the introduction of automated testing pipelines to improve quality and efficiency. Key components discussed include Puppet self-checks, Test Kitchen orchestration, Docker as the environment for test runs, and Serverspec for configuration verification.

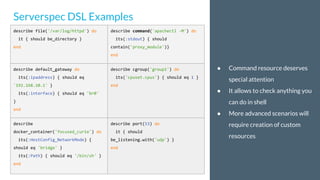

![Testing the Outcome: DSL plus a bit of basic Ruby](https://image.slidesharecdn.com/6de9bd52-0a82-43c8-b905-6576039ade8d-161006164220/85/ContainerCon-Test-Driven-Infrastructure-35-320.jpg)

- The test checks the behaviour of actual API endpoints after the instance converges
- Notice that the test is still isolated within one instance
- Serverspec/RSpec is an internal DSL, so you can easily use Ruby right in the test definition

```ruby
under_kerberos_auth = ['', 'status', 'status.json',
                       'status/payload', 'images/grey.png']

under_kerberos_auth.each do |path|
  # Plain HTTP is expected to redirect
  describe command("curl -k -X POST http://127.0.0.1/#{path}") do
    its(:stdout) { should match(/301/) }
  end

  # HTTPS is expected to demand authentication
  describe command("curl -k -X POST https://127.0.0.1/#{path}") do
    its(:stdout) { should match(/401/) }
    its(:stdout) { should match(/Authorization Required/) }
  end
end
```
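Because the test definition is ordinary Ruby, the list of paths and the expected responses can be kept as plain data and expanded into checks. The sketch below is one possible variation, not the code from the slides: `status_command` and `status_checks` are hypothetical helper names, and it assumes you would rather compare the exact HTTP status code (via curl's `-w '%{http_code}'` write-out) than match `301` or `401` anywhere in the response body.

```ruby
# Sketch only: status_command and status_checks are hypothetical helpers,
# not part of Serverspec or of the original slides.

# Build a curl command that prints nothing but the numeric HTTP status code.
# -s silences progress output, -o /dev/null discards the response body,
# -w '%{http_code}' writes the status code to stdout.
def status_command(scheme, path, host = '127.0.0.1')
  "curl -k -s -o /dev/null -w '%{http_code}' -X POST #{scheme}://#{host}/#{path}"
end

# Expand a list of paths into one check per scheme.
# The default expectations mirror the slide: HTTP redirects (301),
# HTTPS demands authentication (401).
def status_checks(paths, expected = { 'http' => '301', 'https' => '401' })
  paths.flat_map do |path|
    expected.map do |scheme, code|
      { command: status_command(scheme, path), expected: code }
    end
  end
end

under_kerberos_auth = ['', 'status', 'status.json',
                       'status/payload', 'images/grey.png']
checks = status_checks(under_kerberos_auth)

# Inside a Serverspec file (assuming serverspec is loaded and a backend is
# configured), the list could drive the describe blocks:
#
#   checks.each do |check|
#     describe command(check[:command]) do
#       its(:stdout) { should eq check[:expected] }
#     end
#   end
```

Keeping the expectations in a hash means a new protected path, or a changed status code, is a one-line data edit rather than another copied `describe` block.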