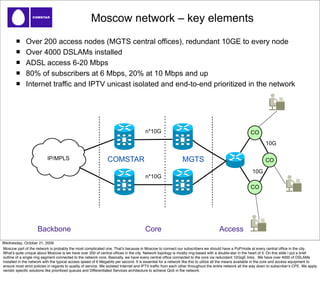

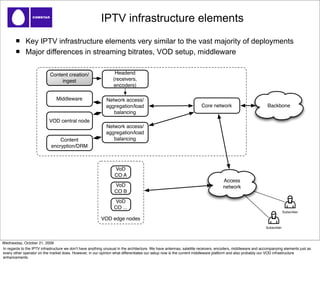

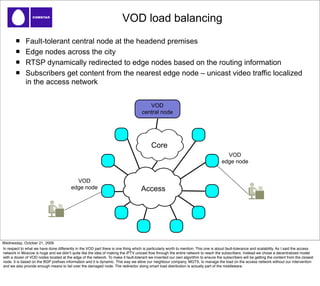

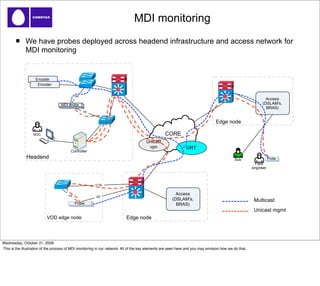

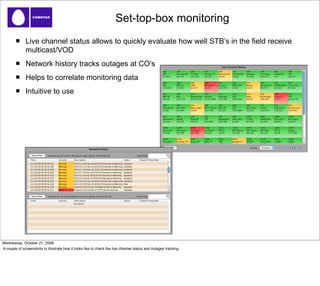

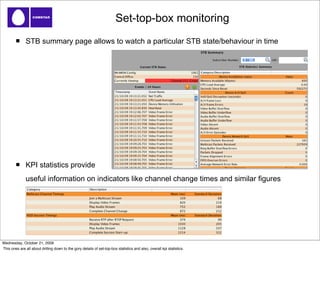

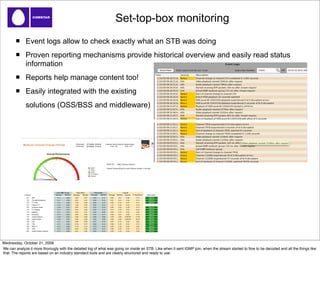

COMSTAR-UTS is a leading Russian telecommunications company providing broadband internet, IPTV, and other services. Andrey Alekseyev discussed COMSTAR-UTS's network and IPTV infrastructure, noting its large scale in Moscow, with over 200 access nodes and 4,000 DSLAMs. He highlighted the challenges of ensuring quality of service (QoS) and quality of experience (QoE) over an old copper infrastructure and a mix of managed and unmanaged devices. Alekseyev also described efforts to monitor service quality, including the use of industry-standard and proprietary fault and performance management tools across the complex IPTV and network systems.