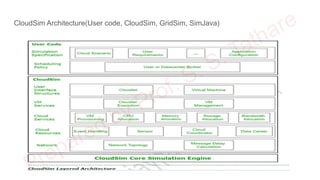

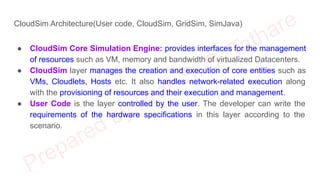

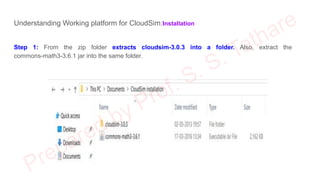

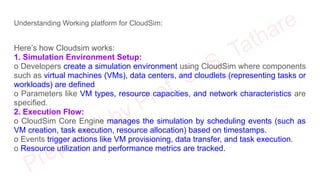

This document covers CloudSim, OpenStack, and AWS. It concentrates on two topics: the architecture and operation of CloudSim, a simulation tool for cloud computing, and the features OpenStack provides for managing cloud environments. For OpenStack, it details integration, setup, and basic operations, including instance creation, network management, and storage options. For CloudSim, it highlights the modular architecture and the advantages of simulation over an actual deployment, chiefly that cloud applications can be tested without capital investment.