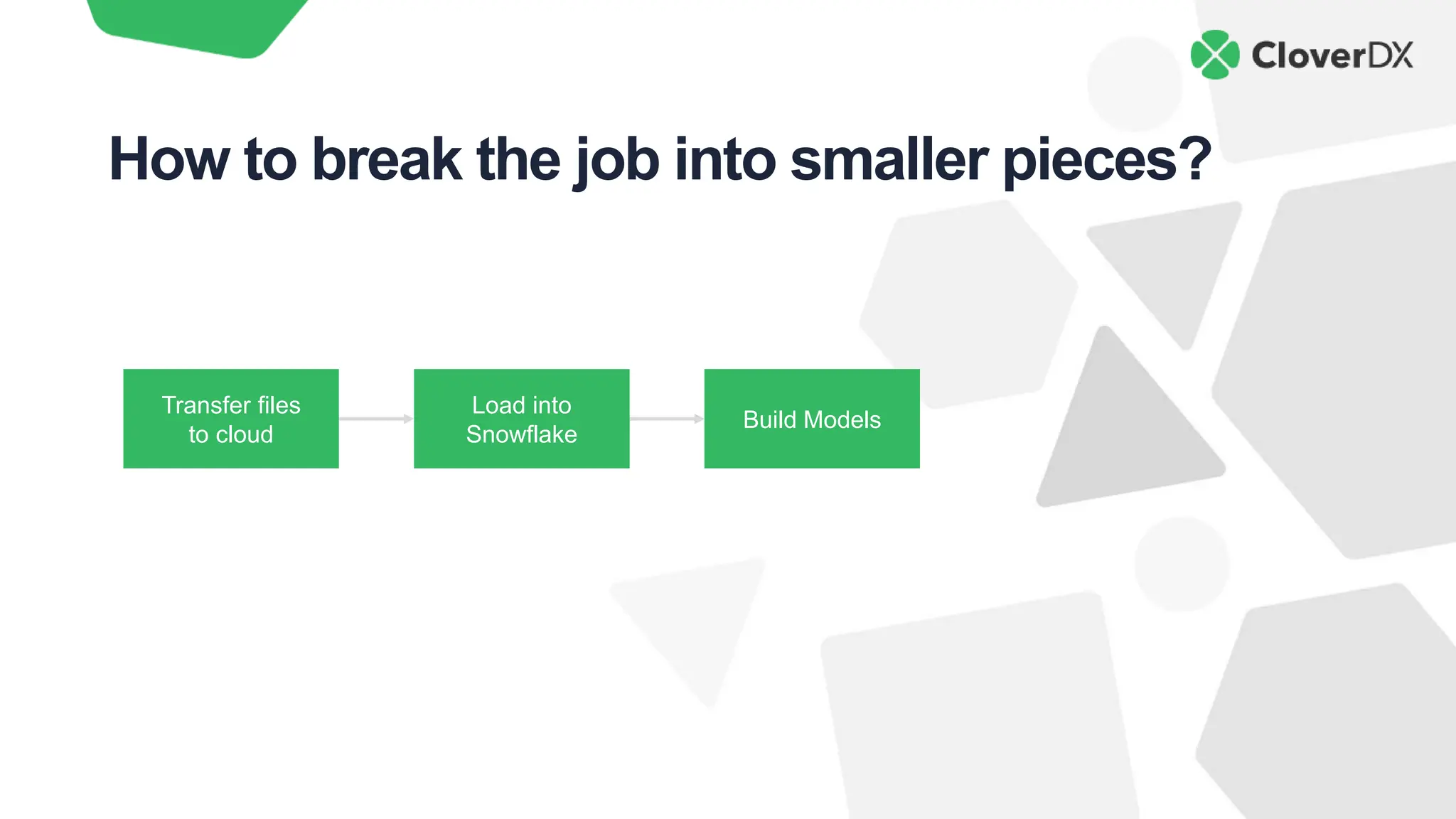

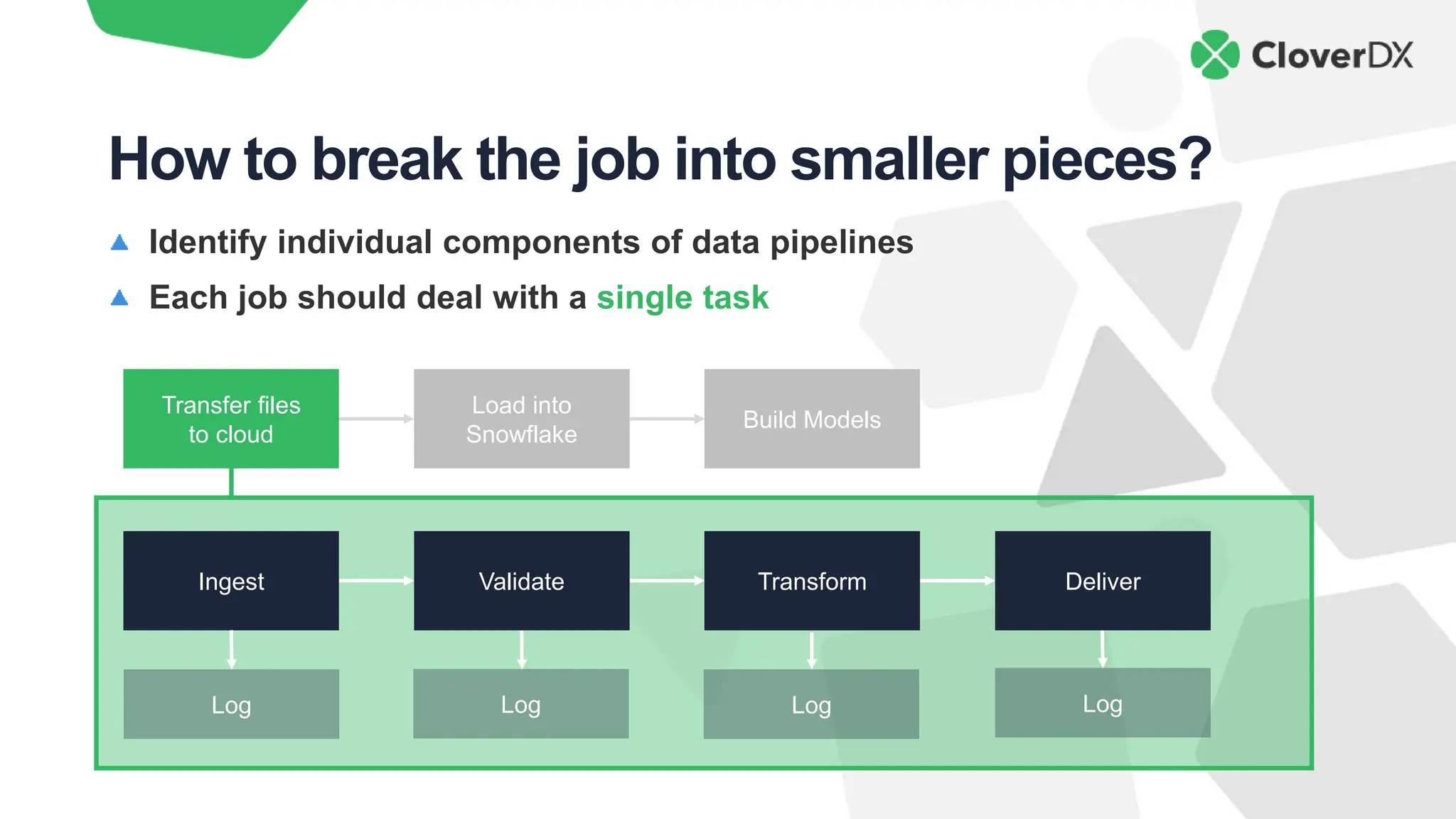

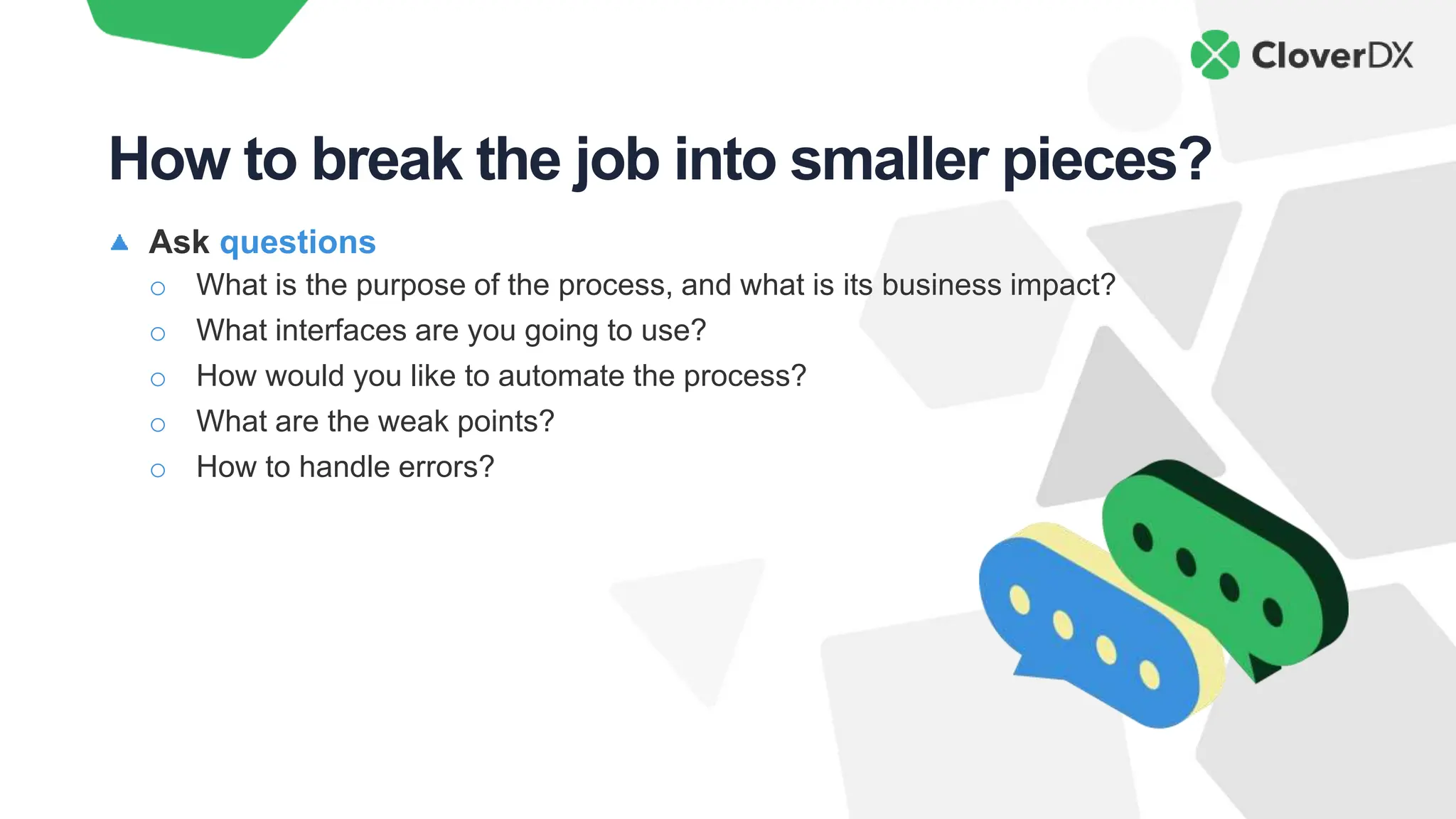

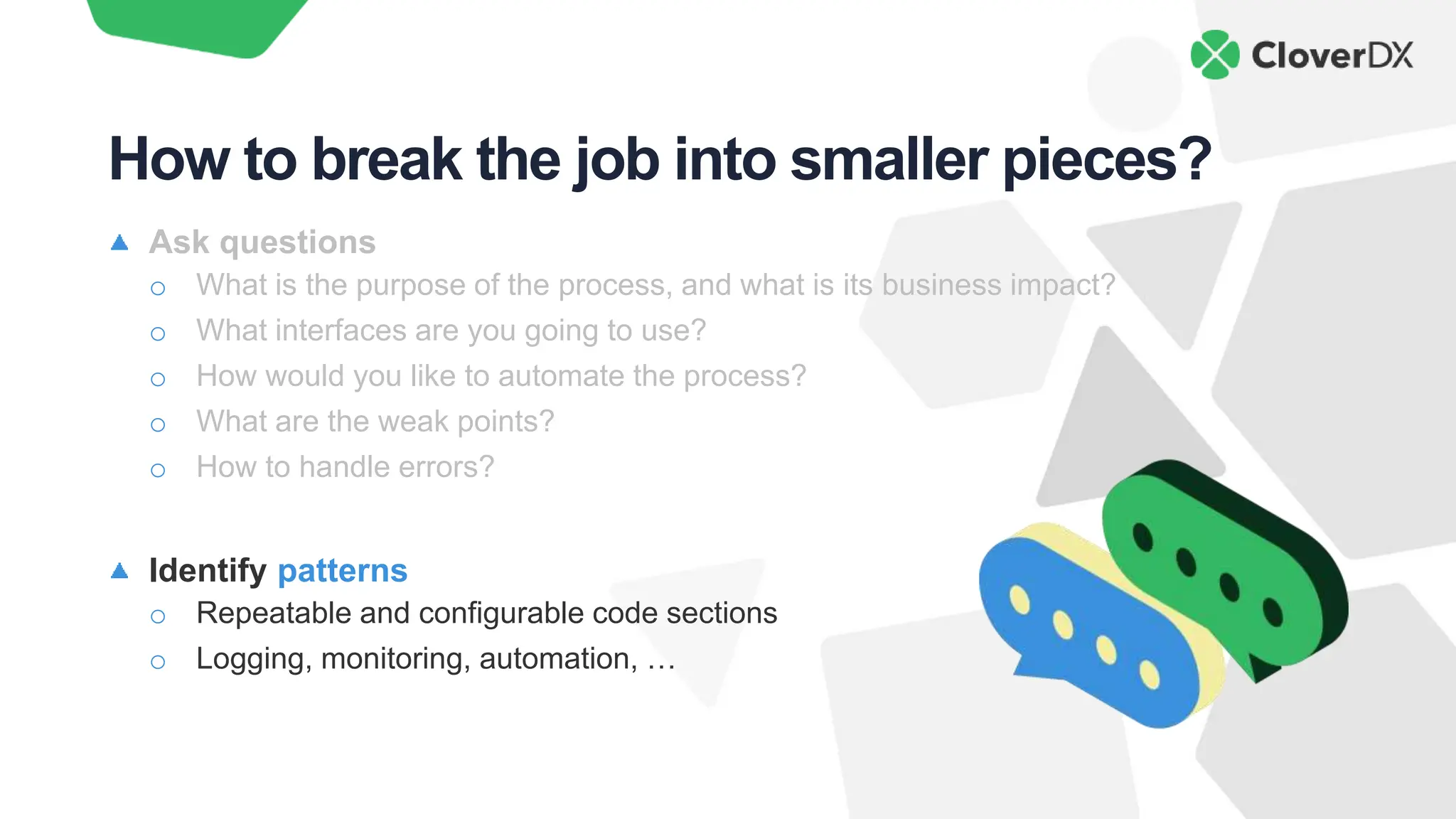

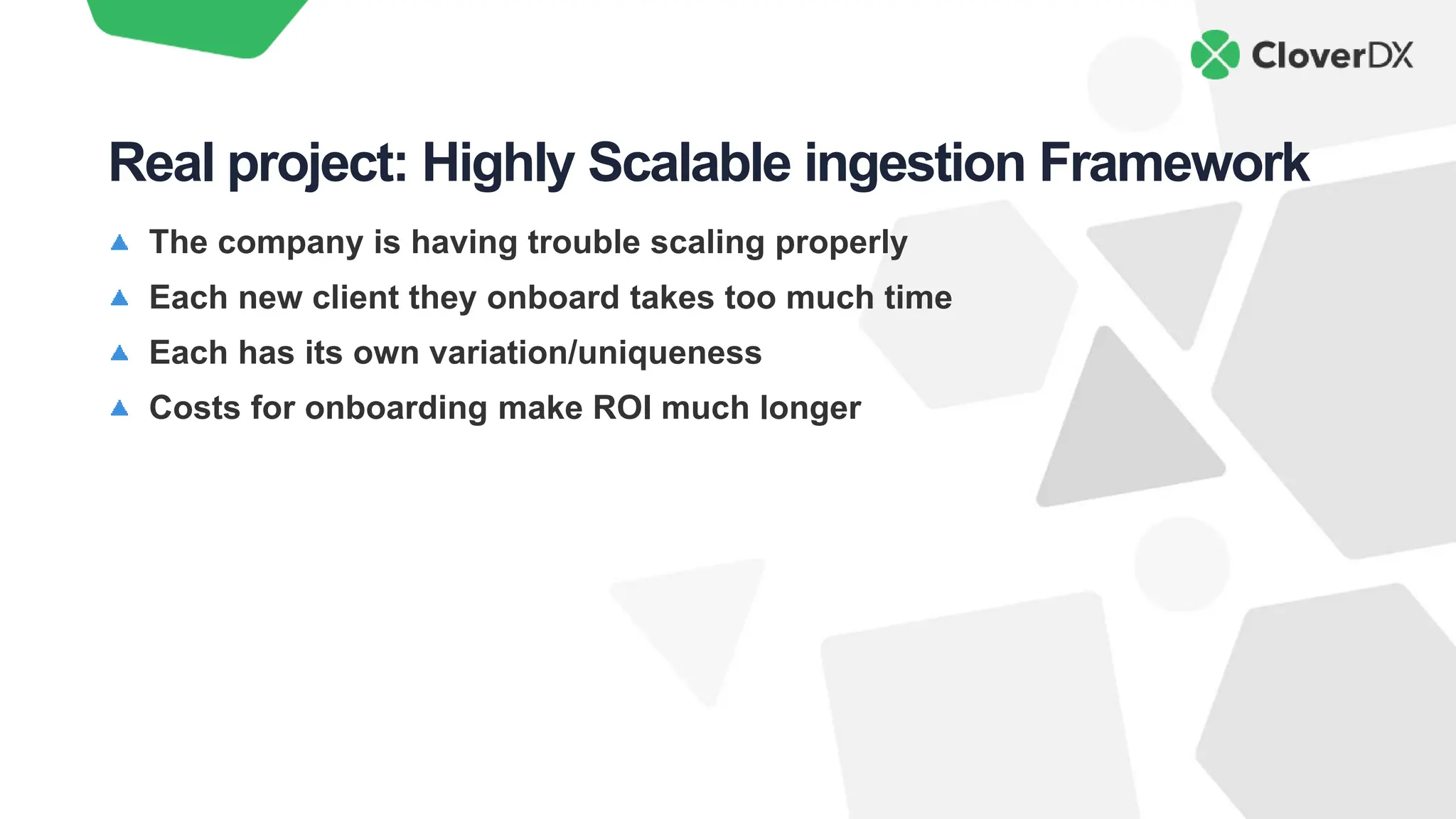

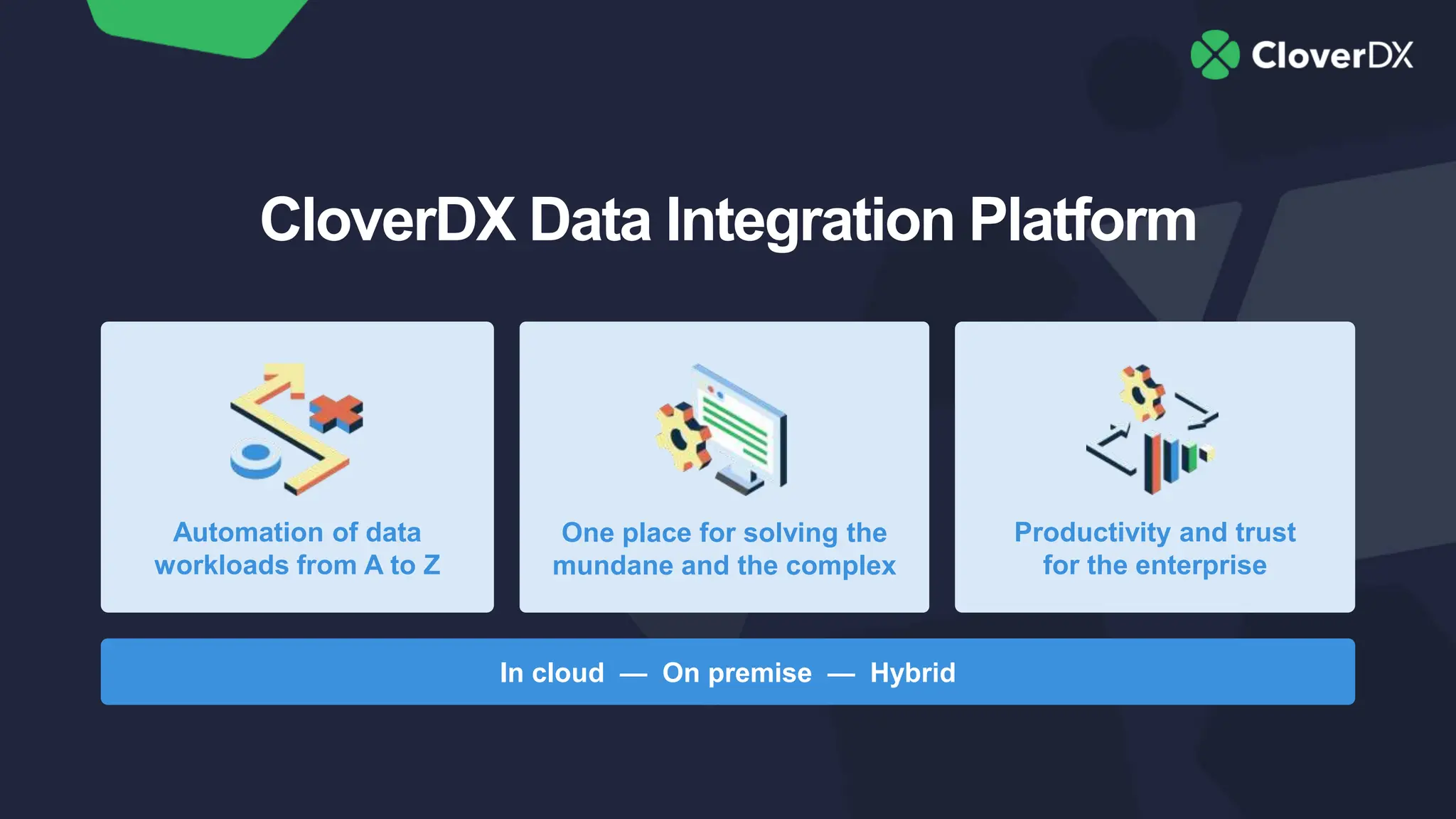

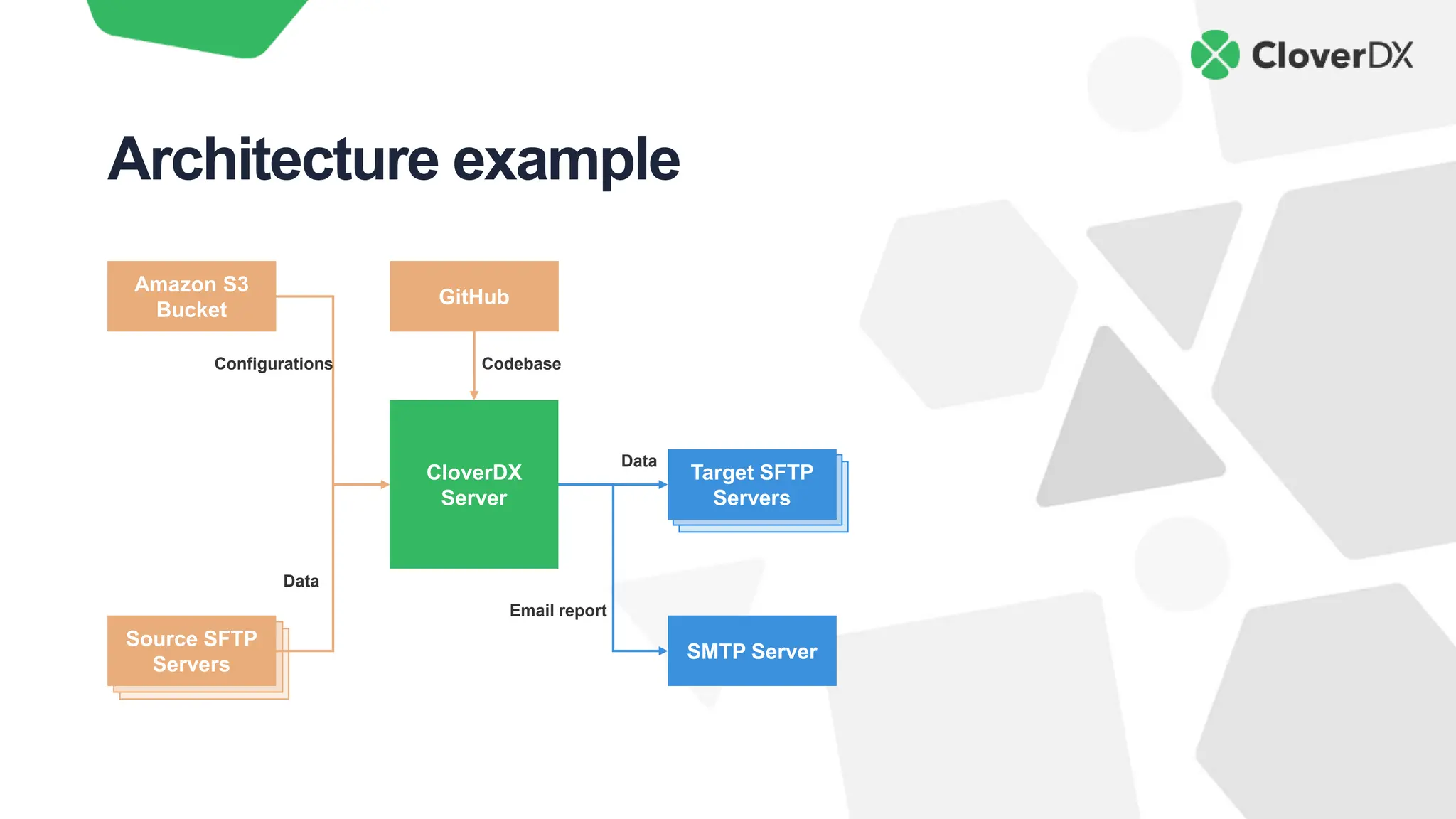

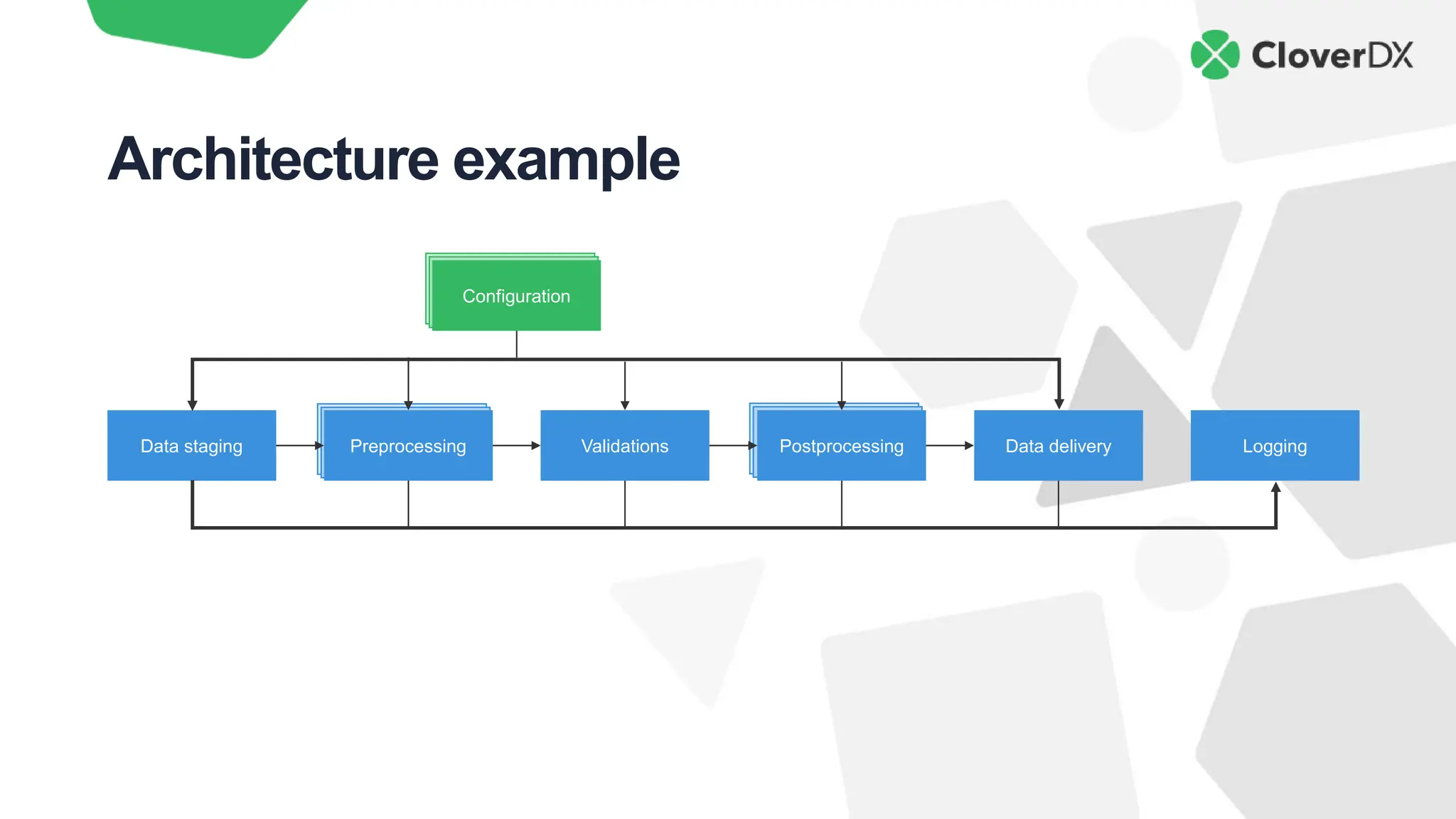

The document outlines the key characteristics of a modern data architecture that support innovation: automation, simplicity, data quality, scalability, and cost savings. It argues that these characteristics help organizations stay competitive and process data more efficiently. It also offers practical guidance on implementing these principles with the CloverDX data integration platform.