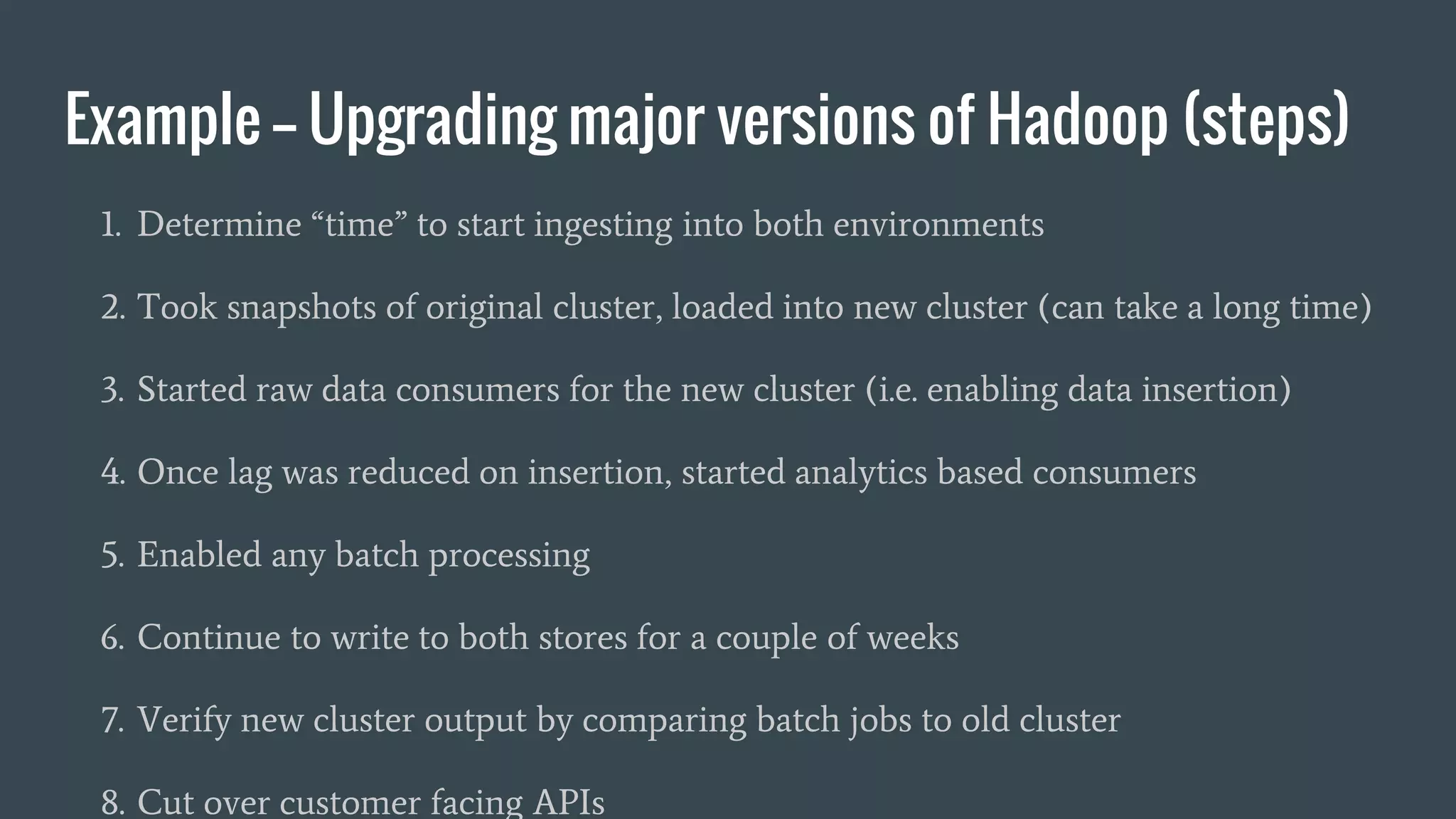

This document outlines approaches for upgrading or migrating the infrastructure and data stores of big data systems. These include upgrading in place, building a new cluster, starting the new cluster before cutting over, and moving data incrementally. It emphasizes careful planning, thorough testing, and a solid data flow architecture. It also provides an example migration from Cassandra to Hadoop that applies several of these approaches.