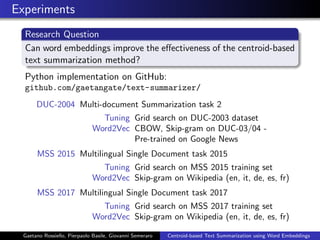

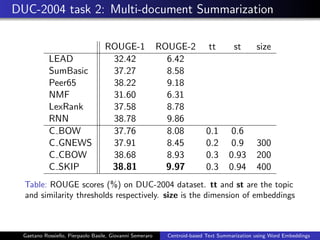

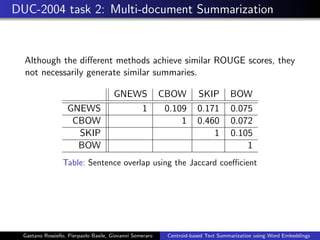

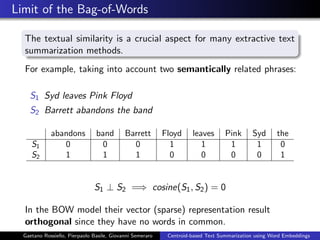

This presentation describes a centroid-based extractive text summarization method that uses word embeddings to improve the selection of relevant sentences. It outlines the limitations of the bag-of-words model and presents a scoring method based on sentence embeddings that better captures textual similarity. Experiments on multi-document and multilingual summarization tasks show that word embeddings are effective, and the talk closes with directions for future improvements and applications.

![Neural Language Model - Word2Vec

A word embedding is a continuous vector representation that captures syntactic and semantic information about a word.

vec(“Gilmour”) = [0.1, 0.3, 0.2, ...]

vec(“Barrett”) = [0.3, 0.1, 0.6, ...]

...

Figure: Continuous bag-of-words and Skip-gram [Mikolov et al., 2013]

vec(Barrett) − vec(singer) + vec(guitarist) ≈ vec(Gilmour)

Gaetano Rossiello, Pierpaolo Basile, Giovanni Semeraro. Centroid-based Text Summarization using Word Embeddings](https://image.slidesharecdn.com/multiling17slide-180221143146/85/Centroid-based-Text-Summarization-through-Compositionality-of-Word-Embeddings-6-320.jpg)
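
The vector arithmetic on the slide above can be tried out with a small sketch. The three-dimensional vectors below are invented purely for illustration (real Word2Vec vectors are learned from a corpus and have hundreds of dimensions), and the `analogy` helper and the extra word "Waters" are hypothetical names, not part of the original slides.

```python
import numpy as np

def cosine(u, v):
    """Cosine similarity between two 1-D vectors."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

# Toy embeddings, made up for this example only.
# Informal reading of the axes: [person, singer-ness, guitarist-ness].
TOY = {
    "Barrett":   np.array([1.0, 0.9, 0.1]),
    "Gilmour":   np.array([1.0, 0.1, 0.9]),
    "Waters":    np.array([1.0, 0.5, 0.5]),
    "singer":    np.array([0.0, 1.0, 0.0]),
    "guitarist": np.array([0.0, 0.0, 1.0]),
}

def analogy(a, b, c, table):
    """Return the word closest to vec(a) - vec(b) + vec(c).

    The three input words are excluded from the candidates, as is
    usual when evaluating word analogies.
    """
    query = table[a] - table[b] + table[c]
    candidates = [w for w in table if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(query, table[w]))

print(analogy("Barrett", "singer", "guitarist", TOY))  # prints "Gilmour"
```

With these toy values the query vector is `[1.0, -0.1, 1.1]`, which points almost exactly in the direction of the "Gilmour" vector.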

![Centroid-based Text Summarization: Overview

Centroid-based Extractive Text Summarization [Radev et al., 2004]

The centroid is a pseudo-document that condenses the meaningful information of a document: the words whose tf-idf weight exceeds a threshold $t$ ($\text{tfidf}(w) > t$).

The main idea is to project the vector representations of both the centroid and each sentence of the document into the same vector space.

The sentences closest to the centroid are selected.

](https://image.slidesharecdn.com/multiling17slide-180221143146/85/Centroid-based-Text-Summarization-through-Compositionality-of-Word-Embeddings-7-320.jpg)
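
As a rough illustration of how the centroid words might be picked, the sketch below keeps the words of one document whose tf-idf weight exceeds a threshold `t`. The weighting used here (raw term count times `log(N / df)` over a small background corpus) is one common tf-idf variant chosen as an assumption; the slides do not say which variant is used. The function name `centroid_words` and the toy corpus are hypothetical.

```python
import math
from collections import Counter

def centroid_words(doc, corpus, t):
    """Return {word: weight} for the words of `doc` with tf-idf > t.

    doc    : list of tokens of the document to summarize
    corpus : list of tokenized documents (must include `doc`),
             used only to compute document frequencies
    t      : tf-idf threshold
    """
    n_docs = len(corpus)
    # Document frequency: in how many documents each word occurs.
    df = Counter(w for d in corpus for w in set(d))
    # Term frequency inside the target document.
    tf = Counter(doc)
    weights = {w: tf[w] * math.log(n_docs / df[w]) for w in tf}
    return {w: x for w, x in weights.items() if x > t}

# Toy corpus, invented for this example.
doc0 = ["pink", "floyd", "band", "band", "album"]
doc1 = ["band", "tour"]
doc2 = ["album", "band", "review"]

selected = centroid_words(doc0, [doc0, doc1, doc2], t=0.5)
print(sorted(selected))  # prints ['floyd', 'pink']
```

Here "band" occurs in every document, so its idf is zero and it is dropped even though it is the most frequent word in `doc0`; "album" occurs in two of three documents and falls below the threshold.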

![Sentence Scoring using Word Embeddings

Word Embeddings Lookup Table

Given a corpus of documents $[D_1, D_2, \dots]$ and its vocabulary $V$ with size $N = |V|$, we define a matrix $E \in \mathbb{R}^{N \times k}$, the so-called lookup table, where the $i$-th row is the word embedding of size $k$, $k \ll N$, of the $i$-th word in $V$.

Note: the model can be trained on the collection of documents to be summarized or on a larger corpus.

Given a document D to be summarized:

Preprocessing: split into sentences, remove stopwords, no stemming

Centroid Embedding: $C = \sum_{w \in D,\ \text{tfidf}(w) > t} E[\text{idx}(w)]$

Sentence Embedding: $S_j = \sum_{w \in S_j} E[\text{idx}(w)]$

Sentence Score: $\text{sim}(C, S_j) = \dfrac{C^T \cdot S_j}{\|C\| \cdot \|S_j\|}$

](https://image.slidesharecdn.com/multiling17slide-180221143146/85/Centroid-based-Text-Summarization-through-Compositionality-of-Word-Embeddings-8-320.jpg)
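
The scoring steps on the slide above can be sketched with NumPy as follows. The lookup table `E`, the vocabulary index `idx`, and the two-dimensional toy vectors are invented for illustration; the helper names `embed`, `cosine`, and `score_sentences` are hypothetical. Skipping out-of-vocabulary words is an assumption, since the slides do not say how they are handled.

```python
import numpy as np

def embed(words, E, idx):
    """Sum the lookup-table rows of the given words (OOV words are skipped)."""
    rows = [idx[w] for w in words if w in idx]
    if not rows:
        return np.zeros(E.shape[1])
    return E[rows].sum(axis=0)

def cosine(u, v):
    """Cosine similarity; defined as 0.0 when either vector is all zeros."""
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    return float(u @ v / denom) if denom > 0 else 0.0

def score_sentences(sentences, centroid_words, E, idx):
    """Score each tokenized sentence by its similarity to the centroid."""
    C = embed(centroid_words, E, idx)
    return [cosine(C, embed(s, E, idx)) for s in sentences]

# Toy lookup table: one row per vocabulary word, k = 2.
vocab = ["guitar", "solo", "album", "rain", "weather"]
idx = {w: i for i, w in enumerate(vocab)}
E = np.array([
    [1.0, 0.1],  # guitar
    [0.9, 0.2],  # solo
    [0.8, 0.3],  # album
    [0.1, 1.0],  # rain
    [0.2, 0.9],  # weather
])

sentences = [["guitar", "solo"], ["rain", "weather"]]
scores = score_sentences(sentences, ["guitar", "album"], E, idx)
print(scores)
```

In this toy setup the first sentence shares no word with the centroid words beyond "guitar", yet "solo" lies close to "guitar" and "album" in the embedding space, so the sentence still scores much higher than the second one.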

![Sentence Selection

Input: S, Scores, st, limit

Output: Summary

S ← sortDesc(S, Scores)
k ← 1
for i ← 1 to m do
    length ← length(Summary)
    if length > limit then return Summary
    SV ← sumVectors(S[i])
    include ← True
    for j ← 1 to k − 1 do
        SV2 ← sumVectors(Summary[j])
        sim ← similarity(SV, SV2)
        if sim > st then
            include ← False
    if include then
        Summary[k] ← S[i]
        k ← k + 1
return Summary

](https://image.slidesharecdn.com/multiling17slide-180221143146/85/Centroid-based-Text-Summarization-through-Compositionality-of-Word-Embeddings-9-320.jpg)
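
A possible Python rendering of the selection procedure above is sketched below. It assumes that each sentence vector has already been computed (the `sumVectors` step), that `similarity` is cosine similarity, and that summary length is counted in whitespace-separated words; none of these details are fixed by the slide. As on the slide, the length check happens before a sentence is considered, so the last added sentence may push the summary past `limit`.

```python
import numpy as np

def cosine(u, v):
    """Cosine similarity between two vectors given as array-likes."""
    u, v = np.asarray(u, dtype=float), np.asarray(v, dtype=float)
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    return float(u @ v / denom) if denom > 0 else 0.0

def select_sentences(sentences, vectors, scores, st, limit):
    """Greedy selection of high-scoring, non-redundant sentences.

    sentences : list of sentence strings
    vectors   : one embedding per sentence (sum of its word vectors)
    scores    : centroid similarity of each sentence
    st        : redundancy threshold on sentence-to-sentence similarity
    limit     : summary length limit, in words (assumption)
    """
    # Visit sentences from the highest to the lowest score.
    order = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    summary, chosen = [], []
    for i in order:
        if sum(len(s.split()) for s in summary) > limit:
            break
        # Skip a sentence that is too similar to one already selected.
        if all(cosine(vectors[i], v) <= st for v in chosen):
            summary.append(sentences[i])
            chosen.append(vectors[i])
    return summary

# Toy input, invented for this example.
sents = ["a b c", "a b d", "x y z"]
vecs = [[1.0, 0.0], [0.99, 0.05], [0.0, 1.0]]
result = select_sentences(sents, vecs, scores=[0.9, 0.8, 0.5], st=0.95, limit=100)
print(result)  # prints ['a b c', 'x y z']
```

The second sentence is nearly parallel to the first (cosine above 0.95), so it is discarded as redundant even though its score is higher than that of the third.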

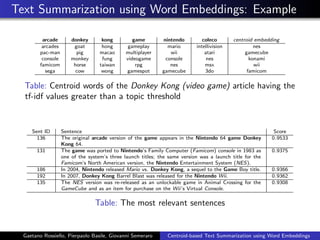

![Text Summarization using Word Embeddings: Example

Figure: Embeddings visualization using t-SNE [van der Maaten et al., 2008]

](https://image.slidesharecdn.com/multiling17slide-180221143146/85/Centroid-based-Text-Summarization-through-Compositionality-of-Word-Embeddings-10-320.jpg)