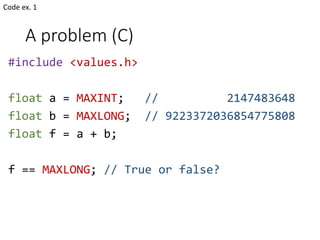

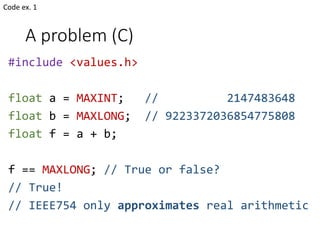

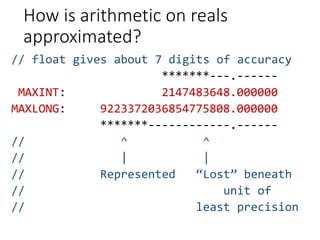

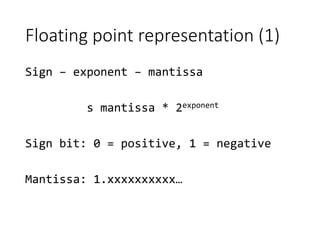

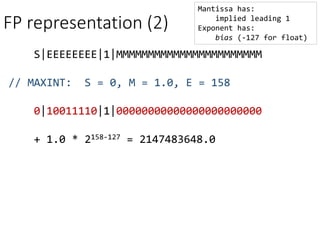

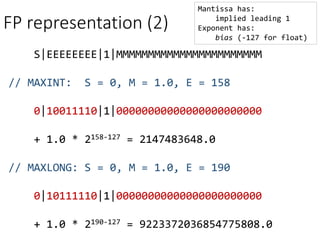

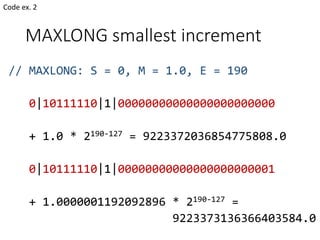

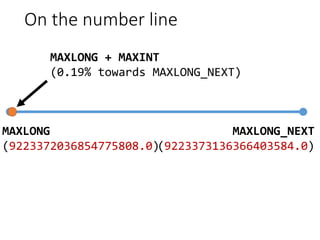

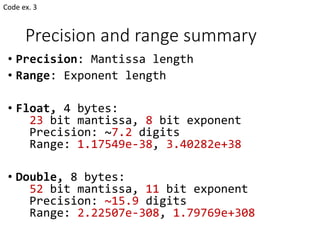

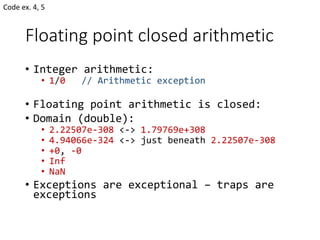

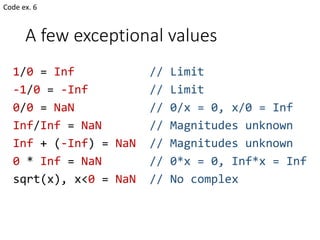

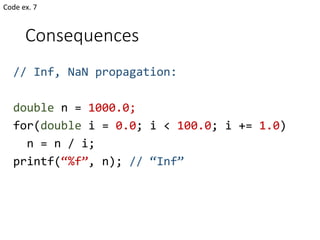

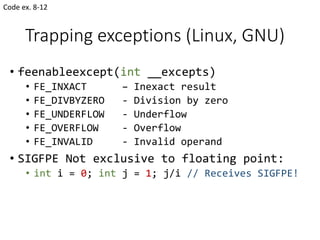

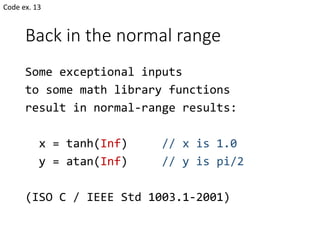

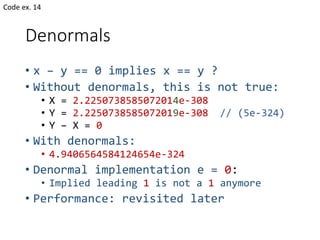

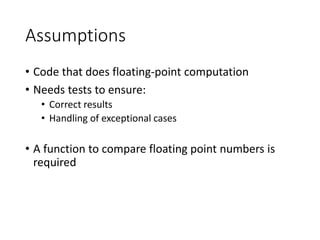

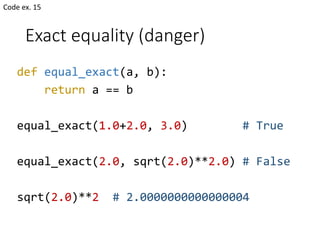

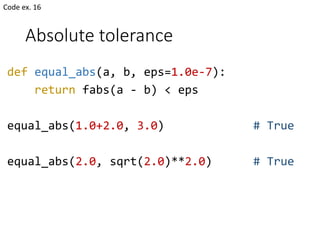

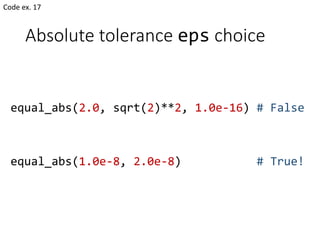

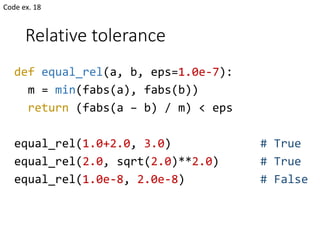

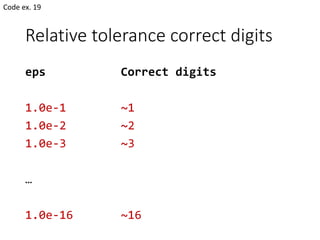

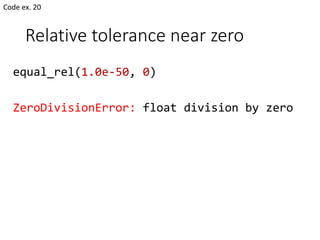

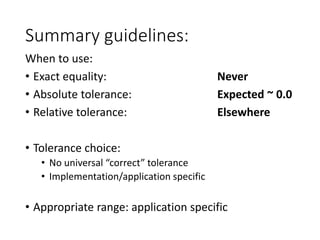

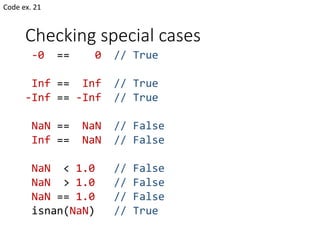

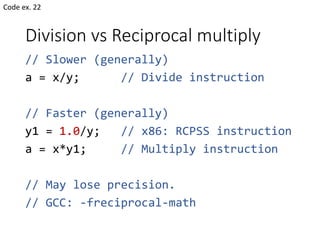

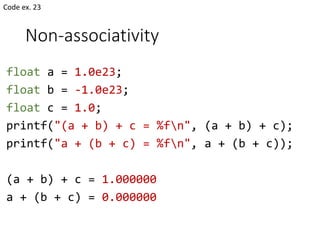

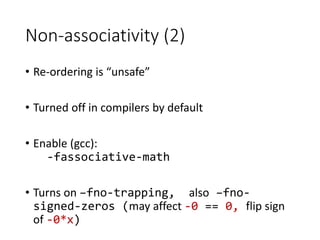

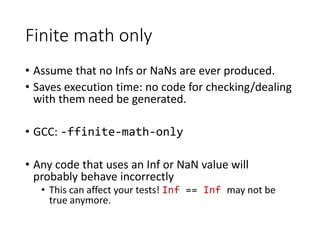

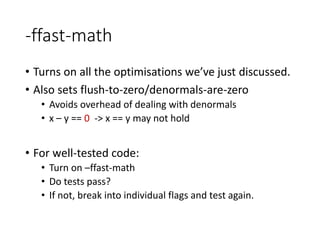

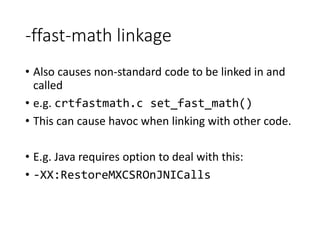

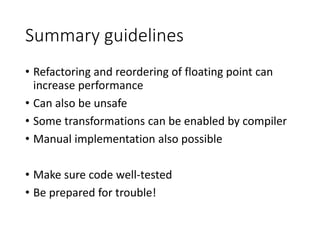

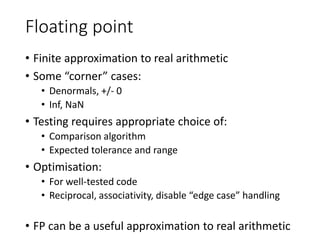

This document discusses floating-point arithmetic: how numbers are represented, what the IEEE 754 standard specifies, and what that means for software development. It covers precision, range, and exceptional values (such as infinities and NaN), with examples and best practices for handling floating-point computations. The goal is to give developers a deeper understanding of floating-point operations and of how to optimize them in their programs.
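
As a minimal sketch of the precision, range, and exceptional-value concepts named above (it is not taken from the slides themselves), the following Python snippet assumes that the interpreter's `float` is an IEEE 754 binary64 double, which holds on mainstream platforms. All variable names are illustrative.

```python
import math
import sys

# Precision: 0.1, 0.2 and 0.3 have no exact binary representation,
# so the rounded sum differs from the rounded literal 0.3.
total = 0.1 + 0.2
exactly_equal = (total == 0.3)            # False under binary64
close_enough = math.isclose(total, 0.3)   # tolerance-based comparison

# Machine epsilon is the gap between 1.0 and the next representable double.
# Adding half of it to 1.0 rounds back to 1.0 (round-half-to-even).
absorbed = (1.0 + sys.float_info.epsilon / 2 == 1.0)

# Range: multiplying past the largest finite double overflows to infinity.
overflowed = sys.float_info.max * 2

# Exceptional values: NaN compares unequal to everything, including itself,
# so math.isnan is the reliable way to detect it.
nan = float("nan")
nan_equals_itself = (nan == nan)
nan_detected = math.isnan(nan)

print(exactly_equal, close_enough, absorbed)
print(overflowed, nan_equals_itself, nan_detected)
```

A practical consequence is to compare computed floating-point results with a tolerance (here `math.isclose`) rather than with `==`, and to test for NaN with `math.isnan` rather than with an equality check.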