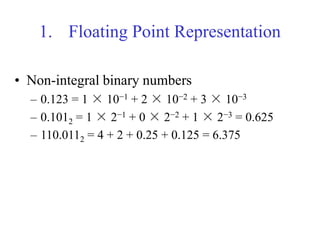

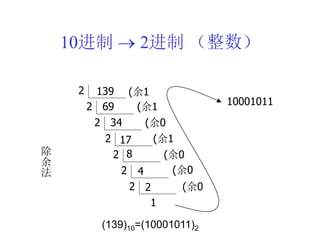

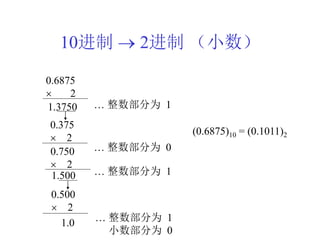

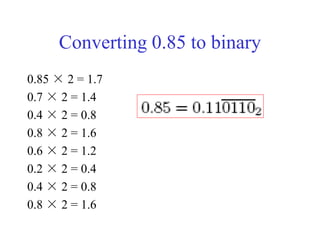

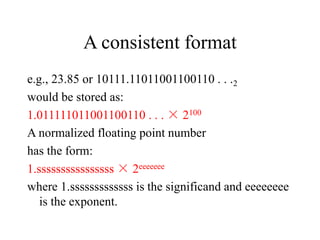

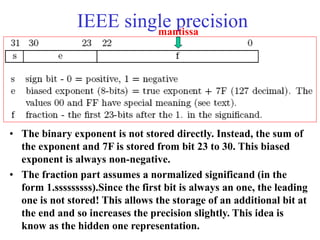

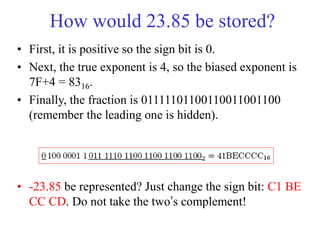

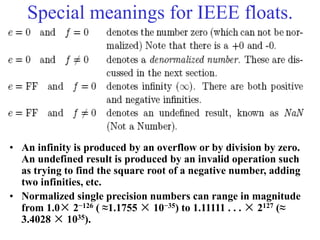

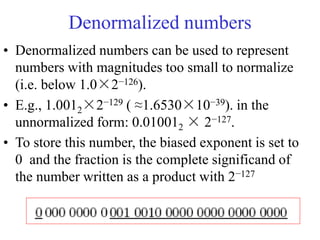

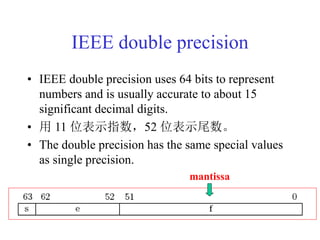

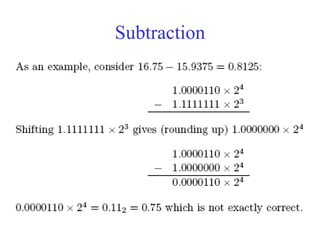

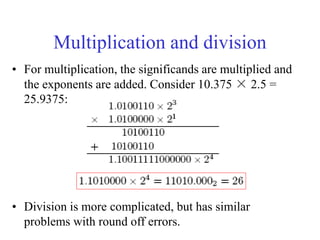

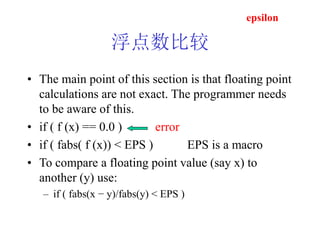

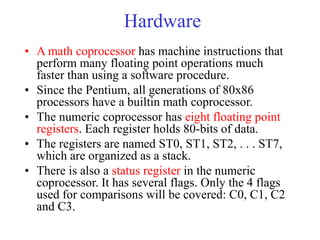

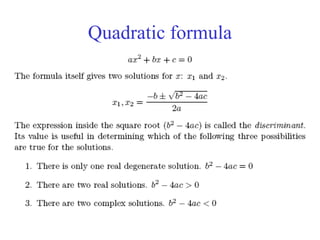

This document covers floating-point numbers: their representation, their arithmetic, and numeric coprocessors. It describes how a floating-point number is represented in binary using three fields: the sign, the exponent, and the significand. Arithmetic on floating-point numbers is approximate because only a limited number of significand bits are stored, so most results must be rounded. Numeric coprocessors perform floating-point operations in hardware, which is faster than doing them in software. Examples demonstrate using a coprocessor to implement the quadratic formula, read arrays from files, and find prime numbers.
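
As a rough illustration of the sign, exponent, and significand fields and of limited precision, here is a minimal Python sketch. It assumes the IEEE 754 double-precision layout (1 sign bit, 11 exponent bits with a bias of 1023, 52 stored fraction bits), which is what Python's `float` uses on common platforms. The helper names `decompose` and `reconstruct` are hypothetical and chosen only for this example; the document's own examples target a coprocessor, not Python.

```python
import struct

def decompose(x: float):
    """Split a double into (sign, biased exponent, fraction bits).

    Assumes the IEEE 754 binary64 layout: 1 + 11 + 52 bits.
    """
    # Reinterpret the 8 bytes of the double as an unsigned 64-bit integer.
    bits = struct.unpack(">Q", struct.pack(">d", x))[0]
    sign = bits >> 63                  # top bit
    biased_exp = (bits >> 52) & 0x7FF  # next 11 bits
    fraction = bits & ((1 << 52) - 1)  # low 52 bits
    return sign, biased_exp, fraction

def reconstruct(sign: int, biased_exp: int, fraction: int) -> float:
    """Rebuild the value from its fields (normalized numbers only)."""
    # Normalized numbers have an implicit leading 1 in the significand.
    significand = 1 + fraction / 2**52
    return (-1) ** sign * significand * 2.0 ** (biased_exp - 1023)

# 1.0 is +1.0 * 2^0, so the biased exponent is exactly the bias.
print(decompose(1.0))    # (0, 1023, 0)

# 0.1 has no finite binary expansion, so its stored fraction is rounded
# and the sum below does not compare equal to 0.3.
print(0.1 + 0.2 == 0.3)  # False
```

The round trip `reconstruct(*decompose(x))` returns `x` for any normalized double, which shows that the three fields fully determine the value. The failed comparison is why floating-point results are usually compared against a small tolerance instead of tested for exact equality.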