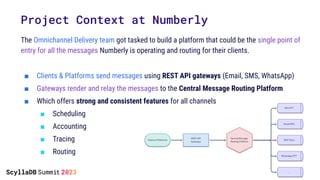

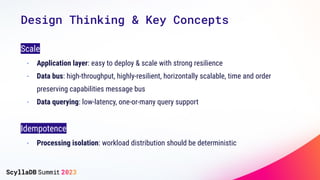

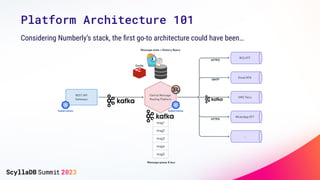

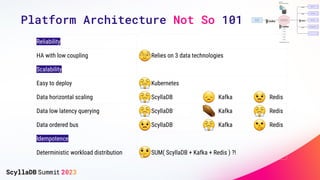

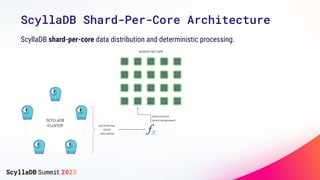

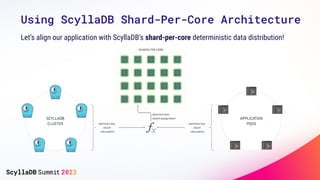

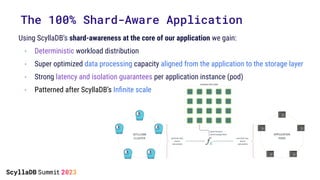

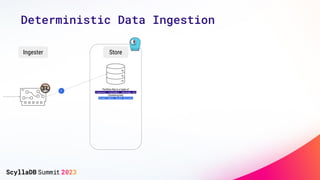

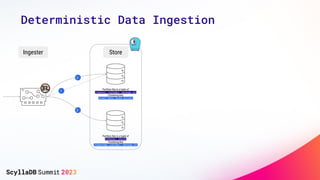

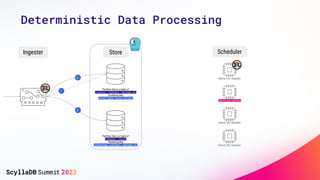

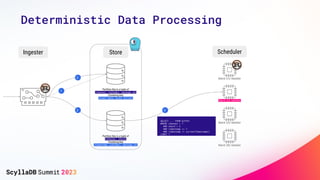

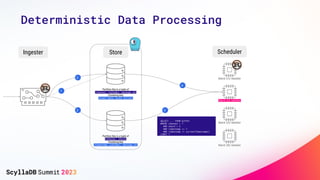

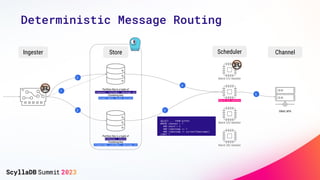

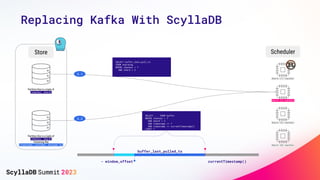

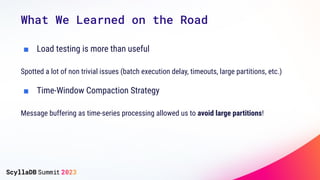

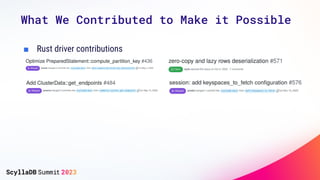

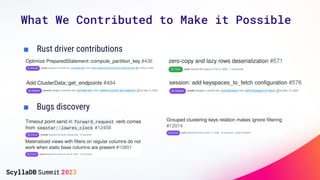

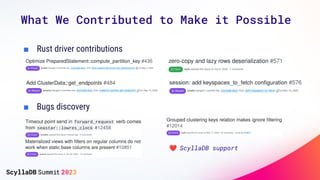

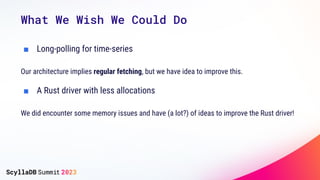

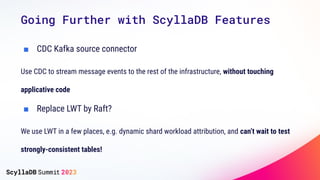

This document describes how Numberly built a 100% shard-aware application with ScyllaDB and Rust, driven by requirements for reliability, scalability, and efficient data handling. It explains how the architecture uses ScyllaDB's shard-per-core data distribution to optimize performance, and outlines design principles centered on observability and idempotence. The authors also share the challenges they faced, the contributions they made, and the improvements they would still like to see in the Rust driver and in the system as a whole.
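To make the shard-per-core idea concrete, here is a minimal sketch of how a client could map a partition token to the shard (CPU core) that owns it. It is not taken from the slides: the function name `shard_of` and its parameters are illustrative, and the arithmetic follows the token-to-shard mapping that ScyllaDB drivers are generally understood to use. Computing the token itself (a Murmur3 hash of the partition key) is out of scope here and is assumed to have been done already.

```rust
/// Illustrative helper (name and signature are assumptions, not from the source).
/// Maps a signed 64-bit partition token to a shard index in `0..nr_shards`.
///
/// `msb_ignore` is the number of most-significant token bits to discard before
/// the mapping; it is assumed to be smaller than 64.
pub fn shard_of(token: i64, nr_shards: u16, msb_ignore: u8) -> u32 {
    // Shift the signed token range [-2^63, 2^63) onto the unsigned range [0, 2^64).
    let mut biased = (token as u64).wrapping_add(1u64 << 63);

    // Drop the configured number of most-significant bits.
    biased <<= u32::from(msb_ignore);

    // Scale the 64-bit value onto [0, nr_shards) using a 128-bit multiplication,
    // keeping only the high 64 bits of the product.
    ((u128::from(biased) * u128::from(nr_shards)) >> 64) as u32
}
```

With four shards and no ignored bits, the lowest token `i64::MIN` lands on shard 0 and the highest token `i64::MAX` lands on shard 3. A shard-aware client can use such a mapping to send each query over a connection bound to the core that owns the data, which avoids a cross-core hop on the server.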