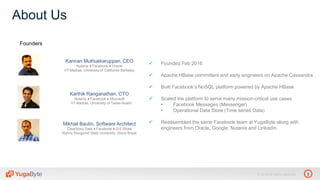

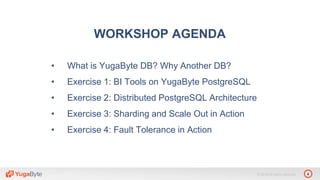

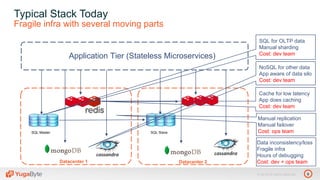

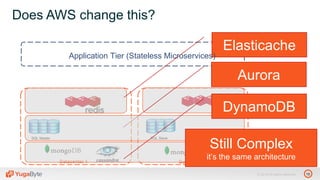

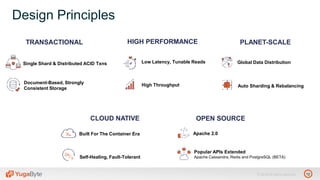

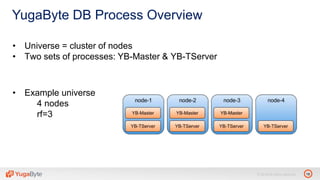

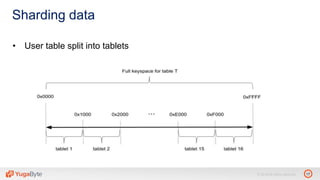

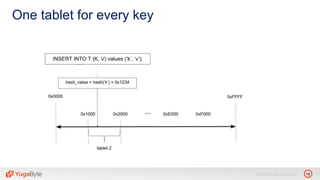

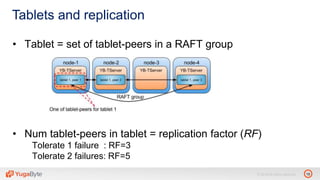

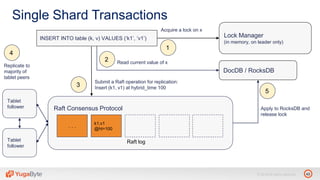

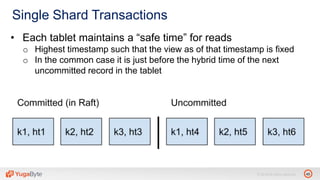

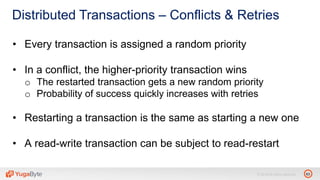

The document discusses YugabyteDB, a transactional database designed for high-performance cloud services and created by engineers with experience at companies like Facebook and Oracle. It covers the database's architecture, which features automatic sharding, fault tolerance, and a focus on cloud-native deployment, as well as its transaction processing capabilities, including single and distributed transactions. It also outlines a workshop agenda with practical exercises that demonstrate YugabyteDB's capabilities.