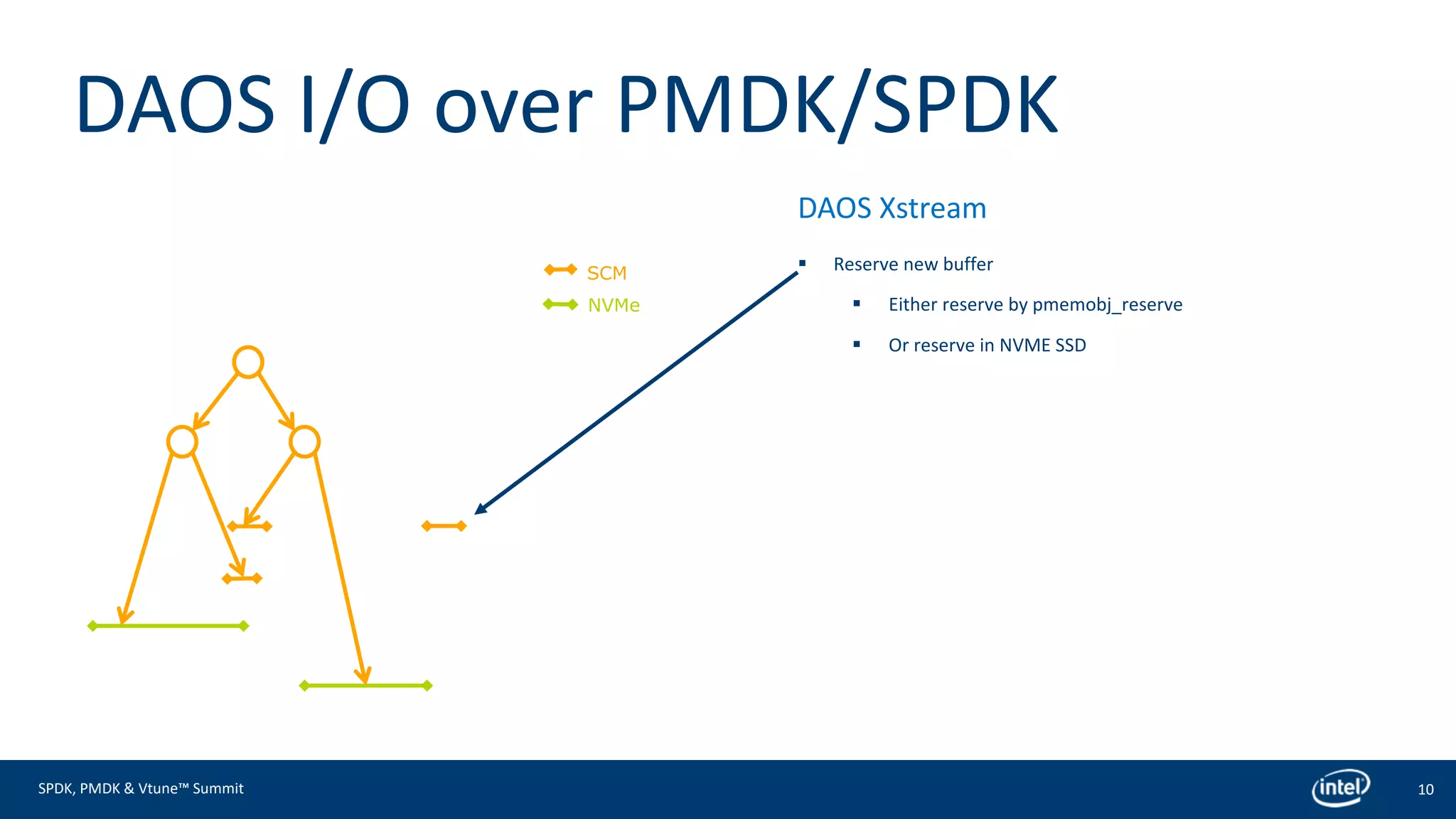

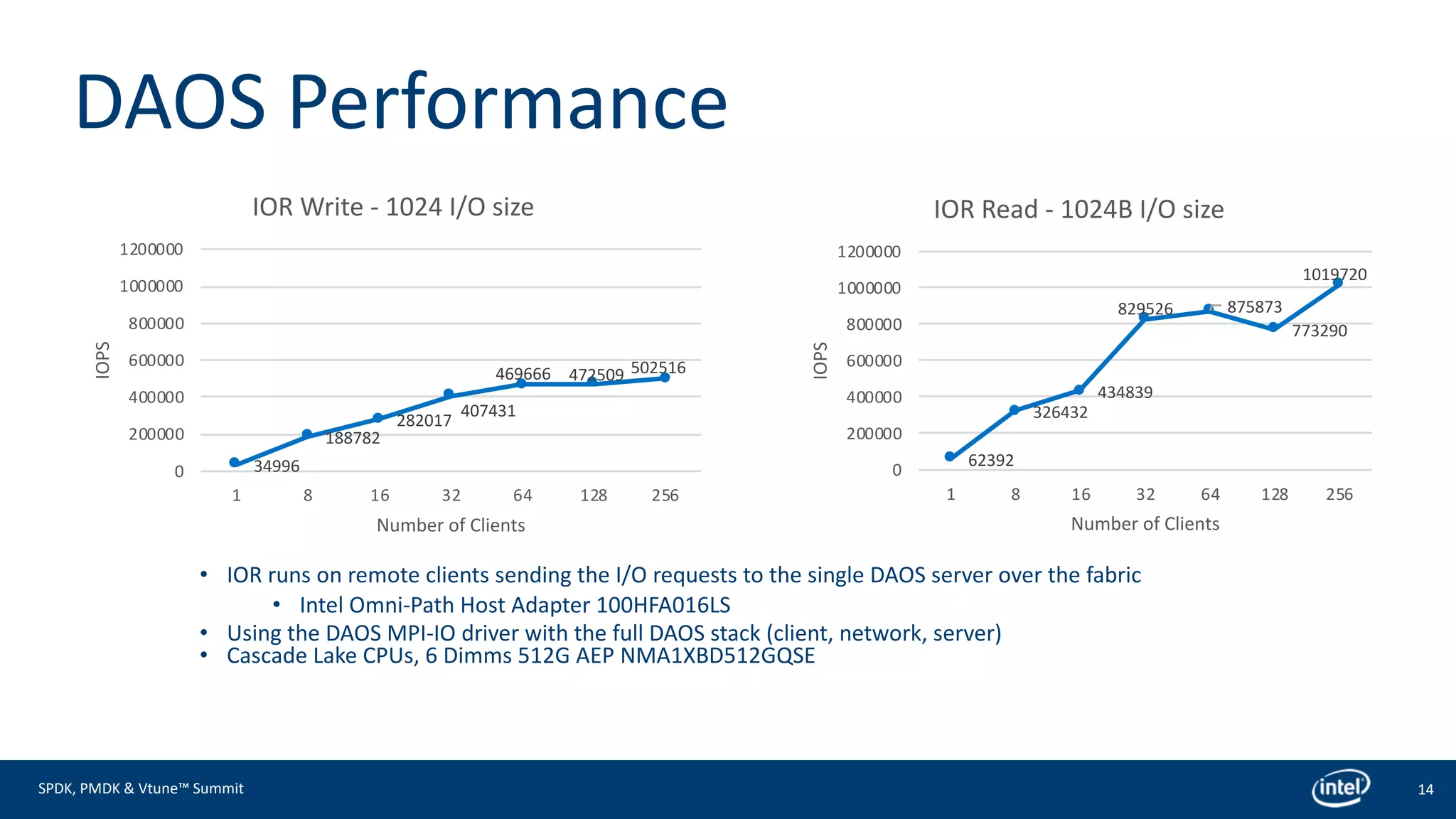

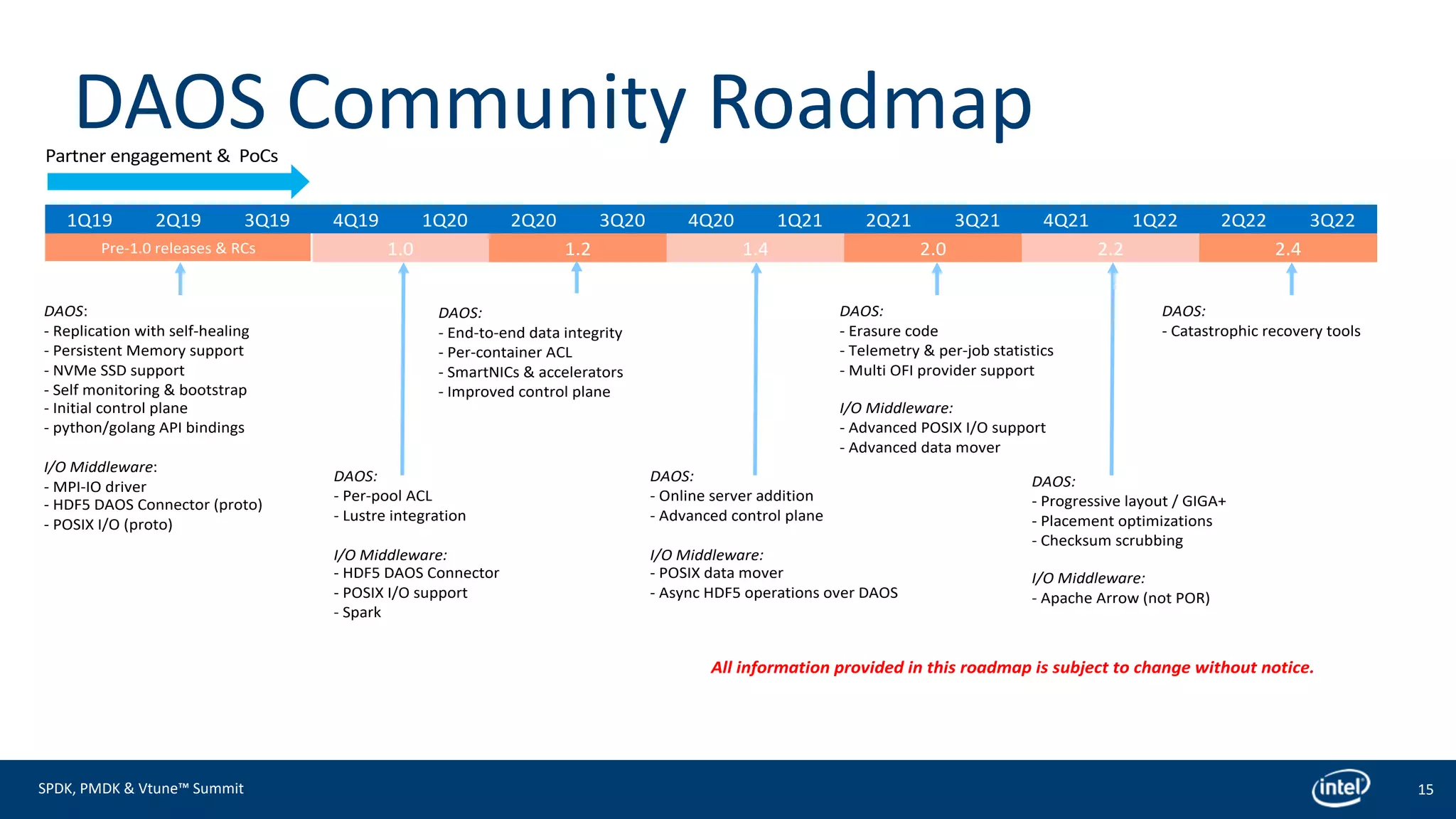

The document presents an agenda for a summit discussing DAOS (Distributed Asynchronous Object Storage), covering its architecture, performance, and integration with PMDK (Persistent Memory Development Kit) and SPDK (Storage Performance Development Kit). It highlights DAOS features such as a scalable storage model suited to diverse data types, a rich API, and data management capabilities including security and data reduction. It also outlines DAOS performance metrics and the community development roadmap, with timelines and planned features for future releases.