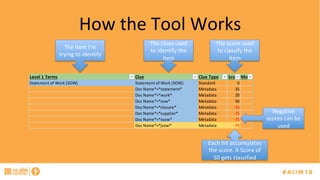

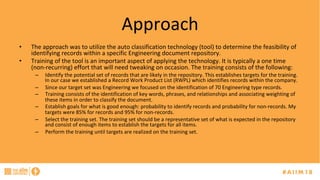

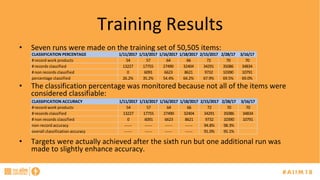

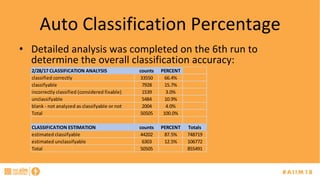

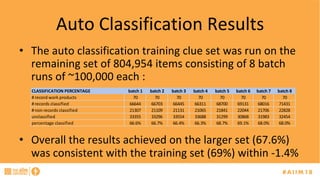

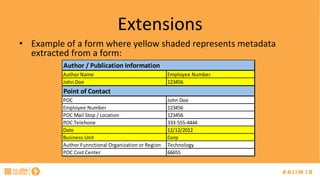

The document discusses the challenges of records management, particularly identifying and categorizing documents in repositories with semantic technology such as auto-classification. It details an approach that uses machine learning to improve document classification accuracy, covering the iterative training process and the outcomes of a specific case study. It also offers recommendations for improving document management practices and describes potential applications of the technology in extracting metadata and screening for sensitive information.