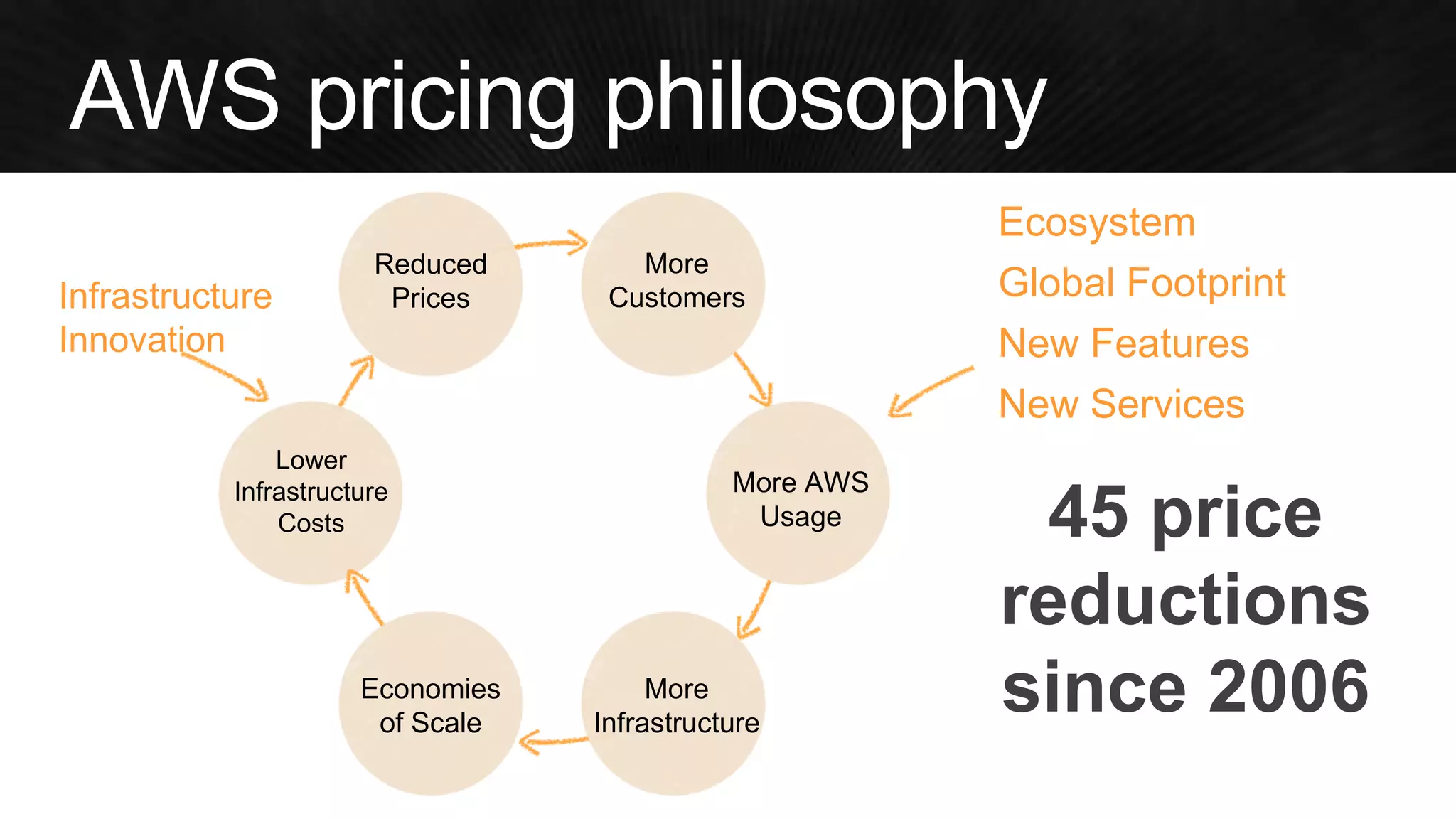

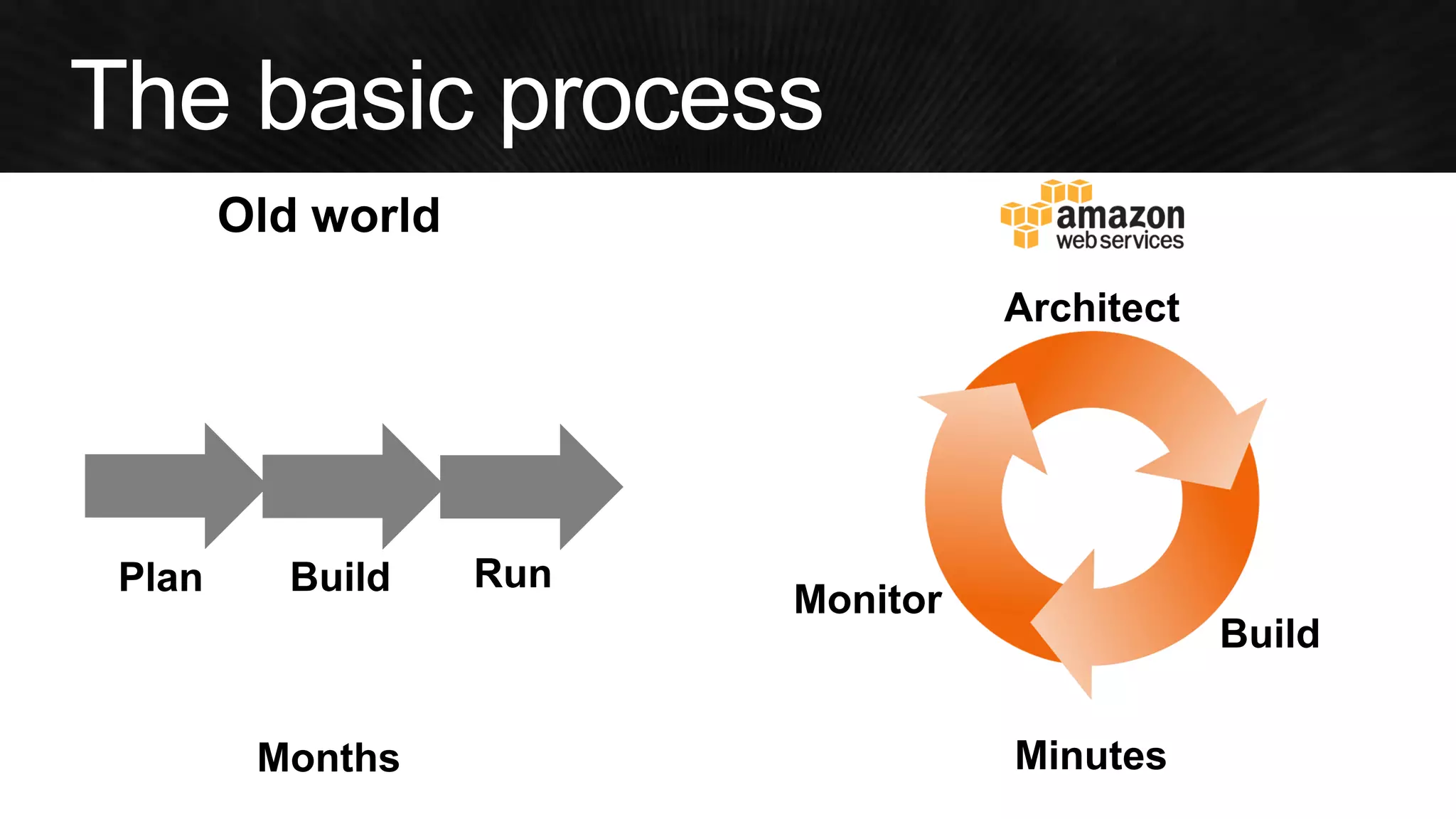

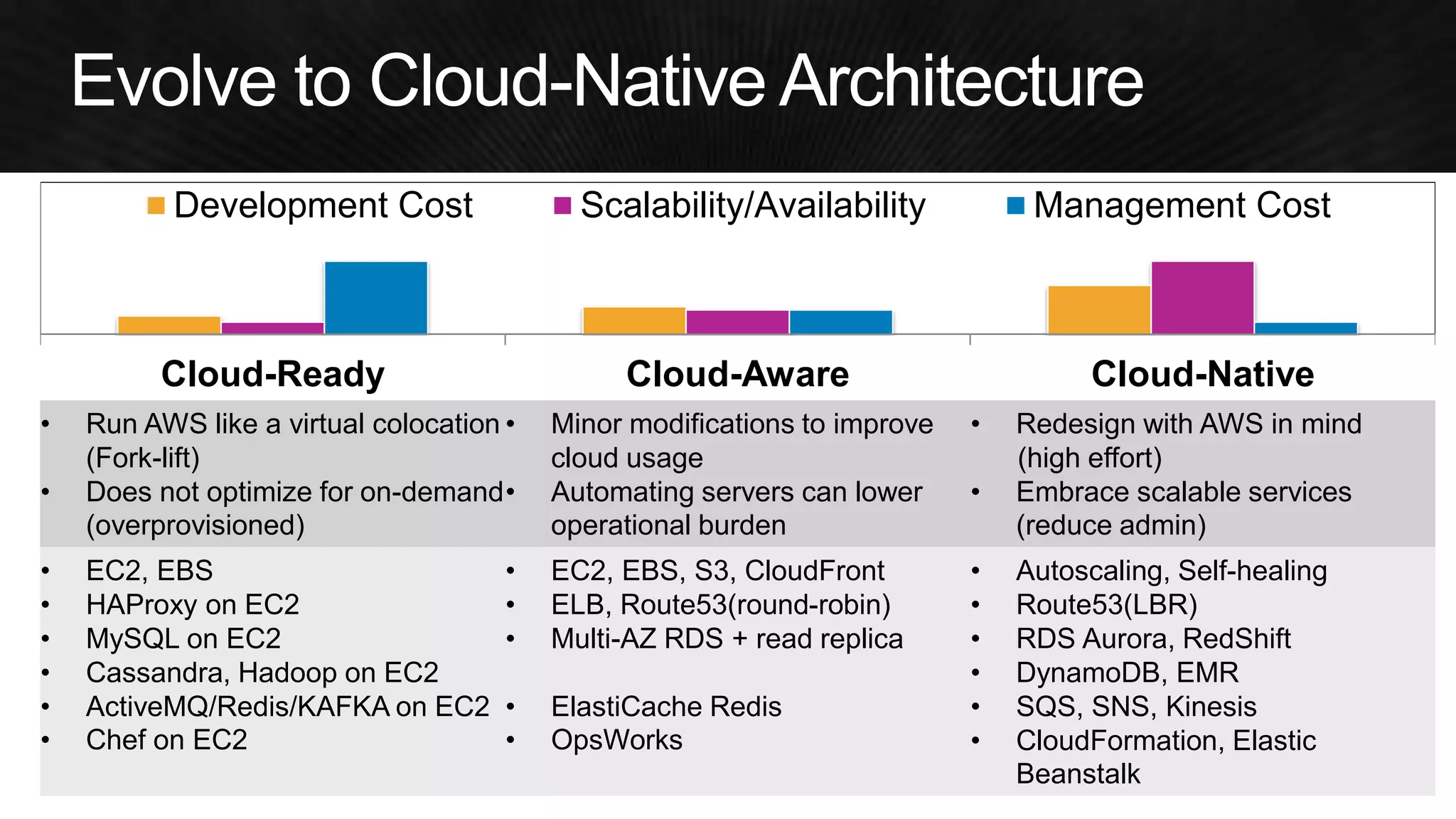

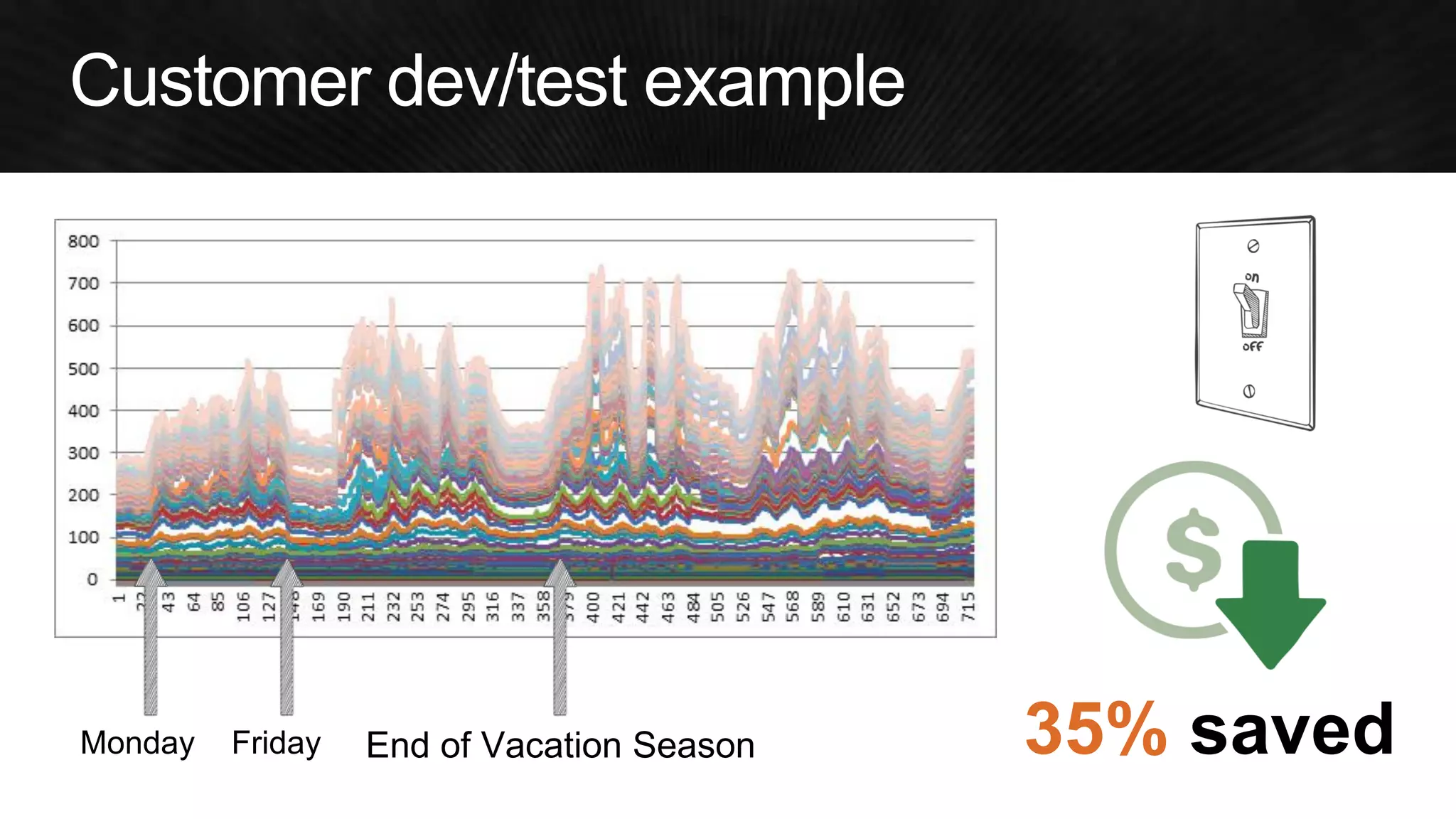

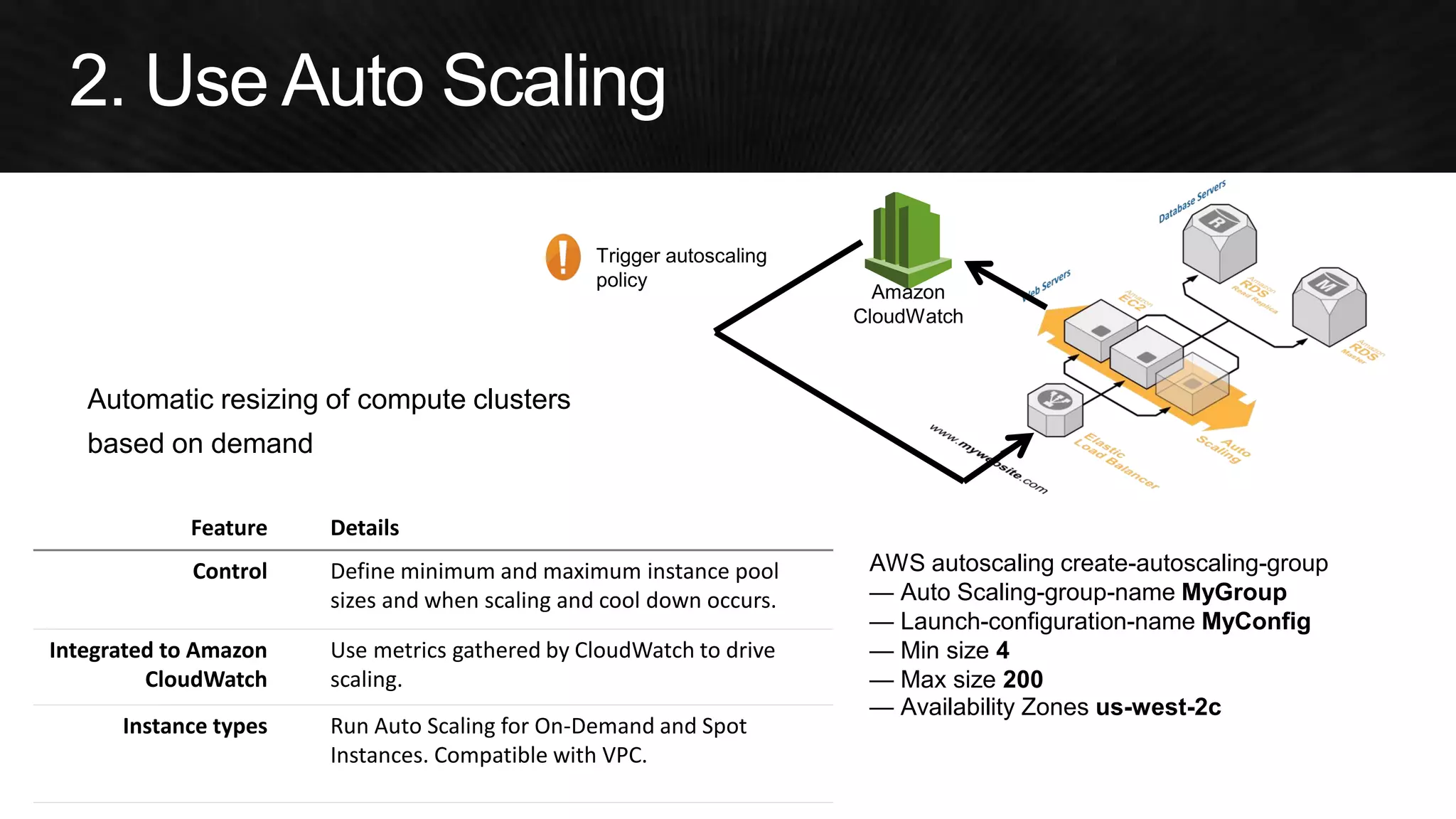

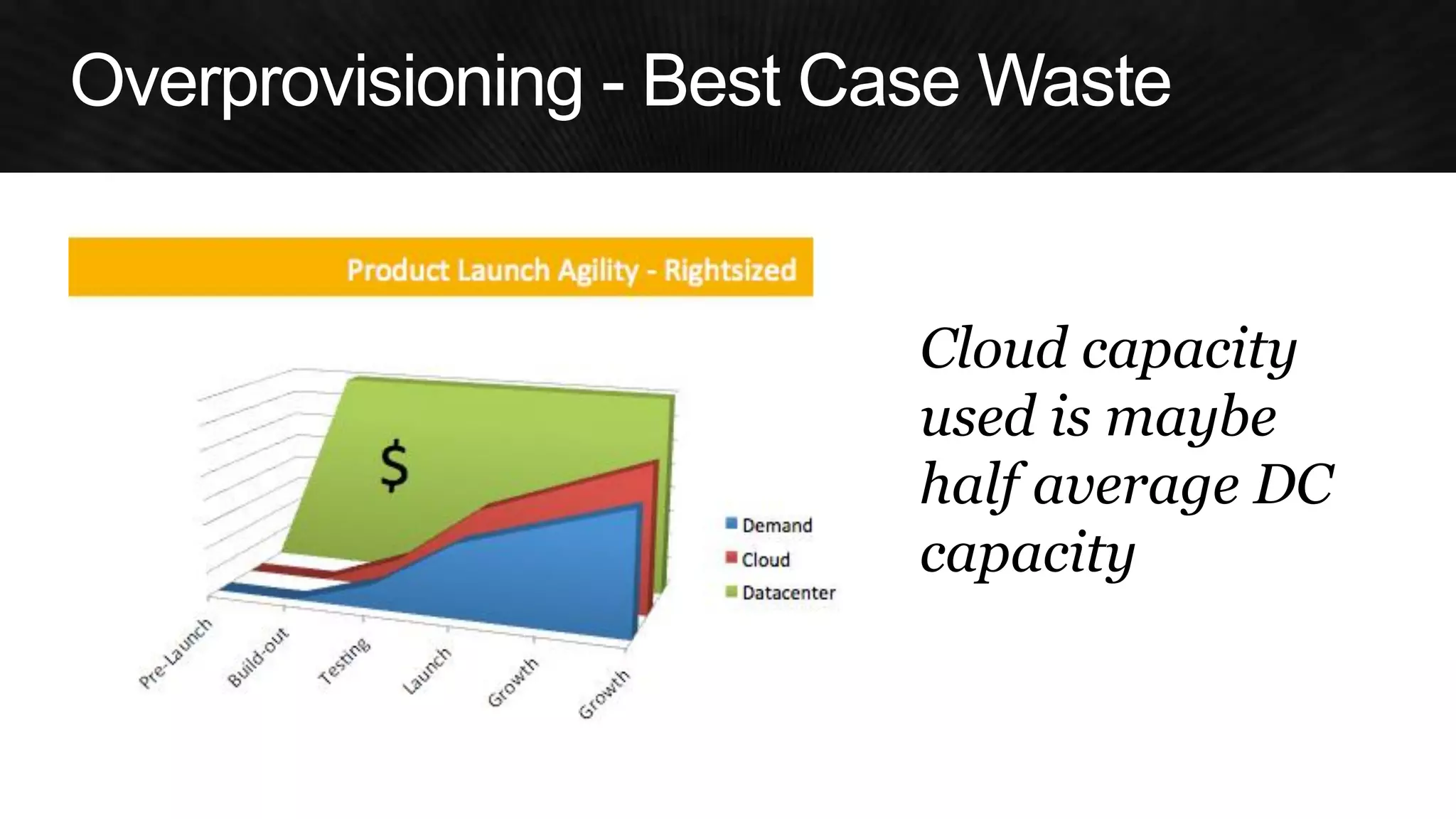

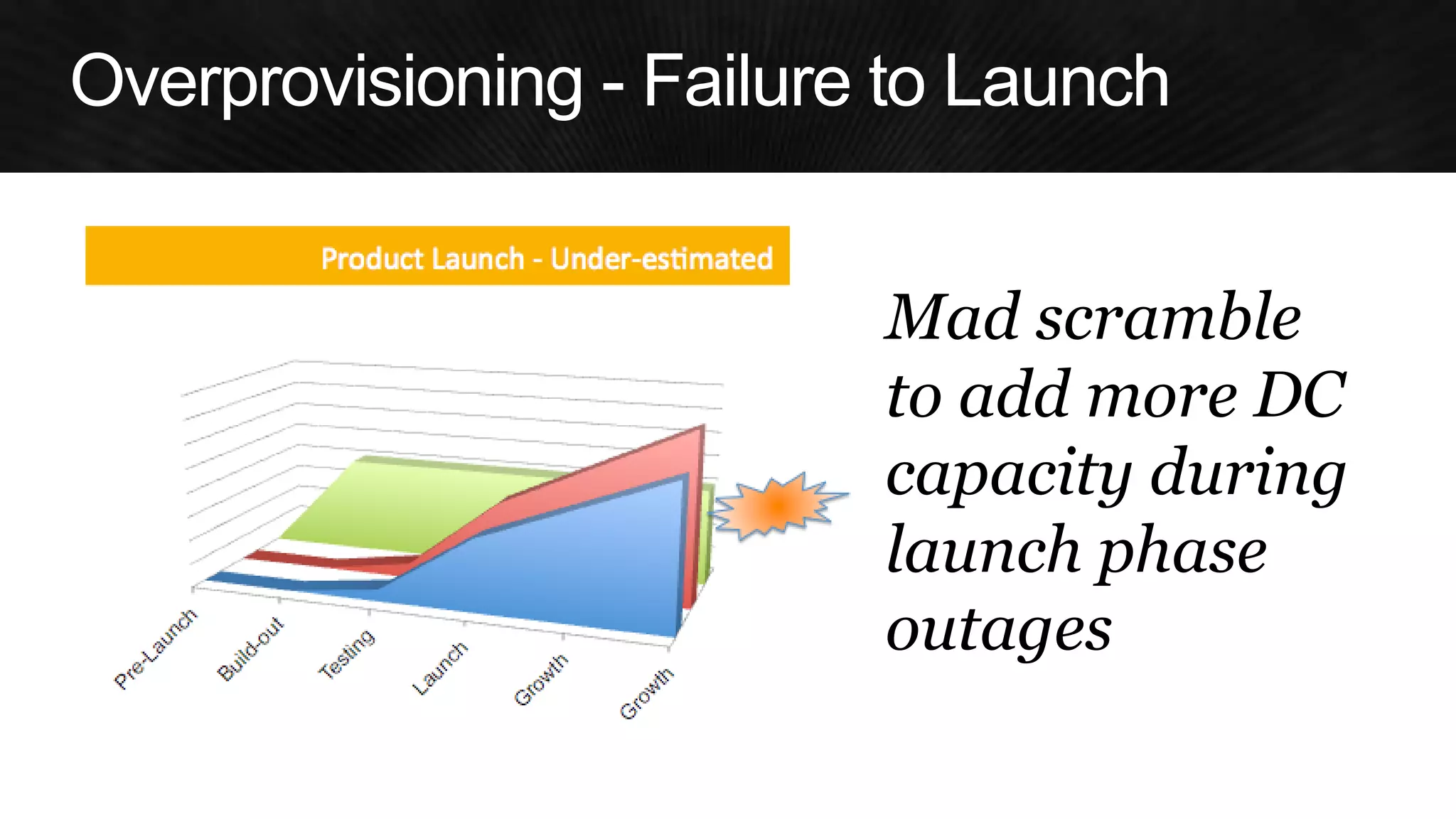

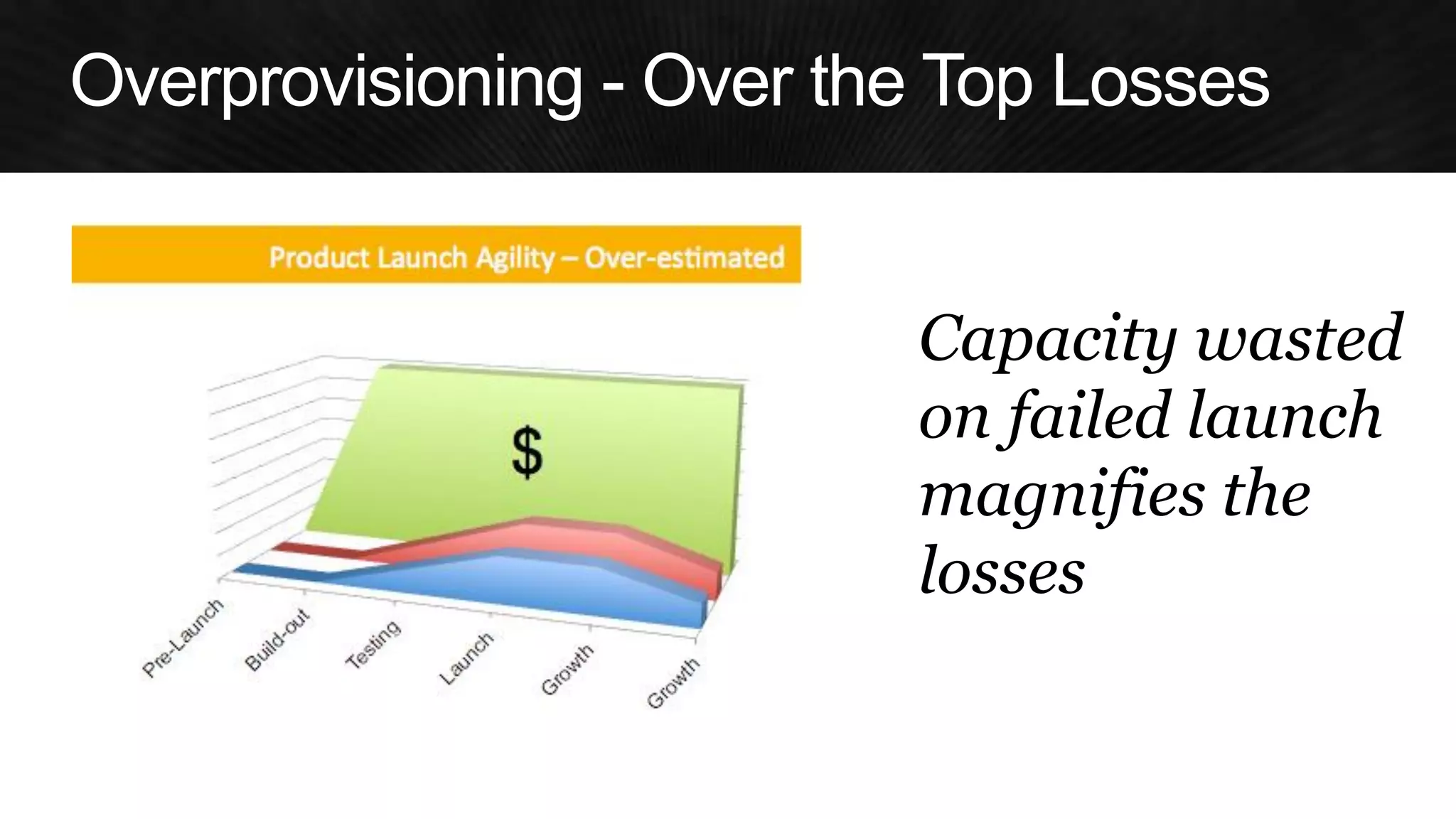

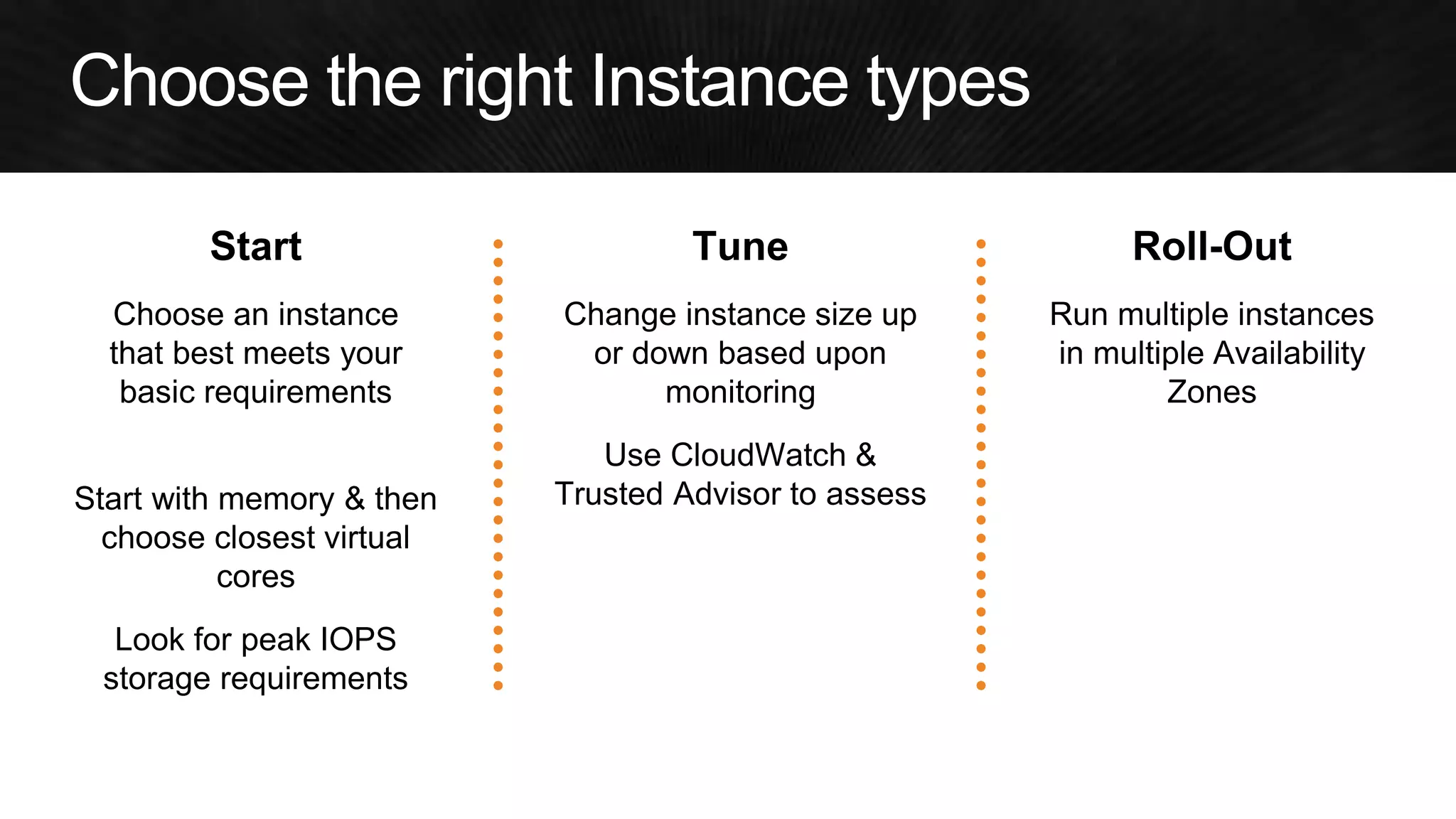

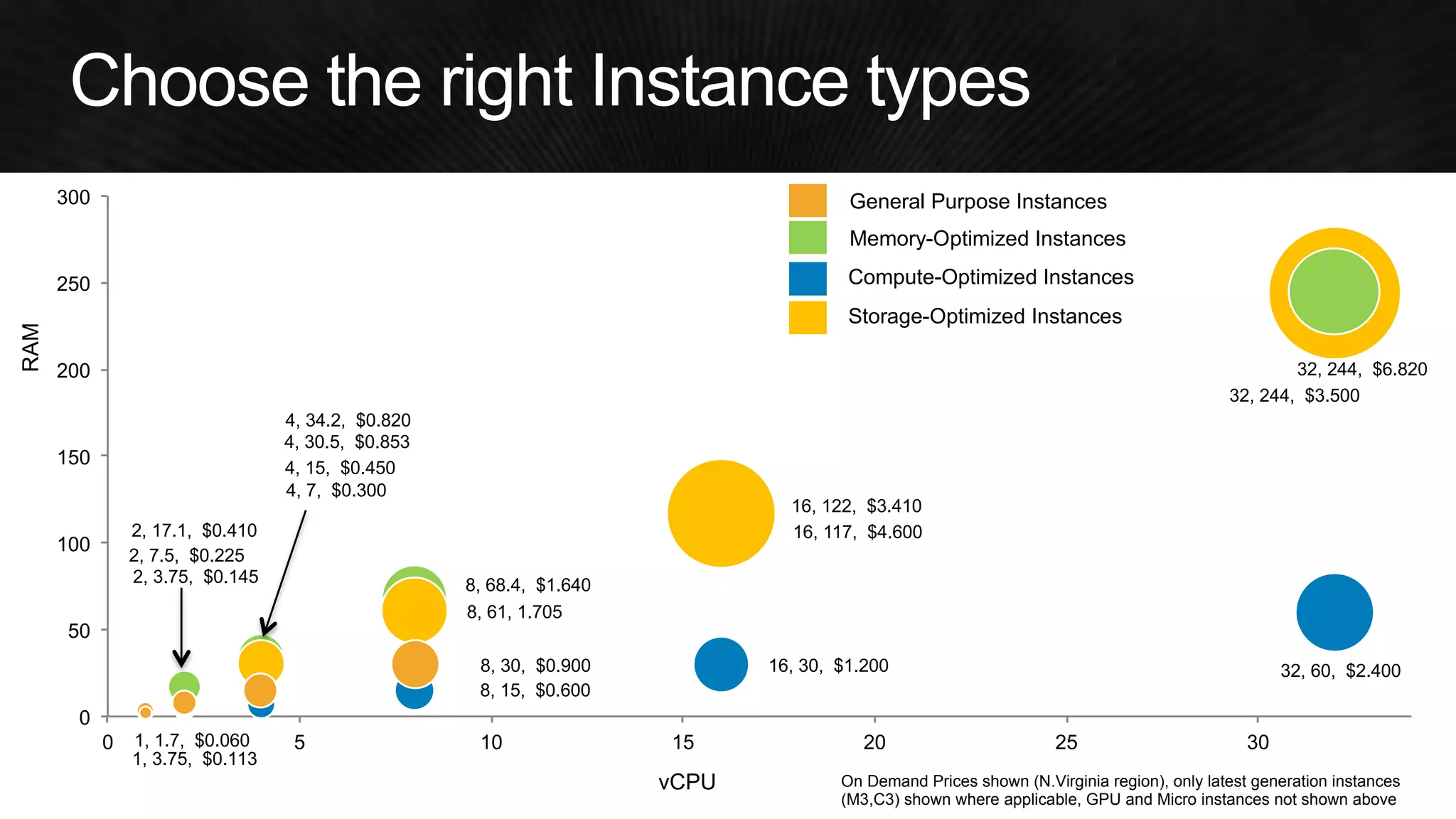

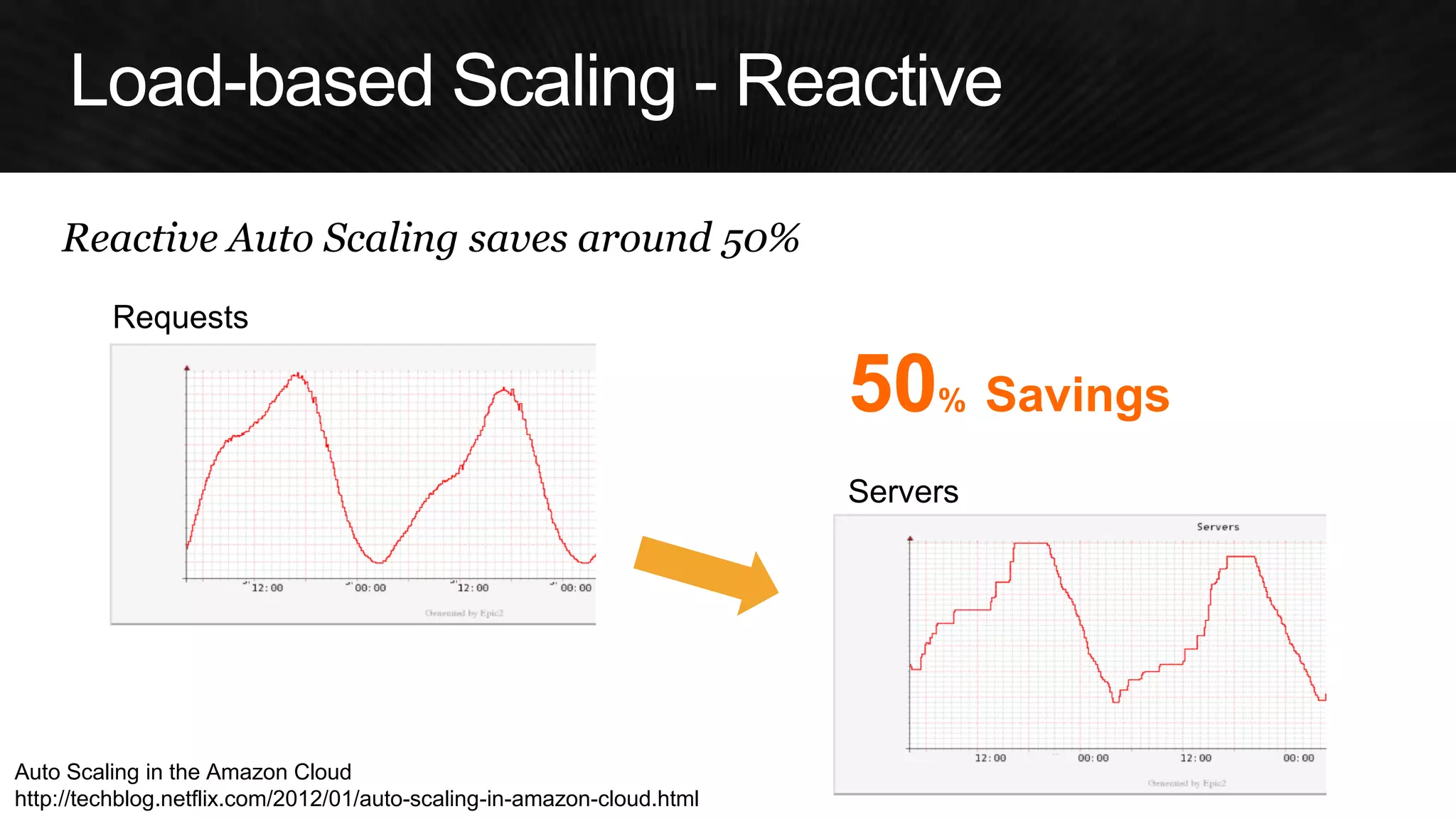

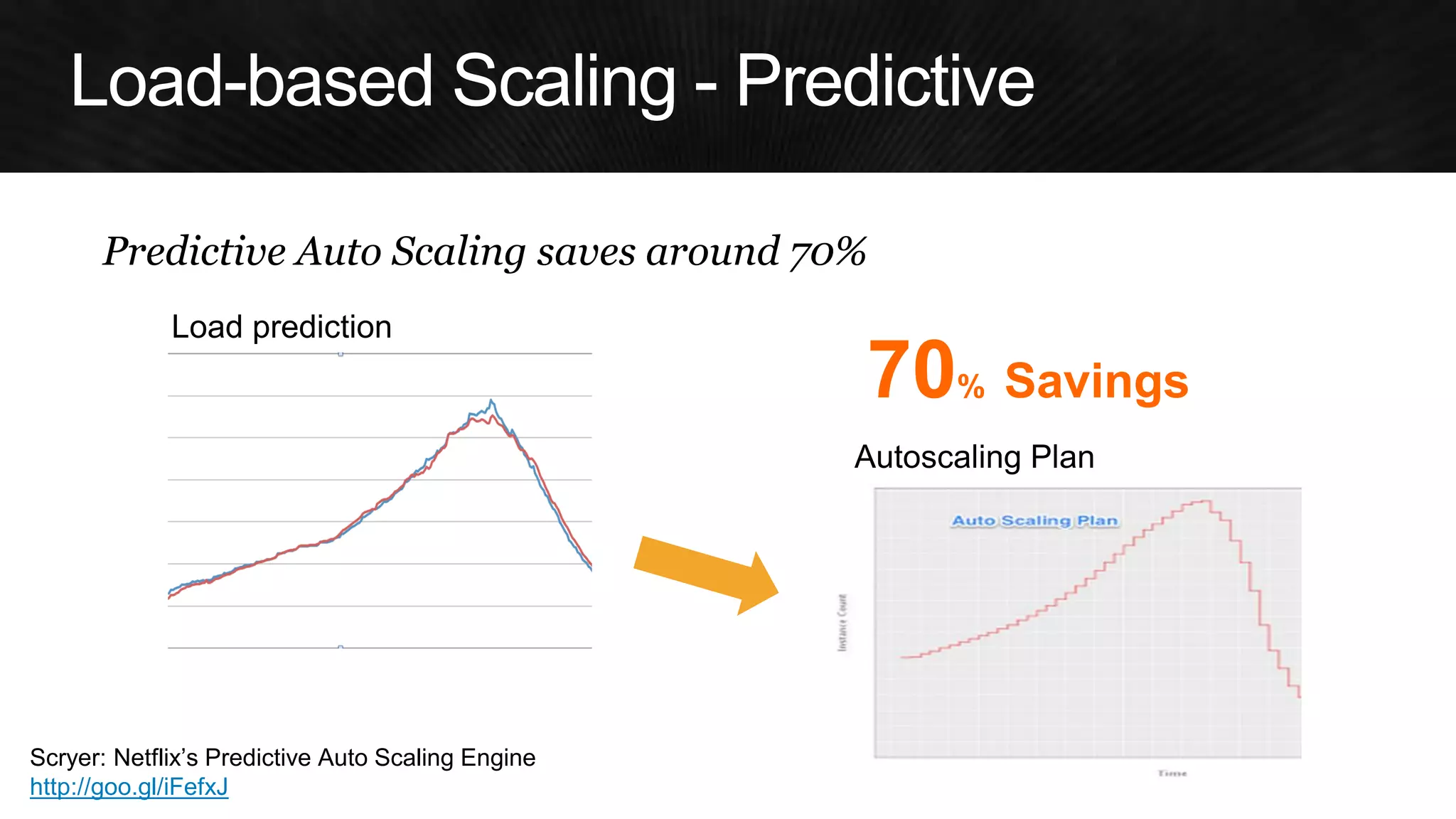

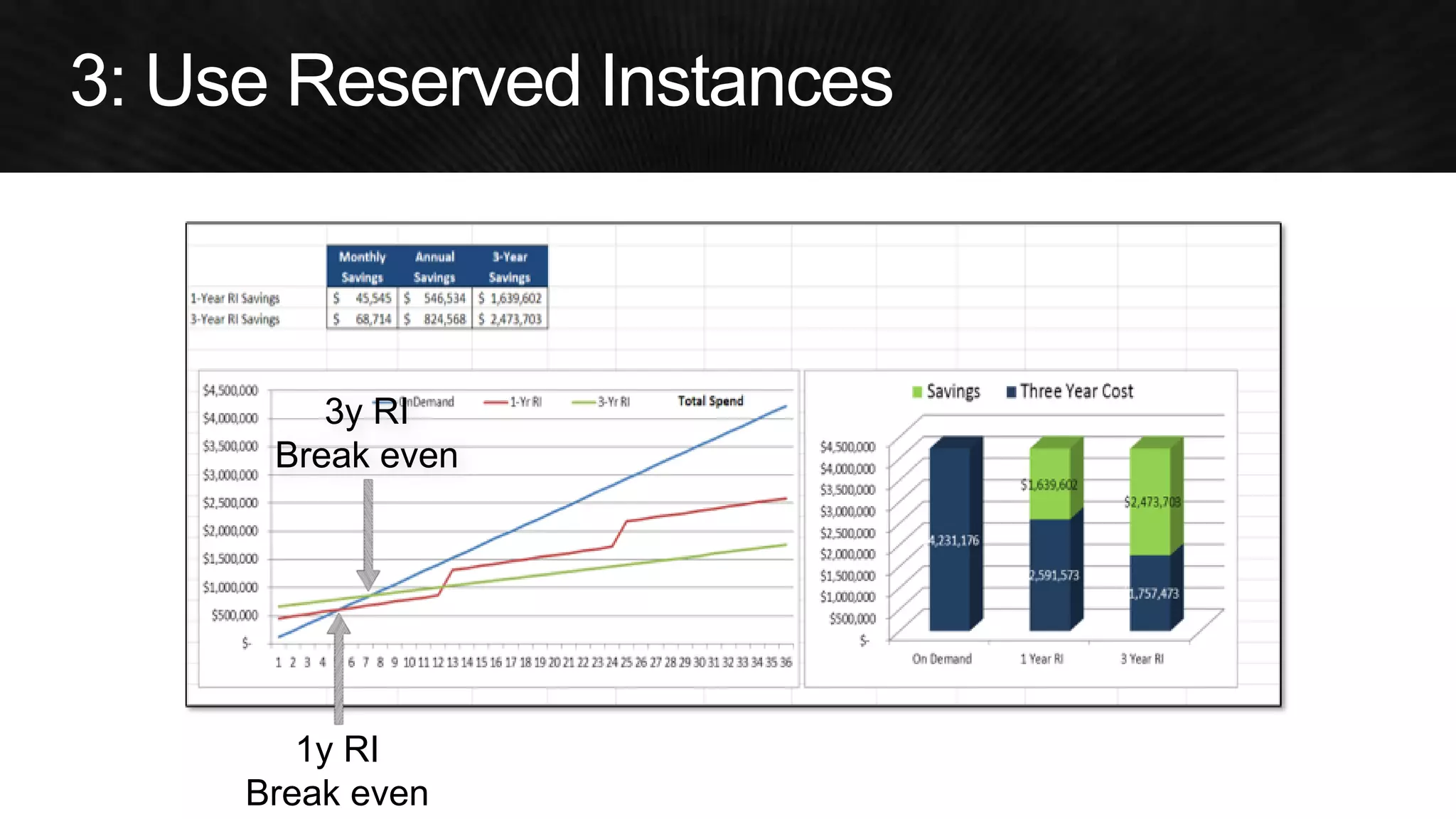

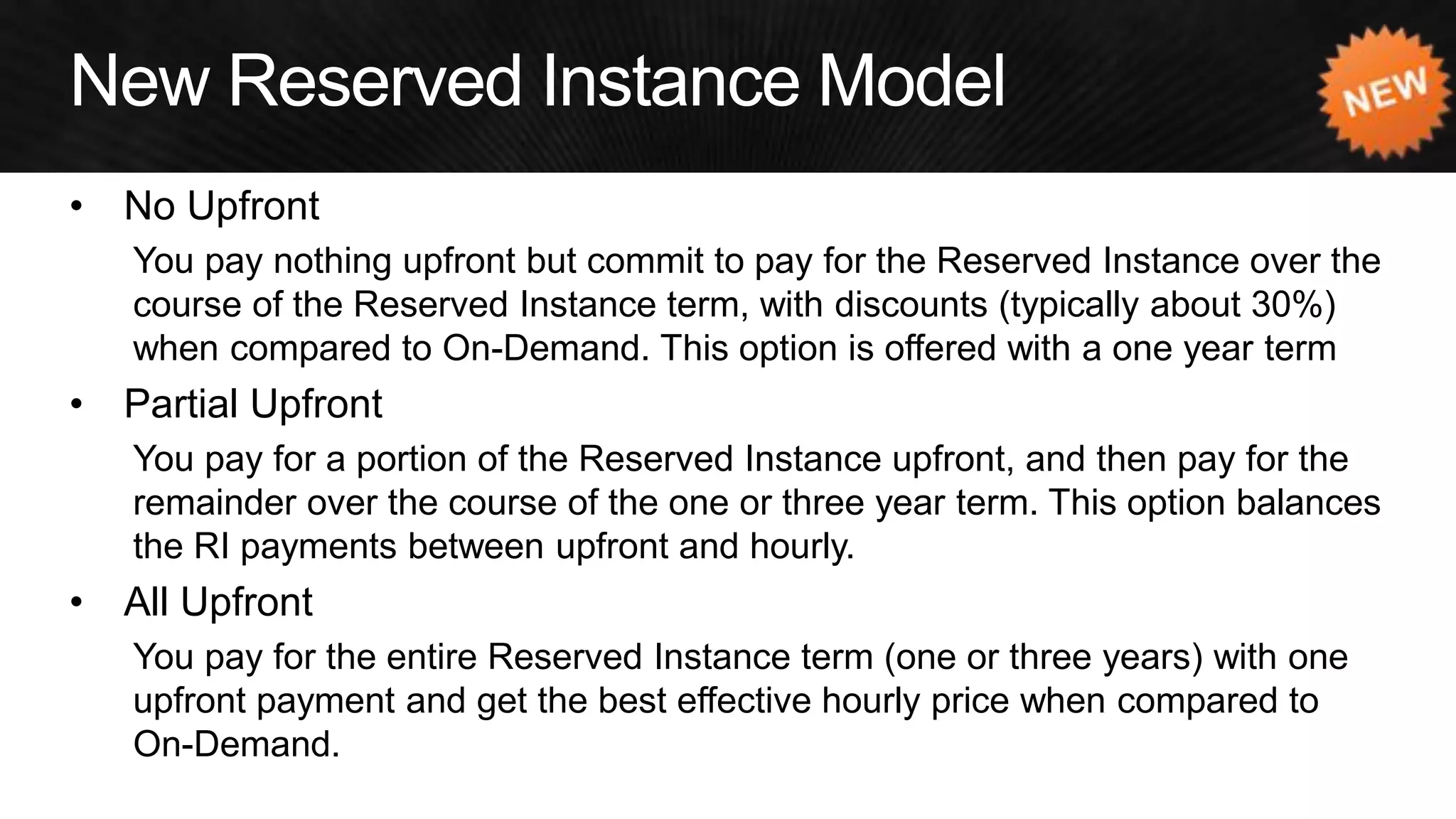

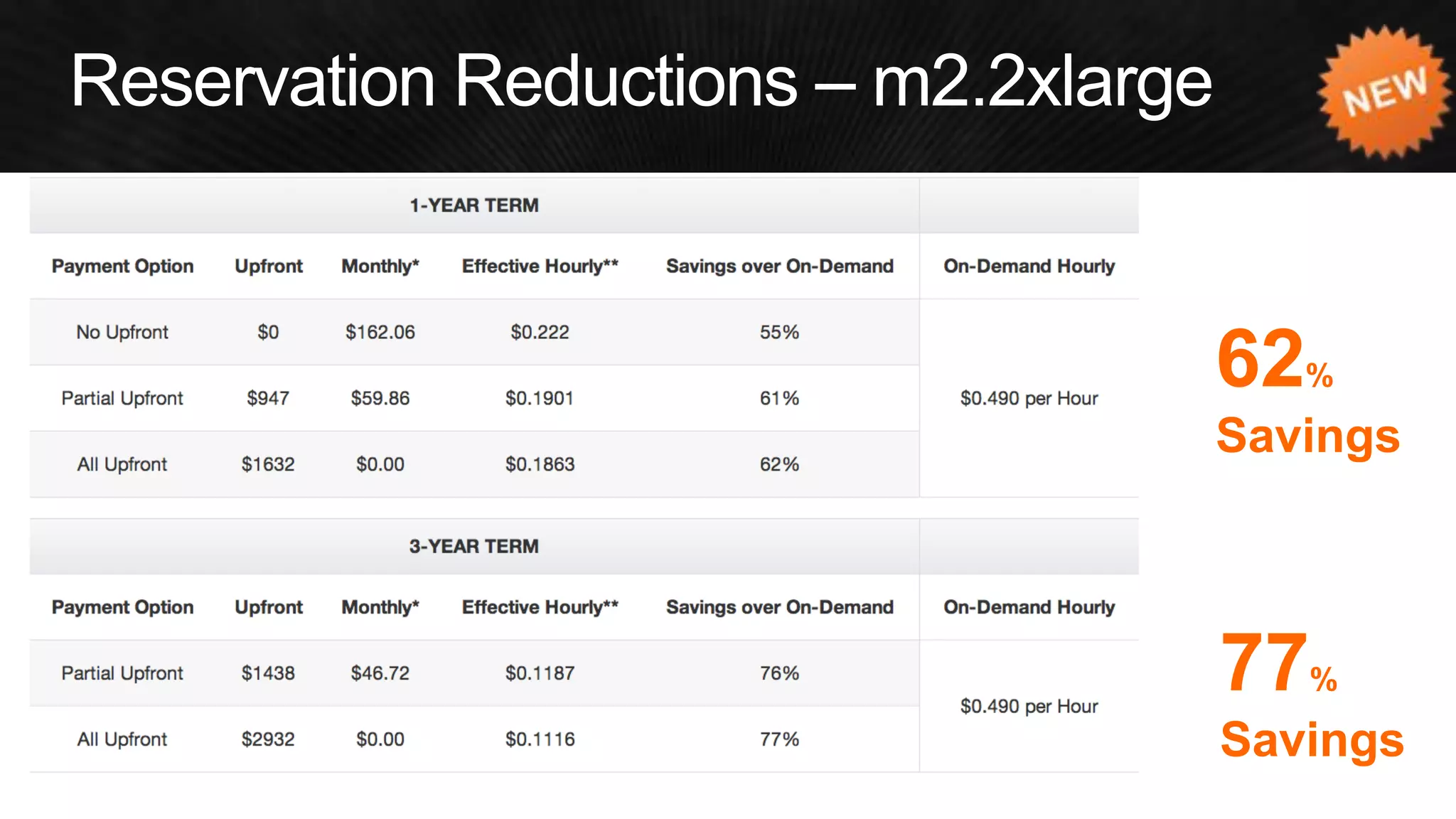

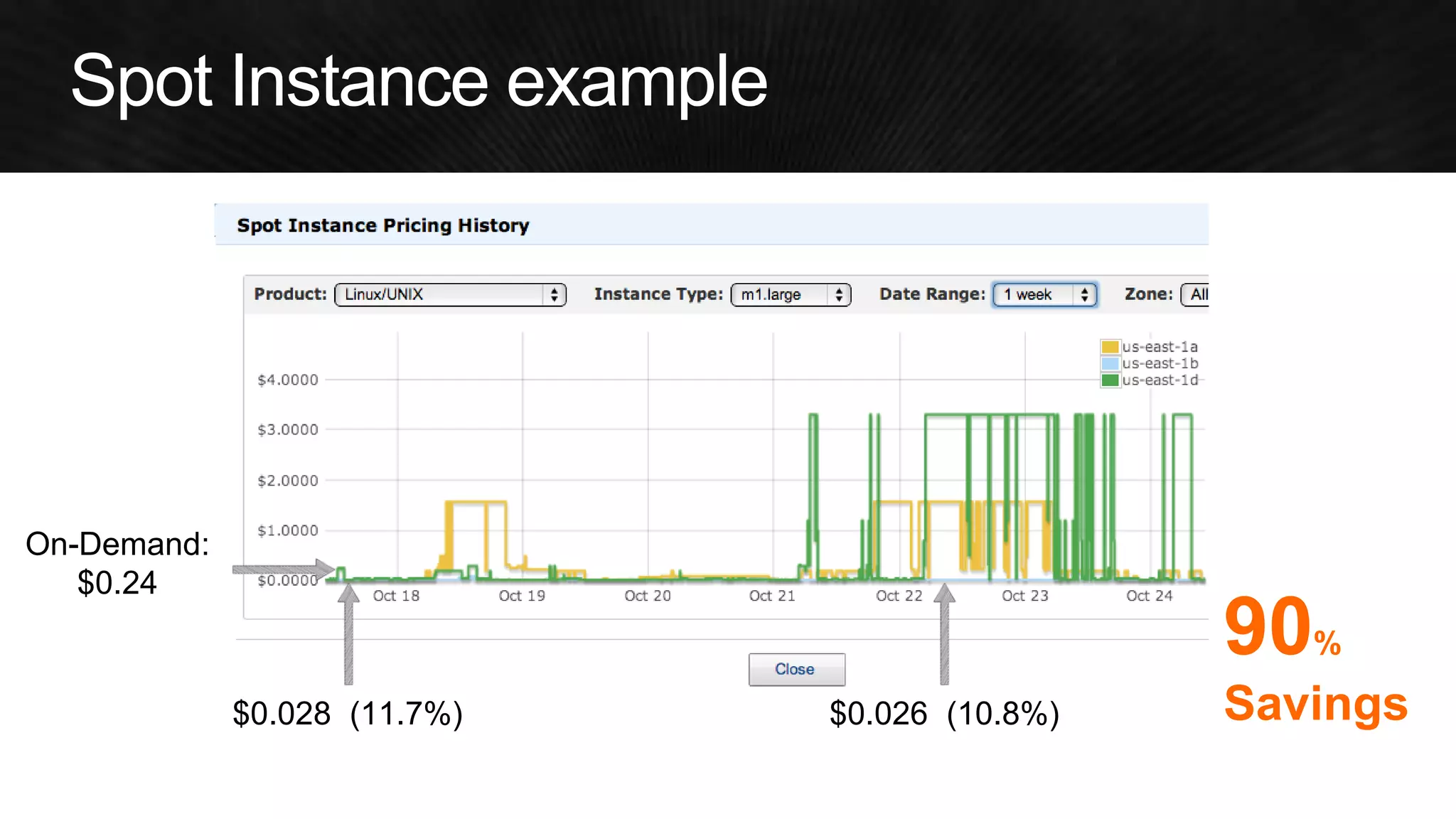

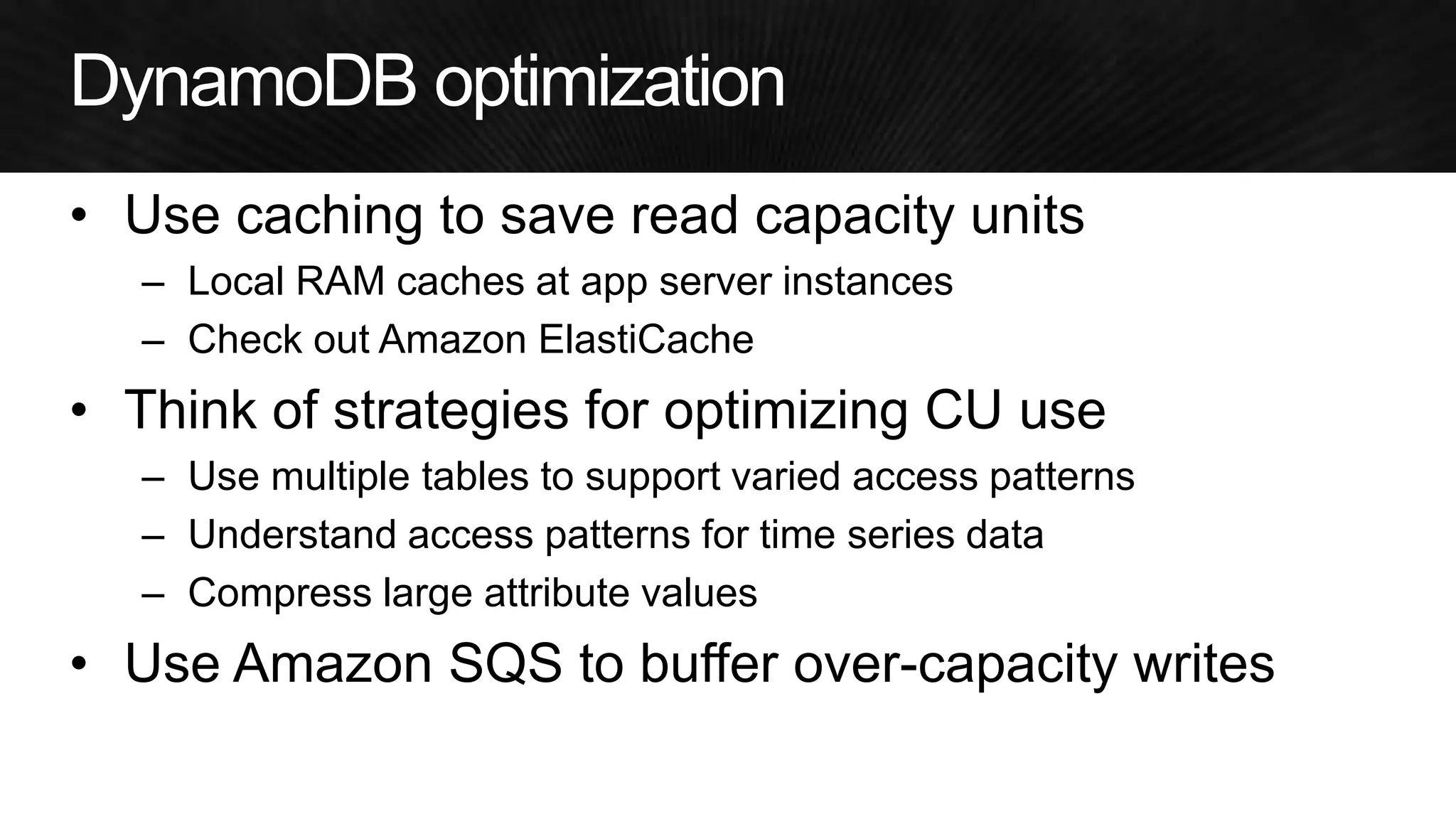

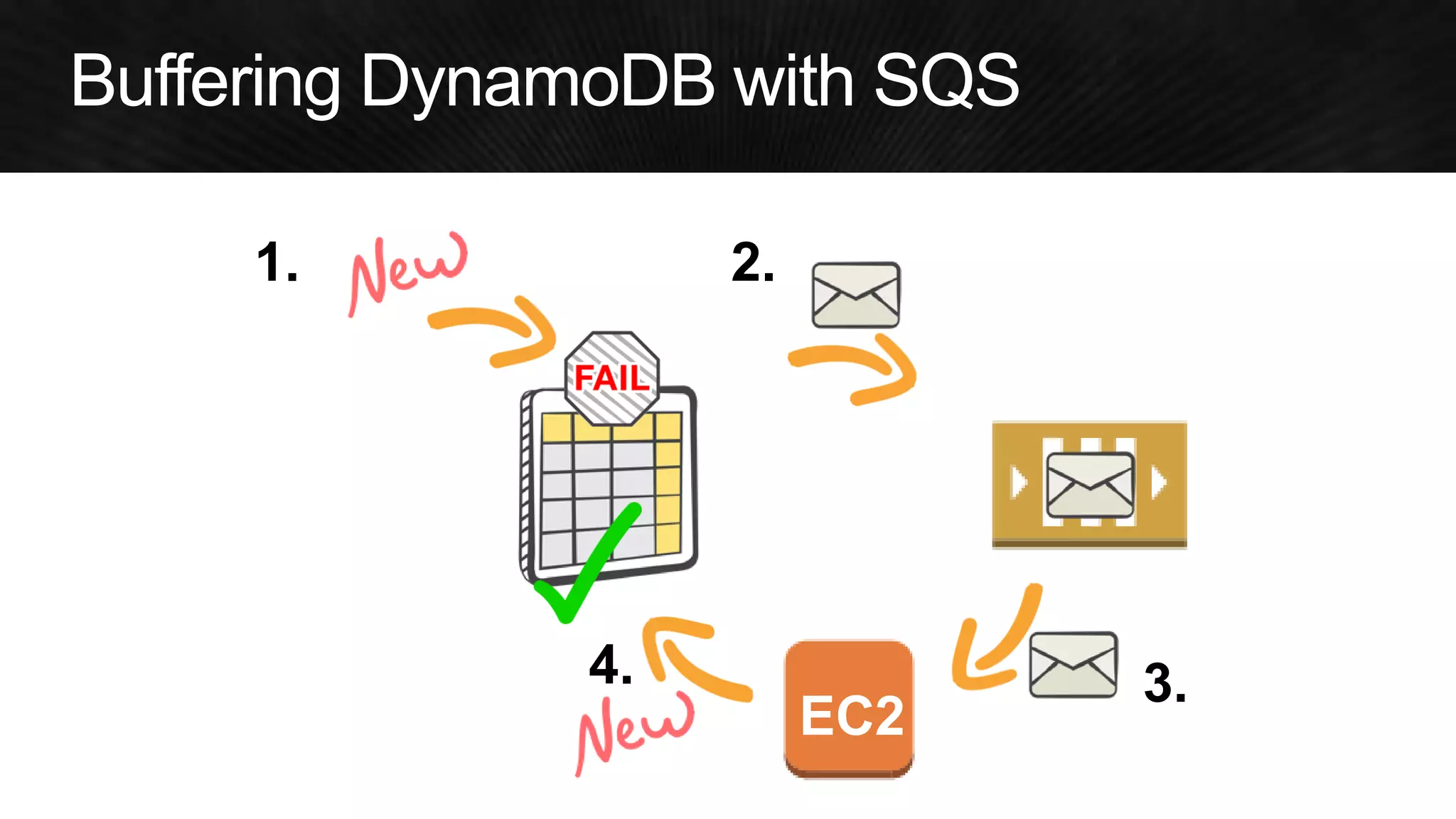

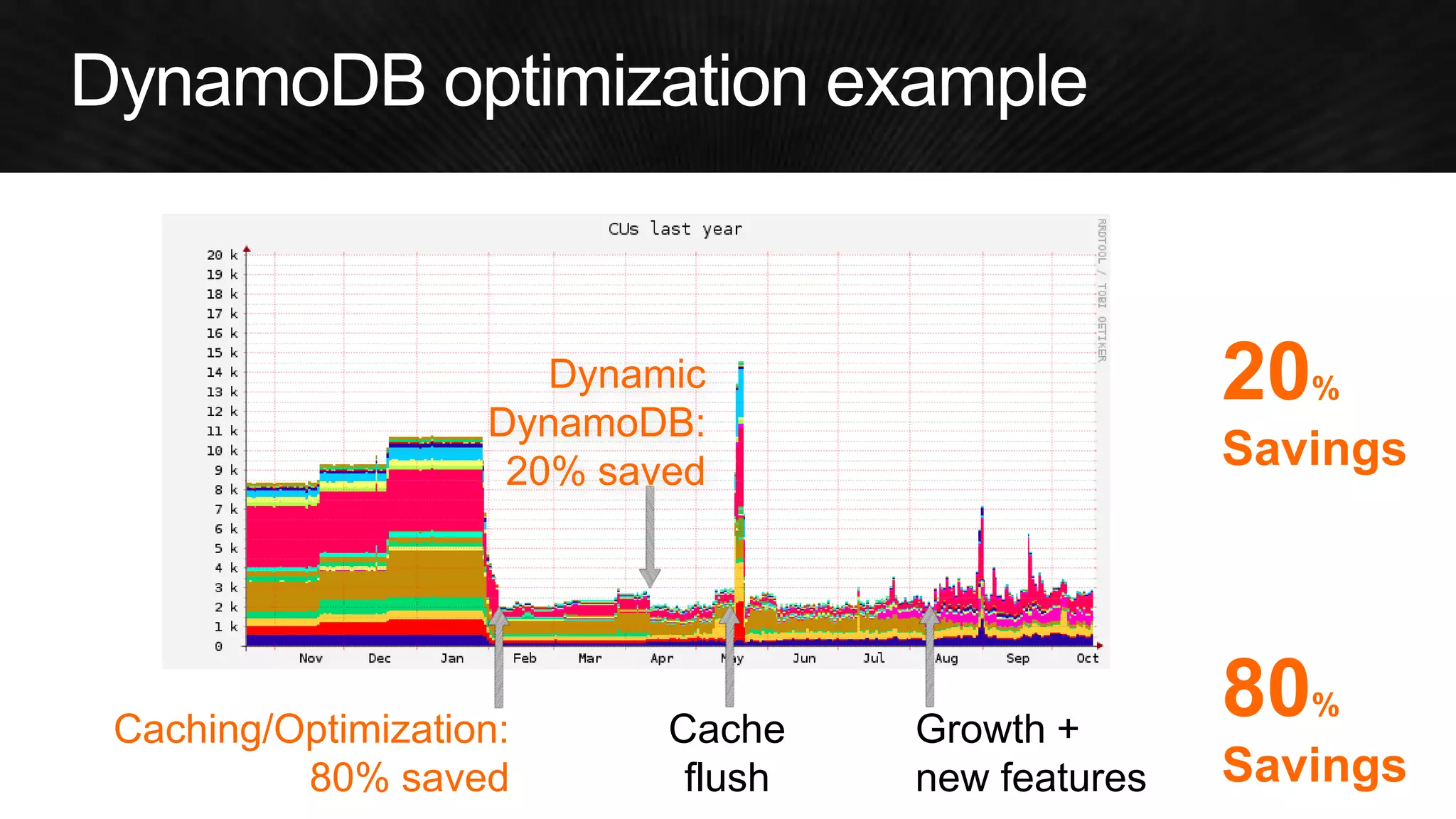

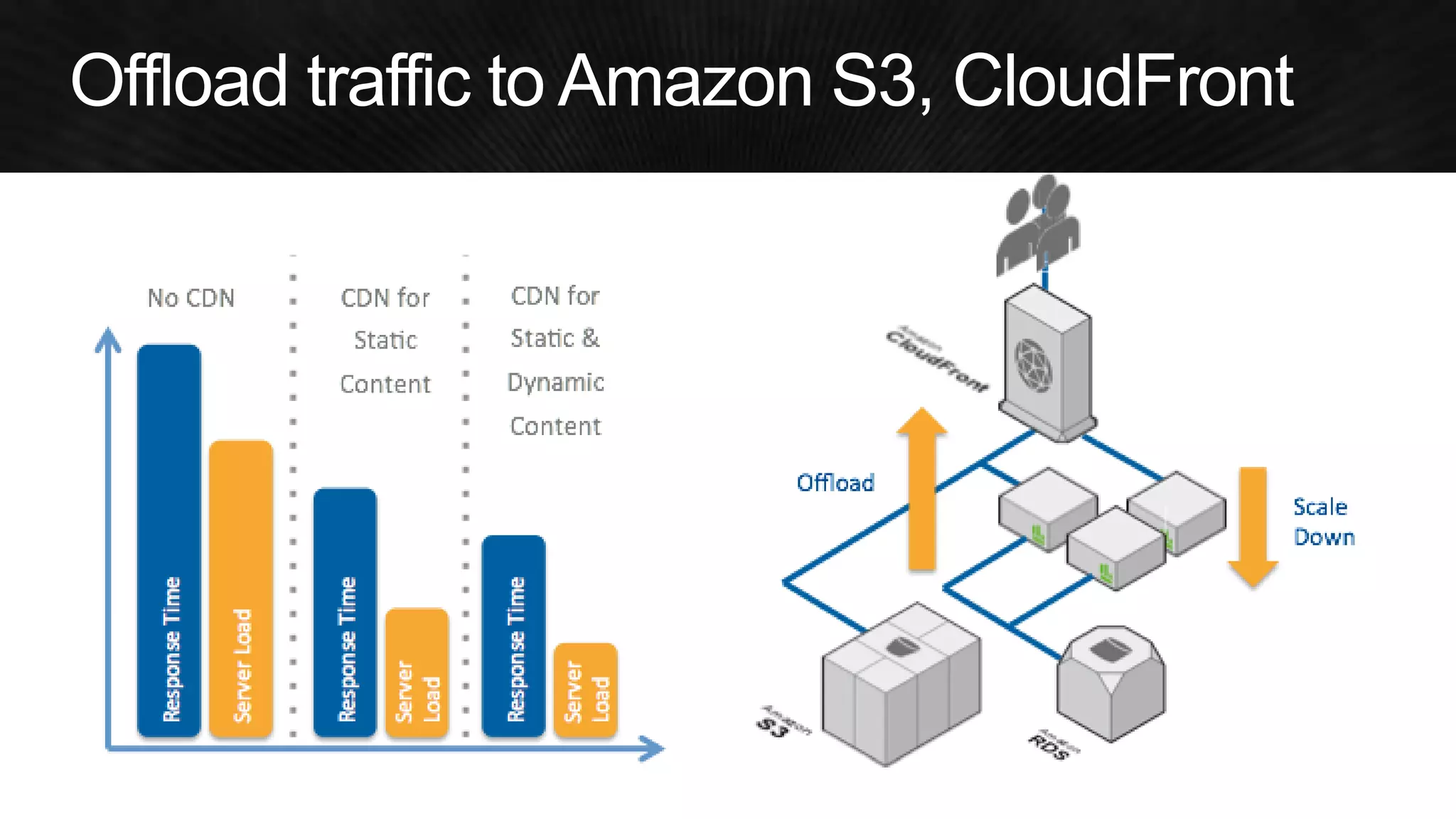

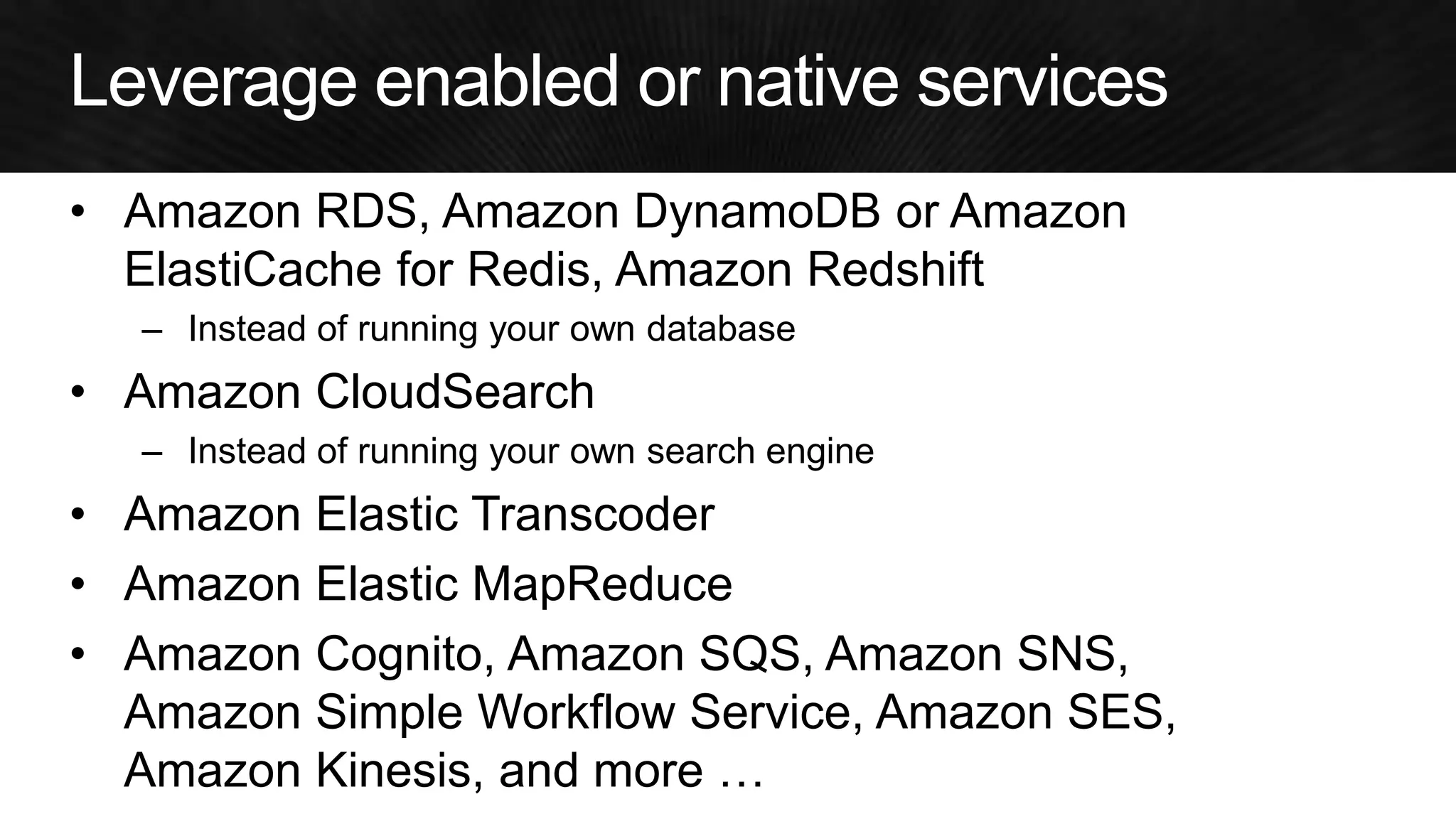

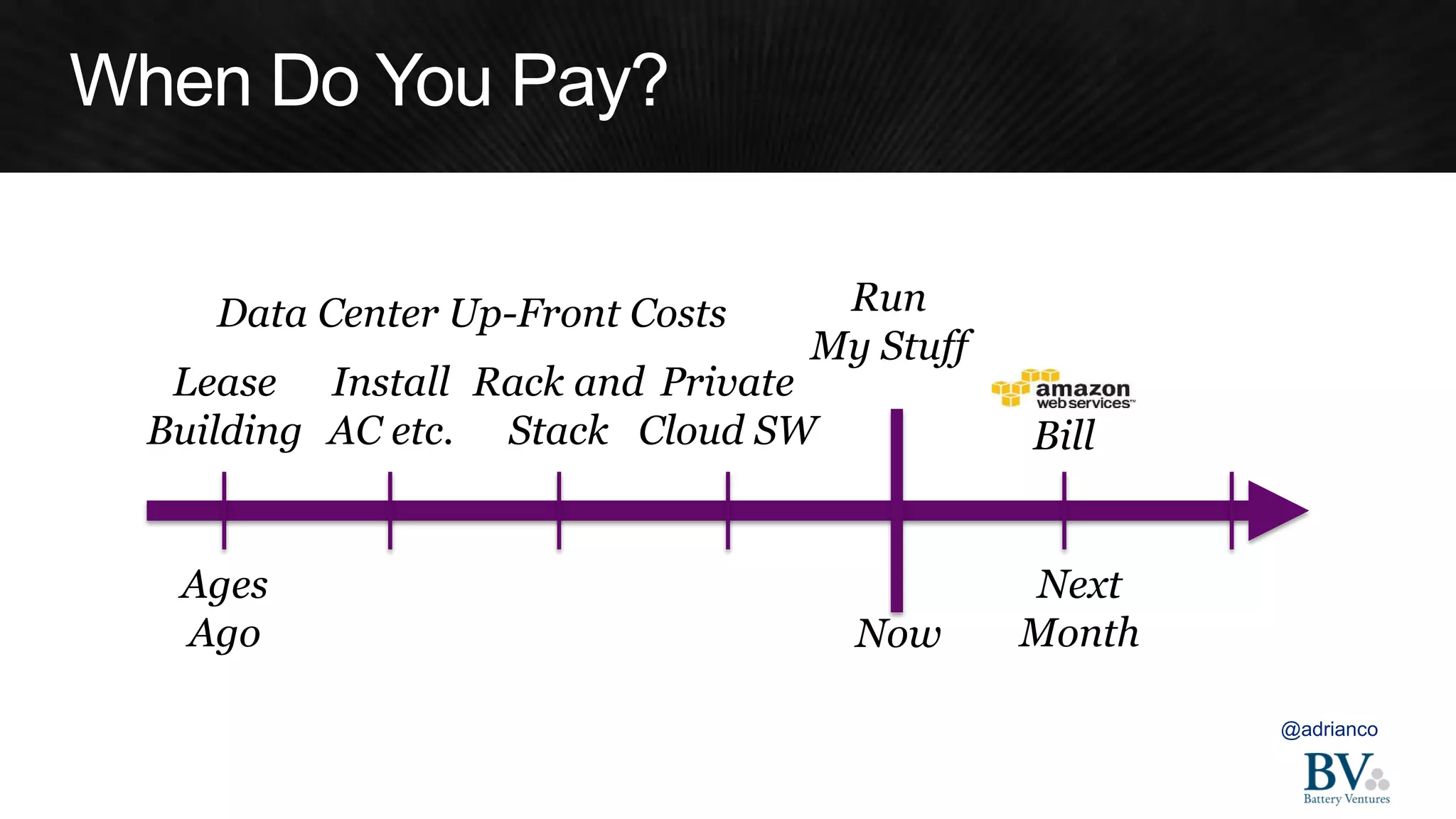

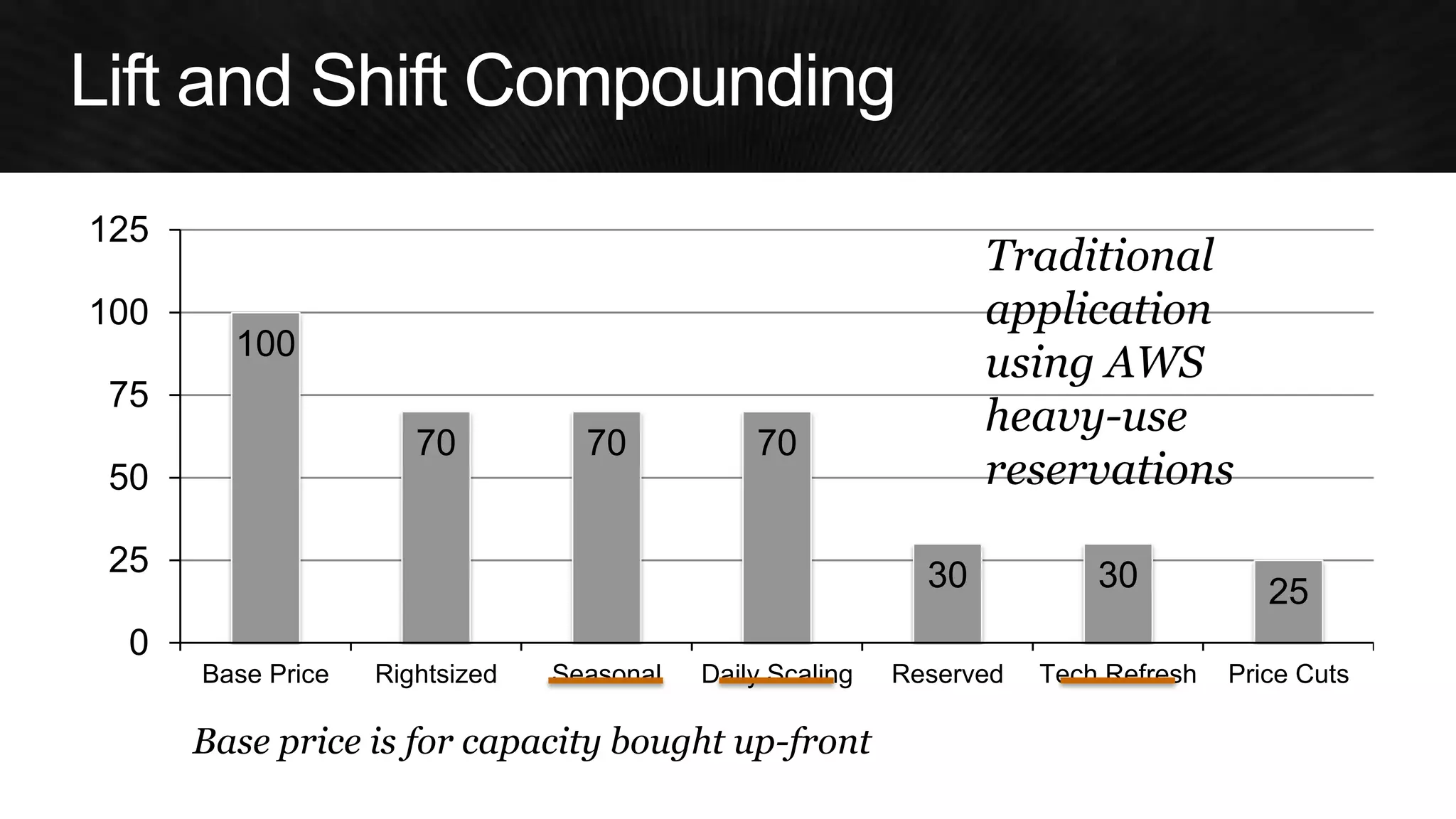

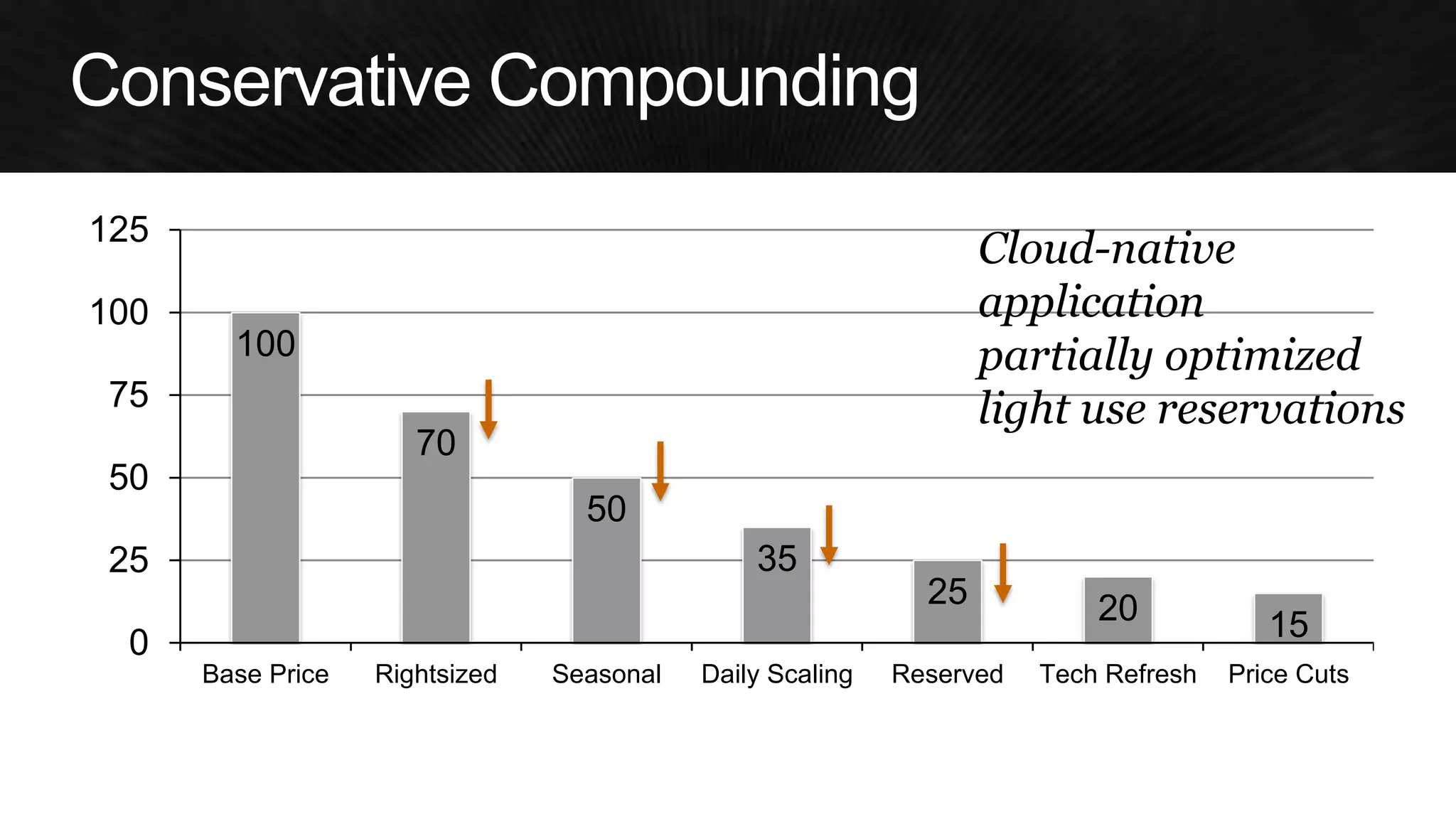

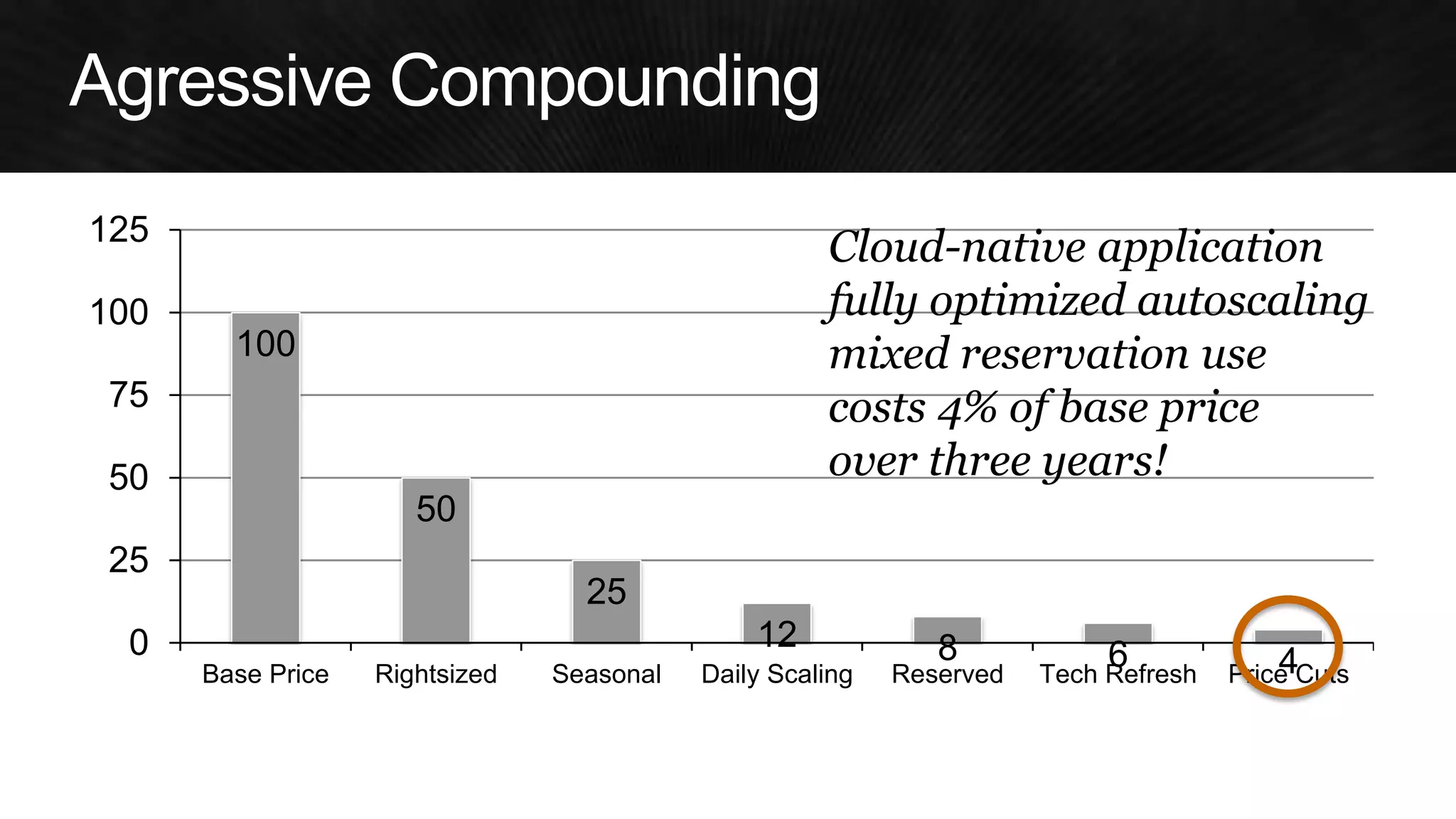

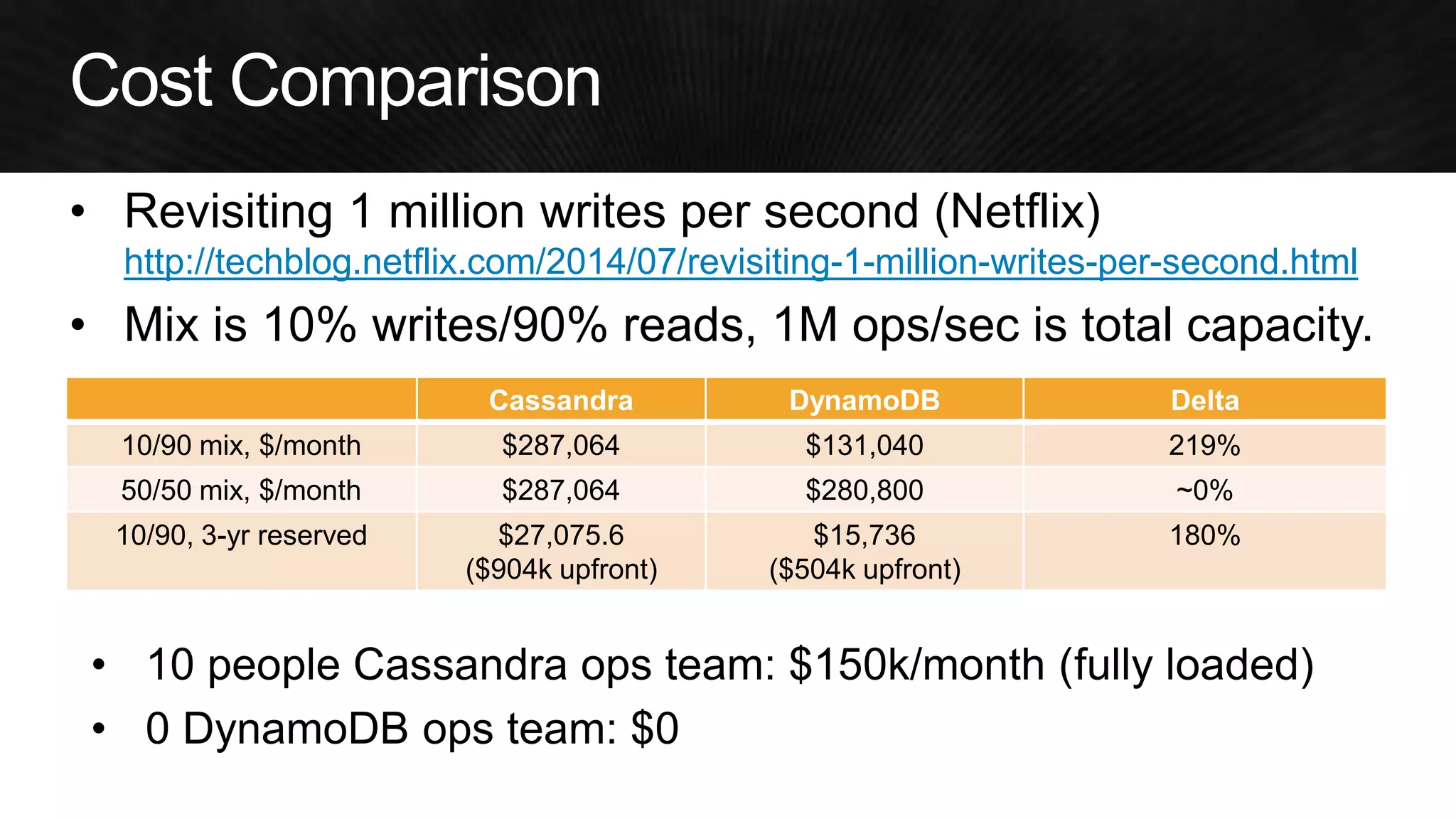

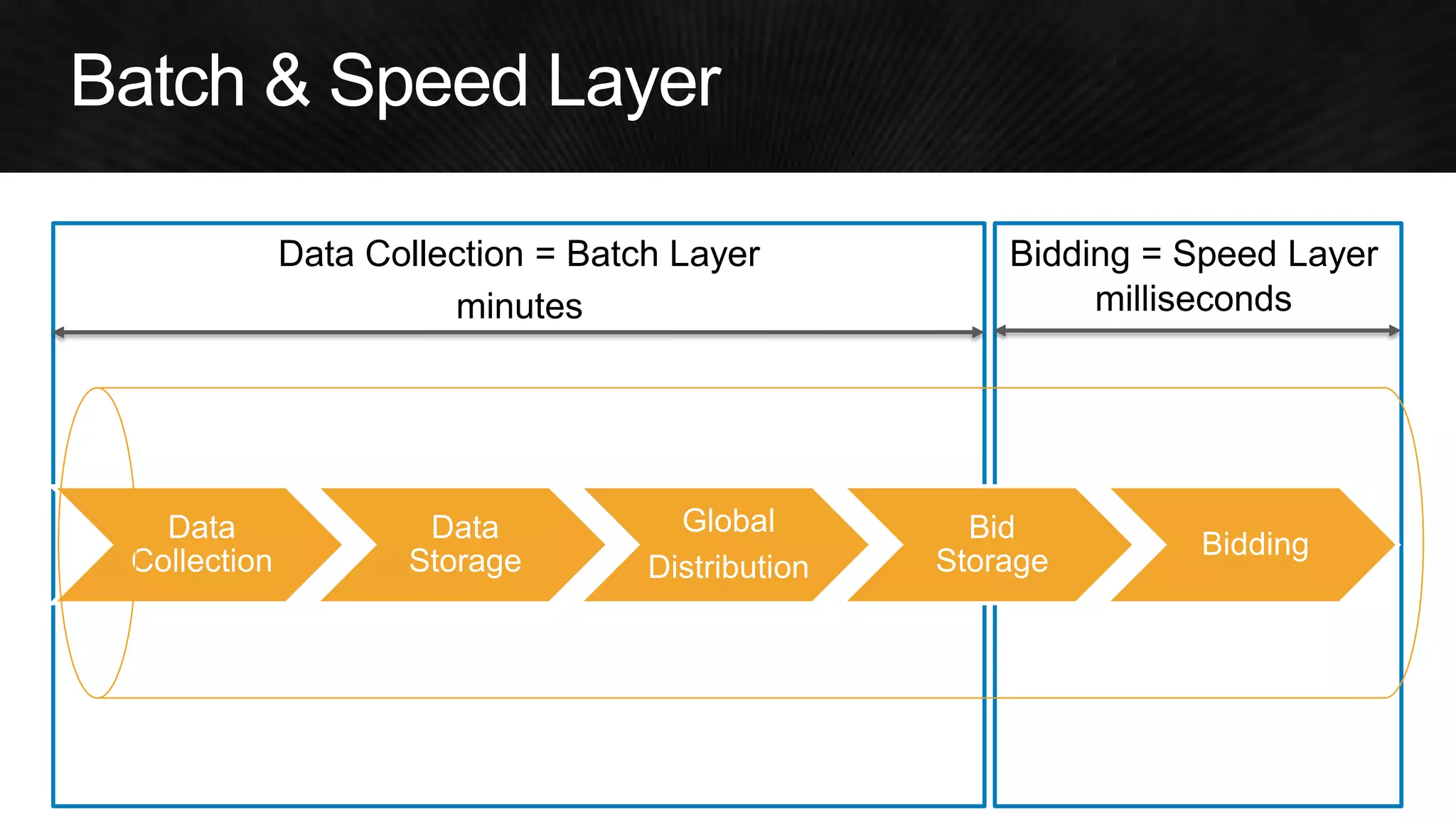

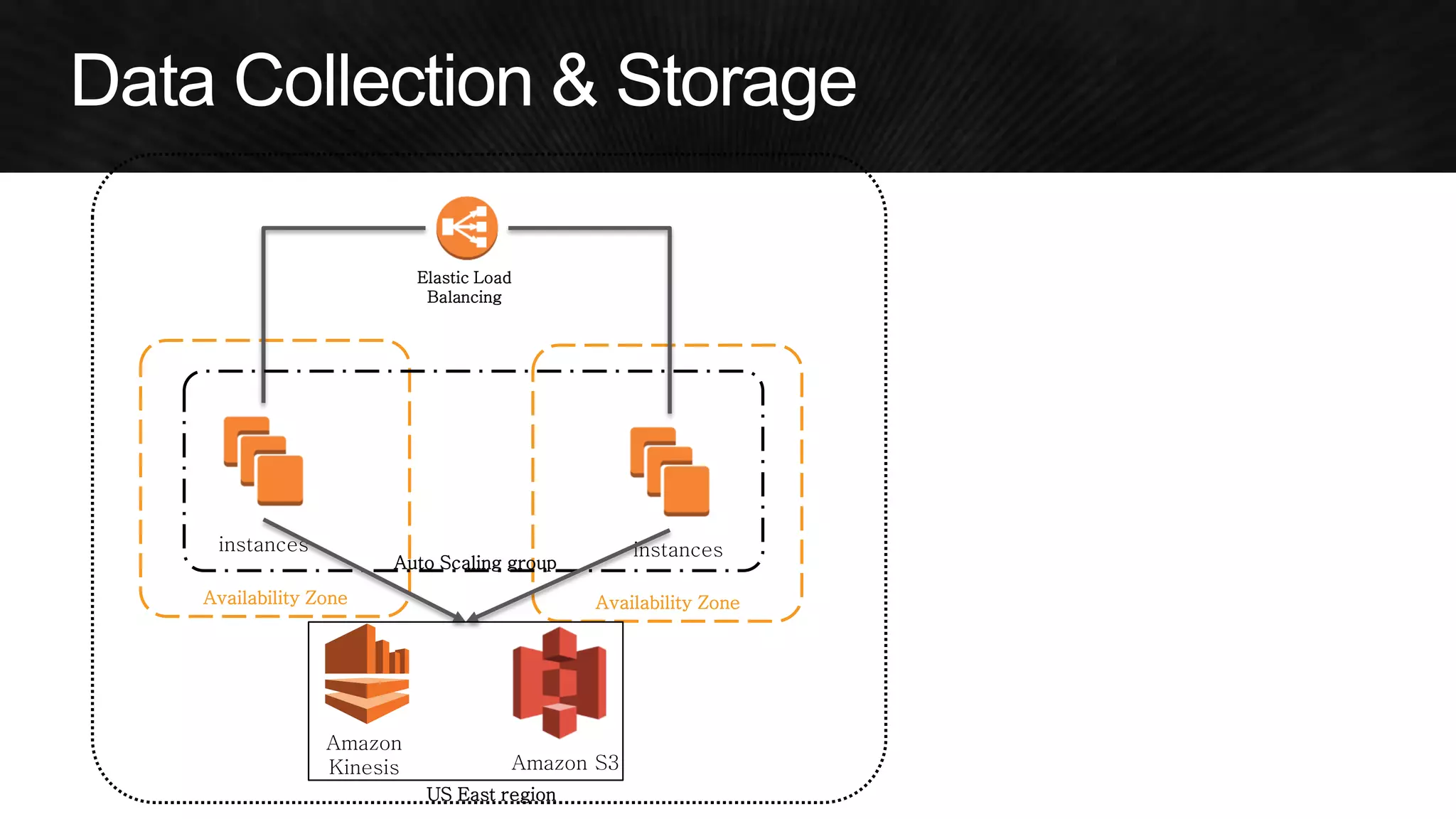

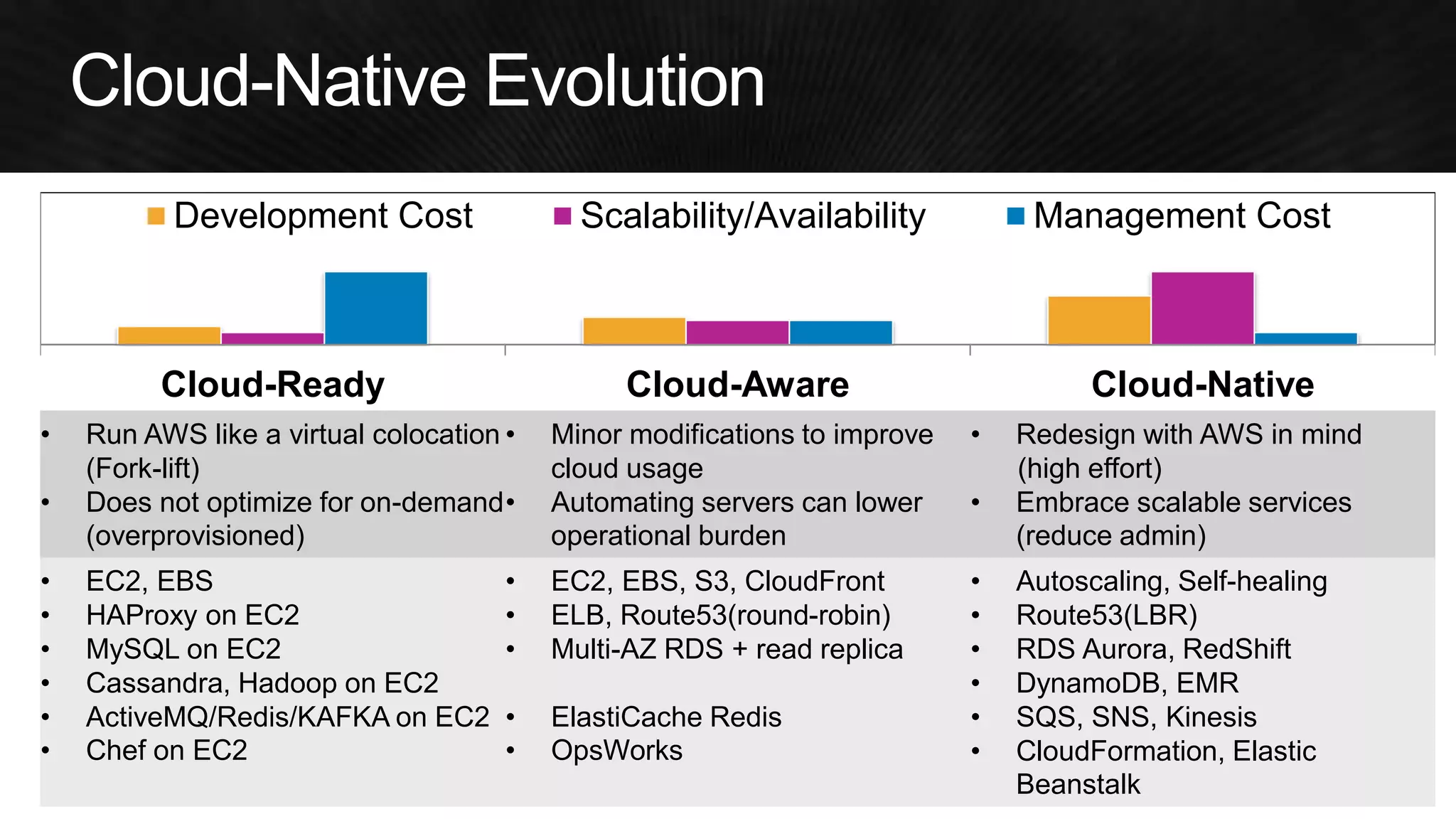

The document presents strategies for optimizing cost and performance on AWS, drawing on real-world examples from Adobe Systems. It argues that cloud-native architectures lower total cost of ownership (TCO) and improve disaster recovery, and it offers practical tips for managing resources efficiently. Its recommendations are to use managed AWS services, automate operations, and design applications so they maximize performance while minimizing cost.