This document provides a 3-paragraph summary of an artificial neural networks lecture:

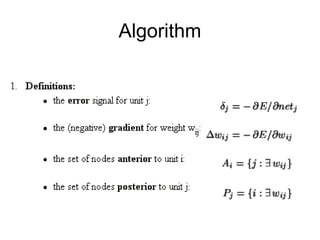

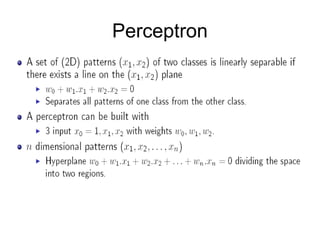

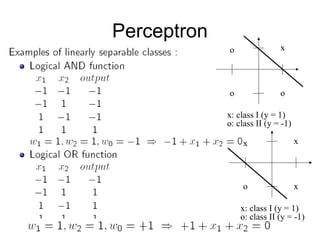

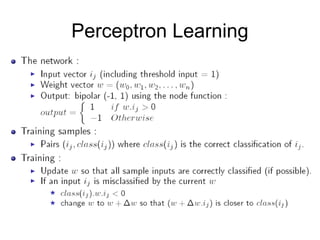

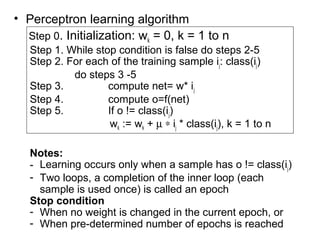

The lecture discusses perceptrons and the difference between linearly separable and non-linearly separable data. A perceptron uses a learning algorithm that updates its weights until the input patterns are classified correctly, which is possible when the classes are linearly separable. However, a single perceptron cannot solve problems such as the XOR function, which require a more complex decision boundary. The document works through XOR as an example of a problem that perceptrons cannot solve on their own.
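As a rough illustration of this point, the following sketch trains a single threshold unit on AND (linearly separable) and on XOR (not linearly separable). The function name `train_perceptron`, the 0/1 step activation, the zero initial weights, and the learning rate `mu = 0.5` are assumptions made for this example, not details taken from the lecture.

```python
def step(net):
    """Threshold activation: output 1 when the net input is positive, else 0."""
    return 1 if net > 0 else 0


def train_perceptron(samples, mu=0.5, max_epochs=100):
    """Train one threshold unit with the perceptron rule.

    samples is a list of (inputs, target) pairs with targets in {0, 1}.
    Returns (weights, bias, converged); converged is True once a full
    pass over the data makes no mistakes.
    """
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in samples:
            net = sum(w * x for w, x in zip(weights, inputs)) + bias
            output = step(net)
            if output != target:
                mistakes += 1
                # Shift the decision boundary toward the misclassified pattern.
                delta = mu * (target - output)
                weights = [w + delta * x for w, x in zip(weights, inputs)]
                bias += delta
        if mistakes == 0:
            return weights, bias, True
    return weights, bias, False


# Truth tables used as toy training sets (illustrative, not from the slides).
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias, and_converged = train_perceptron(AND_DATA)
xor_weights, xor_bias, xor_converged = train_perceptron(XOR_DATA)

print("AND converged:", and_converged)
print("XOR converged:", xor_converged)
```

Under these assumptions the AND run stops after a few passes, while the XOR run exhausts `max_epochs`, because no single straight line separates the two XOR classes.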

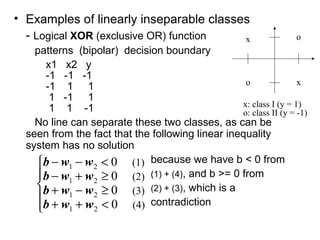

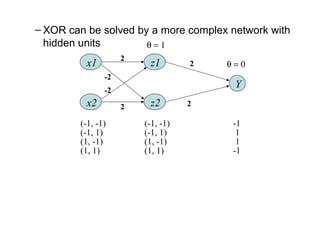

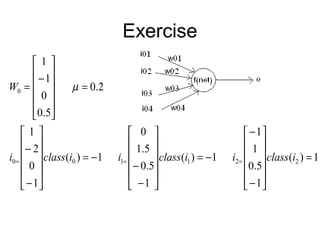

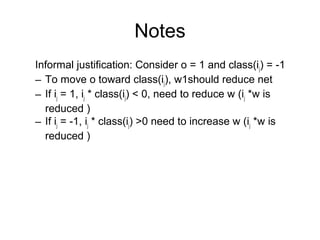

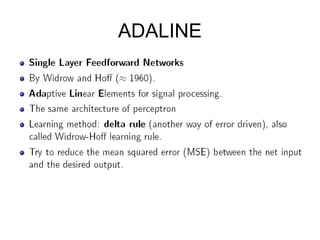

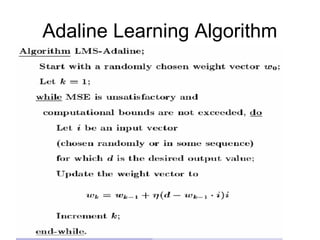

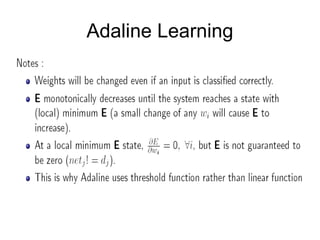

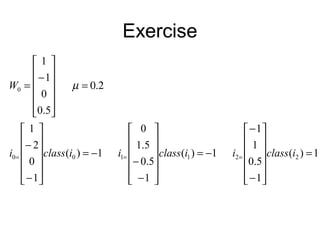

The document then introduces the Adaline learning algorithm. An Adaline (adaptive linear neuron) learns its weights with a learning rule that minimizes the mean squared error between the actual network output and the target output. An exercise is provided to walk through an

![Slide 10: perceptron learning worked example with μ = 0.2. The initial weights W = (1, −1, 0, 0.5) and the first input i = (1, −2, 0, −1) give net = Wᵀi = 1 + 2 + 0 − 0.5 = 2.5, so the weights are updated to W + μ·class(i)·i = (1, −1, 0, 0.5) + 0.2·(−1)·(1, −2, 0, −1) = (0.8, −0.6, 0, 0.7). The second input i = (0, 1.5, −0.5, −1) gives net = −1.6, and the weights are left unchanged.](https://image.slidesharecdn.com/artificialneuralnetwoks2-150705065533-lva1-app6892/85/Artificial-neural-netwoks2-10-320.jpg)

![Slide 11: perceptron learning worked example, continued. The third input i = (−1, 1, 0.5, −1) gives net = Wᵀi = −2.1, so o = −1 ≠ class(i) = 1 and the weights become (0.8, −0.6, 0, 0.7) + 0.2·(−1, 1, 0.5, −1) = (0.6, −0.4, 0.1, 0.5). Verification: with the updated weights, the first input (1, −2, 0, −1) gives net = 0.9 < 2.5.](https://image.slidesharecdn.com/artificialneuralnetwoks2-150705065533-lva1-app6892/85/Artificial-neural-netwoks2-11-320.jpg)
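The arithmetic of the worked perceptron example on the two preceding slides can be replayed in a few lines. The sketch below assumes the values as read from the slides: initial weights (1, −1, 0, 0.5), learning rate μ = 0.2, three input patterns with classes −1, −1 and +1, a sign activation, and the update W ← W + μ·class(i)·i applied only when a pattern is misclassified. The variable and function names are illustrative, and treating net = 0 as class −1 is an arbitrary choice that this example never exercises.

```python
def dot(weights, inputs):
    """Net input: the inner product of the weight and input vectors."""
    return sum(w * x for w, x in zip(weights, inputs))


def sign(net):
    """Bipolar threshold; mapping net == 0 to -1 is an assumption."""
    return 1 if net > 0 else -1


MU = 0.2  # learning rate as read from the slides

# (input pattern, class) pairs as read from the slides.
patterns = [
    ((1.0, -2.0, 0.0, -1.0), -1),
    ((0.0, 1.5, -0.5, -1.0), -1),
    ((-1.0, 1.0, 0.5, -1.0), 1),
]

weights = [1.0, -1.0, 0.0, 0.5]
nets = []
for inputs, cls in patterns:
    net = dot(weights, inputs)
    nets.append(round(net, 6))
    if sign(net) != cls:
        # Misclassified: move the weights along class * input.
        weights = [w + MU * cls * x for w, x in zip(weights, inputs)]

final_weights = [round(w, 6) for w in weights]
# Re-present the first pattern to the updated weights.
verification_net = round(dot(weights, patterns[0][0]), 6)

print(nets, final_weights, verification_net)
```

With these inputs the script reports net values of 2.5, −1.6 and −2.1, final weights of (0.6, −0.4, 0.1, 0.5), and a verification net of 0.9 for the first pattern.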

![Slide 18: Adaline worked example with μ = 0.2. Starting from W = (1, −1, 0, 0.5) and the input i = (1, −2, 0, −1), net = Wᵀi = 2.5, and the weights are updated with the rule W ← W + μ(d − net)i, followed by further update steps for the remaining inputs.](https://image.slidesharecdn.com/artificialneuralnetwoks2-150705065533-lva1-app6892/85/Artificial-neural-netwoks2-18-320.jpg)

![Slide 19: Adaline worked example, continued: final weight update and verification of net = Wᵀi.](https://image.slidesharecdn.com/artificialneuralnetwoks2-150705065533-lva1-app6892/85/Artificial-neural-netwoks2-19-320.jpg)
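To make the Adaline idea concrete, here is a minimal sketch of the least-mean-squares (delta) rule, W ← W + μ(d − net)i, in which the error is measured on the linear output net rather than on a thresholded output. The toy data set (targets generated by d = 2·x1 − x2), the learning rate `mu = 0.1`, the epoch count, and all function names are assumptions for illustration and are not the values used in the lecture's exercise.

```python
def net_input(weights, inputs):
    """Linear output of the Adaline: the inner product W . i."""
    return sum(w * x for w, x in zip(weights, inputs))


def mean_squared_error(weights, samples):
    """Average squared difference between target d and linear output net."""
    return sum((d - net_input(weights, x)) ** 2 for x, d in samples) / len(samples)


def train_adaline(samples, mu=0.1, epochs=50):
    """Apply the delta rule W <- W + mu * (d - net) * i sample by sample."""
    weights = [0.0] * len(samples[0][0])
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - net_input(weights, inputs)
            weights = [w + mu * error * x for w, x in zip(weights, inputs)]
    return weights


# Hypothetical training set: each target equals 2*x1 - x2.
SAMPLES = [
    ((1.0, 0.0), 2.0),
    ((0.0, 1.0), -1.0),
    ((1.0, 1.0), 1.0),
    ((-1.0, 1.0), -3.0),
]

mse_before = mean_squared_error([0.0, 0.0], SAMPLES)
trained = train_adaline(SAMPLES)
mse_after = mean_squared_error(trained, SAMPLES)

print("MSE before:", mse_before)
print("MSE after:", mse_after)
print("weights:", trained)
```

Because the toy targets are an exact linear function of the inputs, the learned weights should approach (2, −1) and the mean squared error should fall close to zero. On noisy data the rule would instead settle near the least-squares solution.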