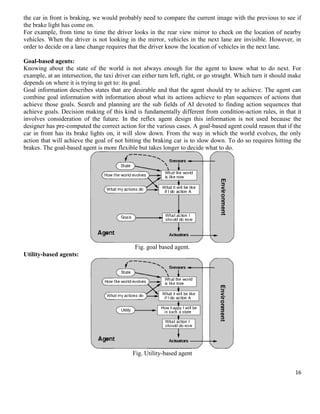

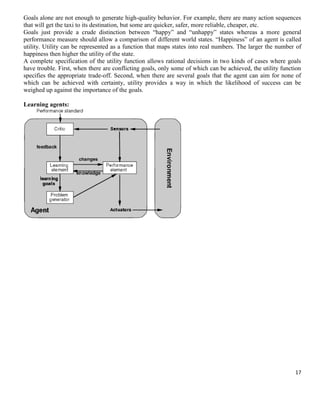

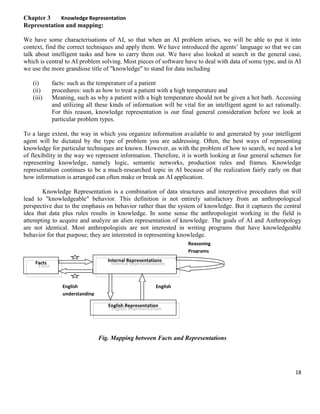

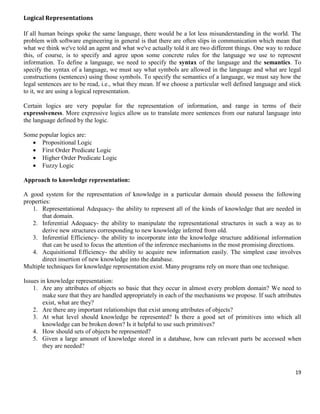

The document provides an overview of artificial intelligence including definitions, types of AI tasks, foundations of AI, history of AI, current capabilities and limitations of AI systems, and techniques for problem solving and planning. It discusses machine learning, natural language processing, expert systems, neural networks, search problems, constraint satisfaction problems, linear and non-linear planning approaches. The key objectives of the course are introduced as understanding common AI concepts and having an idea of current and future capabilities of AI systems.

![Linear planning:

Basic idea: work on one goal until completely solved before moving on to the next goal.

Planning algorithm maintains goal stack.

Implications:

- No interleaving of goal achievement

- Efficient search if goals do not interact (much)

Advantages:

- Reduced search space, since goals are solved one at a time

- Advantageous if goals are (mainly) independent

- Linear planning is sound

Disadvantages:

- Linear planning may produce suboptimal solutions (based on the number of operators in the plan)

- Linear planning is incomplete.

Suboptimal plans:

These result from linearity, goal interactions and poor goal ordering.

Initial state: at(obj1, locA), at(obj2, locA), at(747, locA)

Goals: at(obj1, locB), at(obj2, locB)

Plan: [load(obj1, 747, locA); fly(747, locA, locB); unload(obj1, 747, locB); fly(747, locB, locA); load(obj2, 747, locA); fly(747, locA, locB); unload(obj2, 747, locB)]
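The cost of solving the two goals strictly one after the other can be checked with a small simulation. The sketch below is only an illustration: the `in` fact used while an object is on board, the tuple encoding of actions, and the function names are assumptions that do not appear in the slides. It replays the seven-step linear plan and a five-step plan that interleaves the two goals, and confirms that both reach the goals.

```python
# Facts are tuples such as ("at", "obj1", "locA").
# ASSUMPTION: ("in", obj, plane) marks a loaded object; the slides only show at(...).
INITIAL = {("at", "obj1", "locA"), ("at", "obj2", "locA"), ("at", "747", "locA")}
GOALS = {("at", "obj1", "locB"), ("at", "obj2", "locB")}


def apply_action(state, action):
    """Apply one ground action; raise ValueError if a precondition is missing."""
    name, a, b, c = action
    facts = set(state)
    if name == "load":      # load(obj, plane, loc)
        needed, removed, added = {("at", a, c), ("at", b, c)}, {("at", a, c)}, {("in", a, b)}
    elif name == "unload":  # unload(obj, plane, loc)
        needed, removed, added = {("in", a, b), ("at", b, c)}, {("in", a, b)}, {("at", a, c)}
    elif name == "fly":     # fly(plane, src, dst)
        needed, removed, added = {("at", a, b)}, {("at", a, b)}, {("at", a, c)}
    else:
        raise ValueError(f"unknown operator: {name}")
    if not needed <= facts:
        raise ValueError(f"preconditions not met for {action}")
    return frozenset((facts - removed) | added)


def run(plan, initial):
    """Execute a whole plan and return the final state."""
    state = frozenset(initial)
    for action in plan:
        state = apply_action(state, action)
    return state


# The plan from the slide: one goal is finished before the next is started.
LINEAR_PLAN = [
    ("load", "obj1", "747", "locA"), ("fly", "747", "locA", "locB"),
    ("unload", "obj1", "747", "locB"), ("fly", "747", "locB", "locA"),
    ("load", "obj2", "747", "locA"), ("fly", "747", "locA", "locB"),
    ("unload", "obj2", "747", "locB"),
]

# An interleaved alternative: load both objects before flying once.
INTERLEAVED_PLAN = [
    ("load", "obj1", "747", "locA"), ("load", "obj2", "747", "locA"),
    ("fly", "747", "locA", "locB"),
    ("unload", "obj1", "747", "locB"), ("unload", "obj2", "747", "locB"),
]

for label, plan in (("linear", LINEAR_PLAN), ("interleaved", INTERLEAVED_PLAN)):
    print(label, len(plan), "steps, goals met:", GOALS <= run(plan, INITIAL))
```

The linear plan needs two extra flights because the goal stack forces obj1 to be delivered completely before obj2 is considered.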

Concept of non-linear planning:

Use goal set instead of goal stack. Include in the search space all possible sub goal orderings.

Handles goal interactions by interleaving.

Advantages:

- Non-linear planning is sound.

- Non-linear planning is complete.

- Non-linear planning may be optimal with respect to plan length (depending on search strategy employed)

Disadvantages:

- Larger search space, since all possible goal orderings may have to be considered.

- Somewhat more complex algorithm; more bookkeeping.

Non-linear planning algorithm:

NLP (initial-state, goals)

- State = initial state, plan=[]; goalset = goals, opstack = []

- Repeat until goalset is empty

Choose a goal g from the goalset

If g does not match state, then

Choose an operator o whose add-list matches goal g

Push o on the opstack

7](https://image.slidesharecdn.com/chapter1-2-3ai-140308013009-phpapp02/85/Artificial-intelligence-7-320.jpg)

![

Add the preconditions of o to the goalset

While all preconditions of the operator on top of opstack are met in state

Pop operator o from top of opstack

State = apply(o, state)

Plan = [plan, o]

Means-Ends Analysis

Means-ends analysis centers on detecting differences between the current state and the goal state. Once such a difference is isolated, an operator that can reduce it must be found. That operator may not be applicable to the current state, however, so we set up a subproblem of getting to a state in which it can be applied. This kind of backward chaining, in which operators are selected and subgoals are then set up to establish their preconditions, is known as operator subgoaling.

Like other problem-solving techniques, means-ends analysis relies on a set of rules that can transform one problem state into another. These rules are usually not represented with complete state descriptions on each side. Instead, each rule has a left side, which describes the conditions that must be met for the rule to be applicable, and a right side, which describes those aspects of the problem state that will be changed by applying the rule. A separate data structure called a difference table indexes the rules by the differences that they can be used to reduce.

Algorithm: Means-Ends Analysis

1. Compare CURRENT to GOAL. If there are no differences between them, then return.

2. Otherwise, select the most important difference and reduce it by doing the following until success or failure is signaled:

a) Select a new operator O that is applicable to the current difference. If there are no such operators, then signal failure.

b) Attempt to apply O to CURRENT. Generate descriptions of two states: O-START, a state in which O's preconditions are satisfied, and O-RESULT, the state that would result if O were applied in O-START.

c) If (FIRST-PART ← MEA(CURRENT, O-START)) and (LAST-PART ← MEA(O-RESULT, GOAL)) are successful, then signal success and return the result of concatenating FIRST-PART, O and LAST-PART.
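The recursive structure of the algorithm can be sketched in a few lines. This is a minimal illustration under several assumptions, not a reference implementation: states and goals are sets of facts, a "difference" is a goal fact missing from the current state, the "most important" difference is simply the alphabetically first one, and a depth limit stands in for failure detection. The `Op` type and the small book-fetching domain are hypothetical.

```python
from typing import NamedTuple


class Op(NamedTuple):
    name: str
    pre: frozenset     # left side: conditions required before applying
    add: frozenset     # right side: facts made true
    delete: frozenset  # right side: facts made false


def mea(current, goal, ops, depth=10):
    """Return (plan, final_state), or None if failure is signaled."""
    diffs = goal - current
    if not diffs:                       # step 1: no differences
        return [], current
    if depth == 0:                      # ASSUMPTION: depth cutoff counts as failure
        return None
    difference = sorted(diffs)[0]       # step 2: pick a difference (arbitrary order)
    for op in ops:                      # step 2a: operators that reduce the difference
        if difference not in op.add:
            continue
        first = mea(current, op.pre, ops, depth - 1)       # FIRST-PART: reach O-START
        if first is None:
            continue
        first_plan, o_start = first
        o_result = (o_start - op.delete) | op.add          # O-RESULT
        last = mea(o_result, goal, ops, depth - 1)         # LAST-PART: reach GOAL
        if last is None:
            continue
        last_plan, final_state = last
        return first_plan + [op.name] + last_plan, final_state
    return None                          # no operator worked


# Hypothetical domain: fetch a book from a shelf and return to the desk.
OPS = [
    Op("walk_to_shelf", frozenset(), frozenset({"at_shelf"}), frozenset({"at_desk"})),
    Op("pick_book", frozenset({"at_shelf"}), frozenset({"has_book"}), frozenset()),
    Op("walk_to_desk", frozenset(), frozenset({"at_desk"}), frozenset({"at_shelf"})),
]

result = mea(frozenset({"at_desk"}), frozenset({"at_desk", "has_book"}), OPS)
print(result[0])  # ['walk_to_shelf', 'pick_book', 'walk_to_desk']
```

Scanning the operator list for one whose add-list contains the difference plays the role of the difference table here; a real table would index operators by difference directly.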

Production Rule Systems:

Since search is a very important process in the solution of hard problems for which no more direct techniques are available, it is useful to structure AI programs in a way that makes it easy to describe and perform the search process. Production systems provide such structures. A production system consists of:

· A set of rules, each consisting of a left side that determines the applicability of the rule and a right side that describes the operation to be performed if the rule is applied.

· One or more knowledge bases or databases that contain whatever information is appropriate for the particular task.

· A control strategy that specifies the order in which the rules will be compared to the database and a way of resolving the conflicts that arise when several rules match at once.

· A rule applier.

Characteristics of Control Strategy

· The first requirement of a good control strategy is that it causes motion. Control strategies that do not cause motion will never lead to a solution. One simple way to guarantee motion is to choose at random, on each cycle, from among the applicable rules; such a strategy will eventually lead to a solution.

8](https://image.slidesharecdn.com/chapter1-2-3ai-140308013009-phpapp02/85/Artificial-intelligence-8-320.jpg)
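The four parts of a production system, together with the random control strategy described above, can be sketched on a toy problem. The example is an assumption chosen for illustration and does not come from the slides: two jugs of 4 and 3 gallons, with the task of getting exactly 2 gallons into the larger jug. Each rule is written so that it is applicable only when it changes the state, which is what makes every cycle cause motion.

```python
import random

CAP4, CAP3 = 4, 3  # hypothetical jug capacities


def pour(src, dst, dst_cap):
    """Pour from src into dst until src is empty or dst is full."""
    moved = min(src, dst_cap - dst)
    return src - moved, dst + moved


# Rules: (name, left side = applicability test, right side = state change).
# The database is just the pair (x, y): gallons in the 4- and 3-gallon jugs.
RULES = [
    ("fill 4", lambda x, y: x < CAP4, lambda x, y: (CAP4, y)),
    ("fill 3", lambda x, y: y < CAP3, lambda x, y: (x, CAP3)),
    ("empty 4", lambda x, y: x > 0, lambda x, y: (0, y)),
    ("empty 3", lambda x, y: y > 0, lambda x, y: (x, 0)),
    ("pour 4->3", lambda x, y: x > 0 and y < CAP3, lambda x, y: pour(x, y, CAP3)),
    ("pour 3->4", lambda x, y: y > 0 and x < CAP4, lambda x, y: pour(y, x, CAP4)[::-1]),
]


def run(seed=0, max_steps=100_000):
    """Random control strategy: pick any applicable rule on each cycle."""
    rng = random.Random(seed)
    state, trace = (0, 0), []
    for _ in range(max_steps):
        if state[0] == 2:                       # goal test
            return state, trace
        applicable = [r for r in RULES if r[1](*state)]
        name, _, action = rng.choice(applicable)  # conflict resolution: random
        state = action(*state)                  # rule applier
        trace.append(name)
    return None                                 # gave up within the step budget


outcome = run()
if outcome is not None:
    final_state, steps = outcome
    print("reached", final_state, "after", len(steps), "rule applications")
```

A random strategy reaches the goal here, but usually after many wasted steps, which is why causing motion alone is a weak guarantee.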

![MYCIN-style probability and its application

In artificial intelligence, MYCIN was an early expert system designed to identify bacteria causing severe infections, such as bacteremia and meningitis, and to recommend antibiotics, with the dosage adjusted for the patient's body weight. The name derives from the antibiotics themselves, as many antibiotics have the suffix "-mycin". The MYCIN system was also used for the diagnosis of blood clotting diseases.

MYCIN was developed over five or six years in the early 1970s at Stanford University in Lisp by Edward Shortliffe. MYCIN was never actually used in practice but research indicated that it proposed an acceptable therapy in about 69% of cases, which was better than the performance of infectious disease experts who were judged using the same criteria. MYCIN operated using a fairly simple inference engine, and a knowledge base of ~600 rules. It would query the physician running the program via a long series of simple yes/no or textual questions. At the end, it provided a list of possible culprit bacteria ranked from high to low based on the probability of each diagnosis, its confidence in each diagnosis' probability, the reasoning behind each diagnosis, and its recommended course of drug treatment.

MYCIN was based on certainty factors rather than probabilities. These certainty factors (CF) lie in the range [-1, +1], where -1 means certainly false and +1 means certainly true. The system was based on rules of the form:

IF: the patient has signs and symptoms s1 s2 … sn, and certain background conditions t1 t2 … tm hold

THEN: conclude that the patient had disease di with certainty CF

The idea was to use production rules of this kind in an attempt to approximate the calculation of the conditional probabilities p(di | s1 s2 … sn), and provide a scheme for accumulating evidence that approximated the reasoning process of an expert.
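To make the idea of accumulating evidence concrete, the sketch below combines the certainty factors that two rules assign to the same conclusion. The slides do not give a formula, so this uses the combination rule commonly cited for MYCIN-style systems as an assumption; the function name `combine_cf` is hypothetical.

```python
def combine_cf(cf1: float, cf2: float) -> float:
    """Combine two certainty factors for the same hypothesis.

    ASSUMPTION: the commonly cited MYCIN-style rule, not taken from the slides.
    """
    for cf in (cf1, cf2):
        if not -1.0 <= cf <= 1.0:
            raise ValueError("certainty factors must lie in [-1, +1]")
    if cf1 >= 0 and cf2 >= 0:
        # Two supporting pieces of evidence reinforce each other.
        return cf1 + cf2 * (1 - cf1)
    if cf1 <= 0 and cf2 <= 0:
        # Two opposing pieces of evidence reinforce the disbelief.
        return cf1 + cf2 * (1 + cf1)
    # Mixed evidence: the weaker factor partly cancels the stronger one.
    denominator = 1 - min(abs(cf1), abs(cf2))
    if denominator == 0:
        raise ValueError("cannot combine certain support with certain denial")
    return (cf1 + cf2) / denominator


print(round(combine_cf(0.6, 0.5), 3))    # two supporting rules
print(round(combine_cf(0.8, -0.4), 3))   # support weakened by a contrary rule
```

Under this rule the combined value never leaves [-1, +1], and agreeing evidence always moves the result further from zero than either factor alone.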

Practical use / Application:

MYCIN was never actually used in practice. This wasn't because of any weakness in its performance. As mentioned, in tests it outperformed members of the Stanford medical school faculty. Some observers raised ethical and legal issues related to the use of computers in medicine: if a program gives the wrong diagnosis or recommends the wrong therapy, who should be held responsible? However, the greatest problem, and the reason that MYCIN was not used in routine practice, was the state of technologies for system integration, especially at the time it was developed. MYCIN was a stand-alone system that required a user to enter all relevant information about a patient by typing in response to questions that MYCIN would pose.

11](https://image.slidesharecdn.com/chapter1-2-3ai-140308013009-phpapp02/85/Artificial-intelligence-11-320.jpg)

![Causal rules:

Causal rules reflect the assumed direction of causality in the world: some hidden property of the world causes certain percepts to be generated. For example, a pit causes all adjacent squares to be breezy:

∀r Pit(r) => [∀s Adjacent(r, s) => Breezy(s)]

And if all squares adjacent to a given square are pitless, the square will not be breezy:

∀s [∀r Adjacent(r, s) => ¬Pit(r)] => ¬Breezy(s)

With some work, it is possible to show that these two sentences together are logically equivalent to the biconditional sentence. The biconditional itself can also be thought of as causal, because it states how the truth value of Breezy is generated from the world state.
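The relationship between the two causal rules and the biconditional can be checked on a small grid. The sketch below is an illustration under assumptions: a 4x4 board, squares as coordinate pairs, and hypothetical pit positions. It generates the breezy squares from the pits using the biconditional reading, then verifies that both causal rules hold and that altering the breezy set breaks one of them.

```python
from itertools import product

SIZE = 4  # ASSUMPTION: a 4x4 grid, chosen only for illustration
SQUARES = list(product(range(SIZE), repeat=2))


def adjacent(r, s):
    """Two squares are adjacent if they share an edge."""
    return abs(r[0] - s[0]) + abs(r[1] - s[1]) == 1


def breezy_squares(pits):
    """Biconditional reading: s is breezy iff some adjacent square has a pit."""
    return {s for s in SQUARES if any(adjacent(r, s) and r in pits for r in SQUARES)}


def causal_rules_hold(pits, breezy):
    # Rule 1: a pit makes every adjacent square breezy.
    rule1 = all(s in breezy for r in pits for s in SQUARES if adjacent(r, s))
    # Rule 2: a square with no adjacent pit is not breezy.
    rule2 = all(
        s not in breezy
        for s in SQUARES
        if all(r not in pits for r in SQUARES if adjacent(r, s))
    )
    return rule1 and rule2


pits = {(2, 0), (2, 2)}           # hypothetical pit locations
breezy = breezy_squares(pits)
print(causal_rules_hold(pits, breezy))             # both rules satisfied
print(causal_rules_hold(pits, breezy - {(1, 0)}))  # rule 1 violated
print(causal_rules_hold(pits, breezy | {(0, 0)}))  # rule 2 violated
```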

Horn clauses:

In computational logic, a Horn clause is a clause with at most one positive literal. Horn clauses are named after the logician Alfred Horn, who investigated the mathematical properties of similar sentences in the non-clausal form of first-order logic. Horn clauses play a basic role in logic programming and are important for constructive logic.

A Horn clause with exactly one positive literal is a definite clause. A definite clause with no negative literals is also called a fact. The following is a propositional example of a definite Horn clause:

¬p ∨ ¬q ∨ … ∨ ¬t ∨ u

Such a formula can also be written equivalently in the form of an implication:

(p ∧ q ∧ … ∧ t) => u

In the non-propositional case, all variables in a clause are implicitly universally quantified with scope the entire clause. Thus, for example:

¬human(X) ∨ mortal(X)

stands for:

∀X (¬human(X) ∨ mortal(X))
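The equivalence between a definite clause and its implication form can be confirmed by brute force. As a small illustration, the sketch below takes the two-premise clause ¬p ∨ ¬q ∨ u and the implication (p ∧ q) => u and compares them on all eight truth assignments; the function names are hypothetical.

```python
from itertools import product


def clause(p, q, u):
    """Definite Horn clause: exactly one positive literal (u)."""
    return (not p) or (not q) or u


def implication(p, q, u):
    """Implication form: false only when both premises hold and u fails."""
    return u if (p and q) else True


assignments = list(product([False, True], repeat=3))
equivalent = all(clause(*v) == implication(*v) for v in assignments)
print(equivalent)
```

The only assignment that falsifies either form is p and q true with u false, which is why the two notations can be used interchangeably.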

Frames:

A frame represents an entity as a set of slots (attributes) and associated values.

Each slot may have constraints that describe legal values that the slot can take.

A frame can represent a specific entity, or a general concept.

Frames are implicitly associated with one another because the value of a slot can be another frame.

29](https://image.slidesharecdn.com/chapter1-2-3ai-140308013009-phpapp02/85/Artificial-intelligence-29-320.jpg)
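A frame as described above can be sketched as a small class with slots, per-slot constraints, and slot values that may themselves be frames. Everything named here is hypothetical, and the `parent` link that lets a specific entity fall back on a general concept is an added assumption rather than something the slides state.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, Optional


@dataclass
class Frame:
    name: str
    parent: Optional["Frame"] = None   # ASSUMPTION: link from entity to general concept
    slots: Dict[str, Any] = field(default_factory=dict)
    constraints: Dict[str, Callable[[Any], bool]] = field(default_factory=dict)

    def _constraint(self, slot):
        """Find the nearest constraint for a slot, searching up the parents."""
        frame = self
        while frame is not None:
            if slot in frame.constraints:
                return frame.constraints[slot]
            frame = frame.parent
        return None

    def set(self, slot, value):
        """Store a value after checking the slot's constraint, if any."""
        check = self._constraint(slot)
        if check is not None and not check(value):
            raise ValueError(f"illegal value {value!r} for slot {slot!r}")
        self.slots[slot] = value

    def get(self, slot):
        """Read a slot, falling back on the parent frame when it is unset."""
        if slot in self.slots:
            return self.slots[slot]
        if self.parent is not None:
            return self.parent.get(slot)
        raise KeyError(slot)


# General concept with a constraint and a default value.
person = Frame("Person", constraints={"age": lambda v: isinstance(v, int) and 0 <= v <= 150})
person.set("legs", 2)

# Specific entities; the employer slot holds another frame.
acme = Frame("Acme")
acme.set("city", "Paris")
alice = Frame("Alice", parent=person)
alice.set("age", 30)
alice.set("employer", acme)

print(alice.get("legs"), alice.get("employer").get("city"))
```

Because the employer slot stores a frame, following it from one frame to the next is how the implicit associations between frames are traversed.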