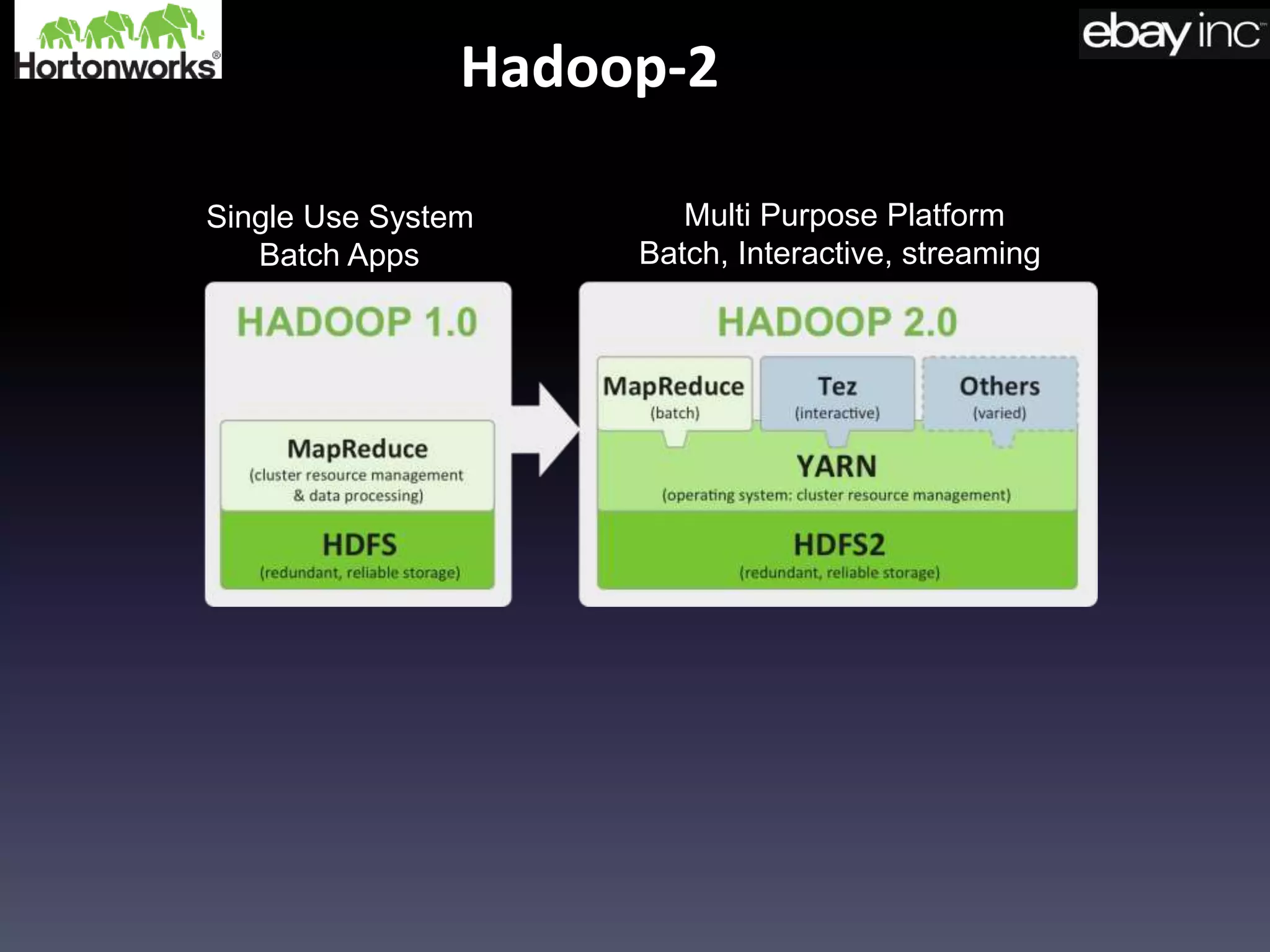

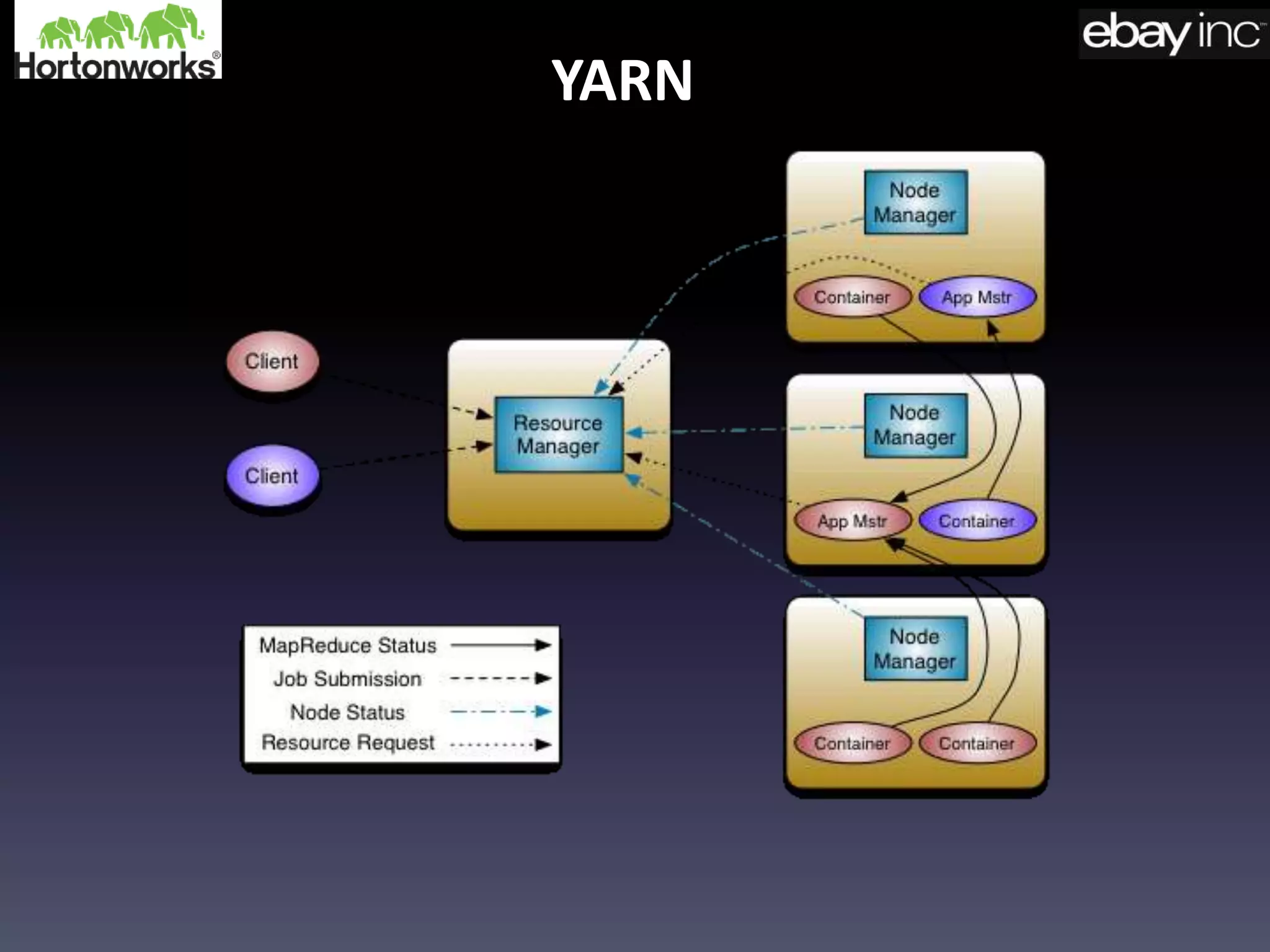

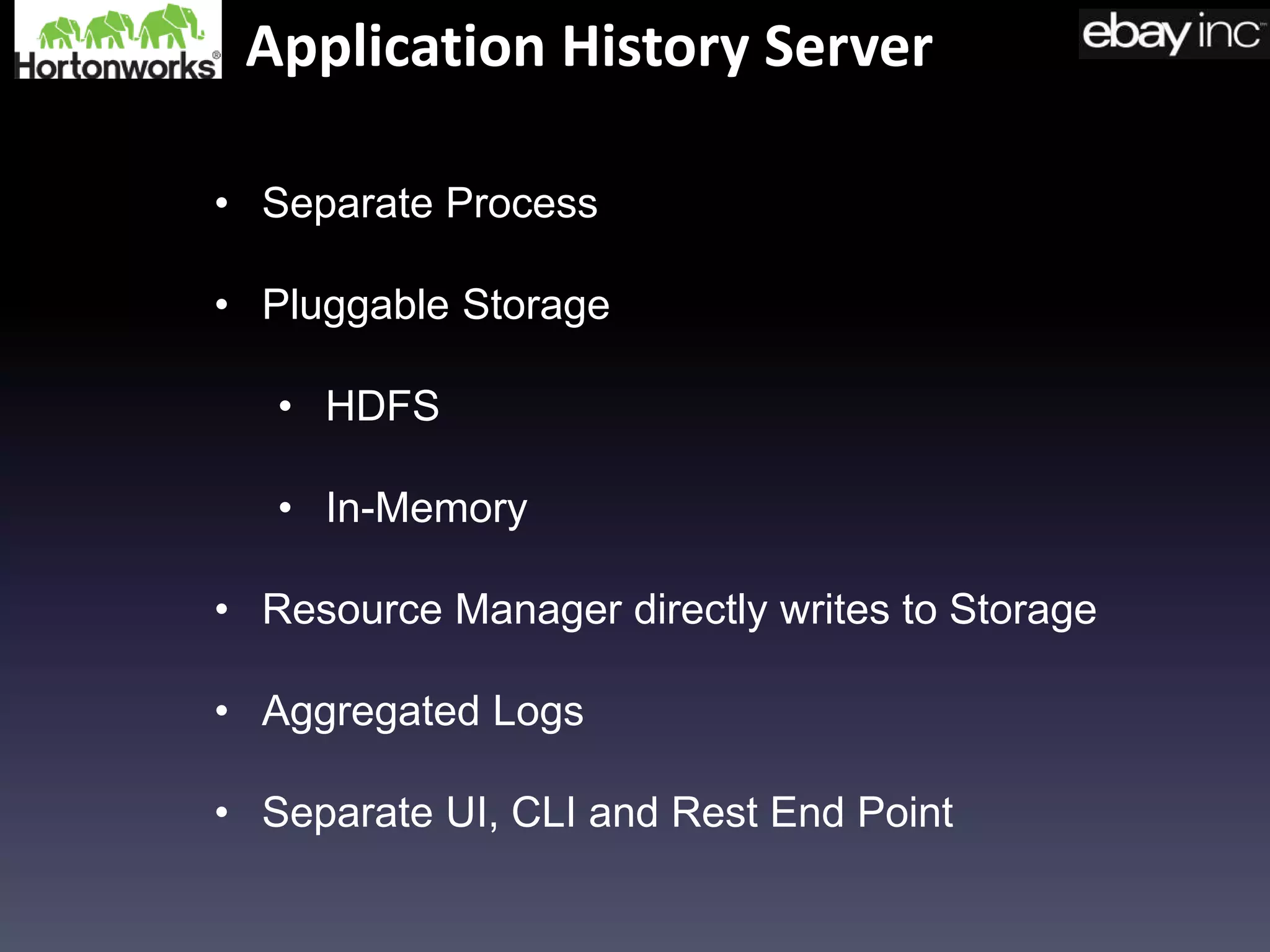

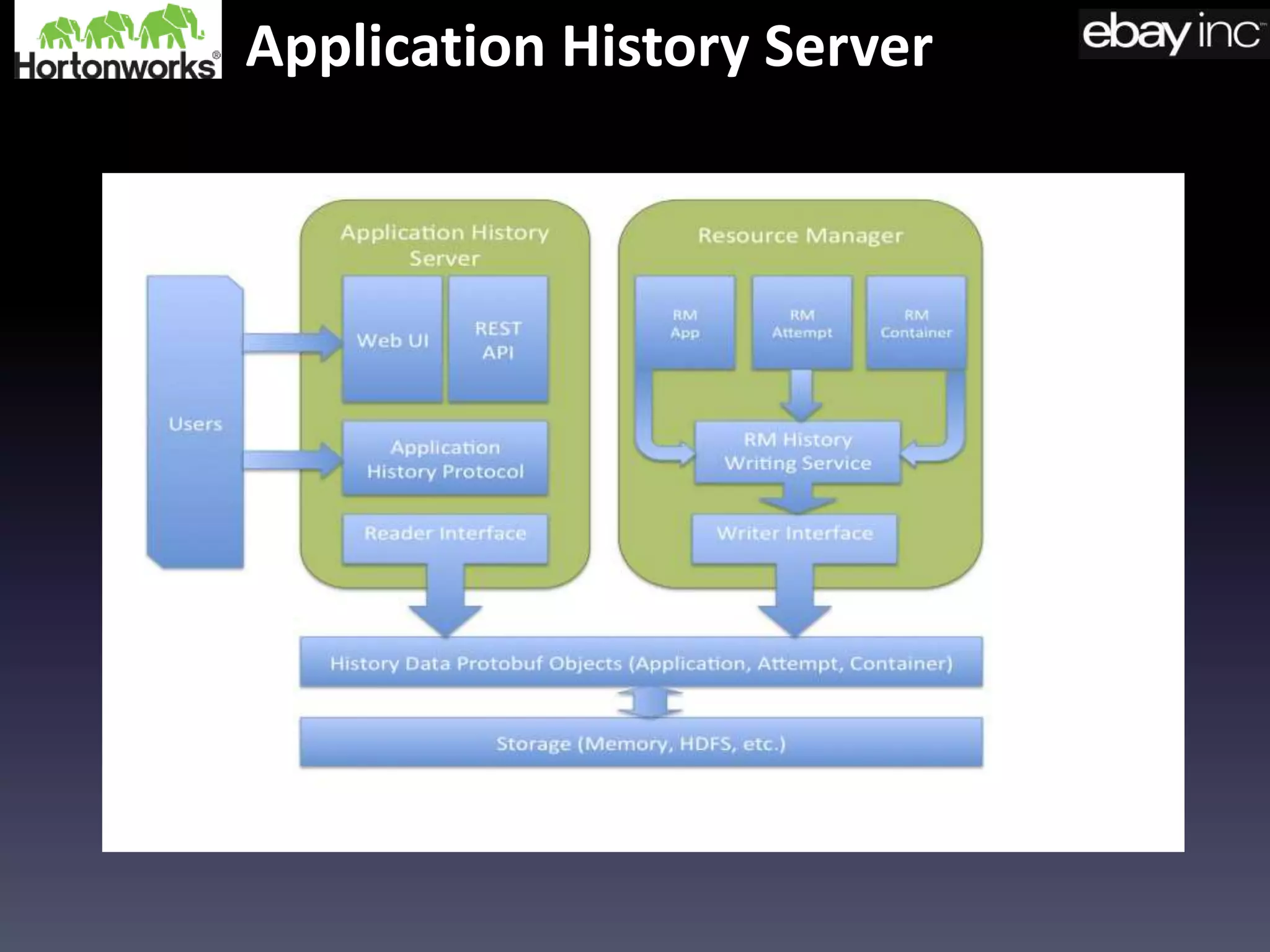

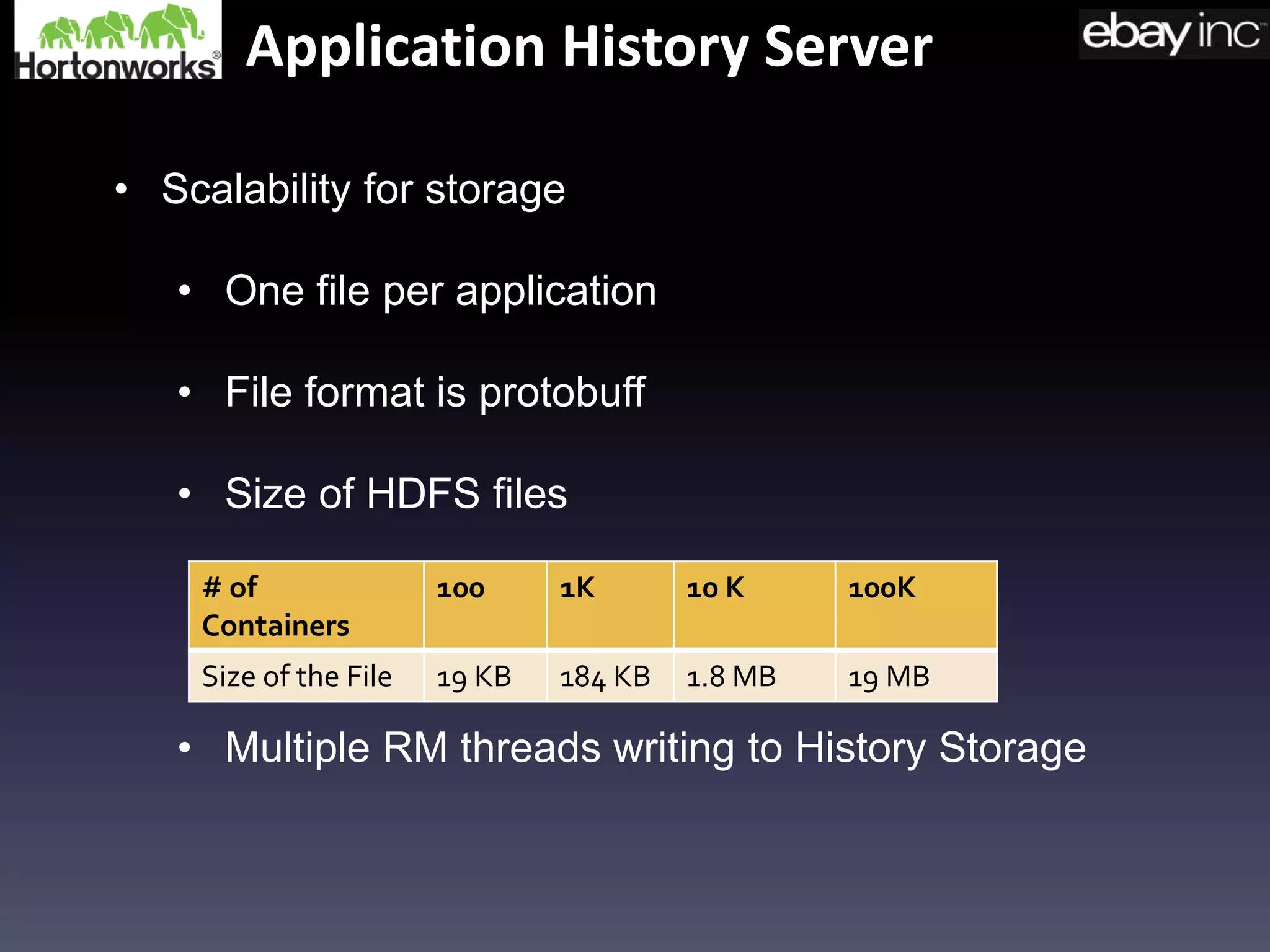

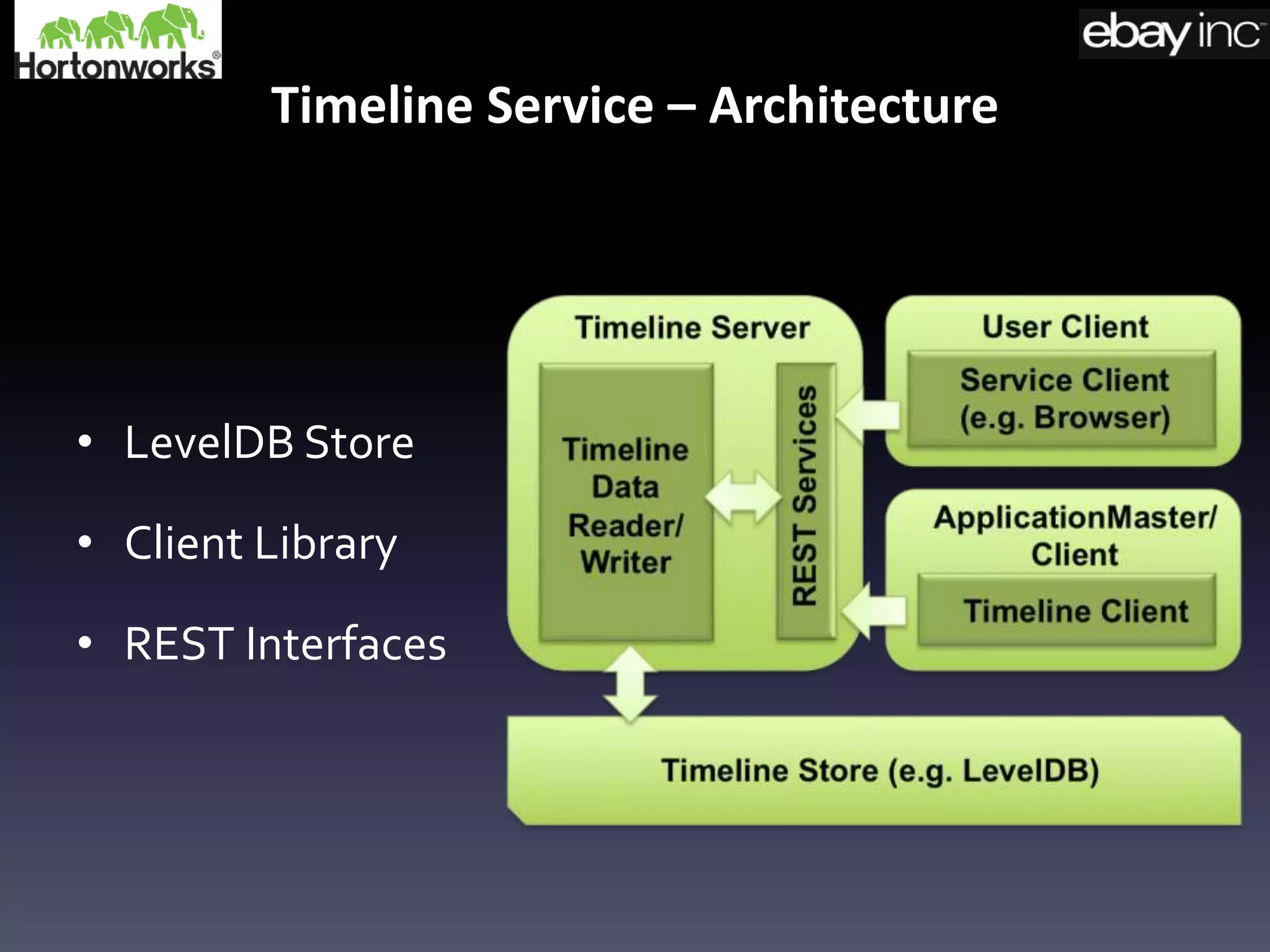

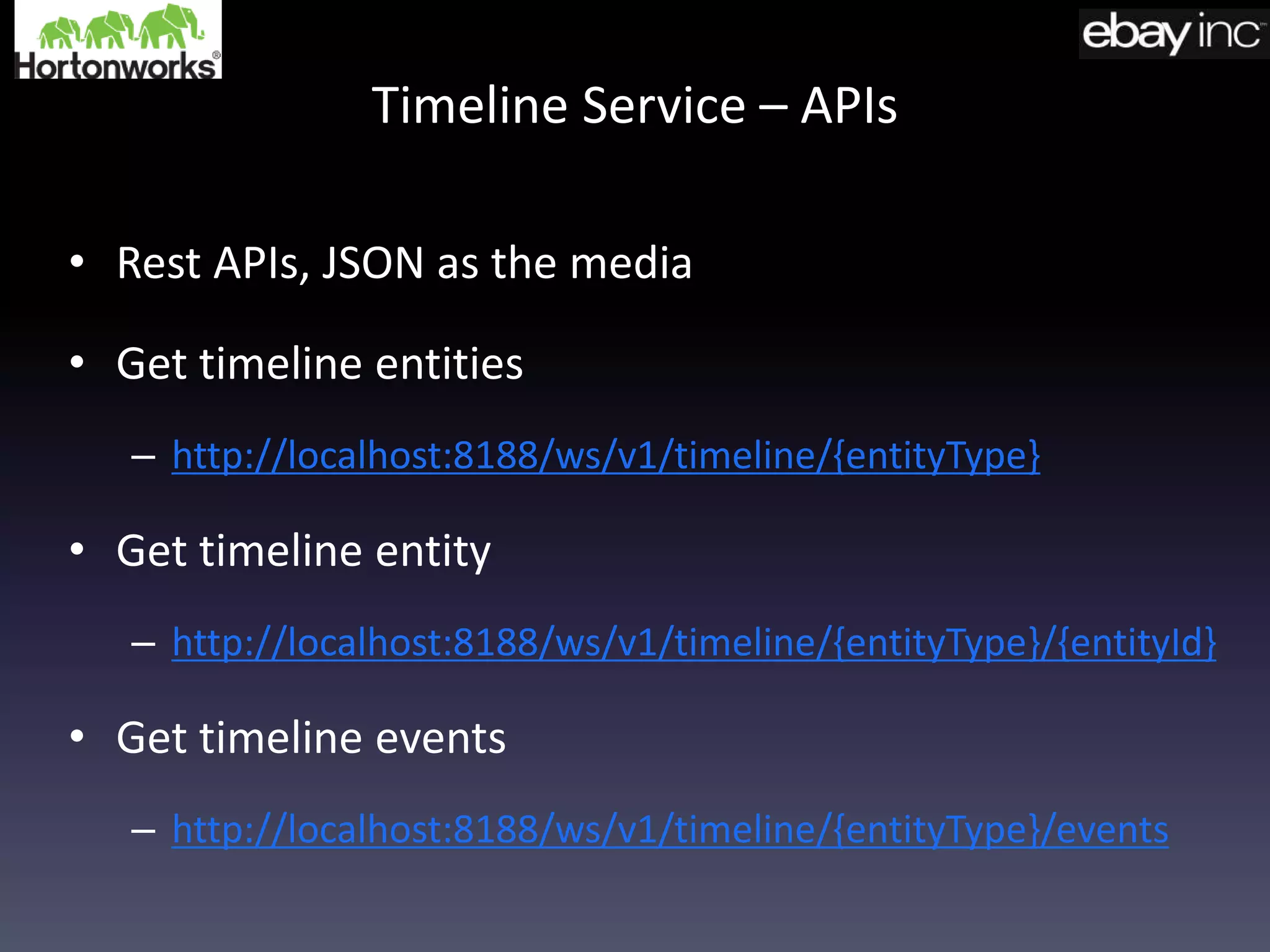

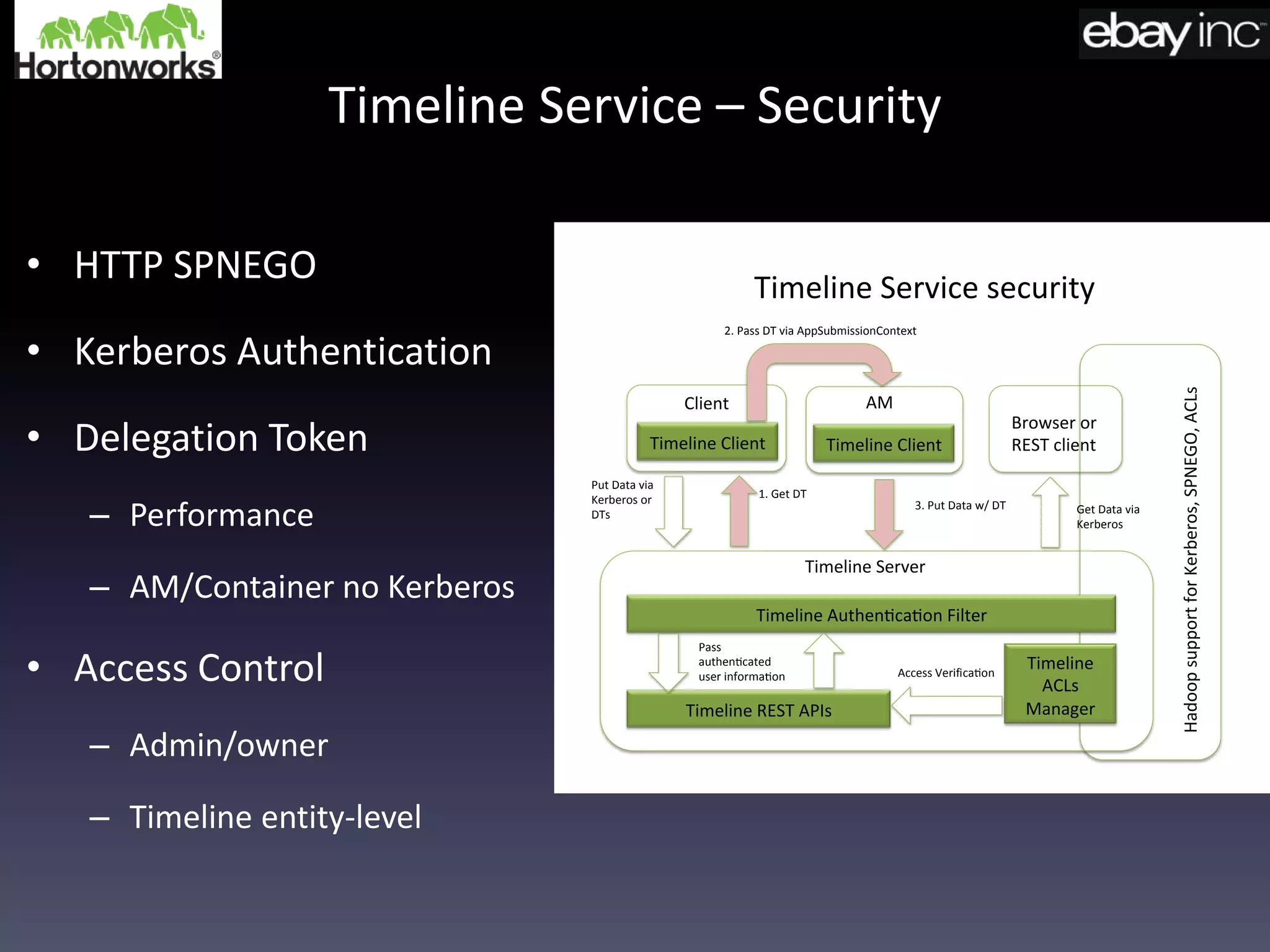

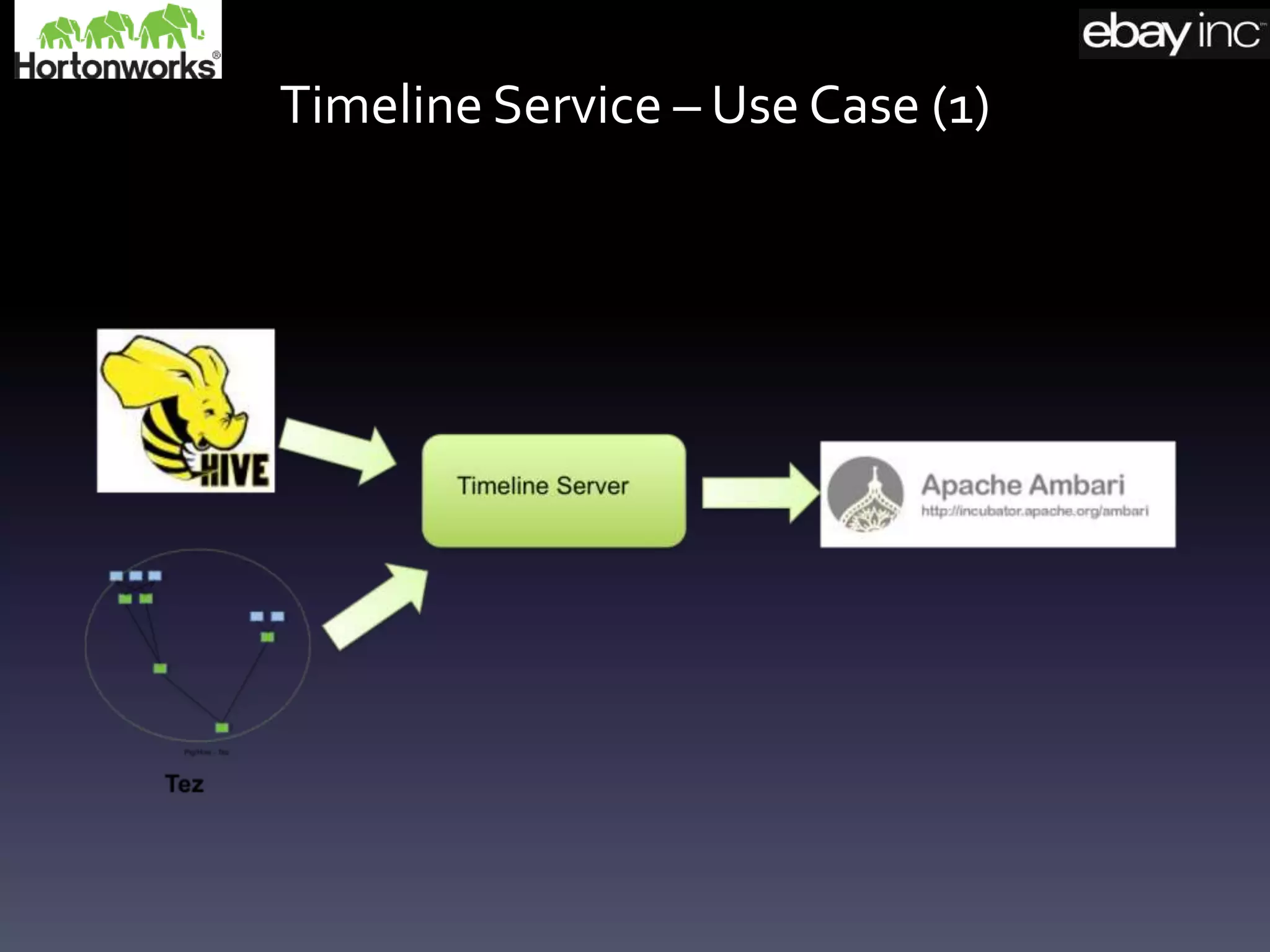

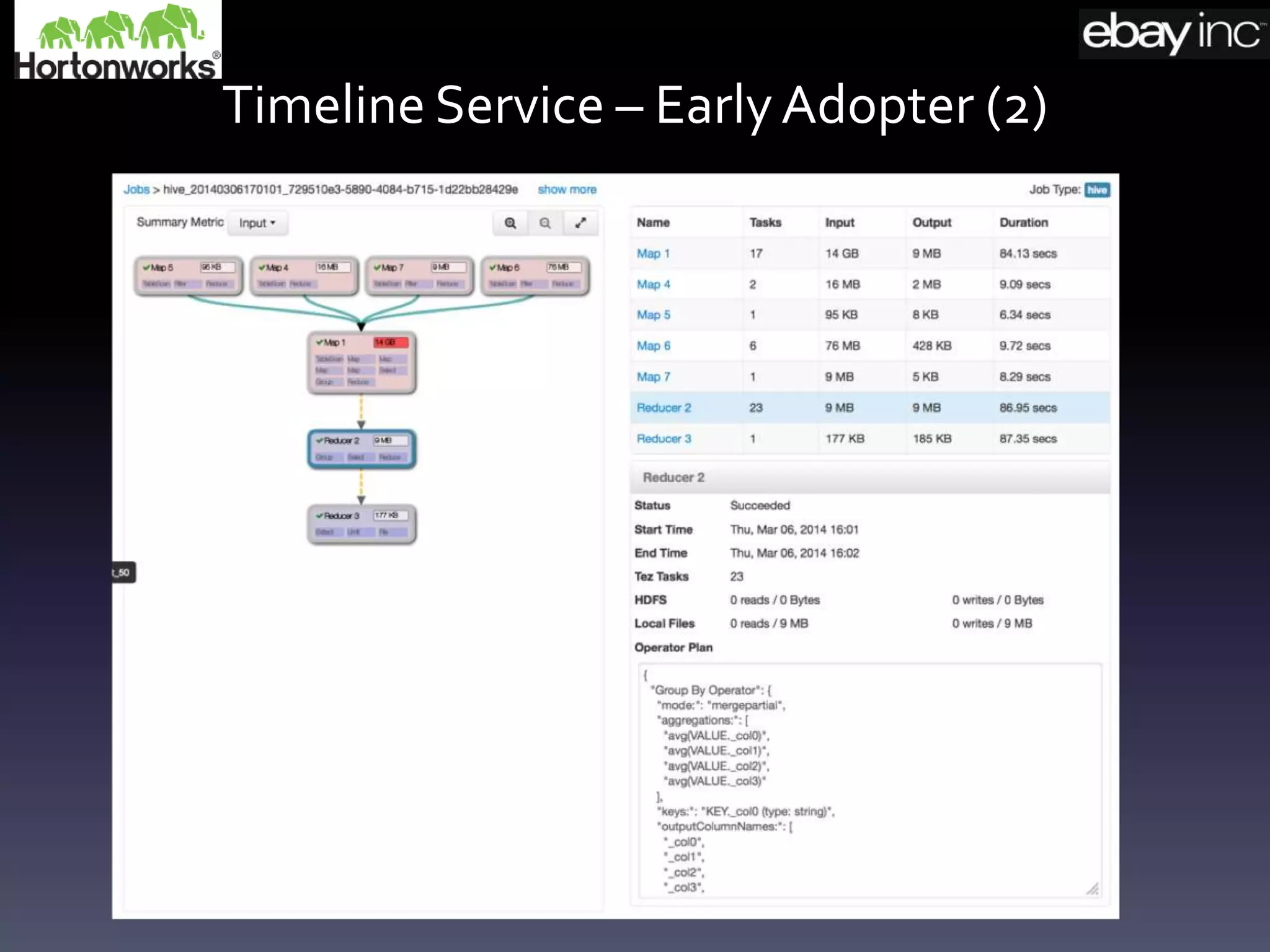

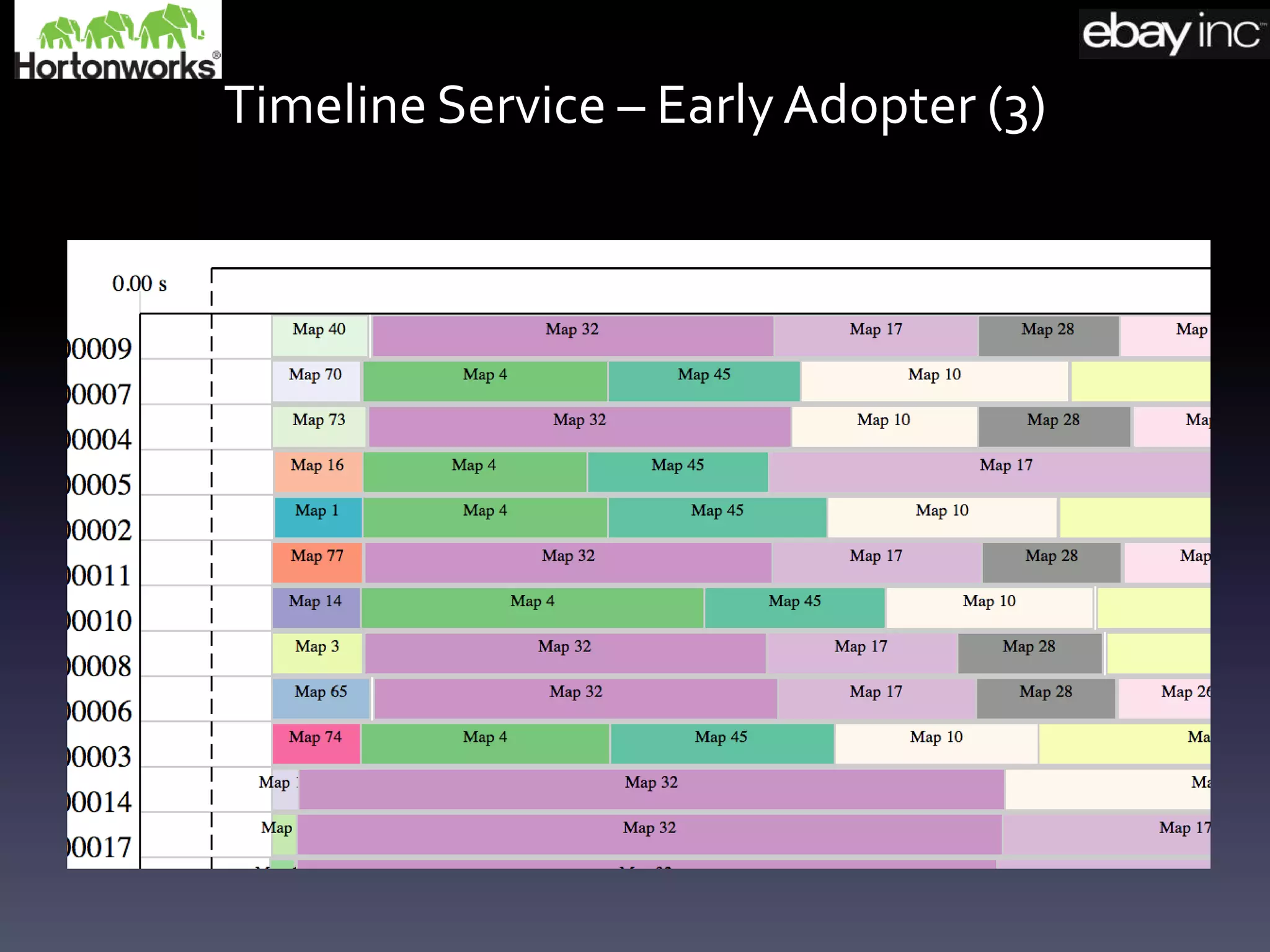

The presentation discusses the need for a new application history server and timeline service for Hadoop YARN, highlighting the limitations of the existing job history server, particularly its poor fit for the diverse applications that run on YARN. It outlines the architecture and features of the proposed application history server, including pluggable storage options and improved scalability, and describes the functionality of the timeline service. It concludes with plans for future work and the need for storage solutions that can handle data at larger scales.