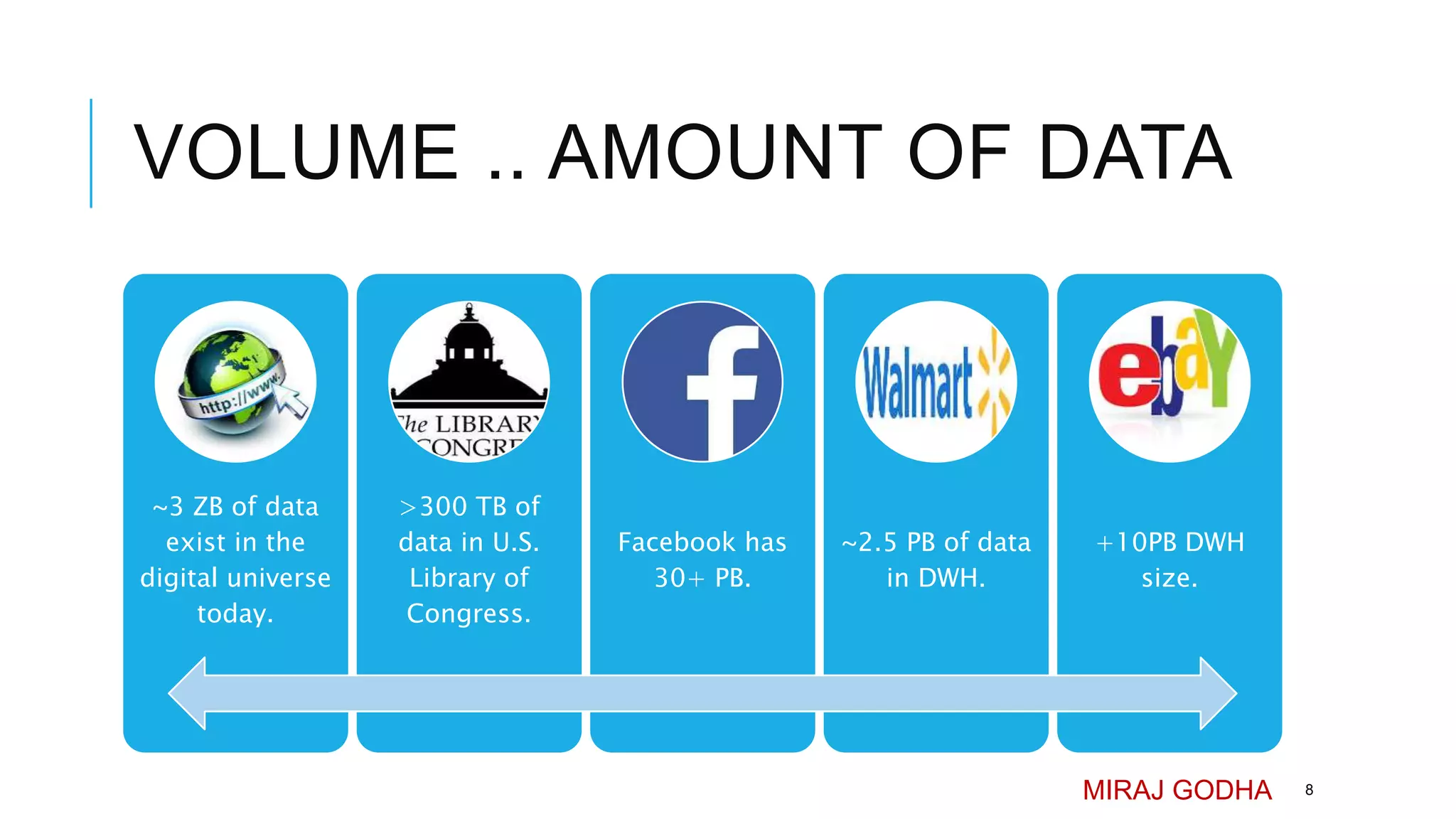

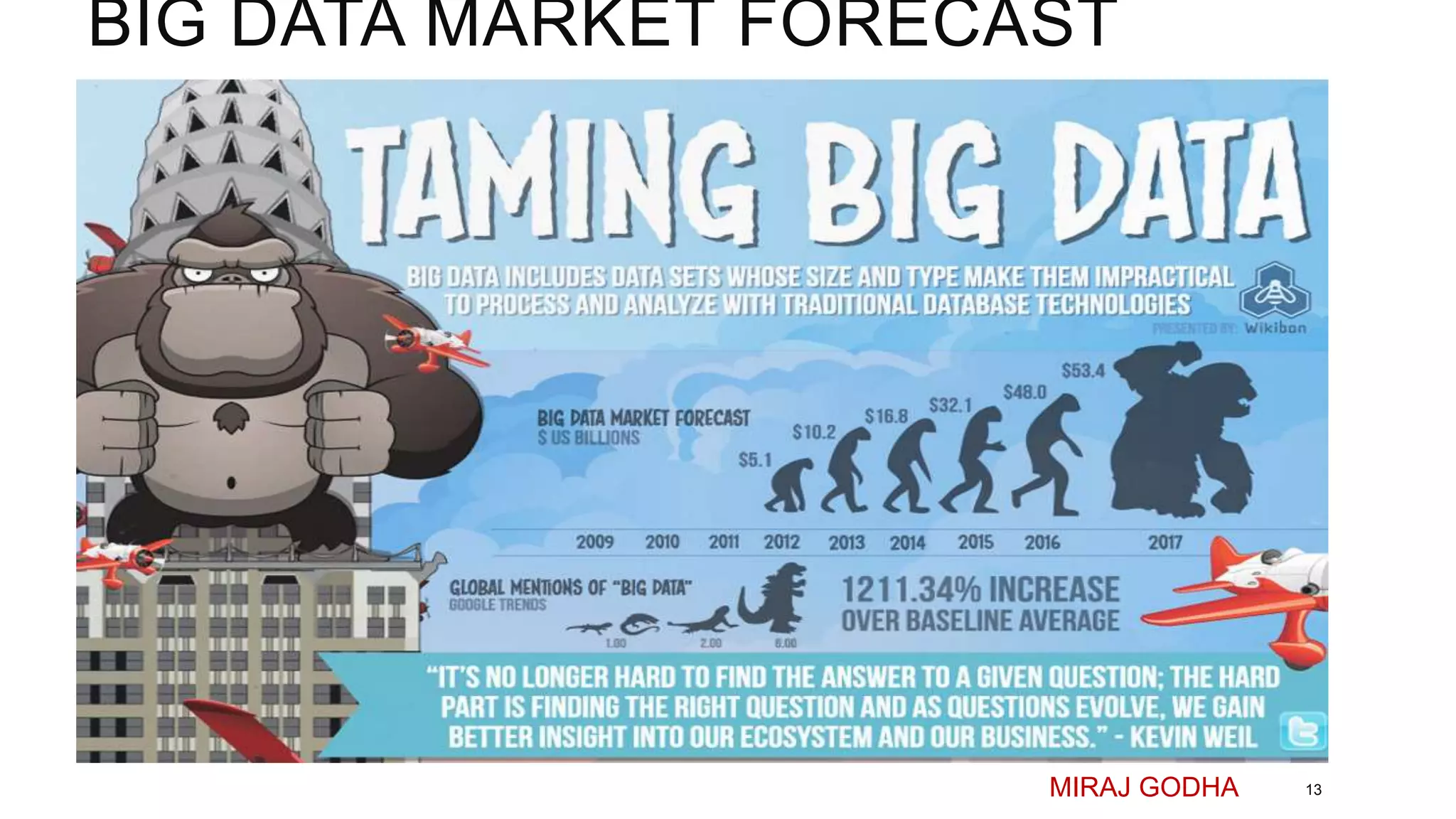

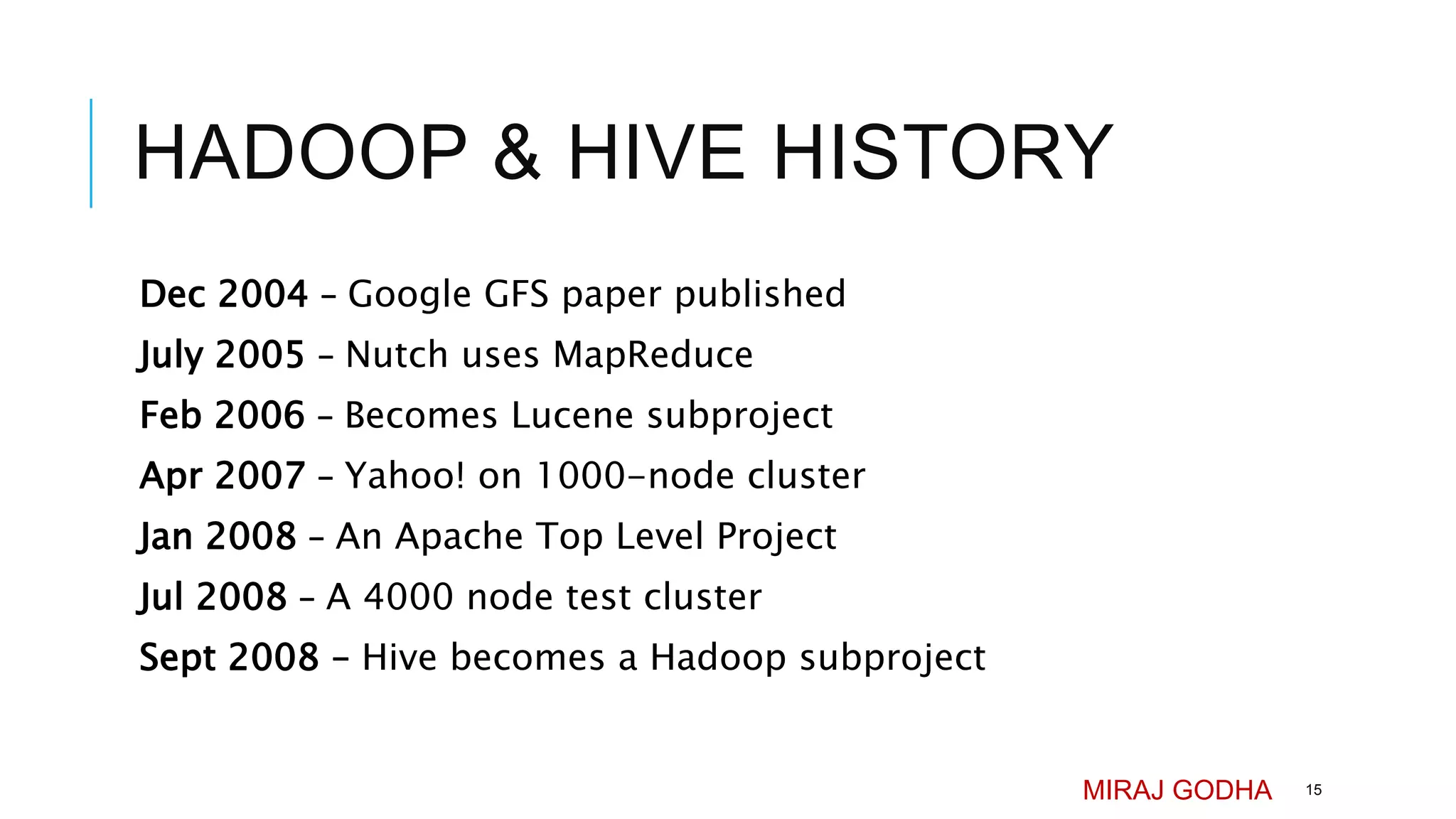

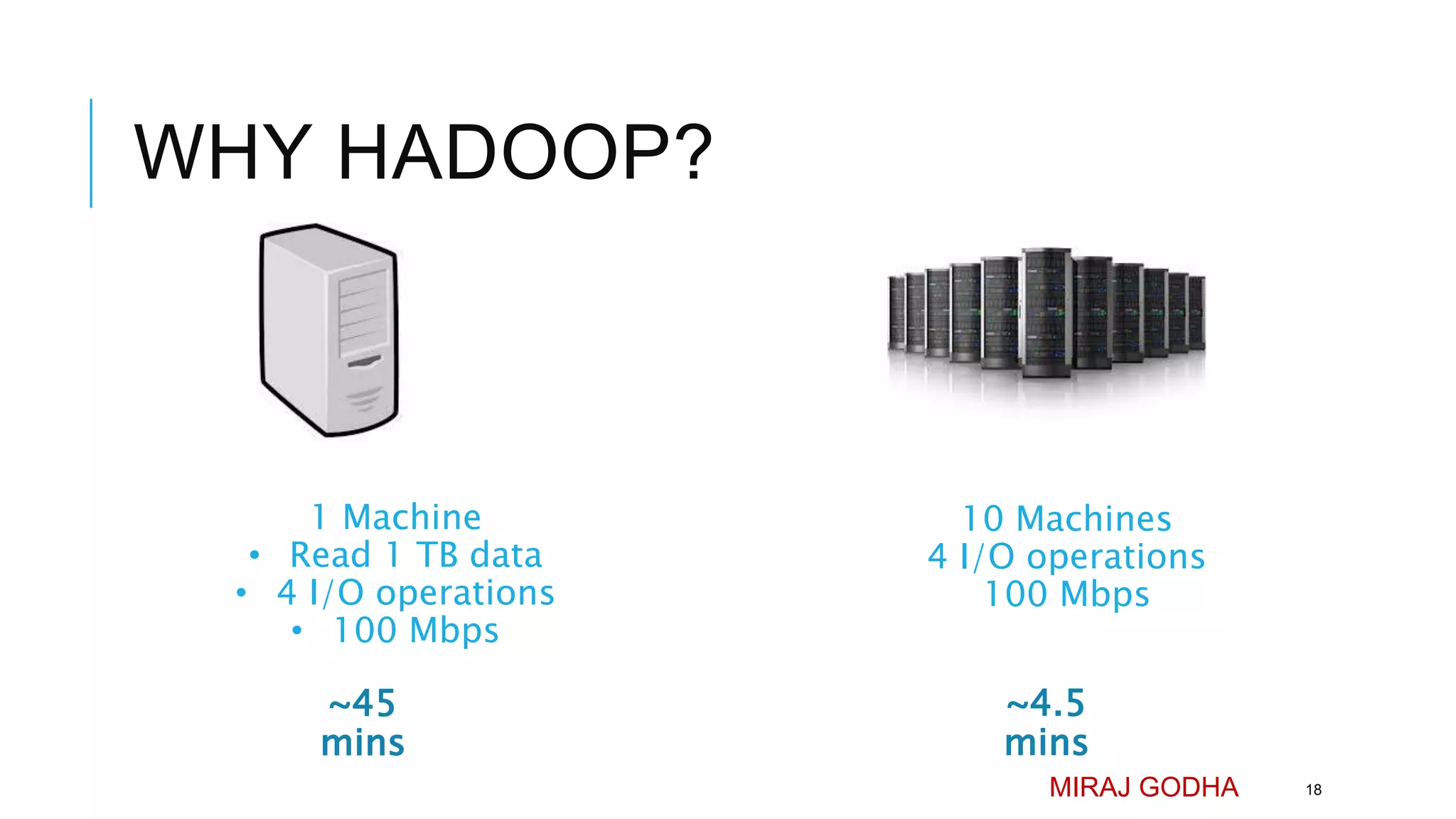

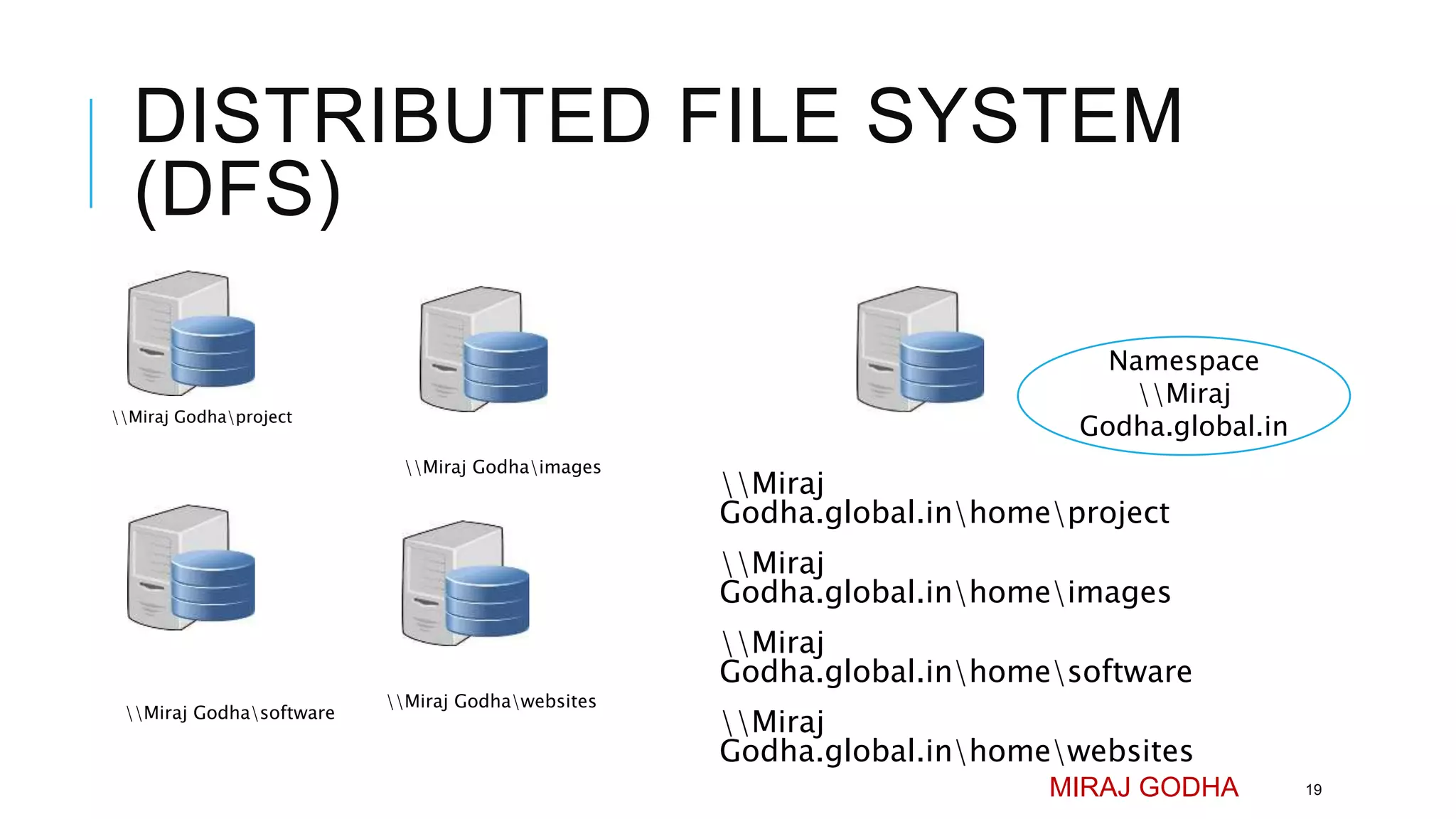

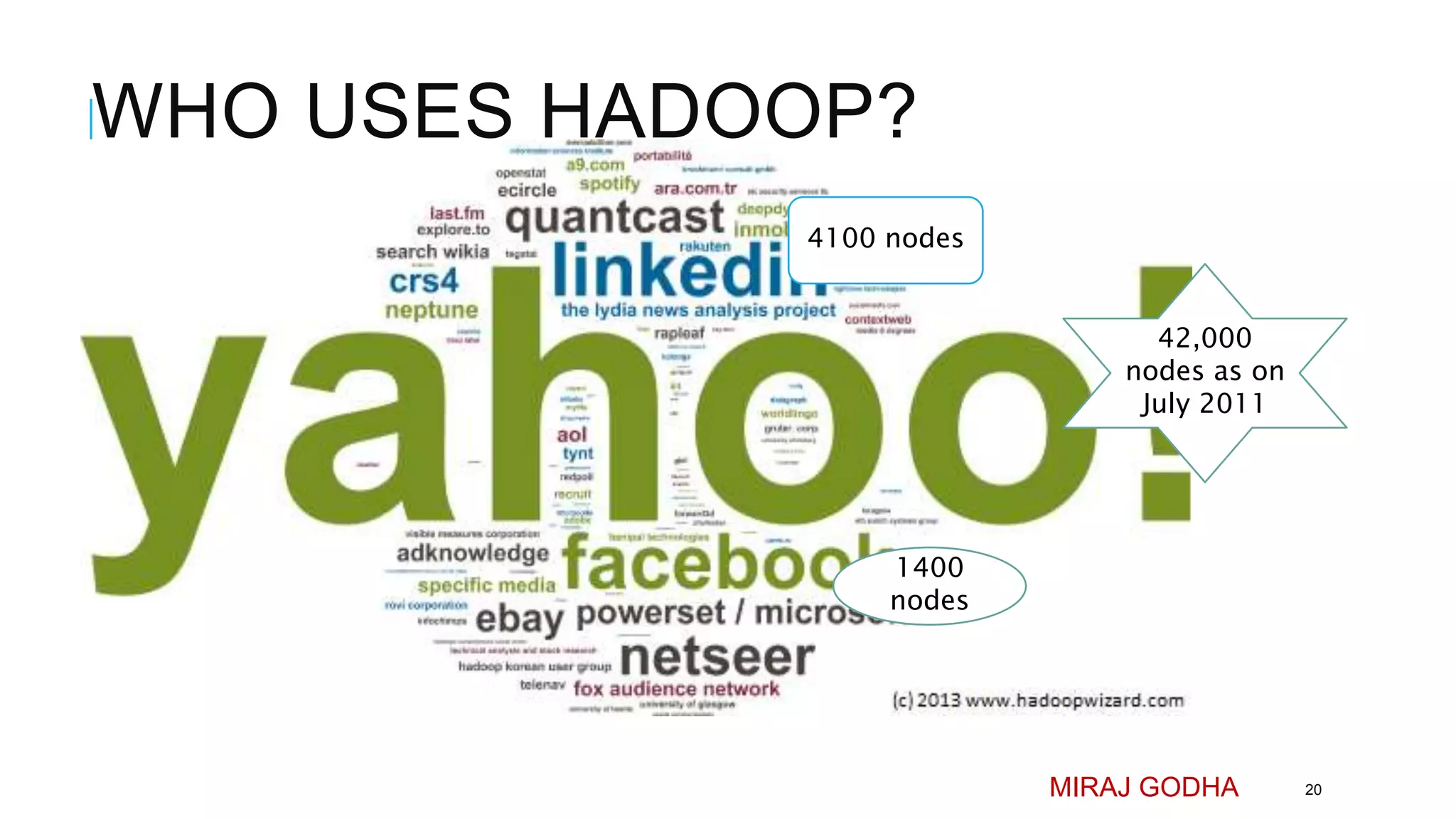

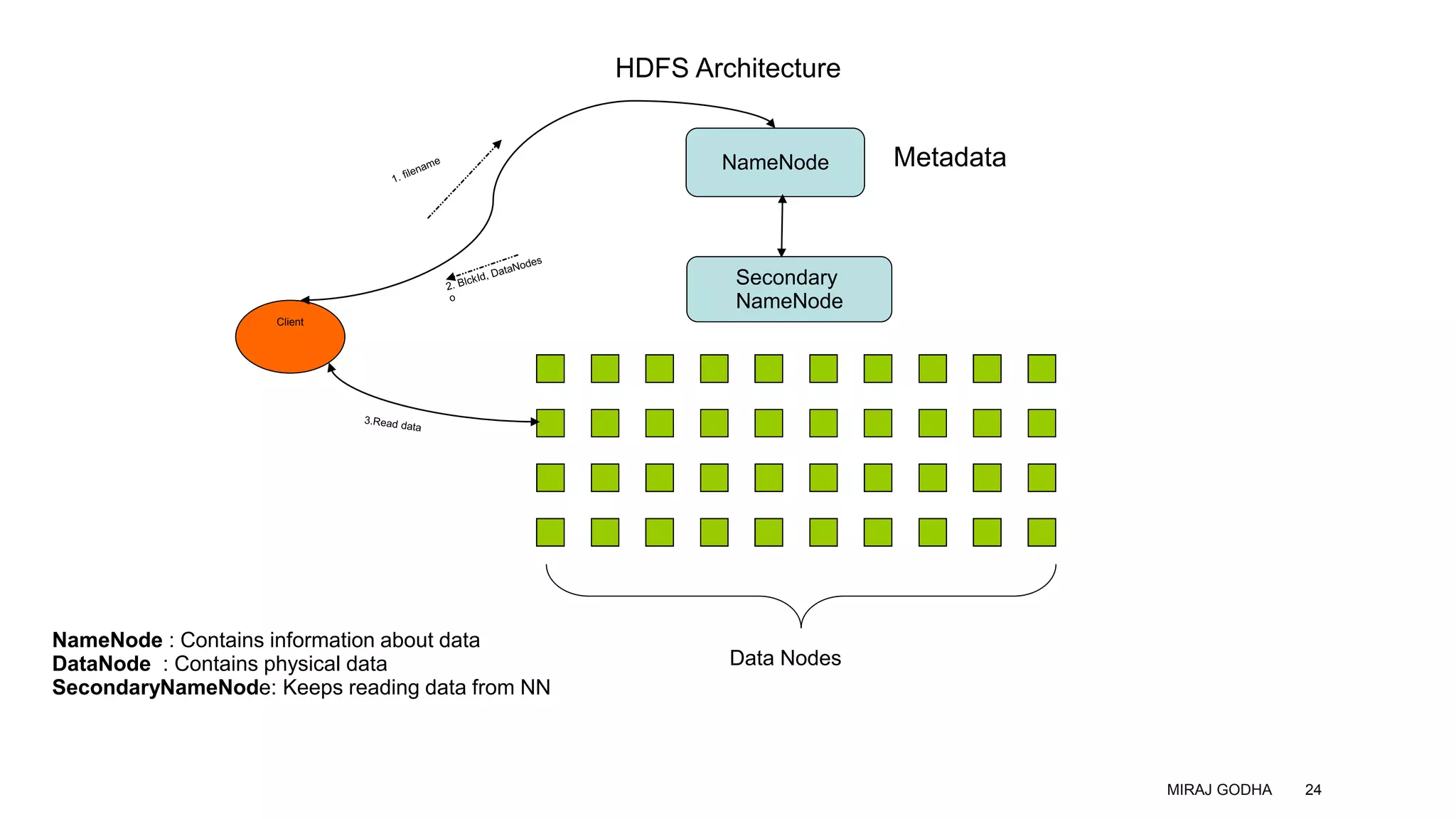

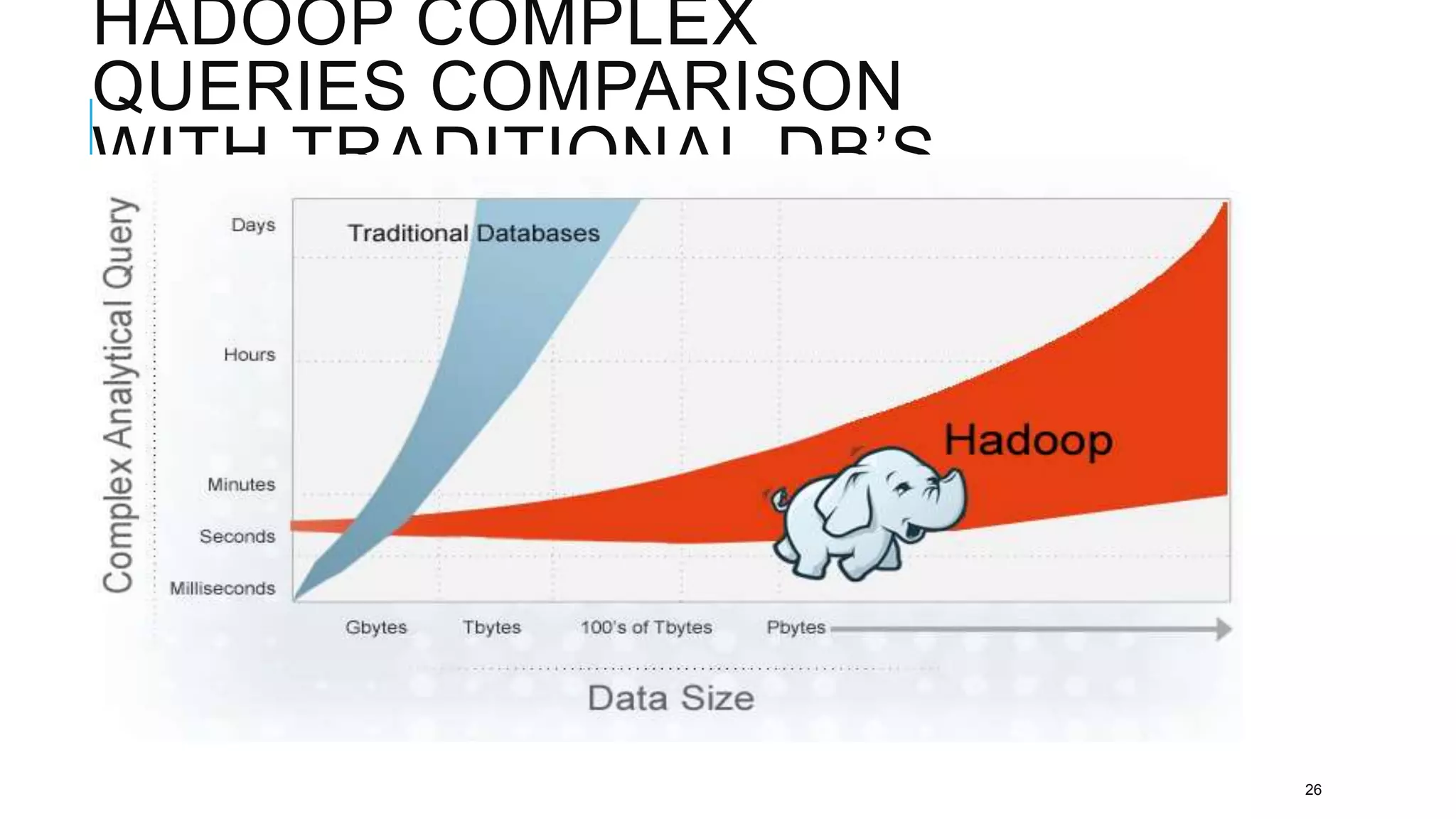

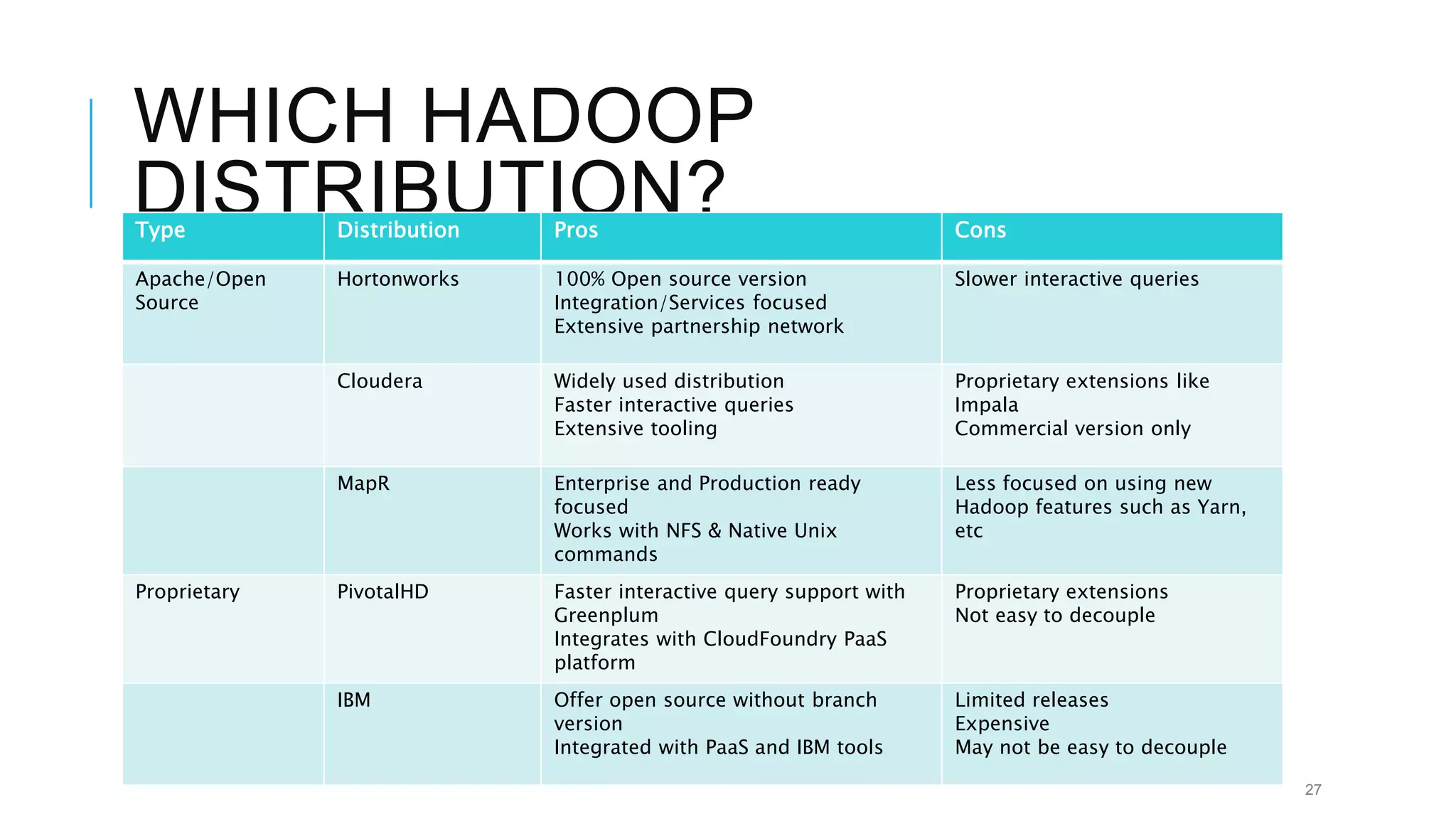

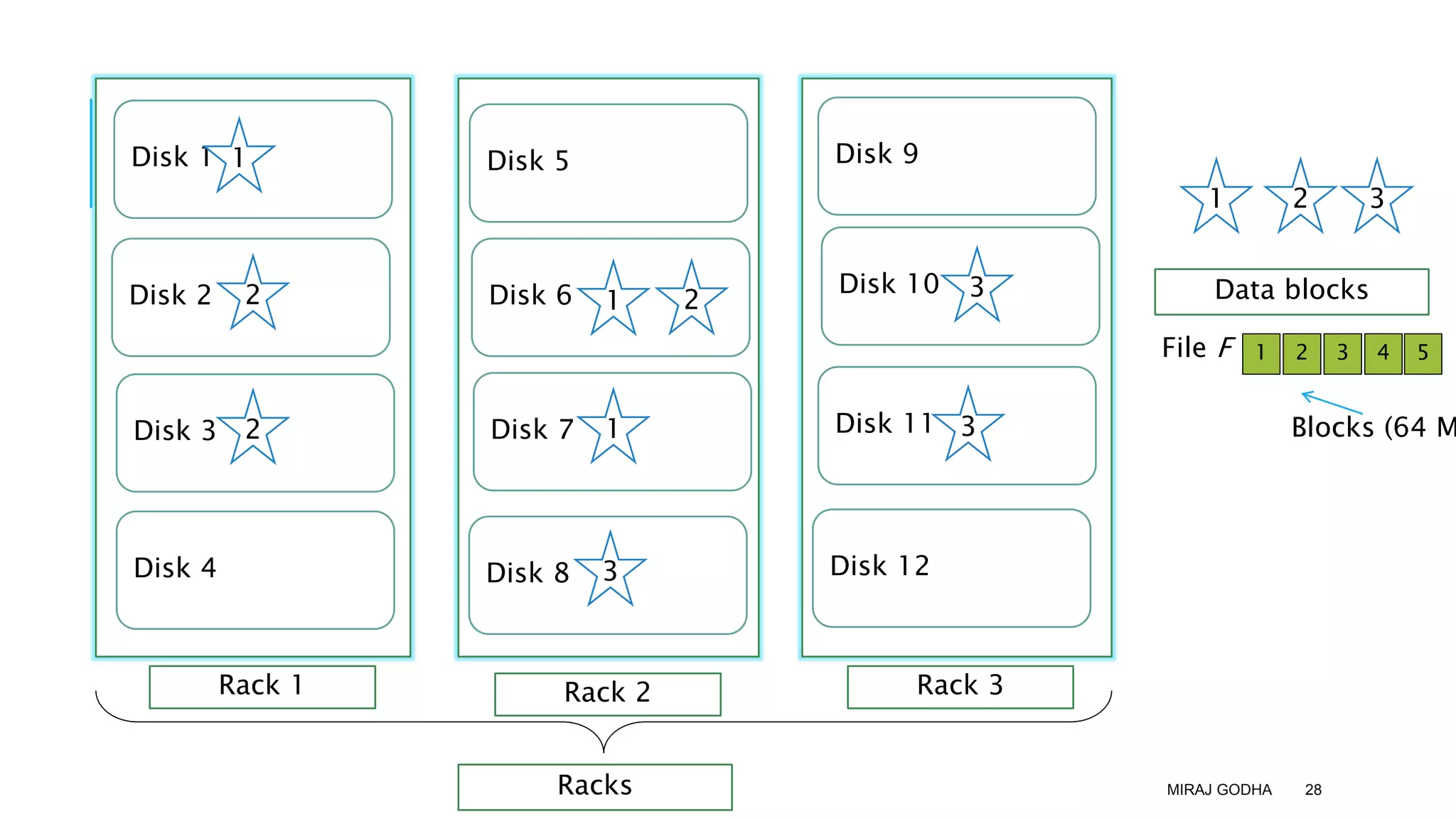

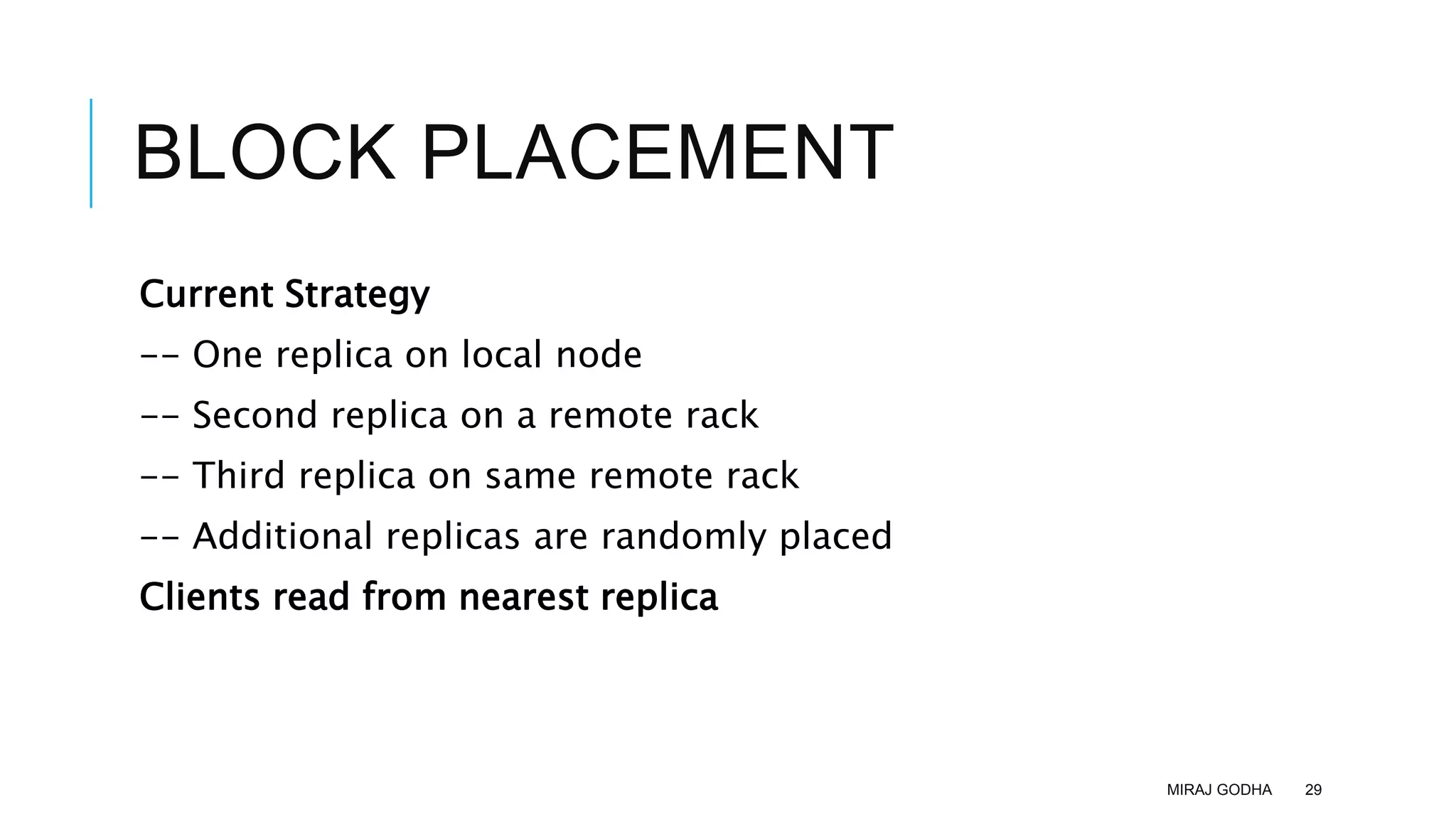

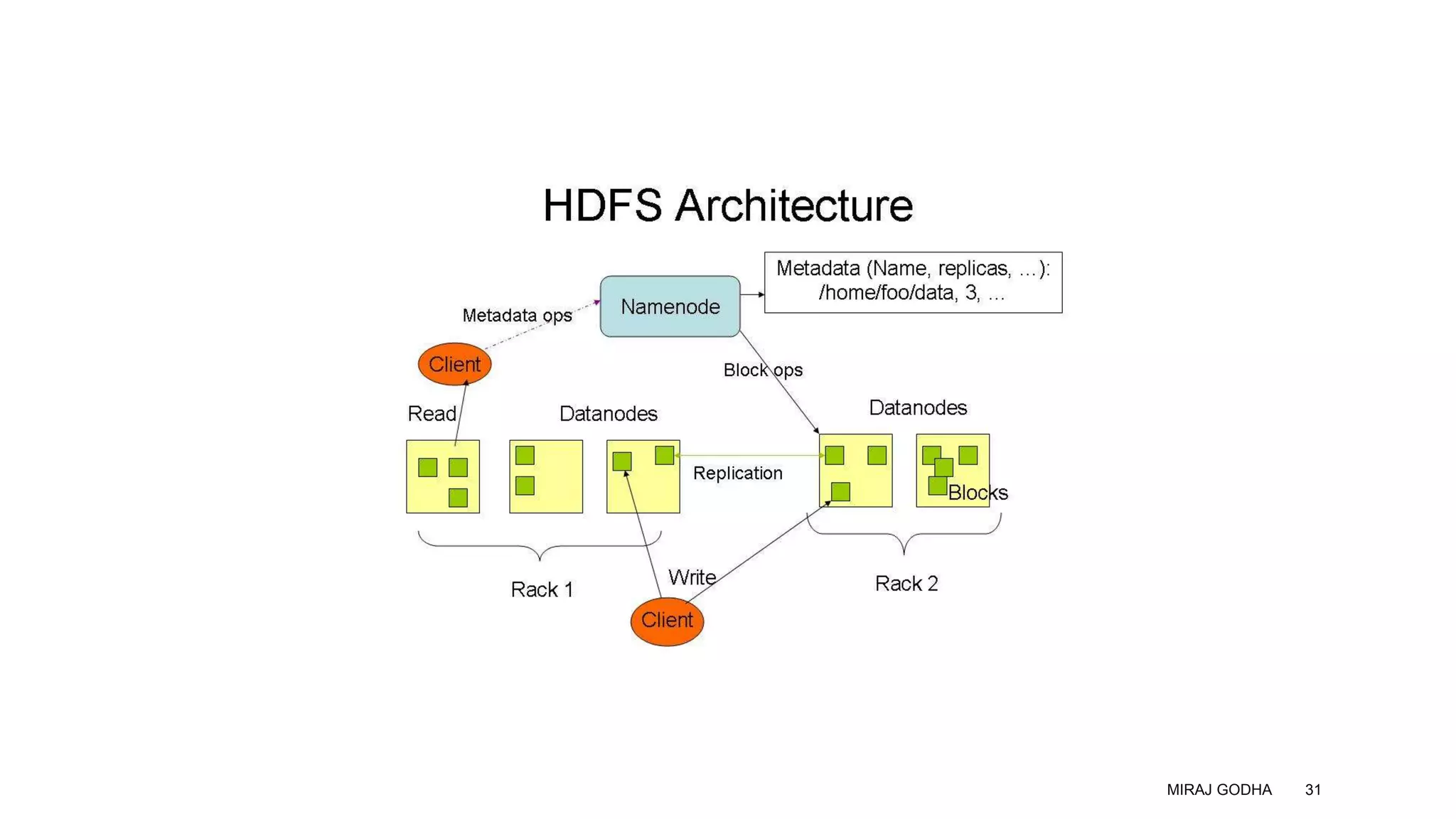

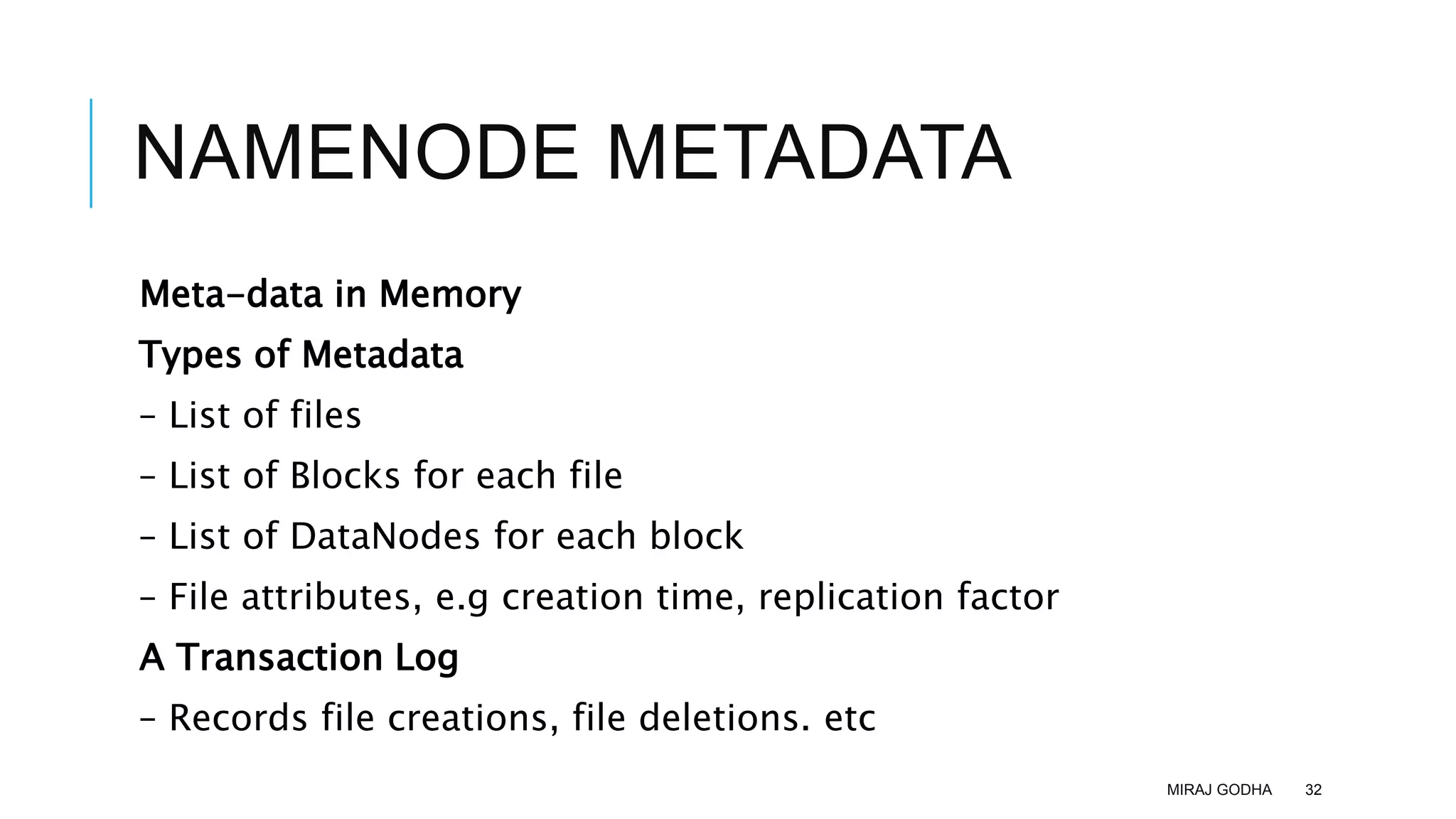

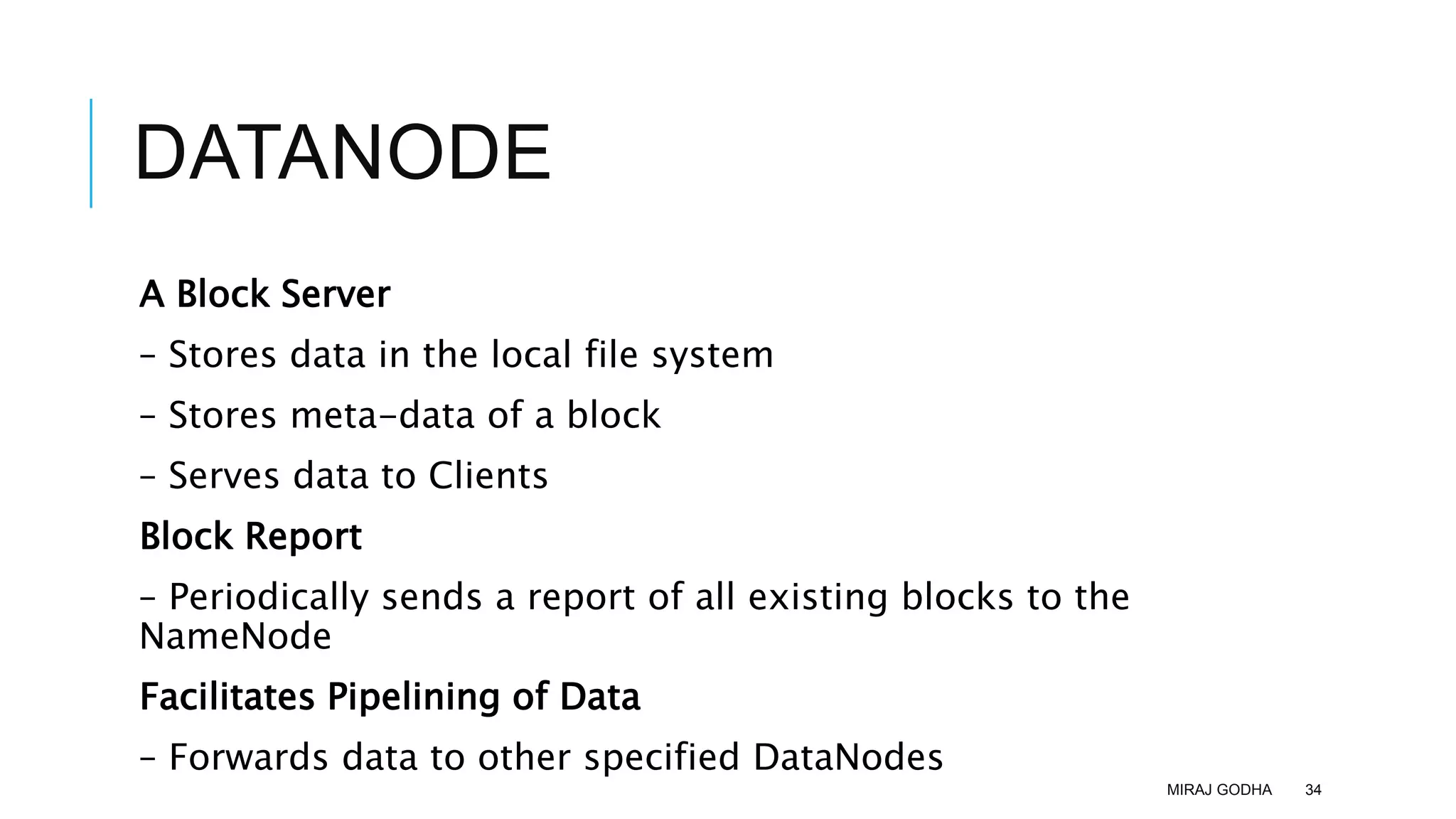

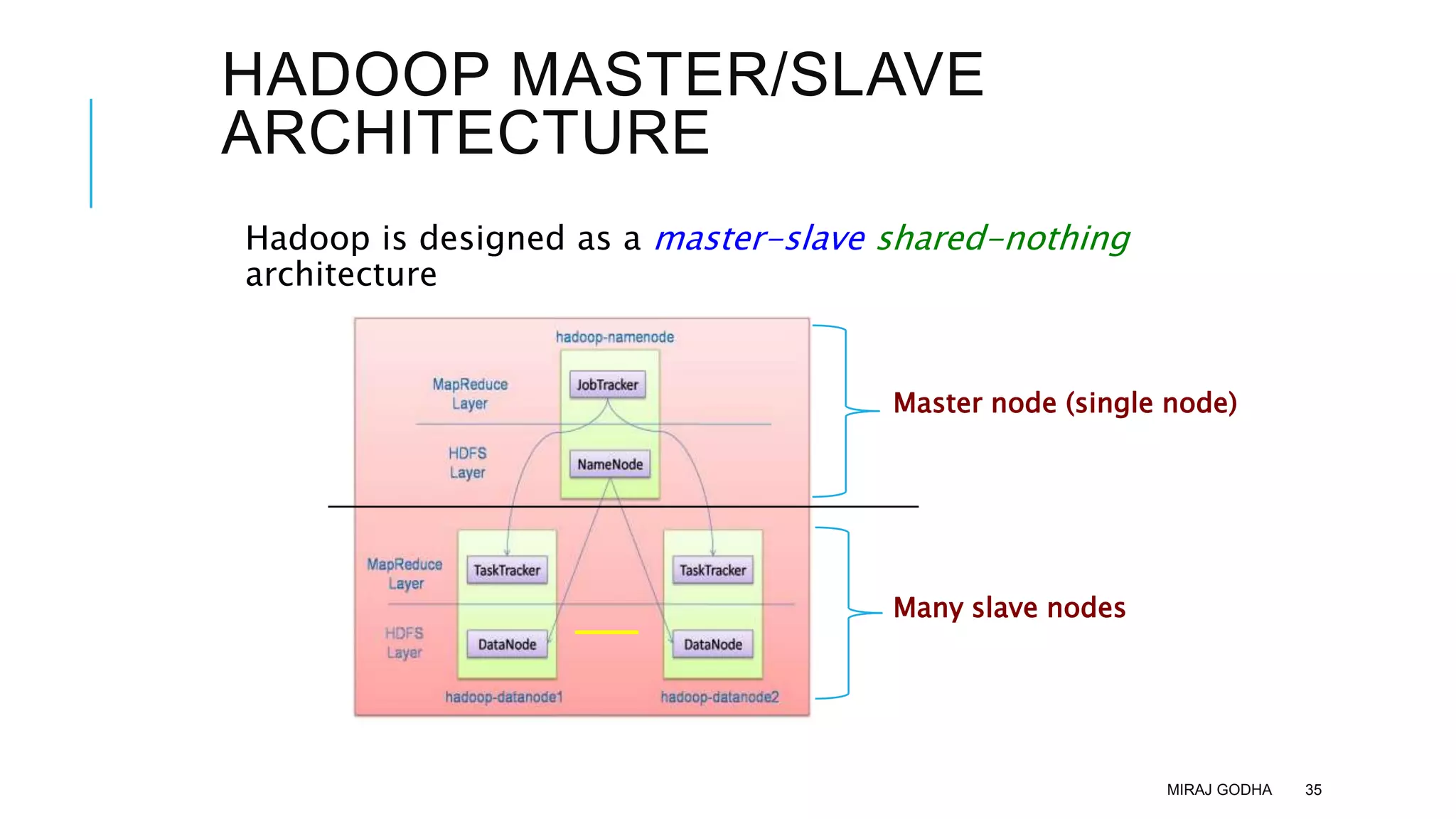

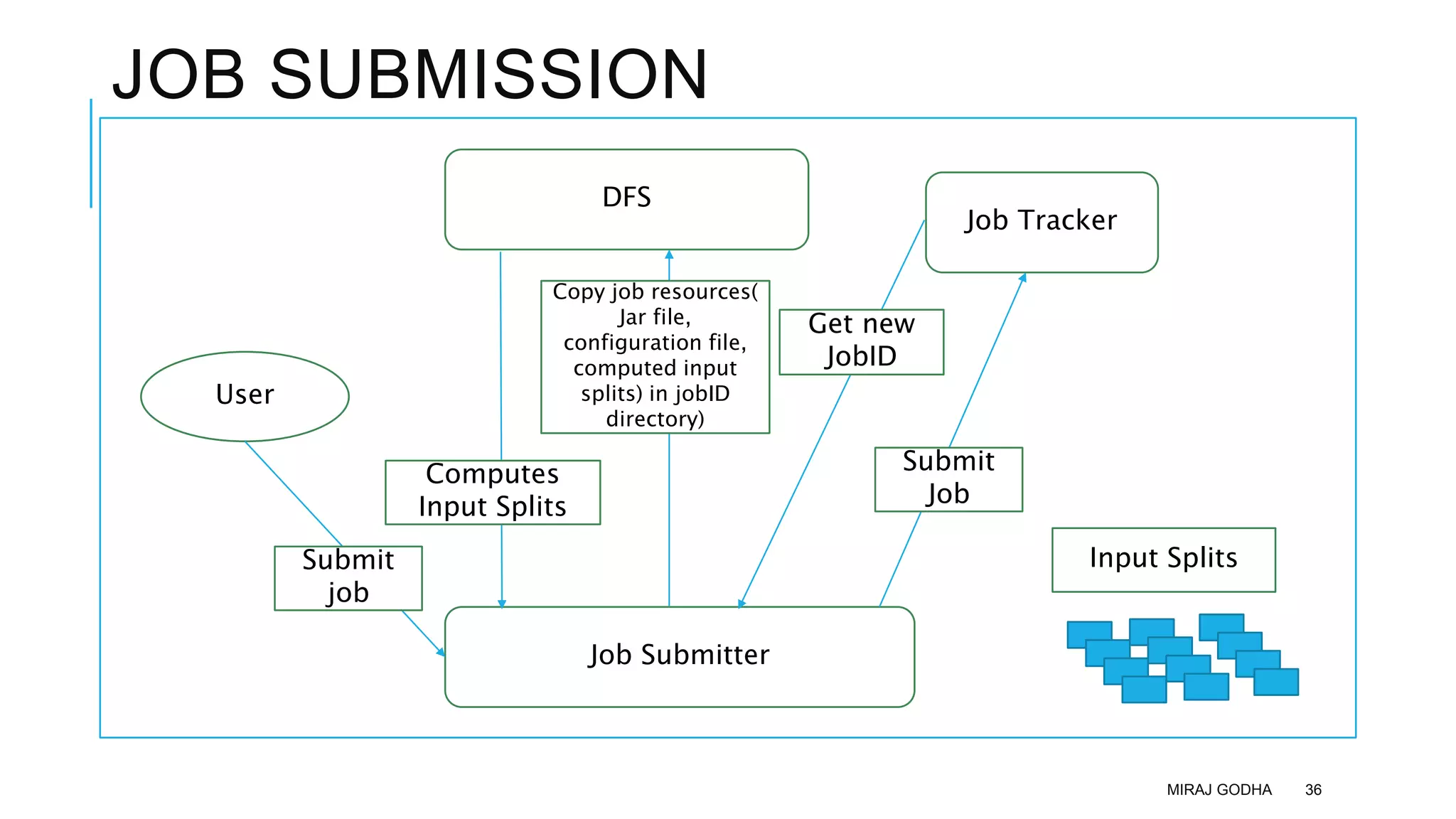

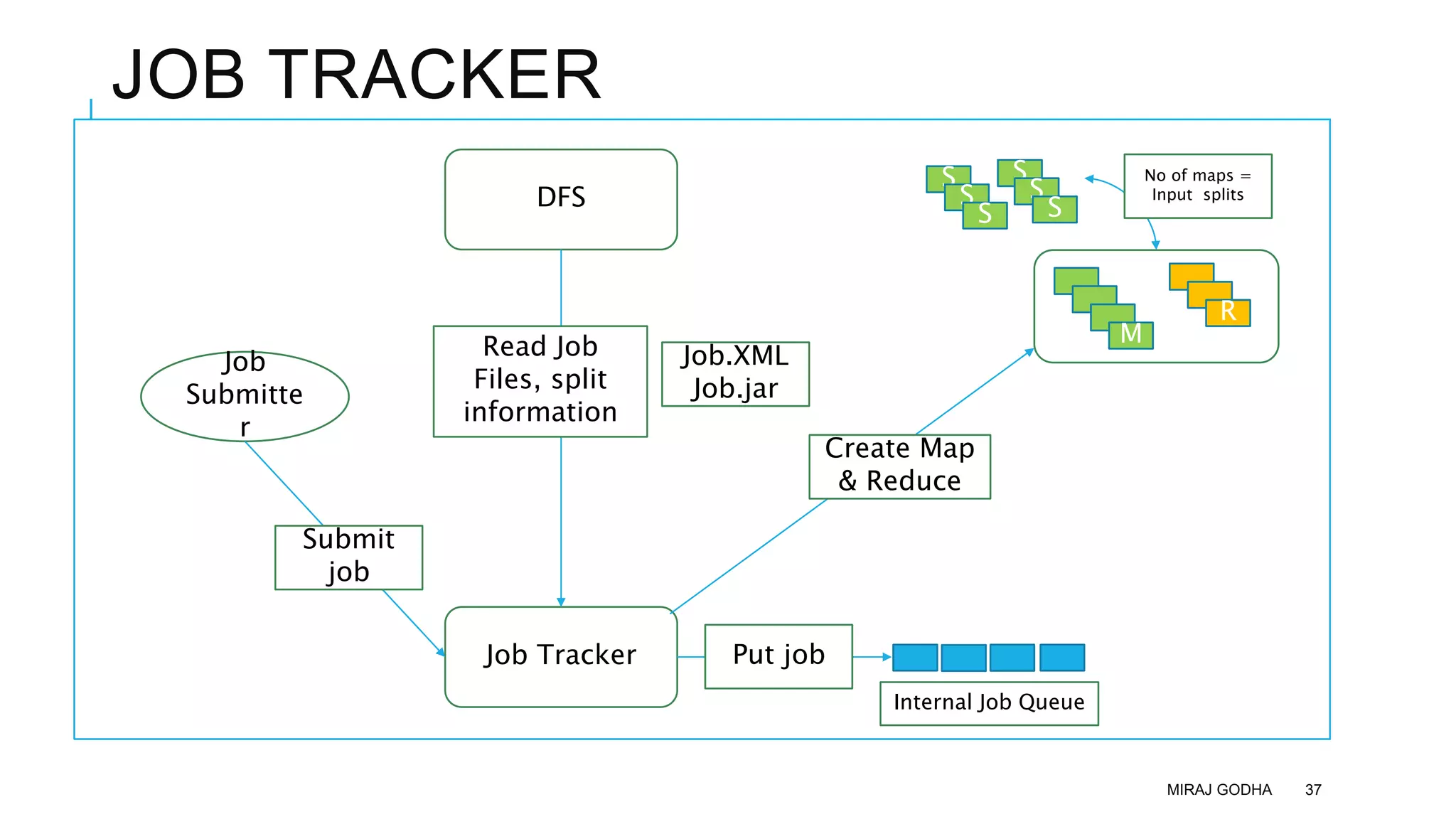

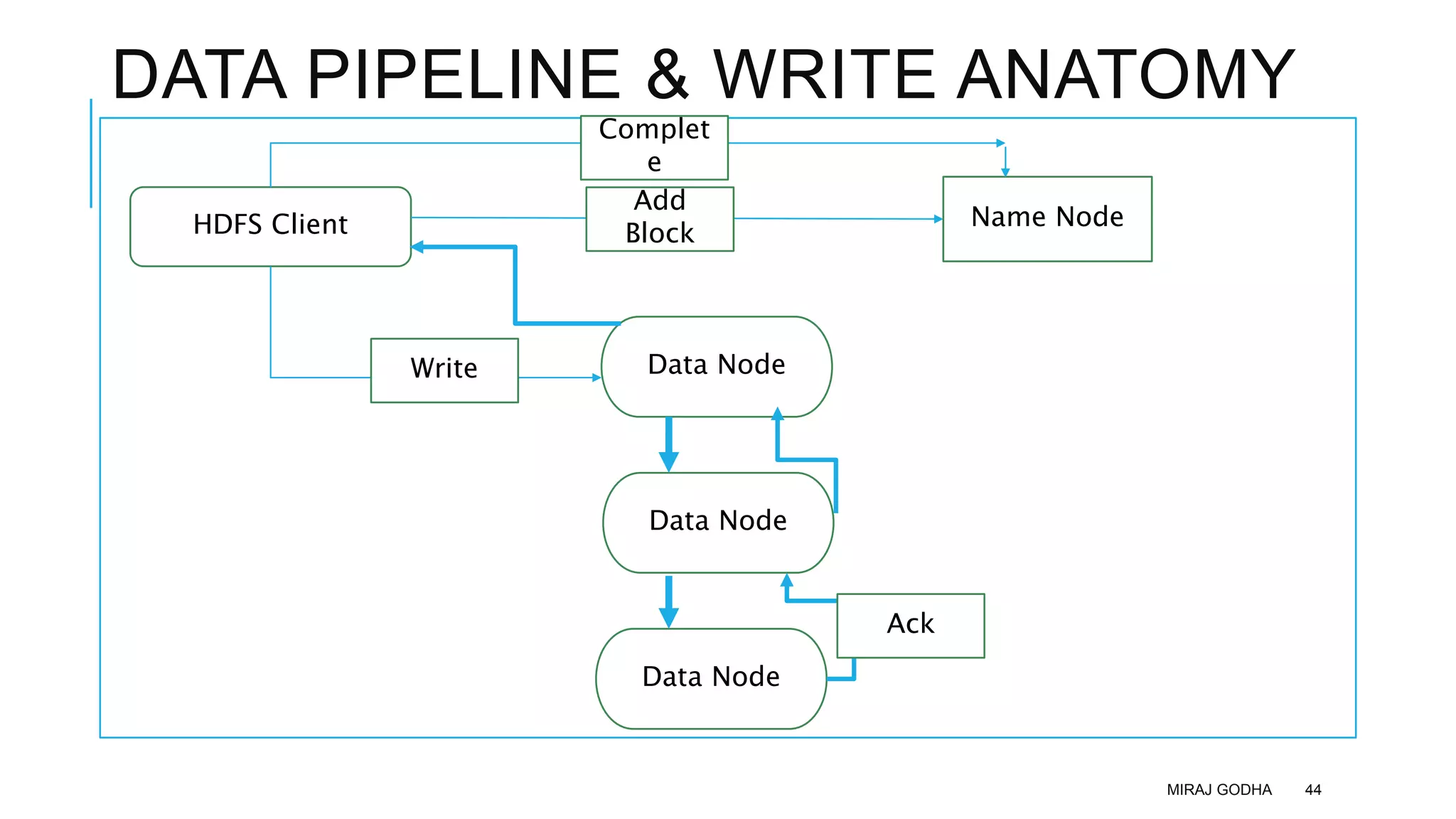

The document provides an overview of Apache Hadoop and explains why it matters for processing large datasets across distributed systems. It covers how Hadoop evolved, its architecture, and its major components, such as the Hadoop Distributed File System (HDFS). It also describes how Hadoop addresses the challenges of handling big data. Finally, it highlights real-world applications, career opportunities, and the benefits Hadoop offers organizations that work with large volumes of data.