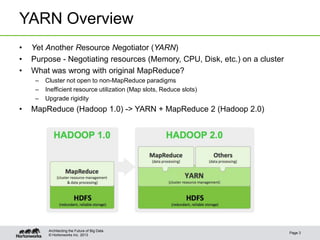

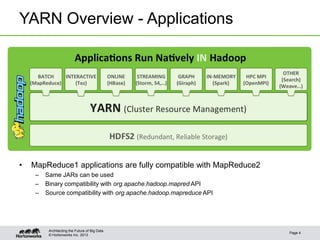

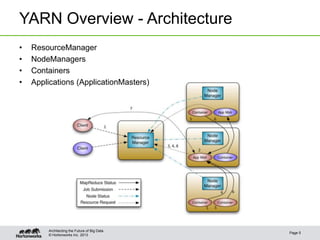

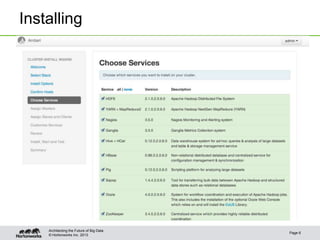

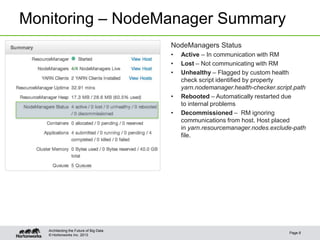

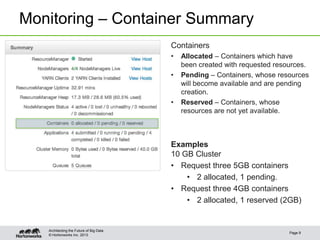

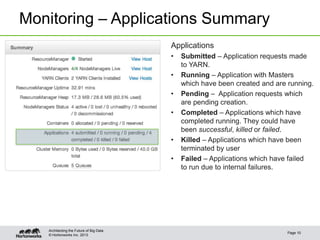

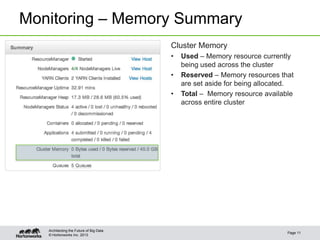

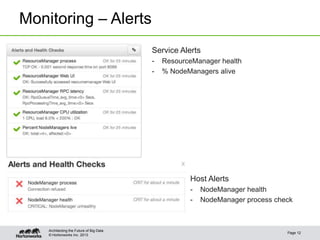

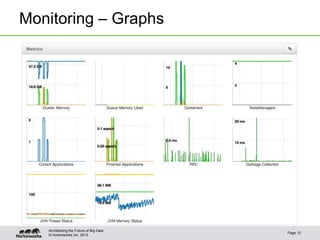

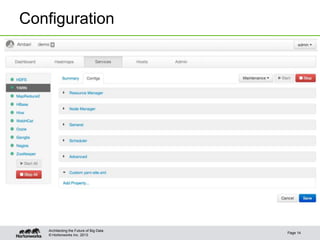

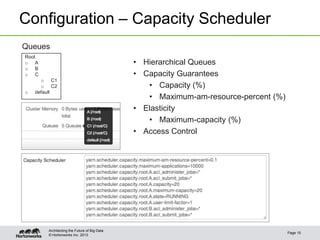

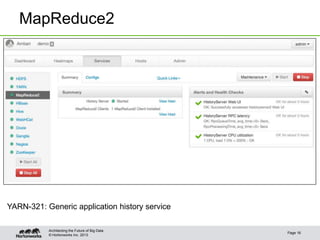

YARN is the resource management layer of Hadoop. It separates cluster-wide resource management from per-application scheduling and execution, which improves resource utilization and lets non-MapReduce applications run on the same cluster. Its key components are the ResourceManager, the NodeManagers, and containers. Ambari can be used to monitor YARN components and applications, configure queues for the Capacity Scheduler, and view metrics and alerts. Future work includes supporting more applications and improving Capacity Scheduler configuration and health checks.
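As an illustration of the kind of Capacity Scheduler configuration check mentioned above, here is a minimal Python sketch. It assumes queue capacities are given as percentages, in which case the capacities of the child queues under each parent are expected to add up to 100. The property names follow the `yarn.scheduler.capacity.<queue-path>` convention from `capacity-scheduler.xml`. The helper `check_queue_capacities` and the `analytics` queue are hypothetical names introduced for this example, and this is not how Ambari itself validates the configuration.

```python
PREFIX = "yarn.scheduler.capacity."


def check_queue_capacities(props):
    """Return {parent queue path: summed child capacity} for every parent
    whose child capacities do not add up to 100 percent.

    Assumes percentage-based capacities; a child queue with no capacity
    property is counted as 0.
    """
    problems = {}
    for key, value in props.items():
        # Parent queues are declared as "<prefix><queue-path>.queues".
        if not (key.startswith(PREFIX) and key.endswith(".queues")):
            continue
        parent = key[len(PREFIX):-len(".queues")]
        children = [c.strip() for c in value.split(",") if c.strip()]
        total = sum(
            float(props.get(f"{PREFIX}{parent}.{child}.capacity", 0))
            for child in children
        )
        if abs(total - 100.0) > 1e-6:
            problems[parent] = total
    return problems


# Hypothetical configuration: the two child queues of "root" sum to 90.
example = {
    "yarn.scheduler.capacity.root.queues": "default,analytics",
    "yarn.scheduler.capacity.root.default.capacity": "60",
    "yarn.scheduler.capacity.root.analytics.capacity": "30",
}

print(check_queue_capacities(example))  # {'root': 90.0}
```

Raising the `analytics` capacity to 40 makes the function return an empty dict, meaning every parent queue is fully allocated.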