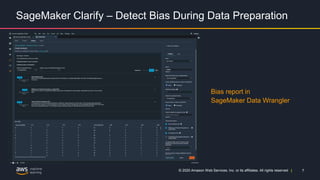

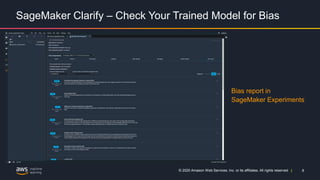

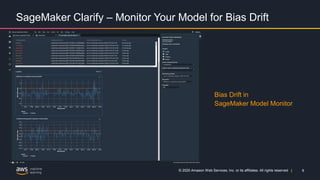

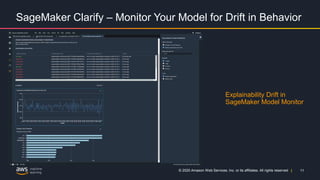

Amazon SageMaker Clarify helps detect bias and explain model behavior across the machine learning lifecycle. It lets users identify bias in datasets during data preparation, evaluate bias in trained models, and monitor deployed models for bias drift over time, and it provides explanations for individual model predictions. Clarify is available at no additional cost as part of Amazon SageMaker, and its bias and explainability reports can support regulatory compliance and operational reviews.
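To make "bias in the data preparation stage" concrete, the sketch below computes two pre-training metrics of the kind Clarify reports: class imbalance (CI) and difference in proportions of labels (DPL). It is plain Python for illustration, not the SageMaker SDK; the function names, the facet values `"a"` and `"d"`, and the toy data are all hypothetical.

```python
def class_imbalance(facet_values, disadvantaged):
    """CI = (n_a - n_d) / (n_a + n_d), ranging from -1 to 1.

    n_d counts rows in the disadvantaged facet group, n_a counts the rest.
    Values near 0 mean the two groups are equally represented.
    """
    n_d = sum(1 for v in facet_values if v == disadvantaged)
    n_a = len(facet_values) - n_d
    if n_a + n_d == 0:
        raise ValueError("facet_values must not be empty")
    return (n_a - n_d) / (n_a + n_d)


def difference_in_proportions_of_labels(facet_values, labels, disadvantaged, positive=1):
    """DPL = q_a - q_d, where q is the share of positive labels in each group."""
    if len(facet_values) != len(labels):
        raise ValueError("facet_values and labels must have the same length")
    d_labels = [y for v, y in zip(facet_values, labels) if v == disadvantaged]
    a_labels = [y for v, y in zip(facet_values, labels) if v != disadvantaged]
    if not d_labels or not a_labels:
        raise ValueError("both facet groups need at least one row")
    q_a = sum(1 for y in a_labels if y == positive) / len(a_labels)
    q_d = sum(1 for y in d_labels if y == positive) / len(d_labels)
    return q_a - q_d


# Toy dataset (hypothetical): six rows in group "a", four rows in group "d".
facet = ["a"] * 6 + ["d"] * 4
labels = [1, 1, 1, 0, 0, 0] + [1, 0, 0, 0]

ci = class_imbalance(facet, disadvantaged="d")
dpl = difference_in_proportions_of_labels(facet, labels, disadvantaged="d")
print(f"CI = {ci:.2f}, DPL = {dpl:.2f}")
```

In this toy data, group "a" is overrepresented and also receives positive labels at a higher rate, so both metrics are positive. Tracking the same quantities on live traffic over time is one way to think about the bias drift monitoring described above.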