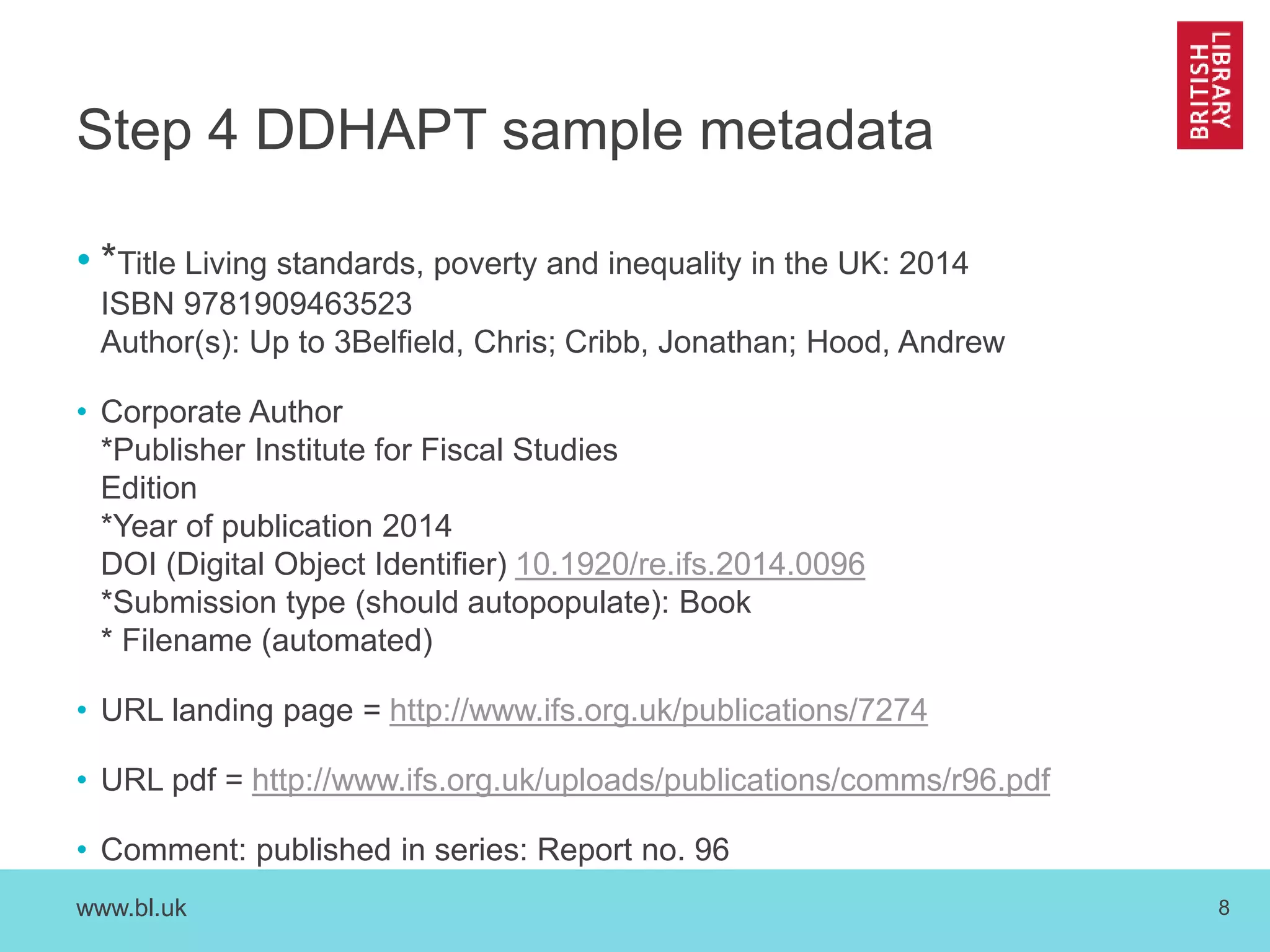

This document describes the development and functionality of the Digital Documents Harvester and Processing Tool (DDHAPT), created by Jennie Grimshaw for the British Library. The tool supports the preservation of, and access to, non-commercial online publications through systematic harvesting, metadata creation, and efficient document processing. Future phases will extend the tool's capabilities and streamline content collection within the library's digital archive.