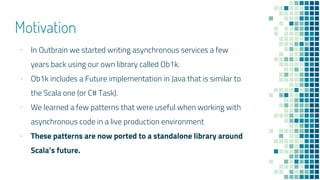

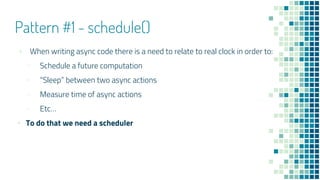

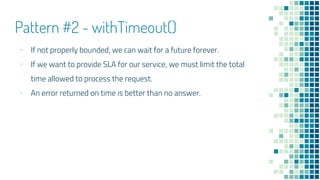

The document discusses advanced patterns in asynchronous programming, focusing on Scala's Future implementation. It introduces patterns that make systems more resilient: scheduling, timeouts, error handling when sequencing many futures, throttled parallel collection, and retries. The authors, Asy Ronen and Michael Arenzon, give definitions, implementations, and usage examples for each pattern, with an emphasis on asynchronous code that holds up in production.

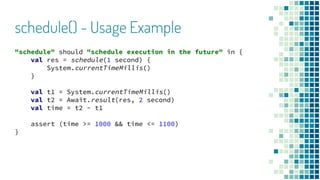

![schedule() - Definition](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-7-320.jpg)

    ...
    (callable: => T)
    (implicit scheduler: Scheduler): Future[T]

    def scheduleWith[T](duration: FiniteDuration)
                       (callable: => Future[T])
                       (implicit scheduler: Scheduler): Future[T]
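The slide shows only signatures. Below is a minimal sketch of how schedule() and scheduleWith() could be built. It assumes the slides' `Scheduler` type can be modelled as a JDK `ScheduledExecutorService`, and `ScheduleSketch` and `daemonScheduler()` are hypothetical names. A timer task completes a Promise, so no thread blocks while the delay elapses.

```scala
import java.util.concurrent.{Executors, ScheduledExecutorService, ThreadFactory, TimeUnit}
import scala.concurrent.{Future, Promise}
import scala.concurrent.duration._
import scala.util.Try
import scala.util.control.NonFatal

object ScheduleSketch {
  // Assumption: the slides' Scheduler is modelled as a JDK ScheduledExecutorService.
  type Scheduler = ScheduledExecutorService

  // Hypothetical helper: daemon threads, so a pending timer never keeps the JVM alive.
  def daemonScheduler(): Scheduler =
    Executors.newSingleThreadScheduledExecutor(new ThreadFactory {
      def newThread(r: Runnable): Thread = { val t = new Thread(r); t.setDaemon(true); t }
    })

  // Runs `callable` once `duration` has elapsed; a thrown exception becomes a failed Future.
  def schedule[T](duration: FiniteDuration)(callable: => T)
                 (implicit scheduler: Scheduler): Future[T] = {
    val promise = Promise[T]()
    scheduler.schedule(new Runnable {
      def run(): Unit = promise.complete(Try(callable))
    }, duration.toNanos, TimeUnit.NANOSECONDS)
    promise.future
  }

  // Same, but `callable` produces a Future, whose outcome becomes the result.
  def scheduleWith[T](duration: FiniteDuration)(callable: => Future[T])
                     (implicit scheduler: Scheduler): Future[T] = {
    val promise = Promise[T]()
    scheduler.schedule(new Runnable {
      def run(): Unit =
        promise.completeWith(try callable catch { case NonFatal(e) => Future.failed[T](e) })
    }, duration.toNanos, TimeUnit.NANOSECONDS)
    promise.future
  }
}
```

Completing a Promise from the timer thread keeps schedule() non-blocking. The same building block reappears in the timeout and retry patterns.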

![withTimeout() - Implementation](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-10-320.jpg)

    ... {
      def withTimeout(duration: FiniteDuration)
                     (implicit scheduler: Scheduler,
                               executor: ExecutionContext): Future[T] = {
        val deadline = schedule(duration) {
          throw new TimeoutException("future timeout")
        }
        Future firstCompletedOf Seq(future, deadline)
      }
    }

The original future task is not interrupted: it keeps running even after the timeout fires.
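Below is a runnable variant of withTimeout(). Like the slide, it races the original future against a deadline with `Future.firstCompletedOf`. It differs from the slide in one respect: it also cancels the pending timer when the original wins. This is a sketch under assumptions. A daemon-threaded JDK scheduler stands in for `Scheduler`, and `TimeoutSketch`, `timer`, and the `FutureTimeout` class name are hypothetical.

```scala
import java.util.concurrent.{Executors, ThreadFactory, TimeUnit, TimeoutException}
import scala.concurrent.{ExecutionContext, Future, Promise}
import scala.concurrent.duration._

object TimeoutSketch {
  // Assumption: a daemon-threaded JDK scheduler stands in for the slides' Scheduler.
  val timer = Executors.newSingleThreadScheduledExecutor(new ThreadFactory {
    def newThread(r: Runnable): Thread = { val t = new Thread(r); t.setDaemon(true); t }
  })

  // Hypothetical name for the enrichment that adds withTimeout() to any Future.
  implicit class FutureTimeout[T](val future: Future[T]) {
    def withTimeout(duration: FiniteDuration)(implicit executor: ExecutionContext): Future[T] = {
      // The deadline fails with a TimeoutException once `duration` elapses.
      val deadline = Promise[T]()
      val task = timer.schedule(new Runnable {
        def run(): Unit = deadline.tryFailure(new TimeoutException("future timeout"))
      }, duration.toNanos, TimeUnit.NANOSECONDS)
      // If the original finishes first, drop the pending timer (the original is never cancelled).
      future.onComplete(_ => task.cancel(false))
      Future.firstCompletedOf(Seq(future, deadline.future))
    }
  }
}
```

Scala futures cannot be cancelled, so a slow computation keeps running after the timeout. Only the future returned by withTimeout() fails.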

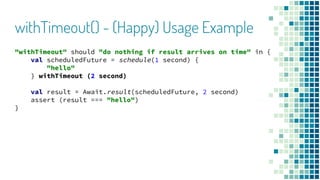

![withTimeout() - Usage Example](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-12-320.jpg)

    "withTimeout" should "throw exception after timeout" in {
      val scheduledFuture = schedule(2 second) {
        "hello"
      } withTimeout (1 second)

      assertThrows[TimeoutException] {
        Await.result(scheduledFuture, 2 second)
      }
    }

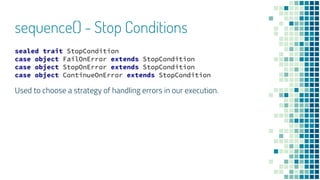

![Pattern #3 - sequence()](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-13-320.jpg)

▪ Scala's Future companion object contains a sequence method that transforms a List[Future[T]] into a Future[List[T]].

▪ However, it has two main drawbacks:

a. If one future fails, the whole thing fails. But what if 90% of the results are good enough for us?

b. It doesn't fail fast, i.e. we wait for the slowest result or error to arrive.

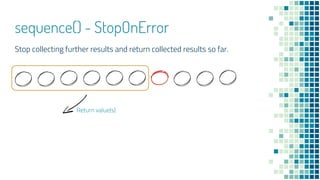

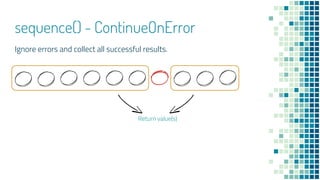

![sequence() - Definition](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-14-320.jpg)

    def sequence[T](futures: Seq[Future[T]], stop: StopCondition = FailOnError)
                   (implicit executor: ExecutionContext): Future[Seq[T]]

    def collect[K, T](futures: Map[K, Future[T]], stop: StopCondition = FailOnError)
                     (implicit executor: ExecutionContext): Future[Map[K, T]]
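Below is one possible implementation of sequence() and collect(). It addresses both drawbacks of the standard sequence: it does not wait for the slowest future once an error is seen, and it can return partial results. The semantics are guesses, not the authors' code. `FailOnError` is assumed to fail as soon as any input fails, and `ContinueOnError` is assumed to drop failed results. The `StopCondition` encoding and the `SequenceSketch` name are also hypothetical.

```scala
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{ExecutionContext, Future, Promise}
import scala.util.{Failure, Success}

object SequenceSketch {
  // Hypothetical encoding of the StopCondition values named on the slides.
  sealed trait StopCondition
  case object FailOnError extends StopCondition     // fail fast on the first error
  case object ContinueOnError extends StopCondition // assumption: drop failed results

  def sequence[T](futures: Seq[Future[T]], stop: StopCondition = FailOnError)
                 (implicit executor: ExecutionContext): Future[Seq[T]] = {
    val promise = Promise[Seq[T]]()
    val slots = new Array[Option[Any]](futures.size) // Some(value), or None for a dropped failure
    val remaining = new AtomicInteger(futures.size)

    // Called once per finished input; the last one publishes the results in input order.
    def finishOne(): Unit =
      if (remaining.decrementAndGet() == 0)
        promise.trySuccess(slots.toSeq.flatten.map(_.asInstanceOf[T]))

    if (futures.isEmpty) promise.success(Seq.empty)
    futures.zipWithIndex.foreach { case (f, i) =>
      f.onComplete {
        case Success(v) =>
          slots(i) = Some(v)
          finishOne()
        case Failure(e) => stop match {
          case FailOnError     => promise.tryFailure(e) // completes immediately, no waiting
          case ContinueOnError => slots(i) = None; finishOne()
        }
      }
    }
    promise.future
  }

  // Keys travel with their values, so a dropped failure also drops its key.
  def collect[K, T](futures: Map[K, Future[T]], stop: StopCondition = FailOnError)
                   (implicit executor: ExecutionContext): Future[Map[K, T]] =
    sequence(futures.toSeq.map { case (k, f) => f.map(v => k -> v) }, stop).map(_.toMap)
}
```

Building collect() on top of sequence() keeps a single completion mechanism. The Map variant only has to carry keys alongside values.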

![sequence() - Definition (extended)](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-19-320.jpg)

    def sequenceAll[T](futures: Seq[Future[T]])
                      (implicit executor: ExecutionContext): Future[Seq[Try[T]]]

    def collectAll[K, T](futures: Map[K, Future[T]])
                        (implicit executor: ExecutionContext): Future[Map[K, Try[T]]]

These variants return all values and errors, each wrapped in a Try.
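Below is a compact sketch of sequenceAll() and collectAll() that uses only the standard library. `transform` lifts each outcome into a successful `Future[Try[T]]`, so a single failure can no longer fail the combined result. The `SequenceAllSketch` object name is hypothetical.

```scala
import scala.concurrent.{ExecutionContext, Future}
import scala.util.{Success, Try}

object SequenceAllSketch {
  // Every input becomes a successful Future holding its Try, so Future.sequence never fails.
  def sequenceAll[T](futures: Seq[Future[T]])
                    (implicit executor: ExecutionContext): Future[Seq[Try[T]]] =
    Future.sequence(futures.map(f => f.transform(outcome => Success(outcome))))

  // Keep the key order, then zip keys back with their outcomes.
  def collectAll[K, T](futures: Map[K, Future[T]])
                      (implicit executor: ExecutionContext): Future[Map[K, Try[T]]] = {
    val keys = futures.keys.toSeq
    sequenceAll(keys.map(futures)).map(outcomes => keys.zip(outcomes).toMap)
  }
}
```

This version still waits for every input, which is what "return all values & errors" requires.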

![parallelCollect() - Definition](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-25-320.jpg)

    def parallelCollect[T, R](elements: Seq[T], parallelism: Int,
                              stop: StopCondition = FailOnError)
                             (producer: T => Future[R])
                             (implicit executor: ExecutionContext): Future[Seq[R]]
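Below is a sketch of parallelCollect(). It keeps at most `parallelism` producer calls in flight by running that many "worker" loops, and each worker pulls the next element index from a shared counter. Results keep the input order. The stop-condition semantics (fail fast vs. drop failures) are assumptions, and `ParallelCollectSketch` is a hypothetical name.

```scala
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{ExecutionContext, Future, Promise}
import scala.util.{Failure, Success}
import scala.util.control.NonFatal

object ParallelCollectSketch {
  // Hypothetical encoding of the stop conditions named on the slides.
  sealed trait StopCondition
  case object FailOnError extends StopCondition
  case object ContinueOnError extends StopCondition // assumption: failed elements are dropped

  def parallelCollect[T, R](elements: Seq[T], parallelism: Int,
                            stop: StopCondition = FailOnError)
                           (producer: T => Future[R])
                           (implicit executor: ExecutionContext): Future[Seq[R]] = {
    require(parallelism > 0, "parallelism must be positive")
    val input = elements.toIndexedSeq
    val slots = new Array[Option[Any]](input.size) // Some(result), or None for a dropped failure
    val next = new AtomicInteger(0)                 // index of the next element to start
    val done = new AtomicInteger(0)                 // number of elements that have finished
    val promise = Promise[Seq[R]]()

    // Each worker runs one element at a time and pulls the next index when it finishes,
    // so at most `parallelism` producer calls are in flight at once.
    def worker(): Unit = {
      val i = next.getAndIncrement()
      if (i < input.size && !promise.isCompleted) {
        val started = try producer(input(i)) catch { case NonFatal(e) => Future.failed[R](e) }
        started.onComplete { outcome =>
          outcome match {
            case Success(r) => slots(i) = Some(r)
            case Failure(e) =>
              slots(i) = None
              if (stop == FailOnError) promise.tryFailure(e) // workers stop starting new elements
          }
          if (done.incrementAndGet() == input.size)
            promise.trySuccess(slots.toSeq.flatten.map(_.asInstanceOf[R]))
          worker()
        }
      }
    }

    if (input.isEmpty) promise.success(Seq.empty)
    else (1 to math.min(parallelism, input.size)).foreach(_ => worker())
    promise.future
  }
}
```

A pull-based worker pool avoids fixed-size batching. A new element starts as soon as any in-flight one finishes, so one slow element does not hold up a whole batch.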

![parallelCollect() - Usage Example](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-26-320.jpg)

    "Google Search" should "return all results without throttling" in {
      val queries: List[String] = createQueries(amount = 10000)

      val results = parallelCollect(queries, 10, ContinueOnError) {
        query => GoogleSearchClient.sendQuery(query)
      } withTimeout(30 second)

      val finalRes = Await.result(results, 30 second)
      assert (finalRes.size === 10000)
    }

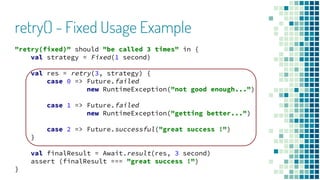

![](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-28-320.jpg)

    ...
    (f: => Future[T]): Future[T] = f recoverWith {
      case _ if retries > 0 => retry(retries - 1)(f)
    }
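Below is a self-contained version of the recursive retry() above. The slide's leading parameter list is cut off, so this sketch assumes `def retry[T](retries: Int)` and an implicit `ExecutionContext`, which `recoverWith` needs. Attempts follow each other immediately with no delay. `NaiveRetrySketch` is a hypothetical name.

```scala
import scala.concurrent.{ExecutionContext, Future}

object NaiveRetrySketch {
  // `f` is by-name, so each recursive call re-runs the operation rather than
  // re-reading the same failed Future. After `retries` extra attempts the last error is kept.
  def retry[T](retries: Int)(f: => Future[T])(implicit ec: ExecutionContext): Future[T] =
    f recoverWith {
      case _ if retries > 0 => retry(retries - 1)(f)
    }
}
```

The by-name parameter is what makes this work. With a plain `Future[T]` parameter, every "retry" would observe the same already-failed future.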

![](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-29-320.jpg)

    ...
    (producer: Int => Future[T])
    (implicit executor: ExecutionContext,
              scheduler: Scheduler): Future[T]
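The signature above passes an `Int` to the producer and takes an implicit `Scheduler`. That suggests the attempt number is exposed and that attempts are spaced out in time. Below is a sketch under that reading. It adds a hypothetical `delay: FiniteDuration` parameter, because the slide's leading parameters are not shown. A JDK `ScheduledExecutorService` stands in for `Scheduler`, and `DelayedRetrySketch`, `safely`, `after`, and `daemonScheduler` are hypothetical names.

```scala
import java.util.concurrent.{Executors, ScheduledExecutorService, ThreadFactory, TimeUnit}
import scala.concurrent.{ExecutionContext, Future, Promise}
import scala.concurrent.duration._
import scala.util.control.NonFatal

object DelayedRetrySketch {
  // Assumption: the slides' Scheduler is modelled as a JDK ScheduledExecutorService.
  type Scheduler = ScheduledExecutorService

  def daemonScheduler(): Scheduler =
    Executors.newSingleThreadScheduledExecutor(new ThreadFactory {
      def newThread(r: Runnable): Thread = { val t = new Thread(r); t.setDaemon(true); t }
    })

  // Turns a synchronous throw from `f` into a failed Future.
  private def safely[T](f: => Future[T]): Future[T] =
    try f catch { case NonFatal(e) => Future.failed[T](e) }

  // Starts `f` after `delay` without blocking a thread while waiting.
  private def after[T](delay: FiniteDuration)(f: => Future[T])(implicit s: Scheduler): Future[T] = {
    val p = Promise[T]()
    s.schedule(new Runnable {
      def run(): Unit = p.completeWith(safely(f))
    }, delay.toNanos, TimeUnit.NANOSECONDS)
    p.future
  }

  // Hypothetical `delay` parameter; `producer` receives the attempt number (0, 1, 2, ...).
  def retry[T](retries: Int, delay: FiniteDuration)(producer: Int => Future[T])
              (implicit executor: ExecutionContext, scheduler: Scheduler): Future[T] = {
    def attempt(n: Int): Future[T] =
      safely(producer(n)).recoverWith {
        case _ if n < retries => after(delay)(attempt(n + 1))
      }
    attempt(0)
  }
}
```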

![retry() - (Real) Implementation](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-30-320.jpg)

    type Conditional = PartialFunction[Throwable, RetryPolicy]

    def retry[T](retries: Int)
                (policy: Conditional)
                (producer: Int => Future[T])
                (implicit scheduler: Scheduler,
                          executor: ExecutionContext): Future[T]
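Below is a sketch of the conditional retry(). The policy is consulted only for errors it is defined at. Any other error fails the result immediately, for example the `IOException` in the usage example that follows. Only `Fixed` appears in the slides, so the one-case `RetryPolicy` encoding is an assumption. The `safely`, `after`, and `daemonScheduler` helpers are hypothetical, and a JDK scheduled executor stands in for `Scheduler`.

```scala
import java.util.concurrent.{Executors, ScheduledExecutorService, ThreadFactory, TimeUnit}
import scala.concurrent.{ExecutionContext, Future, Promise}
import scala.concurrent.duration._
import scala.util.control.NonFatal

object ConditionalRetrySketch {
  // Assumption: Scheduler modelled as a JDK ScheduledExecutorService with daemon threads.
  type Scheduler = ScheduledExecutorService
  def daemonScheduler(): Scheduler =
    Executors.newSingleThreadScheduledExecutor(new ThreadFactory {
      def newThread(r: Runnable): Thread = { val t = new Thread(r); t.setDaemon(true); t }
    })

  // Minimal, hypothetical policy type: only Fixed appears in the slides.
  sealed trait RetryPolicy
  final case class Fixed(delay: FiniteDuration) extends RetryPolicy

  type Conditional = PartialFunction[Throwable, RetryPolicy]

  private def safely[T](f: => Future[T]): Future[T] =
    try f catch { case NonFatal(e) => Future.failed[T](e) }

  private def after[T](delay: FiniteDuration)(f: => Future[T])(implicit s: Scheduler): Future[T] = {
    val p = Promise[T]()
    s.schedule(new Runnable { def run(): Unit = p.completeWith(safely(f)) },
      delay.toNanos, TimeUnit.NANOSECONDS)
    p.future
  }

  def retry[T](retries: Int)(policy: Conditional)(producer: Int => Future[T])
              (implicit scheduler: Scheduler, executor: ExecutionContext): Future[T] = {
    def attempt(n: Int): Future[T] =
      safely(producer(n)).recoverWith {
        // Only errors the policy covers are retried; any other error fails the result as-is.
        case e if n < retries && policy.isDefinedAt(e) =>
          policy(e) match {
            case Fixed(delay) => after(delay)(attempt(n + 1))
          }
      }
    attempt(0)
  }
}
```

Encoding the policy as a `PartialFunction` means that "should we retry?" and "how long to wait?" are answered by a single value.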

![retry() - Conditional Usage Example](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-32-320.jpg)

    "retry(conditional)" should "stop on IOException" in {
      val policy: PartialFunction[Throwable, RetryPolicy] = {
        case _: TimeoutException => Fixed(1 second)
      }

      val res = retry(3)(policy) {
        case 0 => Future failed new TimeoutException("really slow")
        case 1 => Future failed new IOException("something bad")
        case 2 => Future successful "great success"
      }

      // Fails the test unless the IOException surfaces, i.e. unless retrying stopped at attempt 1.
      assertThrows[IOException] {
        Await.result(res, 3 second)
      }
    }

![](https://image.slidesharecdn.com/advancedpatternsinasynchronousprogramming-170720172240/85/Advanced-patterns-in-asynchronous-programming-36-320.jpg)

    ...
    (producer: => Future[T])
    (implicit executor: ExecutionContext,
              scheduler: Scheduler): Future[T]

This mechanism can only be used with idempotent operations.