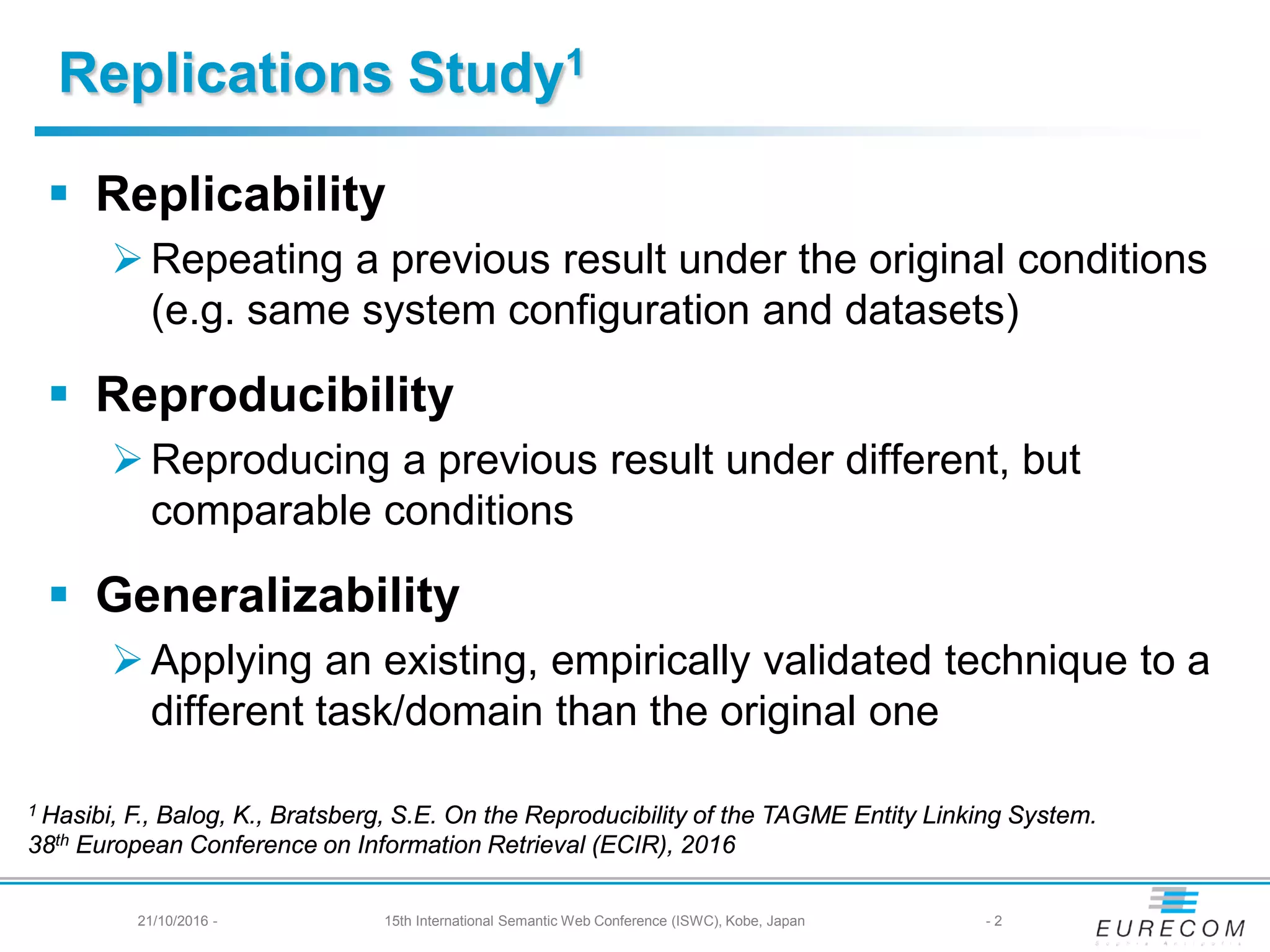

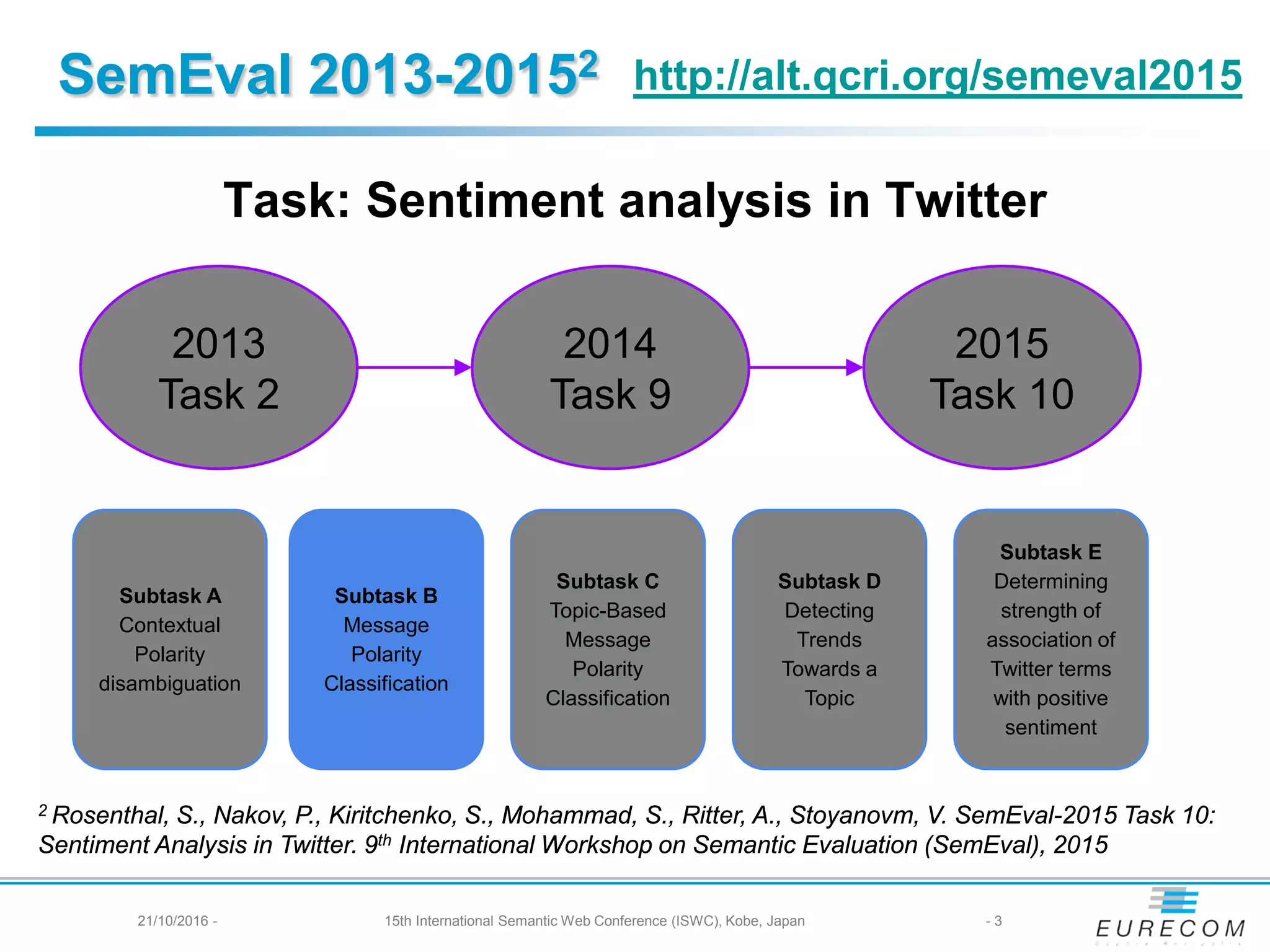

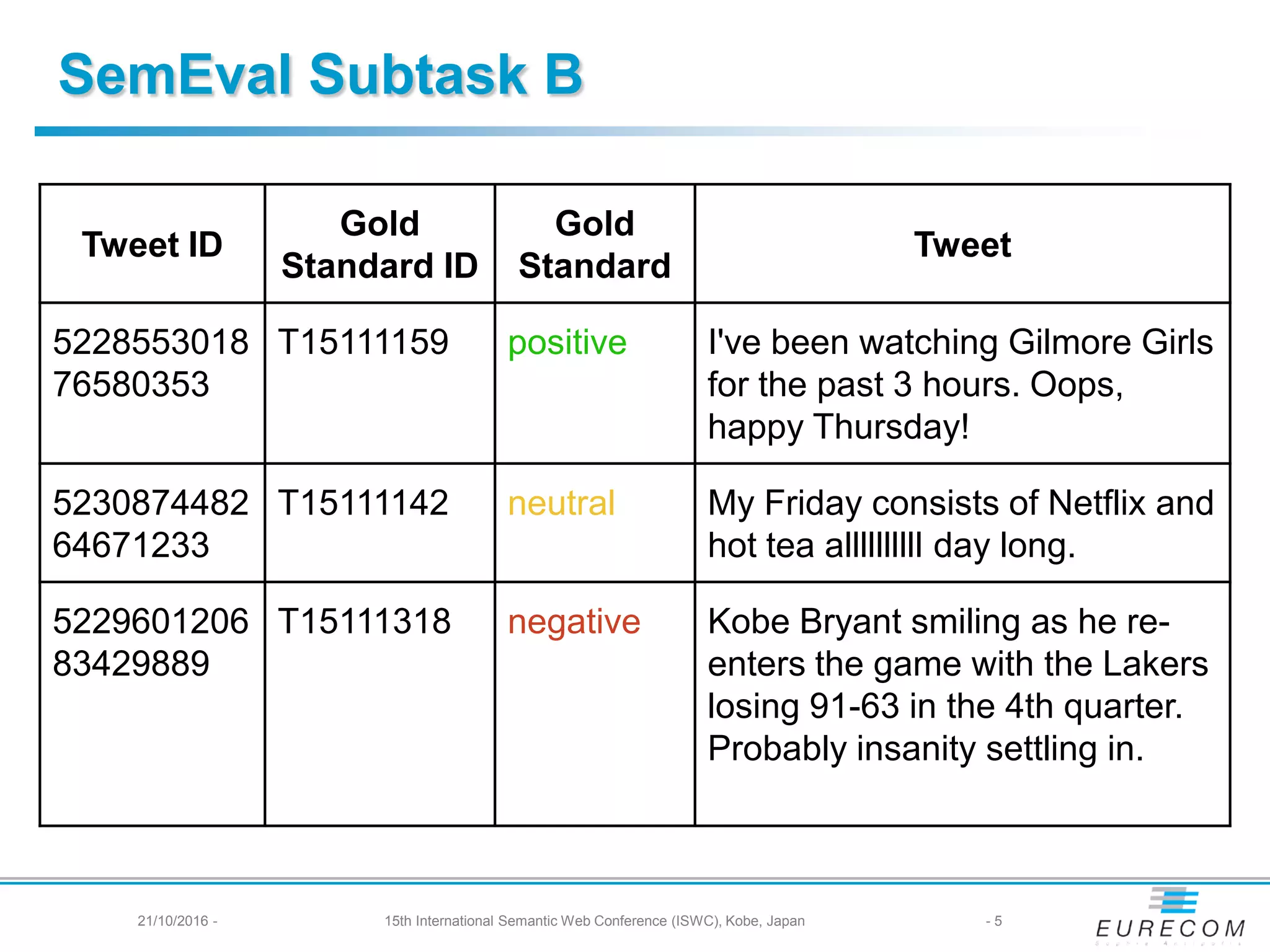

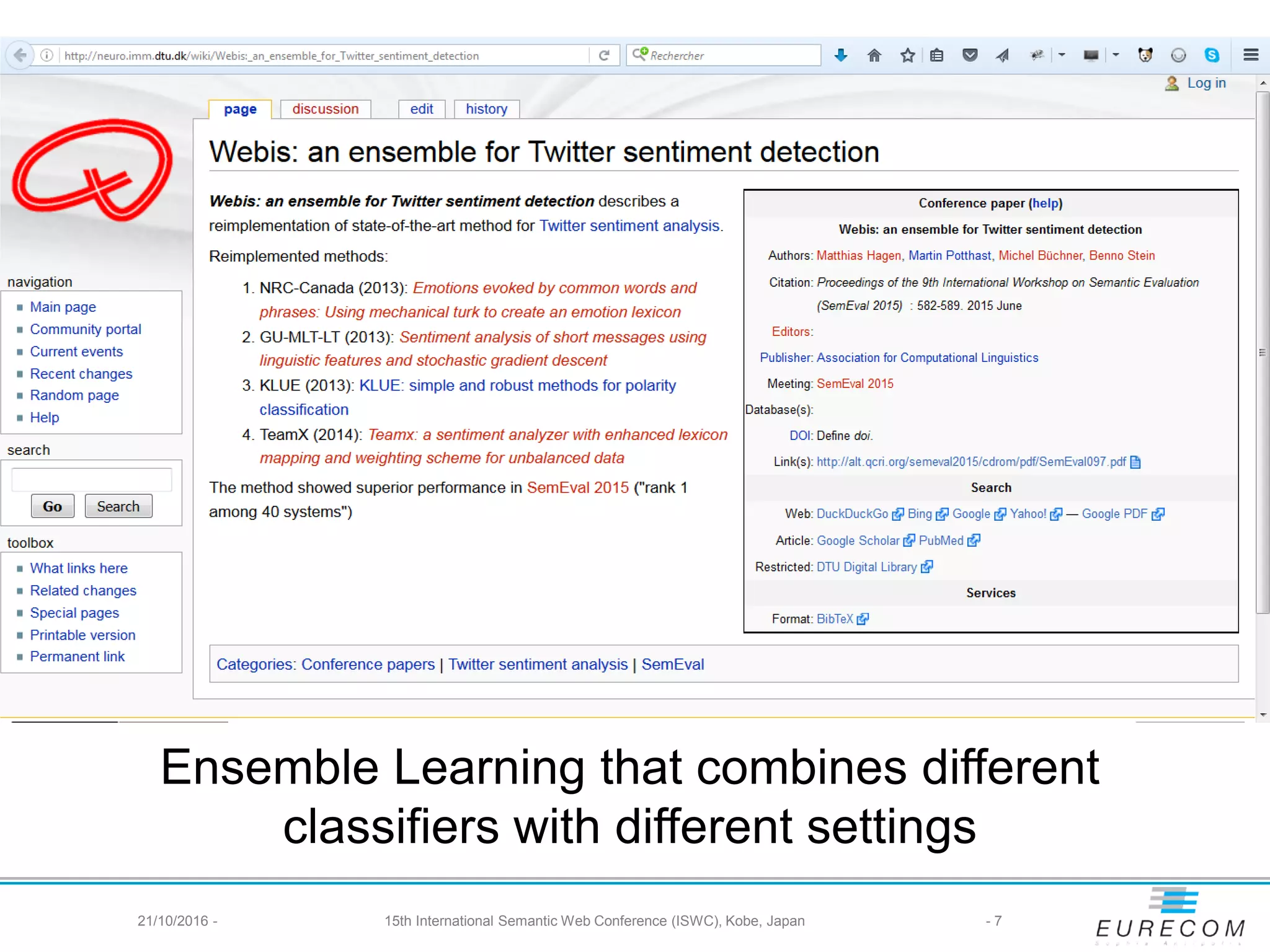

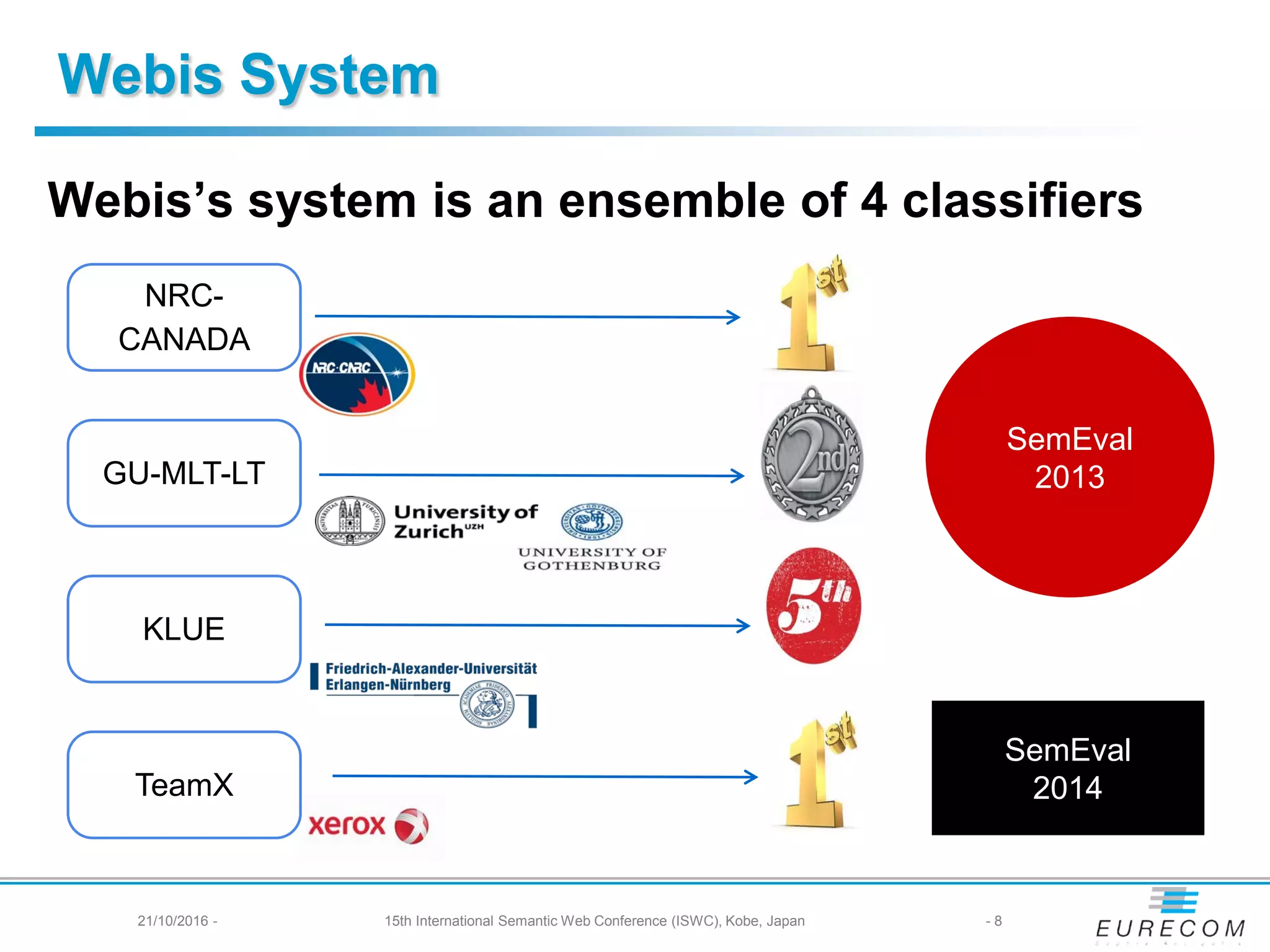

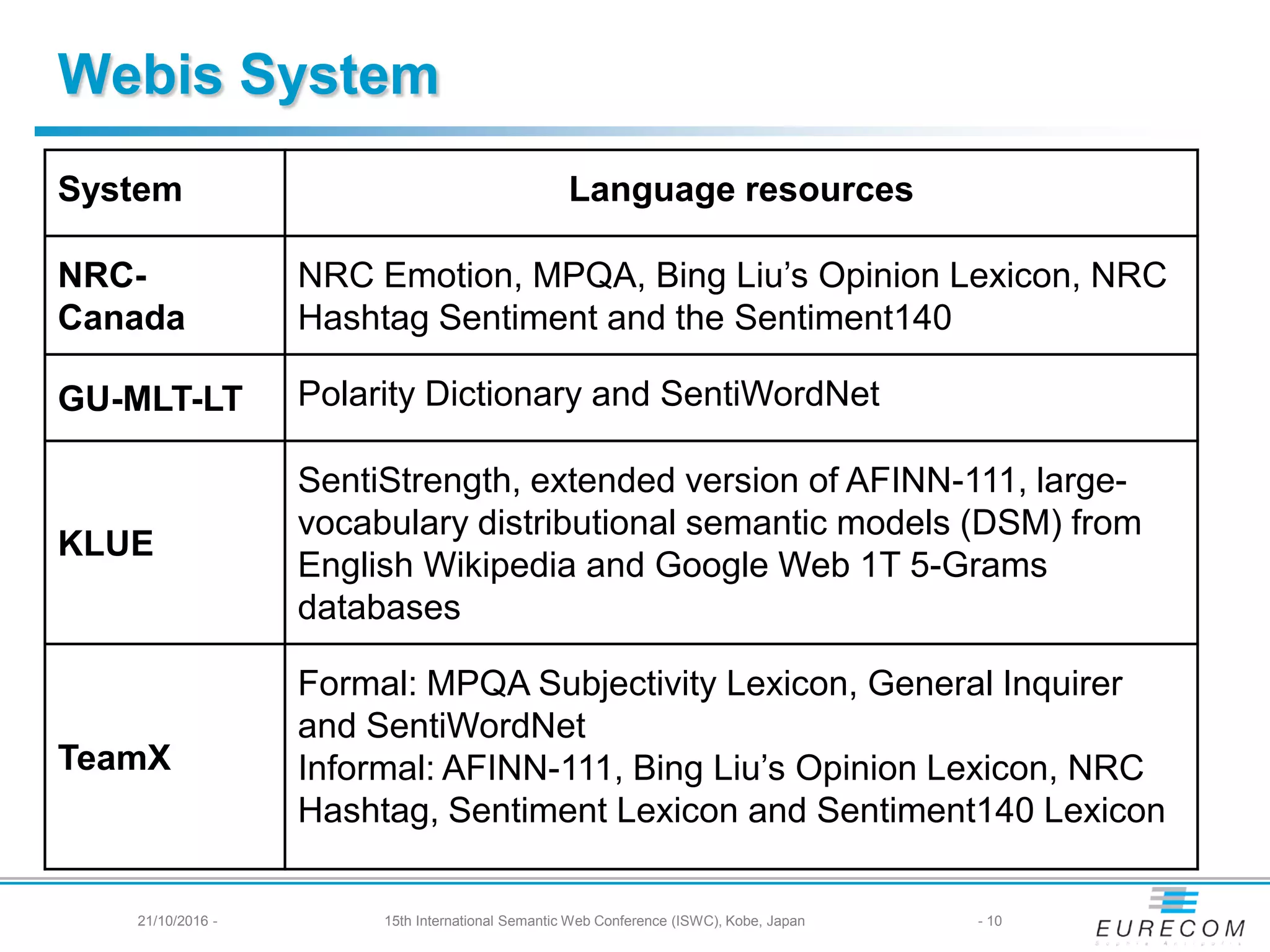

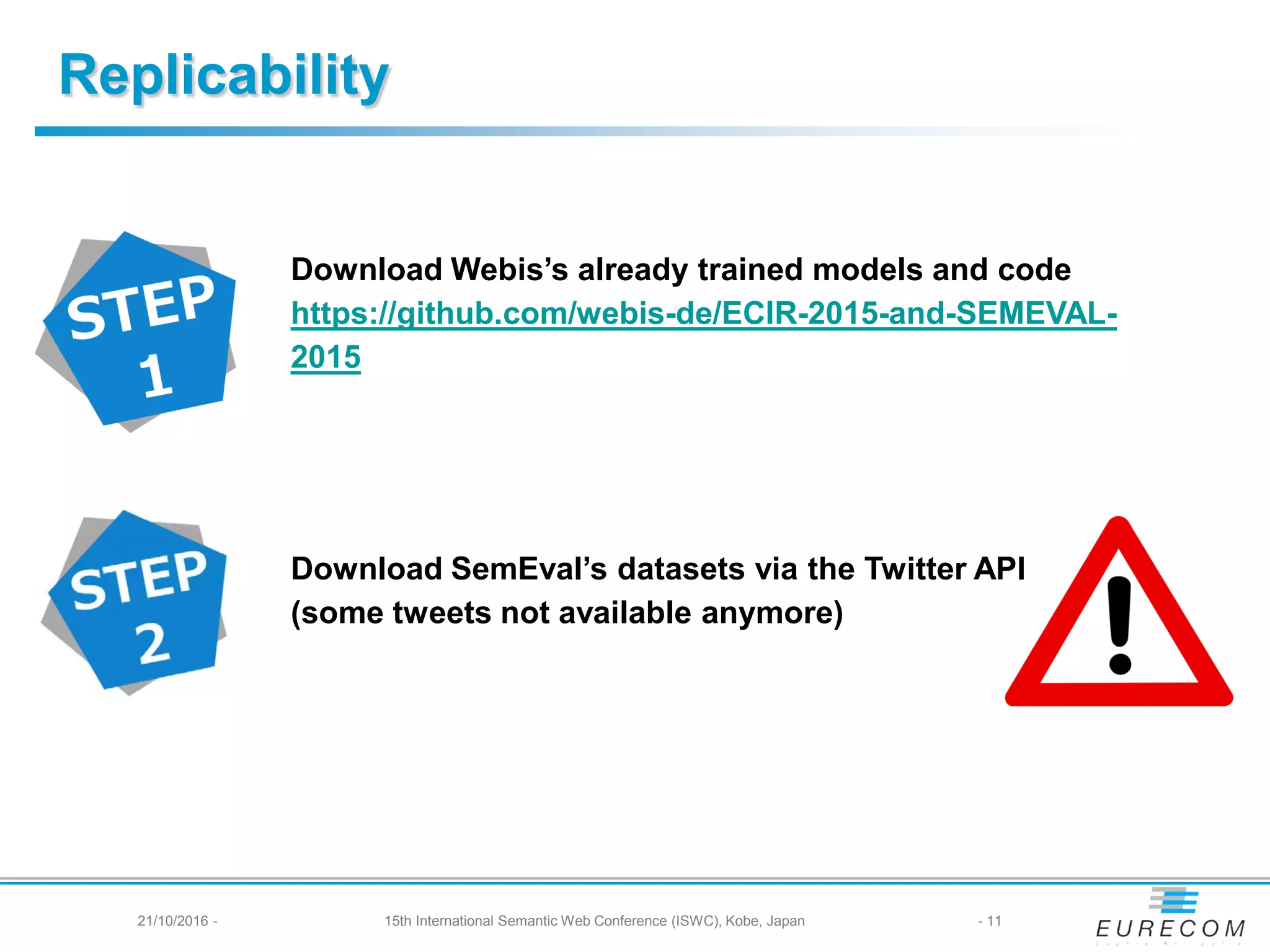

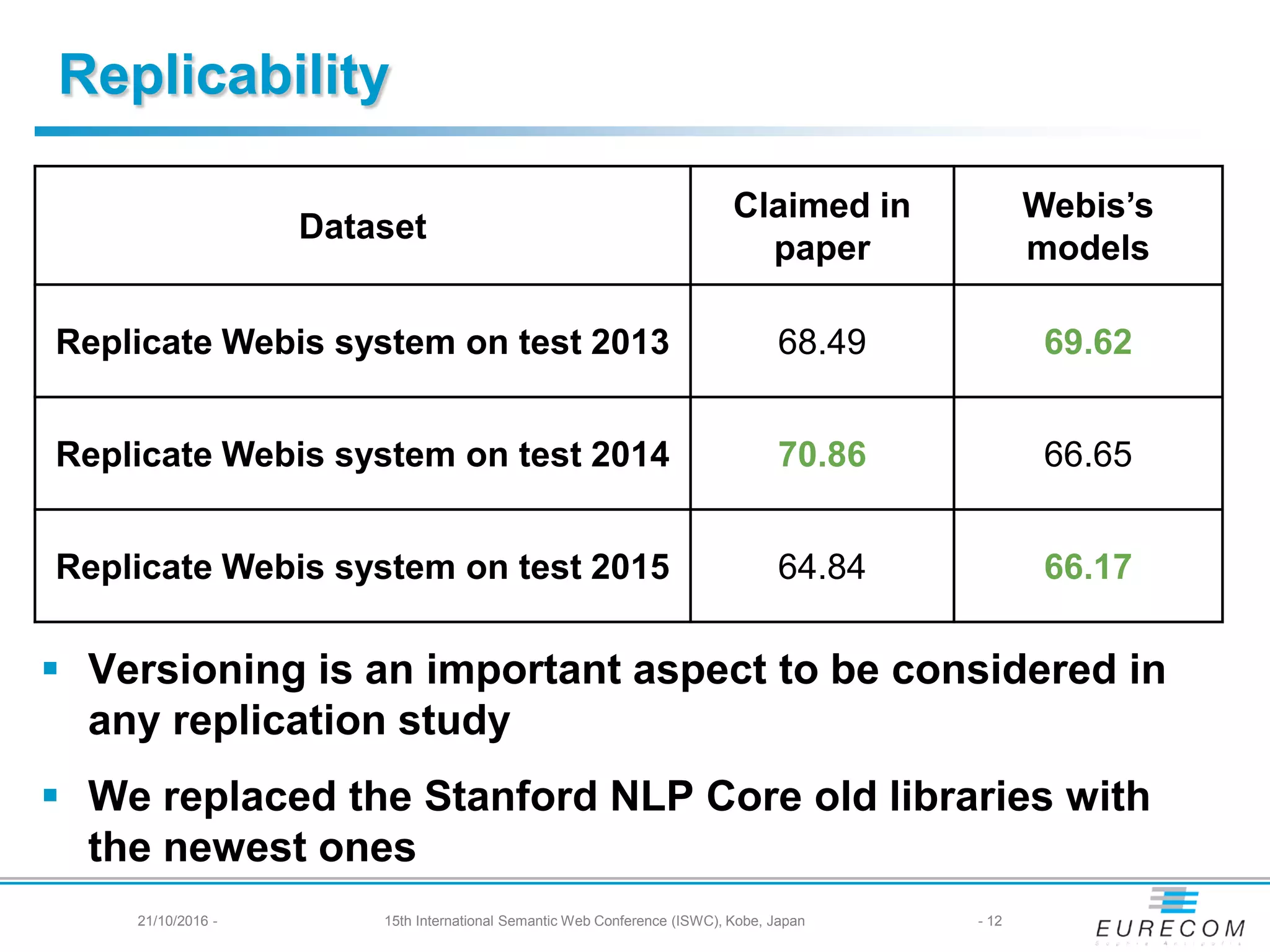

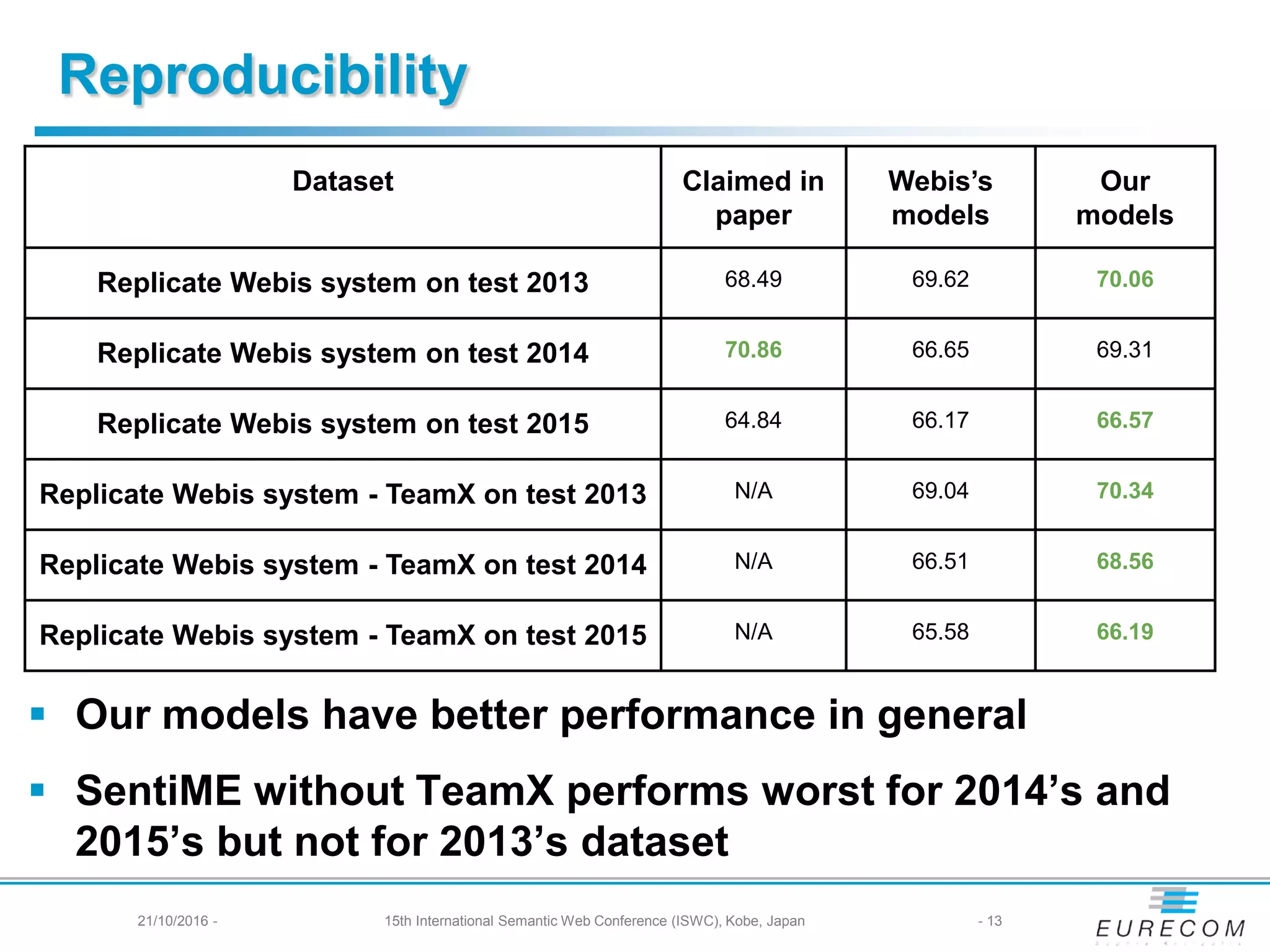

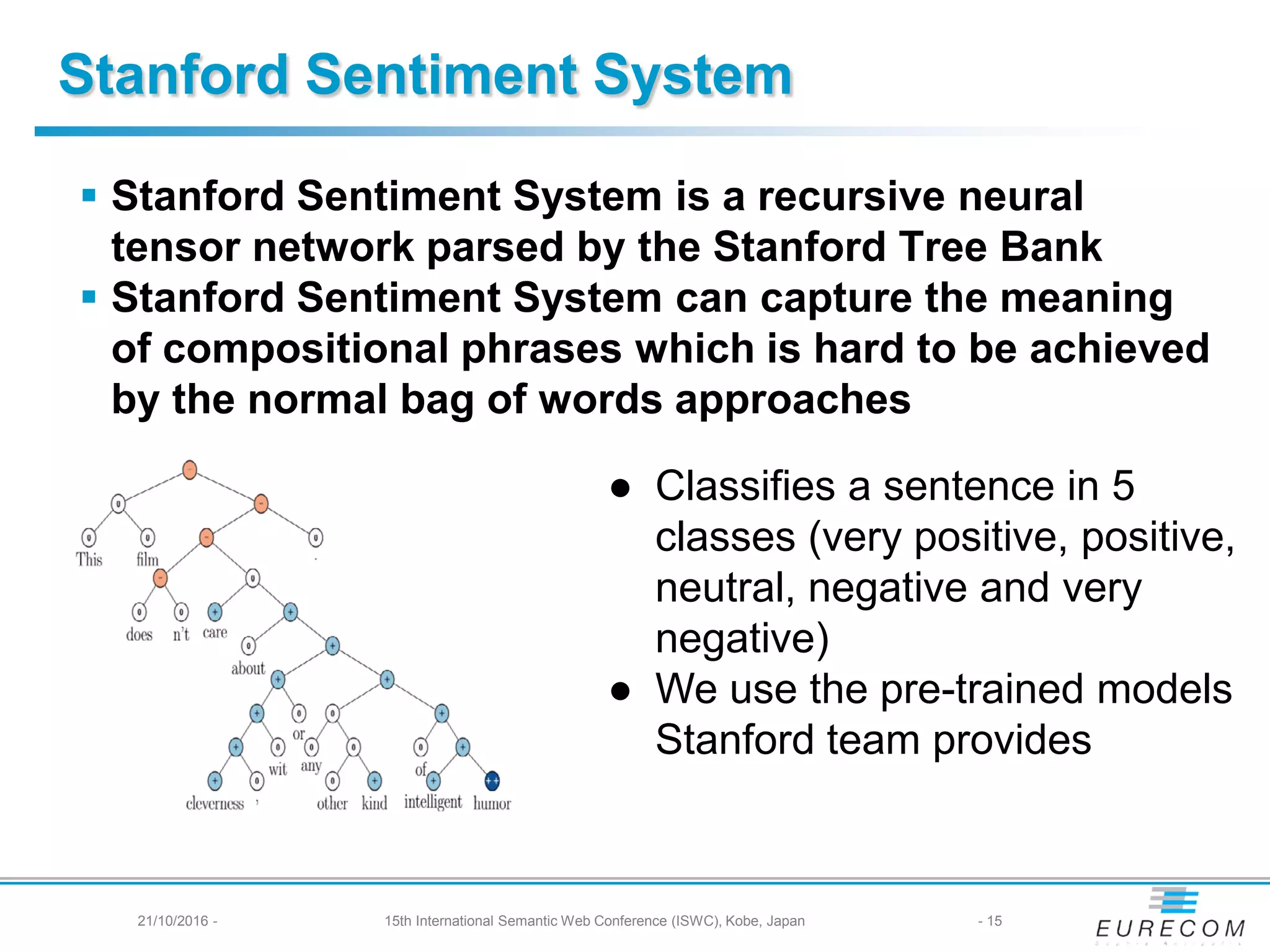

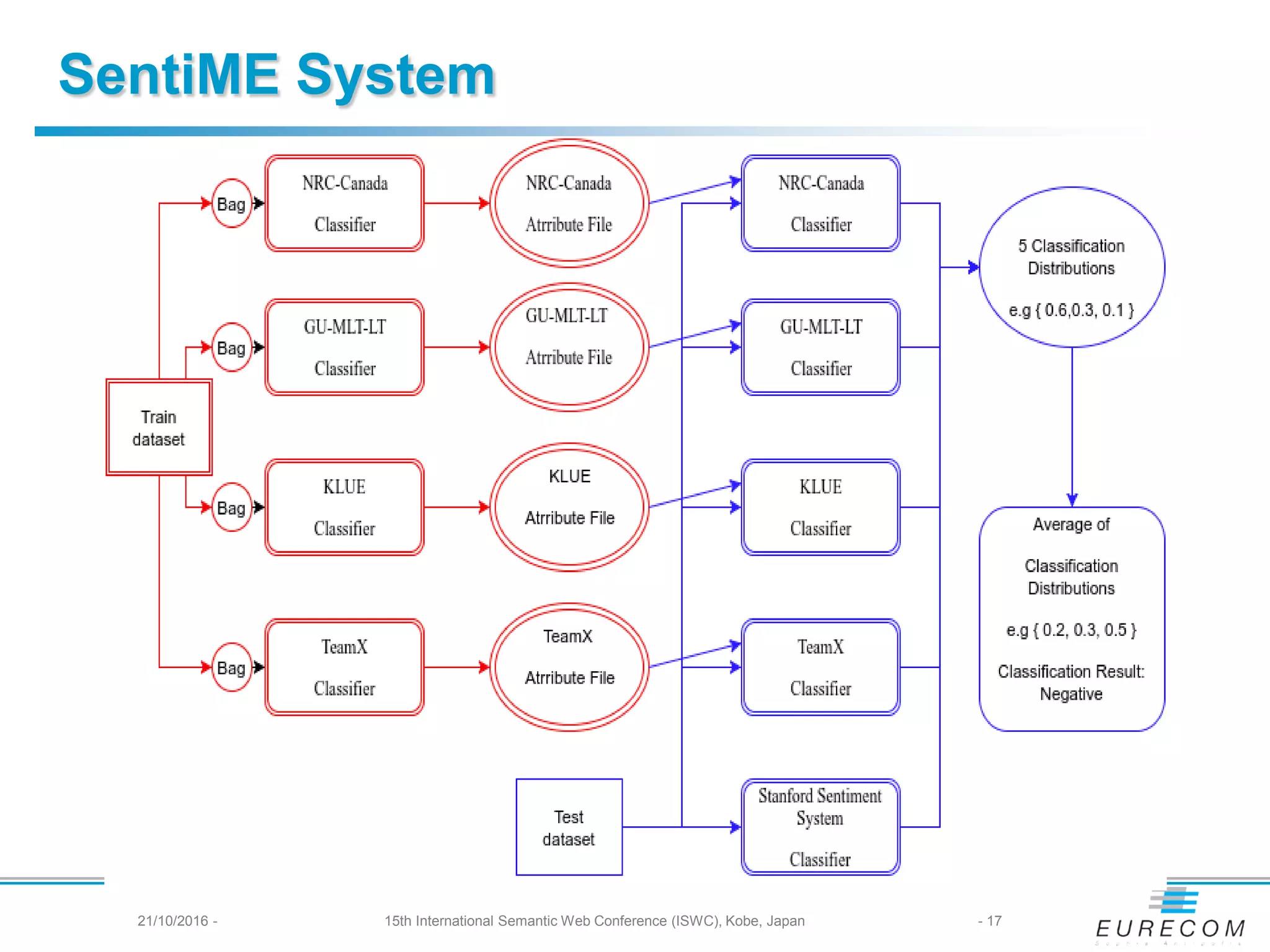

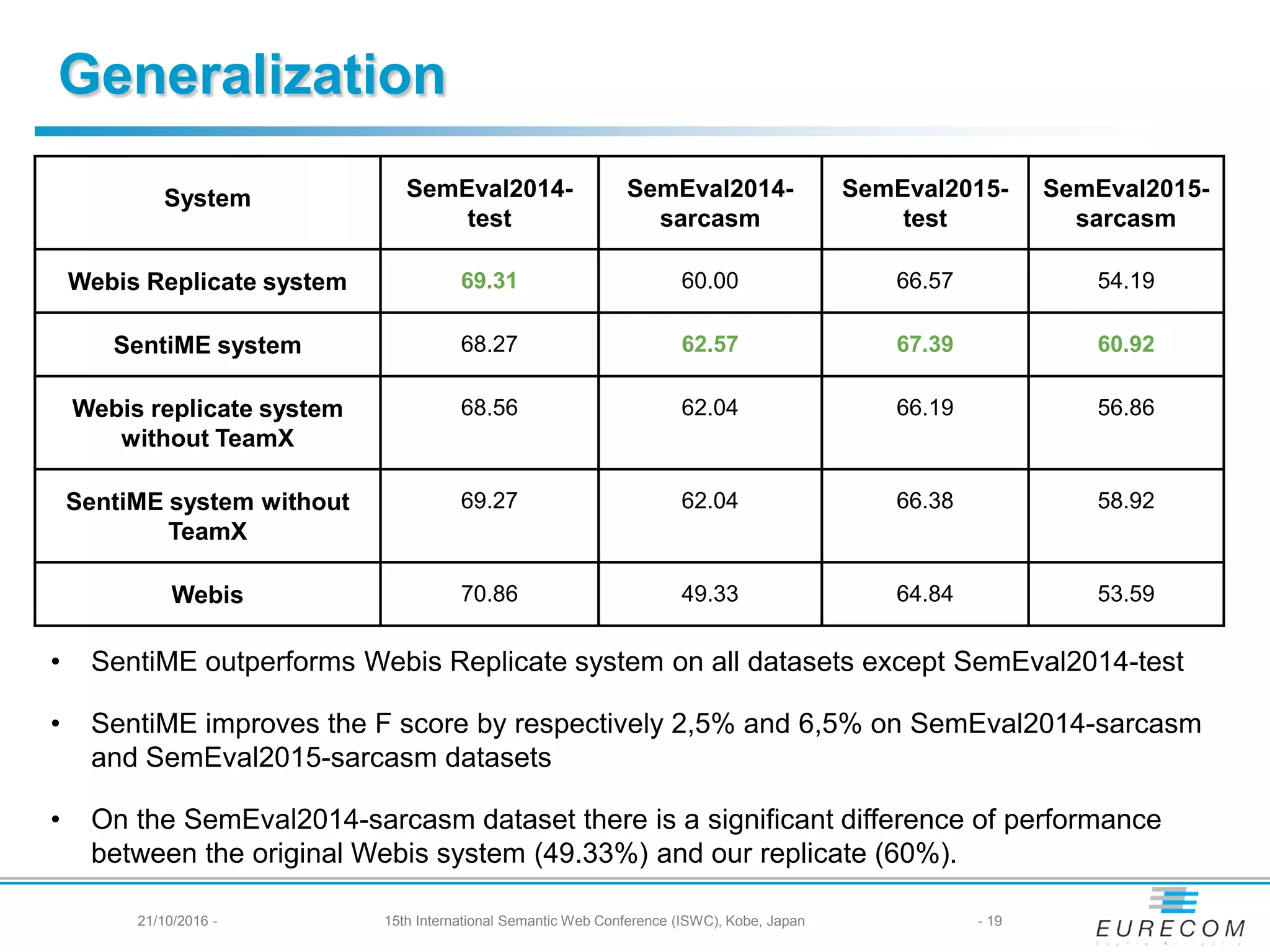

The study set out to replicate, and then improve on, the top-performing system from the SemEval Twitter sentiment analysis competitions held from 2013 to 2015. The researchers replicated the Webis system and obtained similar or slightly better performance. They then developed the SentiME system, which adds one more classifier (the Stanford Sentiment System) and uses bagging during training. SentiME outperformed Webis on the sarcasm detection datasets, improving the F-score by up to 6.5%, which indicates better generalization. The study also draws lessons for reproducibility, such as the importance of archiving the data, code, and software versions used.
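
To make the two ingredients named above more concrete, here is a minimal sketch of bagging (training on samples drawn with replacement) and of combining several sub-classifiers. It is not the SentiME code: the function names, the `ratio` parameter, the label set, and the choice to combine sub-classifiers by averaging their per-label confidence scores are all assumptions made for illustration, since the summary does not specify them.

```python
import random

# Hypothetical label set for three-way tweet sentiment classification.
LABELS = ("positive", "neutral", "negative")


def bootstrap_sample(examples, rng, ratio=1.0):
    """Draw a bagging sample: pick items with replacement from the training set.

    `ratio` (an illustrative parameter) scales the sample size relative to
    the size of the original training set.
    """
    size = int(len(examples) * ratio)
    return [rng.choice(examples) for _ in range(size)]


def average_scores(score_dicts):
    """Average the per-label confidence scores of several sub-classifiers."""
    count = len(score_dicts)
    return {
        label: sum(scores[label] for scores in score_dicts) / count
        for label in LABELS
    }


def ensemble_predict(score_dicts):
    """Return the label with the highest averaged confidence score."""
    averaged = average_scores(score_dicts)
    return max(LABELS, key=lambda label: averaged[label])


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the sample is repeatable
    training_set = [f"tweet-{i}" for i in range(10)]  # placeholder data
    sample = bootstrap_sample(training_set, rng, ratio=1.5)
    print(len(sample))  # 15 items, some of them repeated

    # Made-up confidence scores from three sub-classifiers for one tweet.
    scores = [
        {"positive": 0.6, "neutral": 0.3, "negative": 0.1},
        {"positive": 0.2, "neutral": 0.3, "negative": 0.5},
        {"positive": 0.1, "neutral": 0.2, "negative": 0.7},
    ]
    print(ensemble_predict(scores))  # negative
```

In a full pipeline each sub-classifier would be trained on its own bootstrap sample before its scores are combined. Adding another classifier, as SentiME does with the Stanford Sentiment System, would then amount to appending one more score dictionary to the list.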