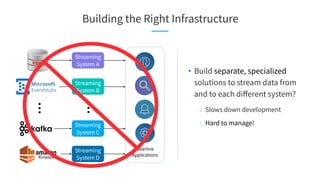

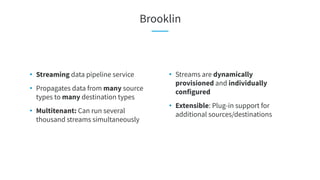

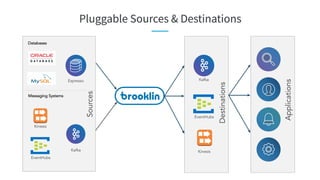

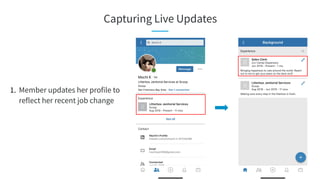

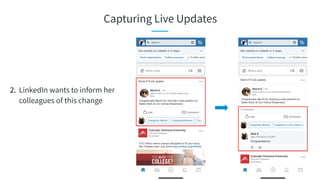

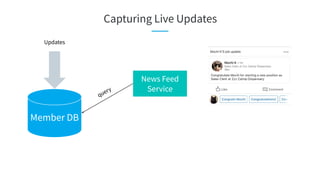

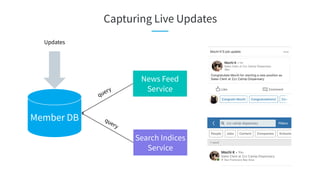

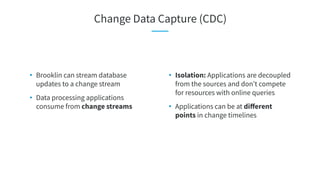

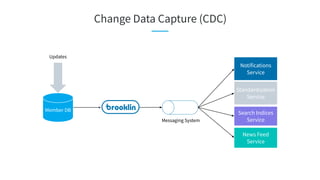

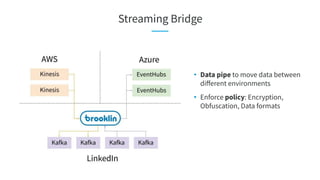

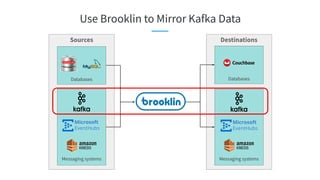

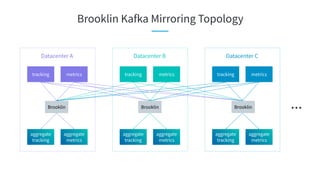

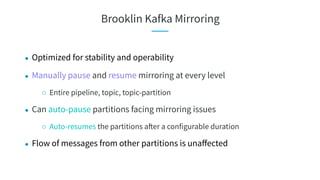

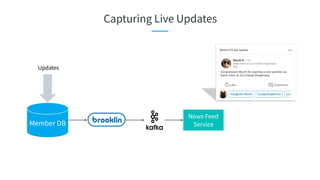

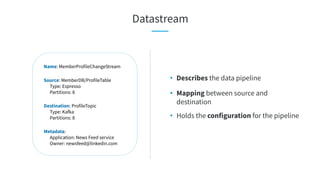

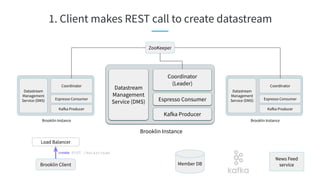

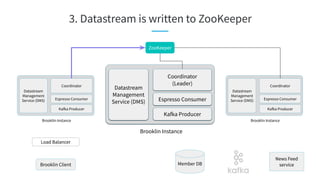

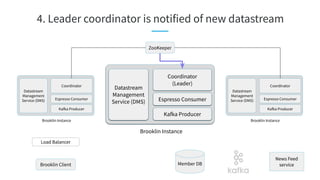

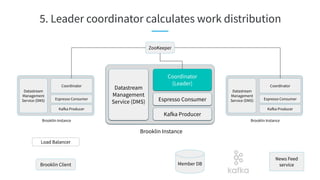

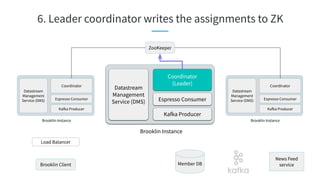

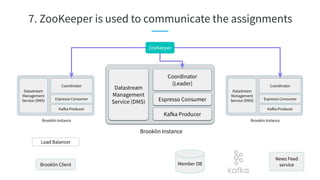

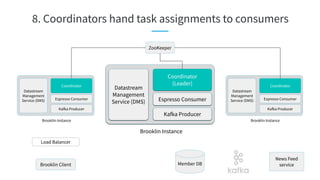

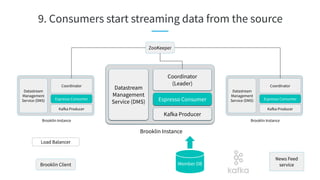

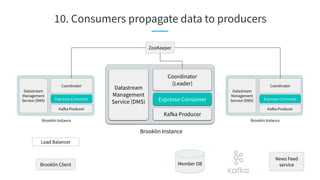

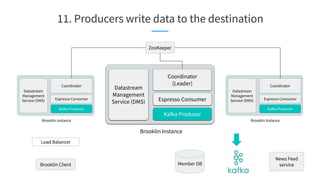

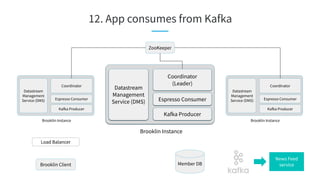

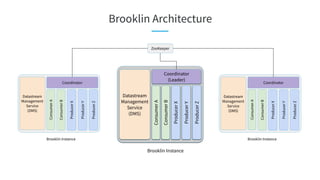

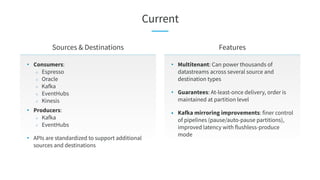

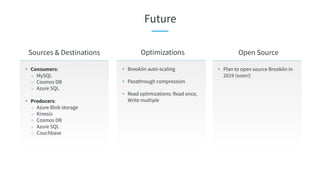

The document discusses Brooklin, a streaming data pipeline service that LinkedIn uses to deliver data in near real time across applications and systems. Brooklin replaced Kafka MirrorMaker at LinkedIn because of MirrorMaker's operational challenges. The document outlines scenarios for change data capture and for moving data between different environments, and it describes the architecture for creating and managing data streams. Brooklin is designed for extensibility, centralized management, and efficient data processing, and it supports a large volume of messages and a diverse range of data sources and destinations.