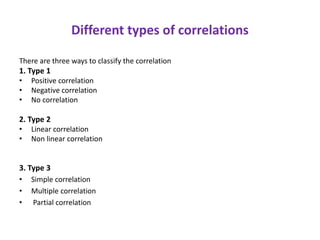

This document discusses the correlation coefficient and path coefficient analysis. It defines correlation as a statistical method for analyzing the relationship between two or more variables. Correlation measures the degree of relationship but does not establish causation. The document then covers the types of correlation: positive, negative, linear, non-linear, simple, multiple and partial. It also describes methods for measuring correlation, including scatter diagrams, Karl Pearson's coefficient, Spearman's rank correlation coefficient and the concurrent deviation method. Finally, it explains path analysis, which partitions correlations into direct and indirect effects when studying causal relationships between variables.

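Karl Pearson’s Correlation Coefficient

Karl Pearson (1857-1936) was a British mathematician and statistician.

The extent to which two variables vary together is called covariance. The correlation coefficient r expresses this co-variation on a standardized scale by dividing the covariance by the product of the two standard deviations:

$$r = \frac{\operatorname{Cov}(X, Y)}{\operatorname{SD}(X)\,\operatorname{SD}(Y)}$$

Written out in terms of deviations from the means $\bar{X}$ and $\bar{Y}$, where N is the number of pairs:

$$r = \frac{\frac{1}{N}\sum (X-\bar{X})(Y-\bar{Y})}{\sqrt{\frac{1}{N}\sum (X-\bar{X})^2}\;\sqrt{\frac{1}{N}\sum (Y-\bar{Y})^2}}$$

An alternative computational equation, which works directly from the raw sums and needs no deviations from the means, is:

$$r = \frac{N\sum XY - \left(\sum X\right)\left(\sum Y\right)}{\sqrt{\left[N\sum X^2 - \left(\sum X\right)^2\right]\left[N\sum Y^2 - \left(\sum Y\right)^2\right]}}$$

![Karl Pearson’s Correlation Coefficient](https://image.slidesharecdn.com/13943056-220929214441-feb39239/85/13943056-ppt-17-320.jpg)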
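As a minimal sketch, the deviation form and the computational form of Pearson's r can be computed side by side to confirm that they agree. This uses plain Python with only the standard library. The function names `pearson_definitional` and `pearson_computational`, and the sample data, are hypothetical illustrations that do not come from the slides. Population (1/N) moments are assumed, matching the 1/N factors in the deviation formula. Because the same divisor appears in both the numerator and the denominator, using N or N − 1 gives the same r.

```python
import math


def _check(xs, ys):
    # Both formulas need paired observations: equal lengths and at least 2 pairs.
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")


def pearson_definitional(xs, ys):
    """r = Cov(X, Y) / (SD(X) * SD(Y)), using population (1/N) moments."""
    _check(xs, ys)
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    sd_x = math.sqrt(sum((x - mx) ** 2 for x in xs) / n)
    sd_y = math.sqrt(sum((y - my) ** 2 for y in ys) / n)
    if sd_x == 0 or sd_y == 0:
        raise ValueError("r is undefined when either variable is constant")
    return cov / (sd_x * sd_y)


def pearson_computational(xs, ys):
    """r = [N*sum(XY) - sum(X)*sum(Y)] / sqrt([N*sum(X^2) - sum(X)^2][N*sum(Y^2) - sum(Y)^2])."""
    _check(xs, ys)
    n = len(xs)
    sum_x = sum(xs)
    sum_y = sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_x2 = sum(x * x for x in xs)
    sum_y2 = sum(y * y for y in ys)
    denom = math.sqrt((n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2))
    if denom == 0:
        raise ValueError("r is undefined when either variable is constant")
    return (n * sum_xy - sum_x * sum_y) / denom


if __name__ == "__main__":
    # Hypothetical sample data (N = 5 pairs), chosen only for illustration.
    x = [1, 2, 3, 4, 5]
    y = [2, 4, 5, 4, 5]
    print(round(pearson_definitional(x, y), 4))   # 0.7746
    print(round(pearson_computational(x, y), 4))  # 0.7746
```

For this data, N = 5, ΣX = 15, ΣY = 20, ΣXY = 66, ΣX² = 55 and ΣY² = 86. The computational form therefore gives r = 30 / √(50 × 30) ≈ 0.7746, which matches the deviation form. The raw-sum version suited hand calculation because it skips the step of subtracting the means. With large values in floating point, however, the subtractions in its denominator can lose precision, so the deviation form is usually the safer choice in code.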