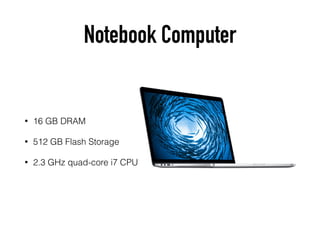

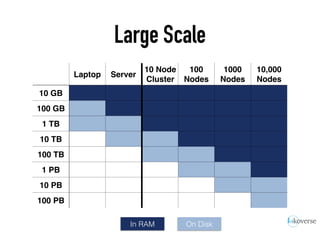

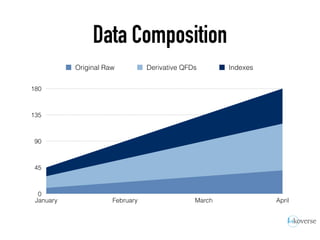

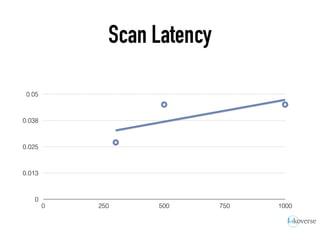

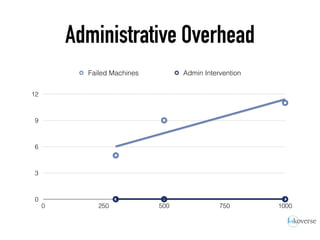

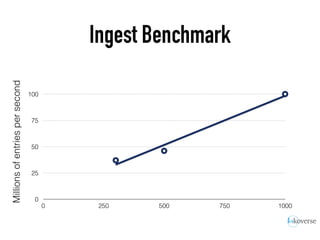

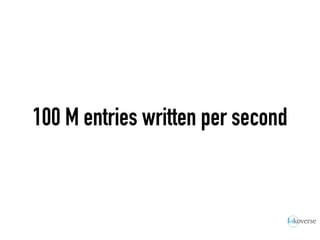

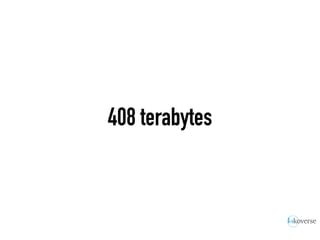

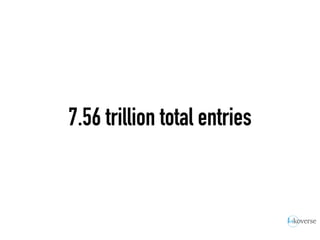

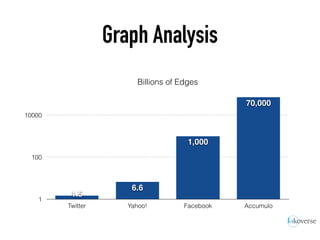

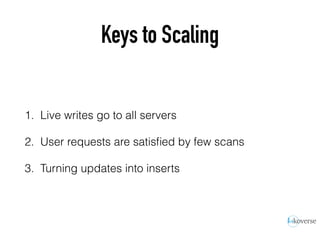

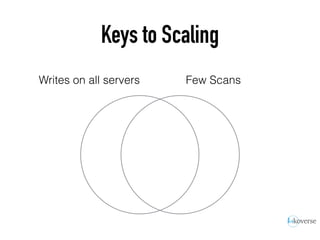

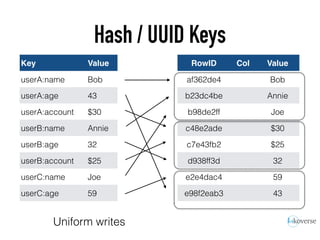

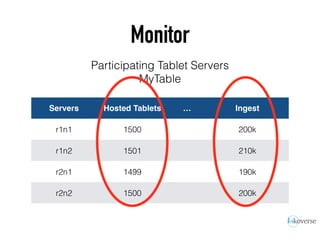

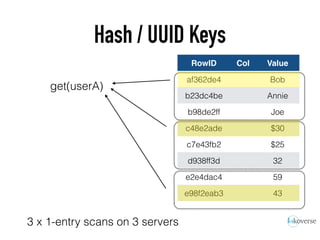

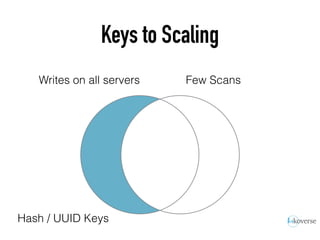

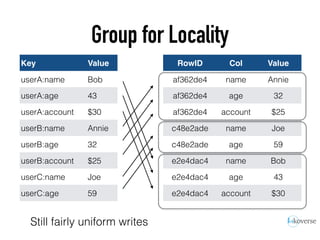

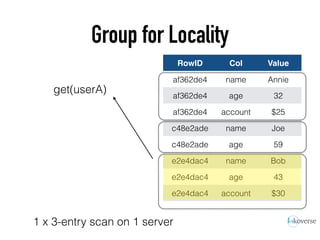

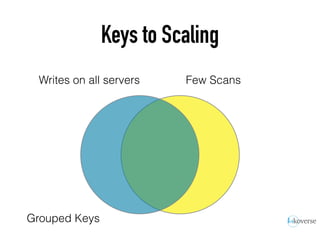

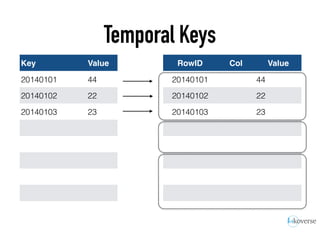

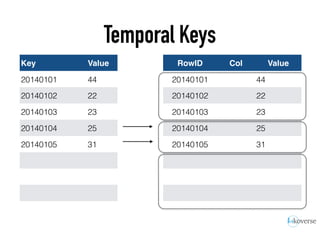

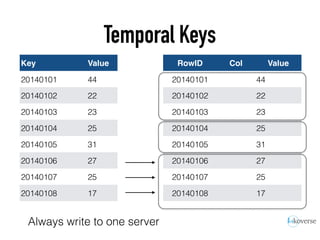

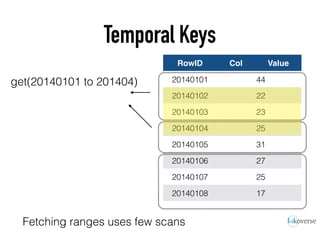

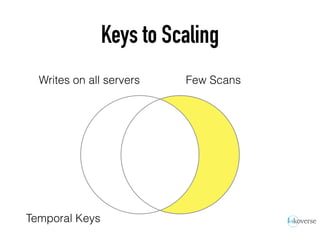

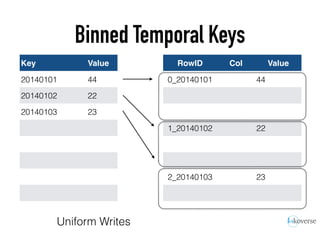

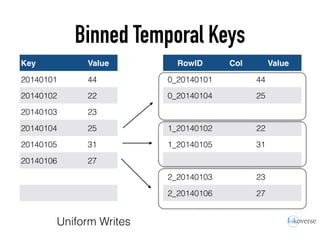

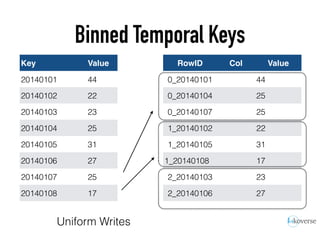

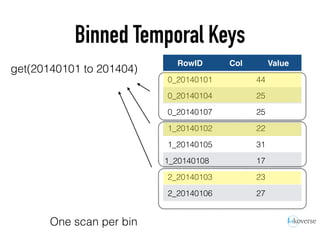

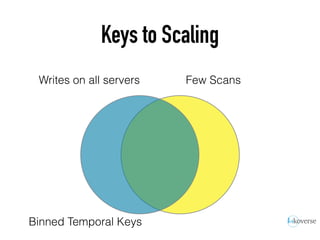

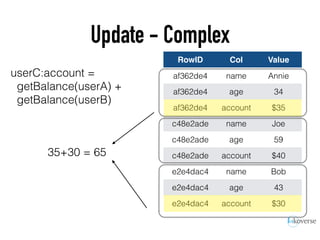

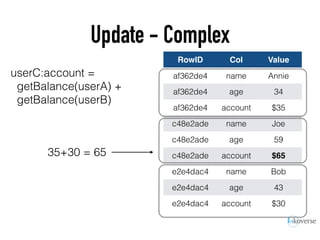

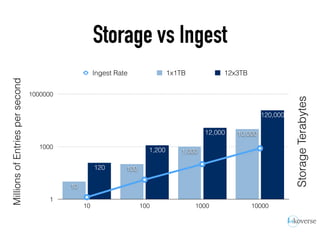

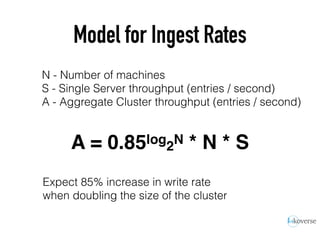

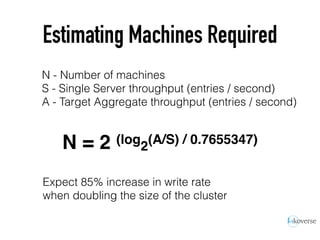

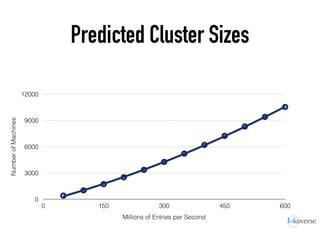

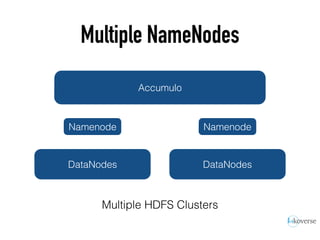

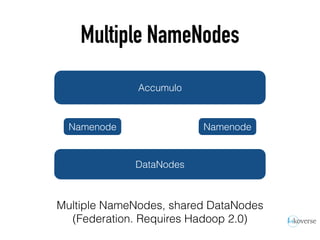

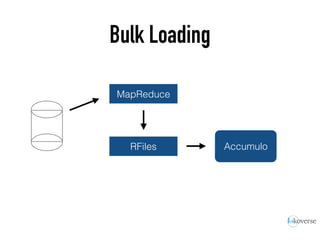

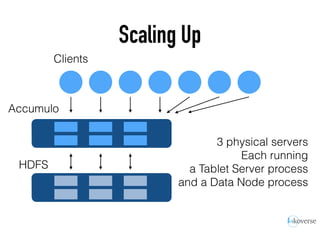

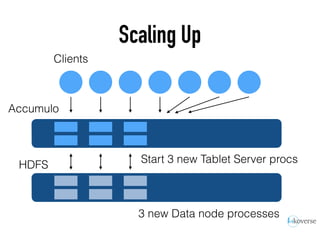

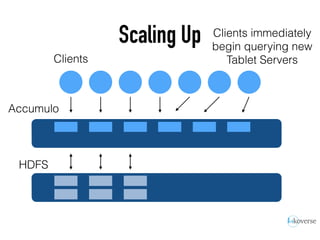

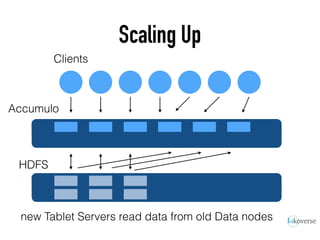

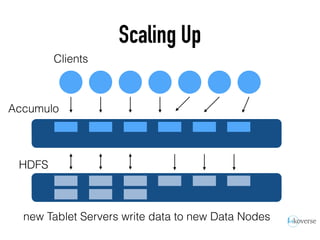

The document discusses scaling large Accumulo clusters. It presents performance benchmarks, the key factors that determine how well a cluster scales, and guidelines for optimal configuration. It emphasizes the importance of write parallelism, data organization, and effective use of header structures. It also covers hardware requirements, typical failure rates, and strategies for managing data across the infrastructure.