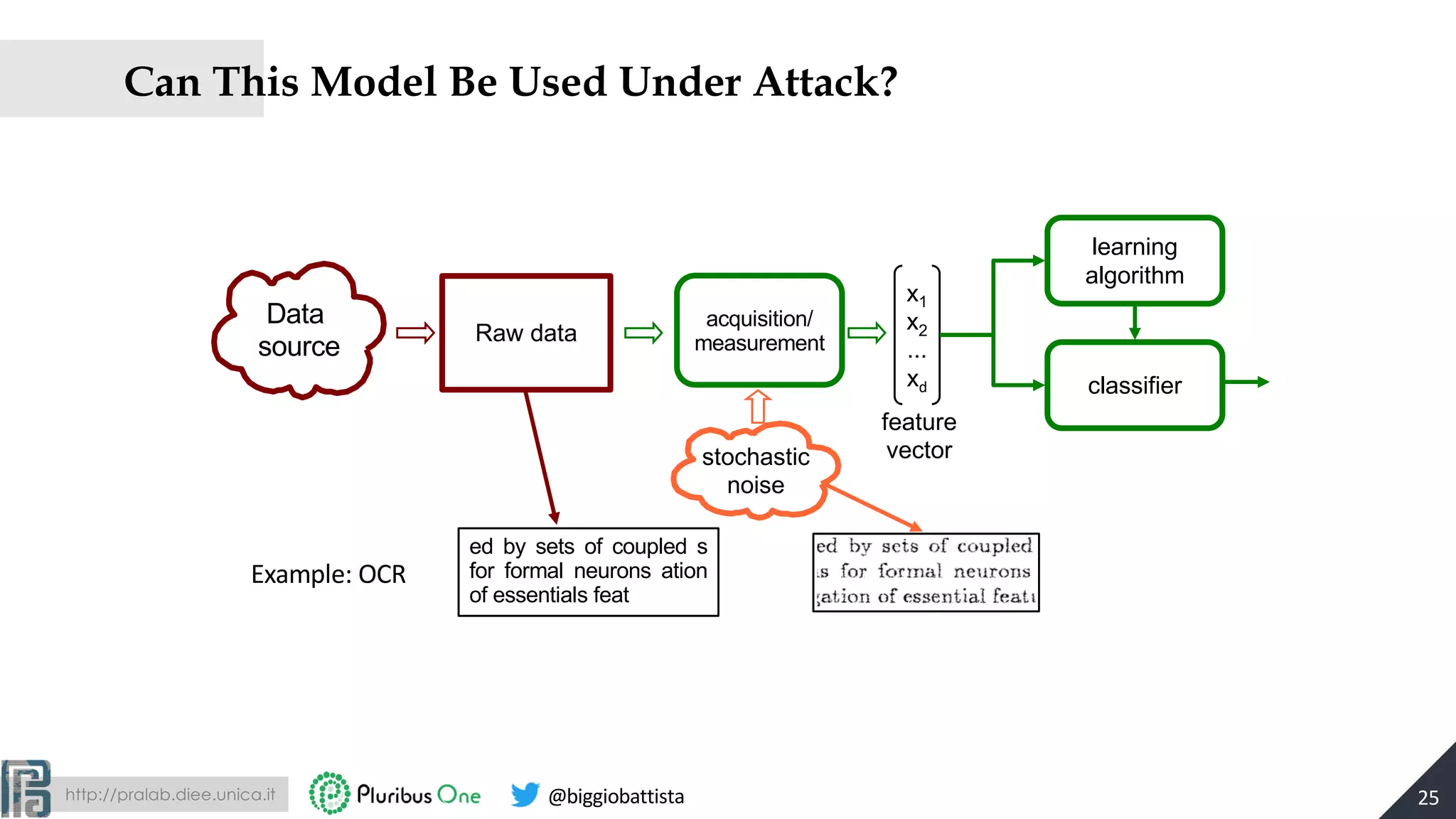

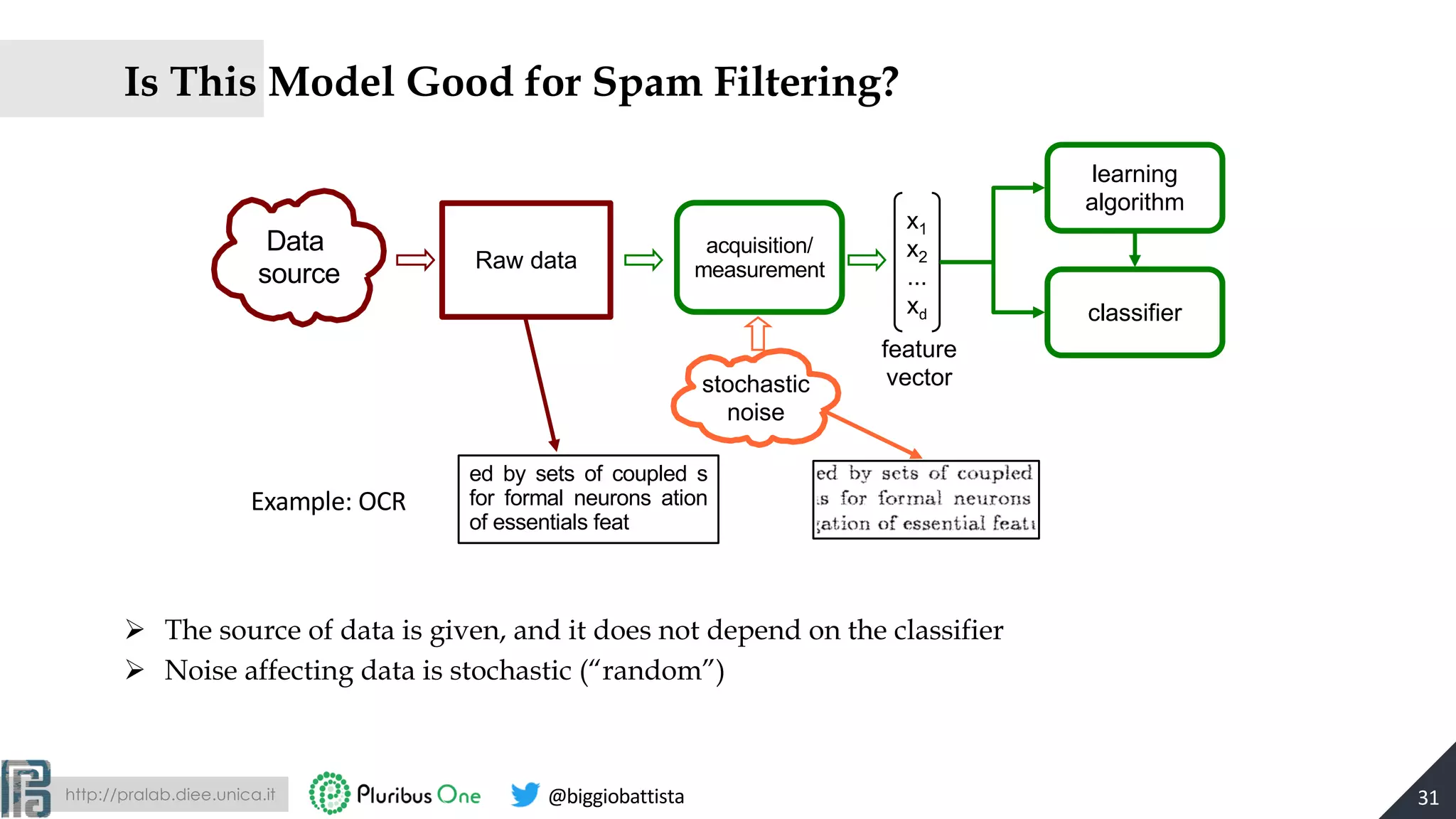

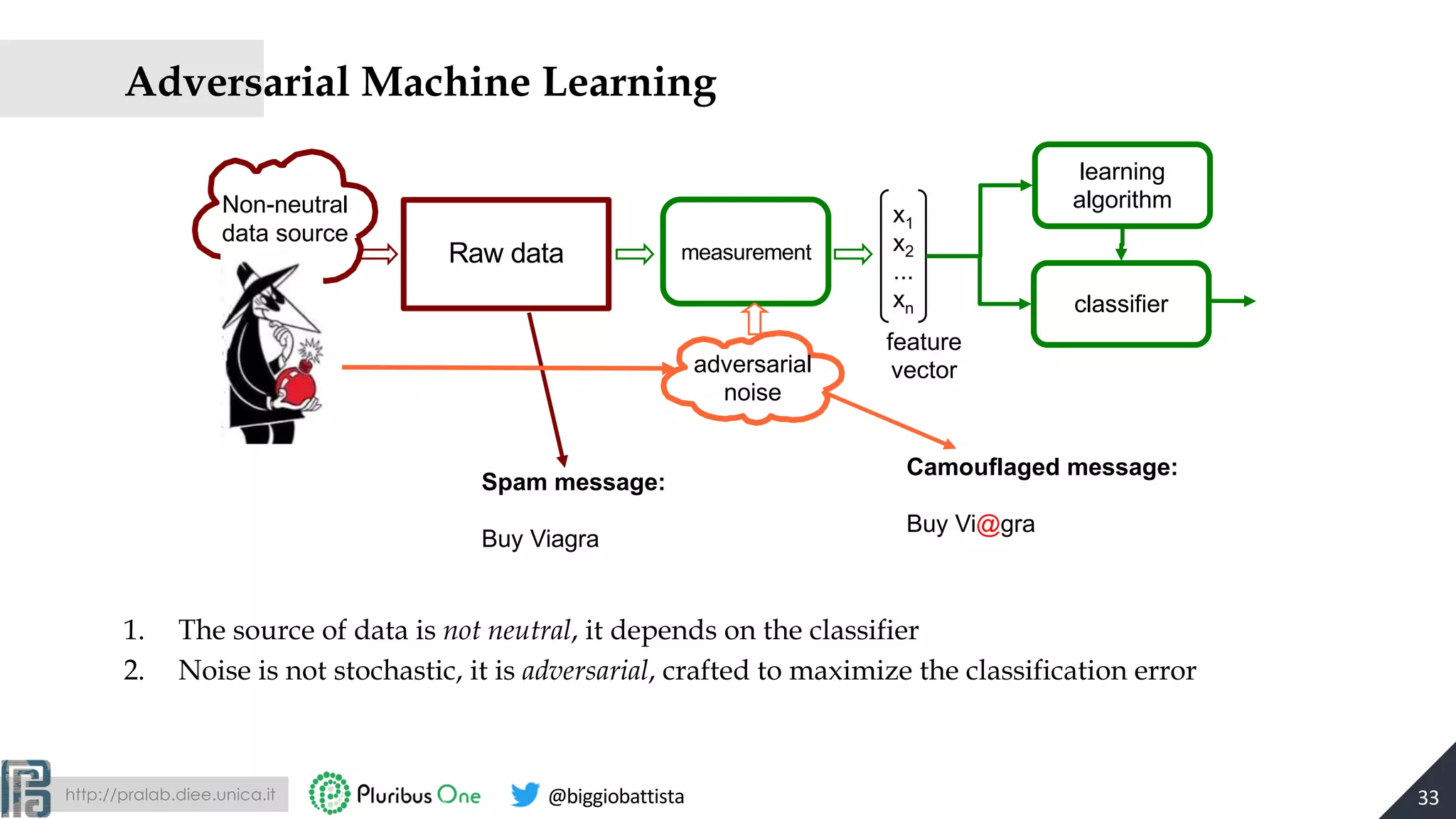

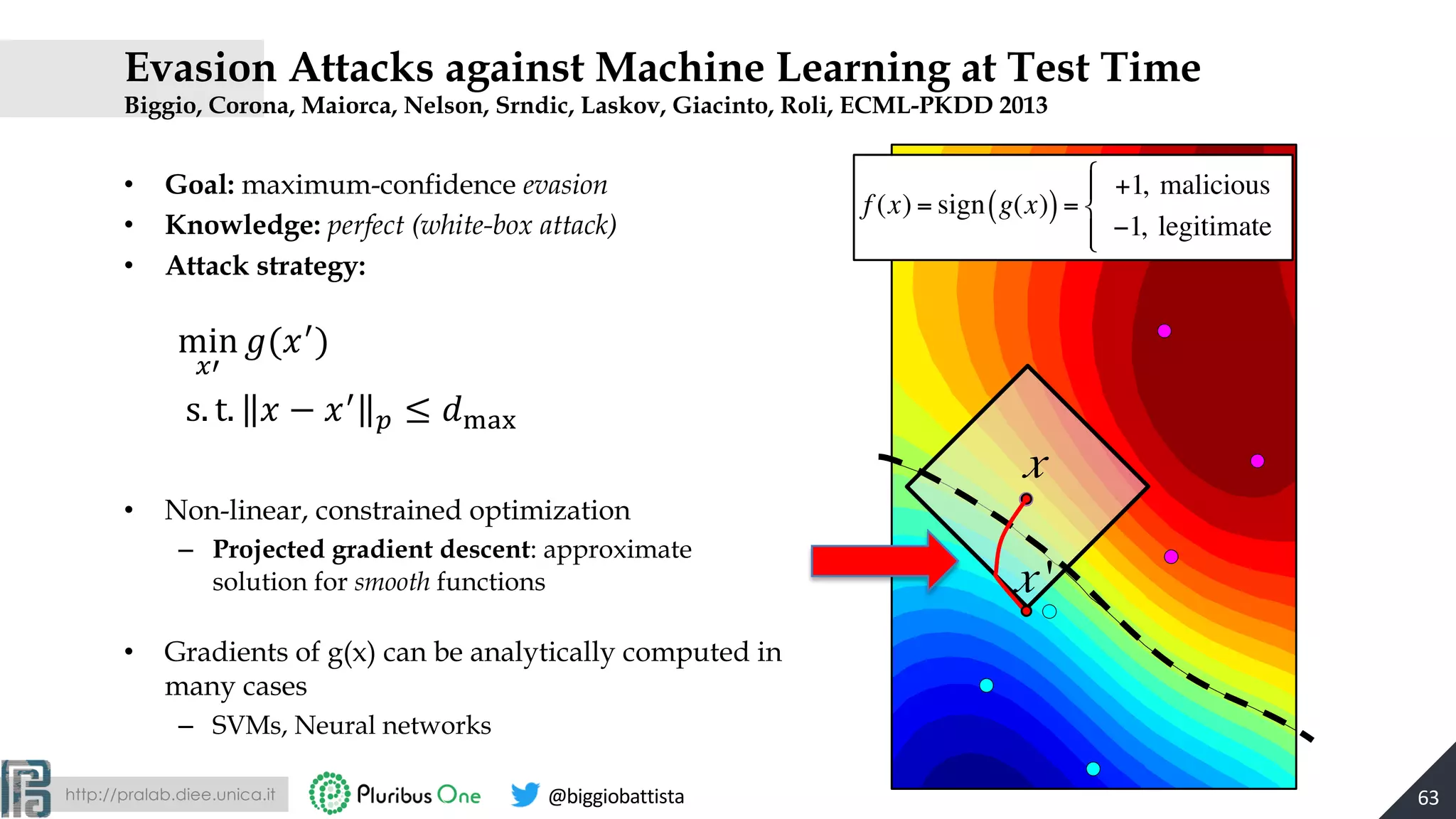

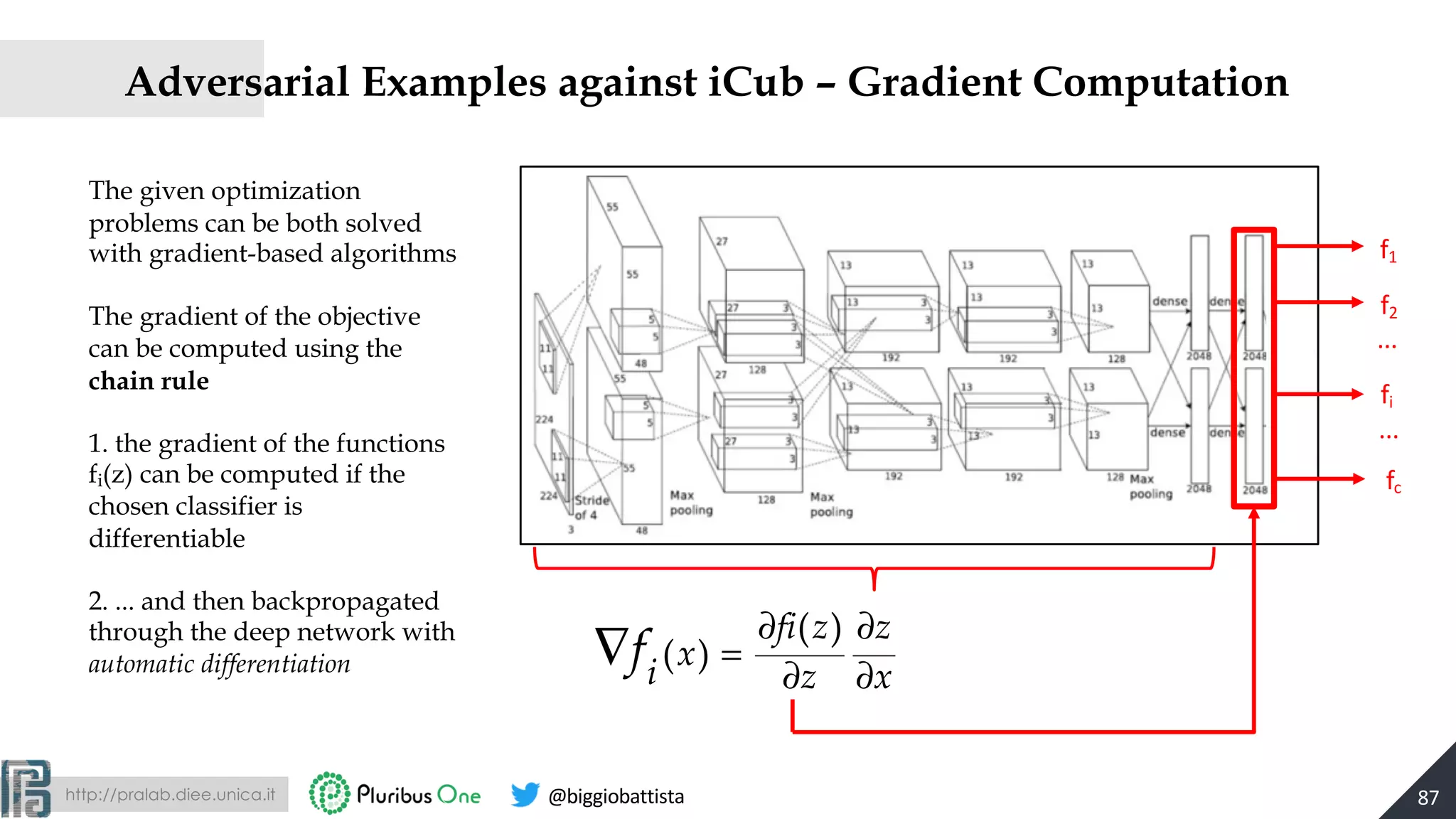

The document discusses the evolution of machine learning and the rise of adversarial machine learning over the past decade. It highlights the security risks posed by adversarial attacks on machine learning systems and emphasizes the need for adversary-aware machine learning to counteract these threats. Key concepts include the distinction between adversarial and stochastic noise, the arms race between adversaries and classifiers, and strategies for designing more secure machine learning systems.

![http://pralab.diee.unica.it @biggiobattista

Evasion of Deep Networks for EXE Malware Detection

• MalConv: convolutional deep network trained on raw bytes to detect EXE malware

• Our attack can evade it by adding a few padding bytes

21

[Kolosnjaji, Biggio, Roli et al., Adversarial Malware Binaries, EUSIPCO 2018]

[Demetrio, Biggio et al., Explaining Vulnerability of DL ..., ITASEC 2019]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-21-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

The Data Source Can Add “Good” Words

29

Total score = 1.0

From: spam@example.it

Buy Viagra !

conference meeting

< 5.0 (threshold)

Linear Classifier

Feature weights

buy = 1.0

viagra = 5.0

conference = -2.0

meeting = -3.0

Ham

✓ Adding good words is a typical spammers’ trick [Z. Jorgensen et al., JMLR 2008]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-29-2048.jpg)
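The arithmetic on the slide above can be reproduced with a minimal sketch. The weights and the threshold are the ones shown on the slide; the function names, and the choice to treat a score at or above the threshold as spam, are assumptions made for illustration.

```python
# Feature weights and threshold as shown on the slide; names are illustrative.
WEIGHTS = {"buy": 1.0, "viagra": 5.0, "conference": -2.0, "meeting": -3.0}
THRESHOLD = 5.0


def spam_score(words):
    """Sum the weights of the distinct known words in the message."""
    return sum(WEIGHTS.get(w, 0.0) for w in set(words))


def classify(words):
    """Assumed decision rule: spam if the score reaches the threshold, else ham."""
    return "spam" if spam_score(words) >= THRESHOLD else "ham"


original = ["buy", "viagra"]
padded = original + ["conference", "meeting"]  # "good" words added by the spammer

print(spam_score(original), classify(original))  # 6.0 spam
print(spam_score(padded), classify(padded))      # 1.0 ham
```

The two added words carry negative weights, so they pull the total score from 6.0 down to 1.0, below the 5.0 threshold, without removing any of the spam content.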

![http://pralab.diee.unica.it @biggiobattista

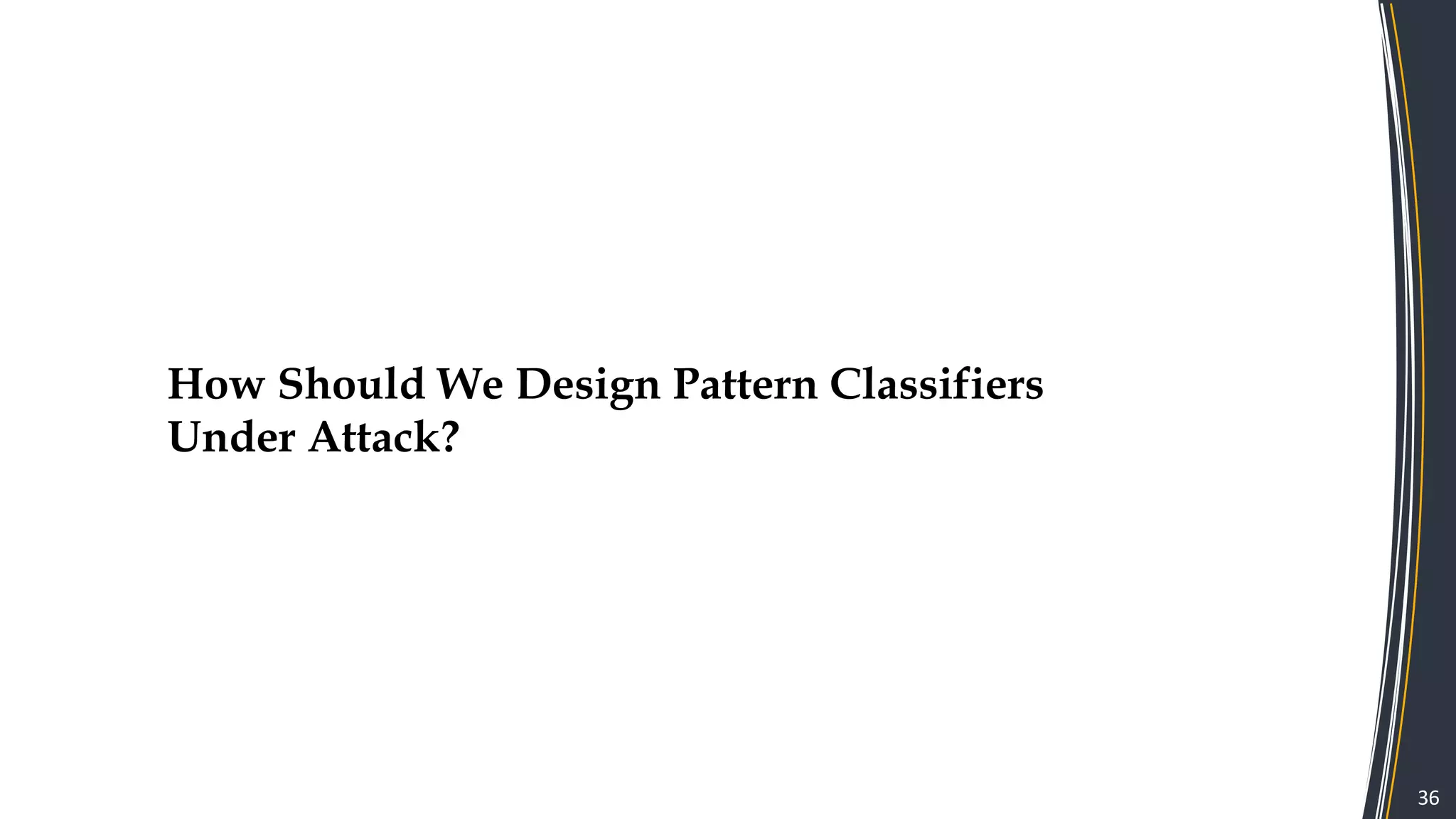

Adversary-aware Machine Learning

37

Machine learning systems should be aware of the arms race with the adversary

[Biggio, Fumera, Roli. Security evaluation of pattern classifiers under attack, IEEE TKDE, 2014]

Adversary: Analyze system → Devise and execute attack

System Designer: Analyze attack → Develop countermeasure](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-37-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Adversary-aware Machine Learning

45

Machine learning systems should be aware of the arms race with the adversary

[Biggio, Fumera, Roli. Security evaluation of pattern classifiers under attack, IEEE TKDE, 2014]

System Designer: Model adversary → Simulate attack → Evaluate attack’s impact → Develop countermeasure](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-45-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Adversary’s Goal

• To cause a security violation...

49

Integrity: Misclassifications that do not compromise normal system operation

Availability: Misclassifications that compromise normal system operation (denial of service)

Confidentiality / Privacy: Querying strategies that reveal confidential information on the learning model or its users

[Barreno et al., Can Machine Learning Be Secure? ASIACCS ‘06]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-49-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Adversary’s Knowledge

• Perfect-knowledge (white-box) attacks

– upper bound on the performance degradation under attack

50

Figure: system components TRAINING DATA, FEATURE REPRESENTATION (x1, x2, ..., xd) and LEARNING ALGORITHM (e.g., SVM), with a scatter plot of training samples

- Learning algorithm

- Parameters (e.g., feature weights)

- Feedback on decisions

[B. Biggio, G. Fumera, F. Roli, IEEE TKDE 2014]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-50-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

• Limited-knowledge Attacks

– Ranging from gray-box to black-box attacks

51

Figure: system components TRAINING DATA, FEATURE REPRESENTATION (x1, x2, ..., xd) and LEARNING ALGORITHM (e.g., SVM), with a scatter plot of training samples

- Learning algorithm

- Parameters (e.g., feature weights)

- Feedback on decisions

Adversary’s Knowledge

[B. Biggio, G. Fumera, F. Roli, IEEE TKDE 2014]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-51-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Kerckhoffs’ Principle

• Kerckhoffs’ Principle (Kerckhoffs 1883) states that the security of a system should not rely on unrealistic expectations of secrecy

– It’s the opposite of the principle of “security by obscurity”

• Secure systems should make minimal assumptions about what can realistically be kept secret from a potential attacker

• For machine learning systems, one could assume that the adversary is aware of the learning algorithm and can obtain some degree of information about the data used to train the learner

• But the best strategy is to assess system security under different levels of adversary’s knowledge

52[Joseph et al., Adversarial Machine Learning, Cambridge Univ. Press, 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-52-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Adversary’s Capability

53[B. Biggio, G. Fumera, F. Roli, IEEE TKDE 2014; M. Barreno et al., ML 2010]

• Attackers may manipulate training data and/or test data

TRAINING: Influence model at training time to cause subsequent errors at test time

poisoning attacks, backdoors

TEST: Manipulate malicious samples at test time to cause misclassifications

evasion attacks, adversarial examples](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-53-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

A Deliberate Poisoning Attack?

54

[http://exploringpossibilityspace.blogspot.it/2016/03/poor-software-qa-is-root-cause-of-tay.html]

Microsoft deployed Tay, an AI chatbot designed to talk to youngsters on Twitter, but after 16 hours the chatbot was shut down since it started to post racist and offensive comments.](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-54-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Adversary’s Capability

• Luckily, the adversary is not omnipotent; she is constrained…

55[R. Lippmann, Dagstuhl Workshop, 2012]

Email messages must be understandable by human readers

Malware must execute on a computer, usually exploiting a known vulnerability](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-55-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

• Constraints on data manipulation

– maximum number of samples that can be added to the training data

• the attacker usually controls only a small fraction of the training samples

– maximum amount of modifications

• application-specific constraints in feature space

• e.g., max. number of words that are modified in spam emails

56

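$d(x, x') \le d_{\max}$

Figure: feasible domain around the sample x in the (x1, x2) feature space, showing the classifier f(x) and the manipulated sample x'

TRAINING

TEST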

Adversary’s Capability

[B. Biggio, G. Fumera, F. Roli, IEEE TKDE 2014]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-56-2048.jpg)
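The constraint on the amount of modifications from the slide above, $d(x, x') \le d_{\max}$, can be illustrated with the spam example it mentions. This is a minimal sketch that assumes binary bag-of-words features and takes the distance $d$ to be the number of modified words; both choices, and the function names, are illustrative rather than prescribed by the slide.

```python
def hamming_distance(x, x_prime):
    """Number of features (here, words) whose binary value differs between x and x'."""
    if len(x) != len(x_prime):
        raise ValueError("feature vectors must have the same length")
    return sum(1 for a, b in zip(x, x_prime) if a != b)


def is_feasible(x, x_prime, d_max):
    """Check the constraint d(x, x') <= d_max for a candidate manipulation x'."""
    return hamming_distance(x, x_prime) <= d_max


x = [1, 1, 0, 0, 0]        # original spam email (1 = word present)
x_prime = [1, 1, 1, 1, 0]  # the same email with two "good" words added

print(is_feasible(x, x_prime, d_max=2))  # True
print(is_feasible(x, x_prime, d_max=1))  # False
```

An attack algorithm would only consider candidates for which `is_feasible` holds, which is what restricts the search to the feasible domain.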

![http://pralab.diee.unica.it @biggiobattista

Conservative Design

• The design and analysis of a system should avoid unnecessary or unreasonable assumptions on the adversary’s capability

– worst-case security evaluation

• Conversely, analysing the capabilities of an omnipotent adversary reveals little about a learning system’s behaviour against realistically-constrained attackers

• Again, the best strategy is to assess system security under different levels of adversary’s capability

57[Joseph et al., Adversarial Machine Learning, Cambridge Univ. Press, 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-57-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Detection of Malicious PDF Files

Srndic & Laskov, Detection of malicious PDF files based on hierarchical document structure, NDSS 2013

“The most aggressive evasion strategy we could conceive was successful for only 0.025% of malicious examples tested against a nonlinear SVM classifier with the RBF kernel [...].

Currently, we do not have a rigorous mathematical explanation for such a surprising robustness. Our intuition suggests that [...] the space of true features is “hidden behind” a complex nonlinear transformation which is mathematically hard to invert.

[...] the same attack staged against the linear classifier [...] had a 50% success rate; hence, the robustness of the RBF classifier must be rooted in its nonlinear transformation”

62](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-62-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Computing Descent Directions

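Support vector machines

$g(x) = \sum_i \alpha_i y_i k(x, x_i) + b, \quad \nabla g(x) = \sum_i \alpha_i y_i \nabla k(x, x_i)$

RBF kernel gradient: $\nabla k(x, x_i) = -2\gamma \exp\{-\gamma \|x - x_i\|^2\}(x - x_i)$

Neural networks

$g(x) = \left(1 + \exp\left(-\sum_{k=1}^{m} w_k \delta_k(x)\right)\right)^{-1}$

$\frac{\partial g(x)}{\partial x_f} = g(x)\,(1 - g(x)) \sum_{k=1}^{m} w_k \delta_k(x)\,(1 - \delta_k(x))\, v_{kf}$

Figure: network with inputs $x_1, \dots, x_d$, hidden units $\delta_1, \dots, \delta_m$ connected to the inputs by weights $v_{11}, \dots, v_{md}$, and output $g(x)$ connected to the hidden units by weights $w_1, \dots, w_m$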

[Biggio, Roli et al., ECML PKDD 2013] 64](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-64-2048.jpg)
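The SVM gradient on the slide above, $\nabla g(x) = \sum_i \alpha_i y_i \nabla k(x, x_i)$ with the RBF kernel gradient $-2\gamma \exp\{-\gamma \|x - x_i\|^2\}(x - x_i)$, can be checked numerically. The sketch below uses hand-picked support vectors and coefficients (not a trained model) and compares the closed-form gradient against central finite differences; all names and values are illustrative assumptions.

```python
import math


def rbf_kernel(x, xi, gamma):
    """k(x, x_i) = exp(-gamma * ||x - x_i||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, xi)))


def g(x, support_vectors, alpha_y, b, gamma):
    """Discriminant function g(x) = sum_i alpha_i y_i k(x, x_i) + b."""
    return sum(ay * rbf_kernel(x, xi, gamma)
               for ay, xi in zip(alpha_y, support_vectors)) + b


def grad_g(x, support_vectors, alpha_y, gamma):
    """Closed-form gradient: sum_i alpha_i y_i * (-2 gamma) k(x, x_i) (x - x_i)."""
    grad = [0.0] * len(x)
    for ay, xi in zip(alpha_y, support_vectors):
        coeff = -2.0 * gamma * ay * rbf_kernel(x, xi, gamma)
        for f in range(len(x)):
            grad[f] += coeff * (x[f] - xi[f])
    return grad


# Toy values chosen by hand for illustration (assumption, not a trained SVM).
support_vectors = [[0.0, 0.0], [1.0, 1.0], [2.0, 0.5]]
alpha_y = [0.7, -0.4, 0.9]  # products alpha_i * y_i
b, gamma = -0.1, 0.5
x = [0.8, 0.3]

analytic = grad_g(x, support_vectors, alpha_y, gamma)

# Central finite differences as an independent check of the formula.
h = 1e-6
numeric = []
for f in range(len(x)):
    x_plus, x_minus = list(x), list(x)
    x_plus[f] += h
    x_minus[f] -= h
    numeric.append((g(x_plus, support_vectors, alpha_y, b, gamma)
                    - g(x_minus, support_vectors, alpha_y, b, gamma)) / (2 * h))

print("analytic:", analytic)
print("numeric: ", numeric)
```

A gradient-based evasion attack would repeatedly step against this gradient to decrease $g(x)$ until the sample crosses the decision boundary.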

![http://pralab.diee.unica.it @biggiobattista

An Example on Handwritten Digits

• Nonlinear SVM (RBF kernel) to discriminate between ‘3’ and ‘7’

• Features: gray-level pixel values (28 x 28 image = 784 features)

Few modifications are enough to evade detection!

1st adversarial examples generated with gradient-based attacks date back to 2013! (one year before attacks on deep neural networks)

Figure panels: Before attack (3 vs 7); After attack, g(x)=0; After attack, last iter.; plot of g(x) against the number of iterations. After attack, the digit is misclassified as 7

65[Biggio, Roli et al., ECML PKDD 2013]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-65-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Bounding the Adversary’s Knowledge

Limited-knowledge (gray/black-box) attacks

• Only feature representation and (possibly) learning algorithm are known

• Surrogate data sampled from the same distribution as the classifier’s training data

• Classifier’s feedback to label surrogate data

Figure: surrogate training data is sampled from the data distribution PD(X,Y); queries are sent to the targeted classifier f(x) to get labels; a surrogate classifier f’(x) is learned from the labeled surrogate data

This is the same underlying idea behind substitute models and black-box attacks (transferability) [N. Papernot et al., IEEE Euro S&P ’16; N. Papernot et al., ASIACCS’17]

66[Biggio, Roli et al., ECML PKDD 2013]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-66-2048.jpg)
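The query-and-label loop from the slide above can be sketched on a toy problem. The example assumes a one-dimensional feature, a hidden threshold classifier as the target, and a midpoint rule as the surrogate learner; these are simplifications chosen so that the sketch stays short, not the setup used in the cited work.

```python
import random


def target_classifier(x):
    """Black-box target f(x): the attacker can query it but not inspect it.
    The hidden threshold 0.37 is an arbitrary value for this toy example."""
    return 1 if x > 0.37 else 0


def learn_surrogate(samples, labels):
    """Learn a 1-D threshold classifier f'(x) from labeled surrogate data."""
    negatives = [x for x, y in zip(samples, labels) if y == 0]
    positives = [x for x, y in zip(samples, labels) if y == 1]
    if not negatives or not positives:
        raise ValueError("surrogate data must contain both classes")
    # Place the threshold halfway between the two classes.
    return (max(negatives) + min(positives)) / 2.0


rng = random.Random(0)
surrogate_x = [rng.random() for _ in range(50)]            # surrogate data
surrogate_y = [target_classifier(x) for x in surrogate_x]  # send queries, get labels
threshold = learn_surrogate(surrogate_x, surrogate_y)      # learn f'(x)


def surrogate_classifier(x):
    """Surrogate f'(x) that the attacker can inspect and attack offline."""
    return 1 if x > threshold else 0


agreement = sum(
    surrogate_classifier(x) == y for x, y in zip(surrogate_x, surrogate_y)
) / len(surrogate_x)
print(f"surrogate threshold: {threshold:.3f}, agreement on queried points: {agreement:.2f}")
```

Attacks crafted against `surrogate_classifier` can then be replayed against the target; whether they succeed depends on how closely the two decision boundaries match.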

![http://pralab.diee.unica.it @biggiobattista

Recent Results on Android Malware Detection

• Drebin: Arp et al., NDSS 2014

– Android malware detection directly on the mobile phone

– Linear SVM trained on features extracted from static code analysis

[Demontis, Biggio et al., IEEE TDSC 2017]

Figure: an Android app (apk) is mapped to a binary feature vector x (0, 1, ..., 1, 0) and classified by f(x) as malware or benign in the (x1, x2) feature space

67](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-67-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Recent Results on Android Malware Detection

• Dataset (Drebin): 5,600 malware and 121,000 benign apps (TR: 30K, TS: 60K)

• Detection rate at FP=1% vs max. number of manipulated features (averaged on 10 runs)

– Perfect knowledge (PK) white-box attack; Limited knowledge (LK) black-box attack

68[Demontis, Biggio et al., IEEE TDSC 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-68-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Why Is Machine Learning So Vulnerable?

• Learning algorithms tend to overemphasize some features to discriminate among classes

• Large sensitivity to changes of such input features: $\nabla_x g(x)$

• Different classifiers tend to find the same set of relevant features

– that is why attacks can transfer across models!

70[Melis et al., Explaining Black-box Android Malware Detection, EUSIPCO 2018]

Figure: g(x) evaluated at x and at the manipulated sample x’, across the positive and negative regions](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-70-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

The Discovery of Adversarial Examples

72

... we find that deep neural networks learn input-output mappings that are fairly discontinuous to a significant extent. We can cause the network to misclassify an image by applying a certain hardly perceptible perturbation, which is found by maximizing the network’s prediction error ...

[Szegedy, Goodfellow et al., Intriguing Properties of NNs, ICLR 2014, ArXiv 2013]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-72-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Adversarial Examples and Deep Learning

• C. Szegedy et al. (ICLR 2014) independently developed a gradient-based attack against deep neural networks

– minimally-perturbed adversarial examples

73[Szegedy, Goodfellow et al., Intriguing Properties of NNs, ICLR 2014, ArXiv 2013]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-73-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Creation of Adversarial Examples

School Bus (x) + Adversarial Noise (r) → Ostrich, Struthio Camelus, with $f(x) \neq l$

The adversarial image x + r is visually hard to distinguish from x

Informally speaking, the solution x + r is the closest image to x classified as l by f

The solution is approximated using a box-constrained limited-memory BFGS

74[Szegedy, Goodfellow et al., Intriguing Properties of NNs, ICLR 2014, ArXiv 2013]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-74-2048.jpg)
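The informal statement on the slide above ("the closest image to x classified as l by f") corresponds to the following optimization problem, written here following the cited Szegedy et al. paper. The symbols $m$ (number of input pixels) and $c$ (a trade-off constant found by line search) do not appear on the slide and are introduced for this sketch.

$$
\min_{r} \; \|r\|_2 \quad \text{s.t.} \quad f(x + r) = l, \quad x + r \in [0, 1]^m
$$

Since this problem is hard to solve exactly, it is approximated with a penalized version, which is the one handed to box-constrained L-BFGS:

$$
\min_{r} \; c\,|r| + \mathrm{loss}_f(x + r, l) \quad \text{s.t.} \quad x + r \in [0, 1]^m
$$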

![Many Black Swans After 2014…

[Search https://arxiv.org with keywords “adversarial examples”]

75

• Several defenses have been proposed against adversarial examples, and more powerful attacks have been developed to show that they are ineffective. Remember the arms race?

• Most of these attacks are modifications to the optimization problems reported for evasion attacks / adversarial examples, using different gradient-based solution algorithms, initializations and stopping conditions.

• Most popular attack algorithms: FGSM (Goodfellow et al.), JSMA (Papernot et al.), CW (Carlini & Wagner, and follow-up versions)](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-75-2048.jpg)
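Of the attack algorithms named on the slide above, FGSM is the simplest to write down: it takes a single step of size eps along the sign of the loss gradient with respect to the input. The sketch below applies that rule to a toy logistic-regression model; the weights, the input, and the function names are made up for illustration, and no box constraint on the input is enforced.

```python
import math


def predict_proba(x, w, b):
    """Probability of class 1 under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0 or +1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, y, w, b, eps):
    """One FGSM step: x' = x + eps * sign(gradient of the loss w.r.t. x).
    For the cross-entropy loss of logistic regression the gradient is (p - y) * w."""
    p = predict_proba(x, w, b)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Toy model and sample (assumed values).
w, b = [2.0, -1.0, 0.5], -0.2
x, y = [0.6, 0.1, 0.4], 1

x_adv = fgsm(x, y, w, b, eps=0.5)
print("clean prediction:      ", predict_proba(x, w, b) > 0.5)      # True (class 1)
print("adversarial prediction:", predict_proba(x_adv, w, b) > 0.5)  # False (class 0)
```

For this linear model the step lowers the score by eps times the L1 norm of `w`, which is why a bounded per-feature change is enough to flip the decision.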

![http://pralab.diee.unica.it @biggiobattista

Why Are Adversarial Perturbations against Deep Networks Imperceptible?

• Large sensitivity of g(x) to input changes

– i.e., the input gradient $\nabla_x g(x)$ has a large norm (scales with input dimensions!)

– Thus, even small modifications along that direction will cause large changes in the predictions

77

[Simon-Gabriel et al., Adversarial vulnerability of NNs increases with input dimension, arXiv 2018]

Figure: g(x) at x and at the perturbed sample x’, with the input gradient $\nabla_x g(x)$](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-77-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

• Regularization also impacts (reduces) the size of input gradients

– High regularization requires larger perturbations to mislead detection

– e.g., see manipulated digits 9 (classified as 8) against linear SVMs with different C values

Regularization, Input Gradients and Adversarial Robustness

78[Demontis, Biggio, et al., Why Do Adversarial Attacks Transfer? ... USENIX 2019]

Figure: high regularization, large perturbation (C=0.001, eps=1.7); low regularization, imperceptible perturbation (C=1.0, eps=0.47)](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-78-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Regularization, Input Gradients and Adversarial Robustness

Figure: size of input gradients and test error (no attack, and ε = 0.3) as a function of regularization (weight decay), ranging from high complexity to low complexity

79[Demontis, Biggio, et al., Why Do Adversarial Attacks Transfer? ... USENIX 2019]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-79-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Why Do Adversarial Attacks Transfer?

Regularization and Transferability

80

• Excerpt of our experimental results for evasion attacks on Android malware (DREBIN)

– ‘x’ for low-complexity (strongly-regularized) models

– ‘o’ for high-complexity (weakly-regularized) models

[Demontis, Biggio, et al., Why Do Adversarial Attacks Transfer? ... USENIX 2019]

Figure, first panel, white-box attacks (target): evasion rate (ε = 5) against size of input gradients (S), for SVM, logistic, ridge, SVM-RBF and NN. Second panel, black-box attacks (surrogate): transfer rate (ε = 30) against variability of loss landscape (V)](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-80-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Is Deep Learning Safe for Robot Vision?

• Evasion attacks against the iCub humanoid robot

– Deep Neural Network used for visual object recognition

82[Melis, Biggio et al., Is Deep Learning Safe for Robot Vision? ICCVW ViPAR 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-82-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista [http://old.iit.it/projects/data-sets]

iCubWorld28 Data Set: Example Images

83](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-83-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

From Binary to Multiclass Evasion

• In multiclass problems, classification errors occur in different classes.

• Thus, the attacker may aim:

1. to have a sample misclassified as any class different from the true class (error-generic attacks)

2. to have a sample misclassified as a specific class (error-specific attacks)

84

Figure: two panels over the classes cup, sponge, dish and detergent, one for error-generic attacks (any class) and one for error-specific attacks

[Melis, Biggio et al., Is Deep Learning Safe for Robot Vision? ICCVW ViPAR 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-84-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Error-generic Evasion

• Error-generic evasion

– k is the true class (blue)

– l is the competing (closest) class in feature space (red)

• The attack minimizes the objective to have the sample misclassified as the closest class (could be any!)

85

Figure: indiscriminate evasion

[Melis, Biggio et al., Is Deep Learning Safe for Robot Vision? ICCVW ViPAR 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-85-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

• Error-specific evasion

– k is the target class (green)

– l is the competing class (initially, the blue class)

• The attack maximizes the objective to have the sample misclassified as the target class

Error-specific Evasion

86

Figure: targeted evasion

[Melis, Biggio et al., Is Deep Learning Safe for Robot Vision? ICCVW ViPAR 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-86-2048.jpg)
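The objective formulas on the two slides above did not survive extraction. One common way to write such an objective, assumed here for illustration, is the margin $\Omega(x) = f_k(x) - \max_{l \neq k} f_l(x)$ between the score of class $k$ and the best competing class: an error-generic attack minimizes it with $k$ set to the true class, while an error-specific attack maximizes it with $k$ set to the target class. The class scores below are made up.

```python
CLASSES = ["cup", "sponge", "dish", "detergent"]


def evasion_objective(scores, k):
    """Assumed objective: score of class k minus the best score among the other classes."""
    competing = max(s for l, s in enumerate(scores) if l != k)
    return scores[k] - competing


# Hypothetical class scores f_l(x) for an image of a detergent bottle.
scores = [0.1, 0.2, 0.1, 0.6]

true_class = CLASSES.index("detergent")
target_class = CLASSES.index("cup")

# Error-generic: k is the true class; the attack minimizes the objective,
# and the sample is misclassified once the value becomes negative.
generic = evasion_objective(scores, true_class)

# Error-specific: k is the target class; the attack maximizes the objective,
# and the sample is classified as the target once the value becomes positive.
specific = evasion_objective(scores, target_class)

print("error-generic objective > 0 (still correctly classified):", generic > 0)
print("error-specific objective < 0 (not yet classified as cup):", specific < 0)
```

In both cases the search would still be restricted to the feasible domain defined by the adversary's capability.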

![http://pralab.diee.unica.it @biggiobattista

Example of Adversarial Images against iCub

An adversarial example from class laundry-detergent, modified by the proposed algorithm to be misclassified as cup

88[Melis, Biggio et al., Is Deep Learning Safe for Robot Vision? ICCVW ViPAR 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-88-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

The “Sticker” Attack against iCub

Adversarial example generated by manipulating only a specific region, to simulate a sticker that could be applied to the real-world object.

This image is classified as cup.

89[Melis, Biggio et al., Is Deep Learning Safe for Robot Vision? ICCVW ViPAR 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-89-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Experiments on Android Malware

• Infinity-norm regularization is the optimal regularizer against sparse evasion attacks

– Sparse evasion attacks penalize the $\ell_1$ norm of the perturbation, promoting the manipulation of only a few features

Results on Adversarial Android Malware

[Demontis, Biggio et al., Yes, ML Can Be More Secure!..., IEEE TDSC 2017]

Figure: absolute weight values $|w|$ in descending order

Why? It bounds the maximum weight absolute values!

Sec-SVM: $\min_{w,b} \|w\|_\infty + C \sum_i \max(0, 1 - y_i f(x_i)), \quad \|w\|_\infty = \max_{i=1,\dots,d} |w_i|$

93](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-93-2048.jpg)
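The Sec-SVM objective from the slide above, $\|w\|_\infty + C \sum_i \max(0, 1 - y_i f(x_i))$, can be evaluated directly to see why it favors evenly spread weights. The sketch below only computes the objective for two hand-picked weight vectors on a two-sample toy dataset; it does not solve the minimization, and all names and values are illustrative assumptions.

```python
def linear_score(w, b, x):
    """f(x) = w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def hinge(y, score):
    """Hinge loss max(0, 1 - y * f(x))."""
    return max(0.0, 1.0 - y * score)


def sec_svm_objective(w, b, X, y, C):
    """Infinity-norm regularizer plus C times the summed hinge loss."""
    linf = max(abs(wi) for wi in w)
    loss = sum(hinge(yi, linear_score(w, b, xi)) for xi, yi in zip(X, y))
    return linf + C * loss


# Toy data: one malware sample with four features set, one benign sample with none.
X = [[1, 1, 1, 1], [0, 0, 0, 0]]
y = [+1, -1]
b, C = -1.0, 1.0

w_uneven = [2.0, 0.0, 0.0, 0.0]  # relies on a single feature
w_even = [0.5, 0.5, 0.5, 0.5]    # spreads the same total weight evenly

print(sec_svm_objective(w_uneven, b, X, y, C))  # 2.0
print(sec_svm_objective(w_even, b, X, y, C))    # 0.5
```

Both weight vectors classify the two samples with zero hinge loss, but the even one has the smaller objective. It is also harder to evade with a sparse attack: removing one feature changes the score by at most 0.5 instead of 2.0.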

![http://pralab.diee.unica.it @biggiobattista

Detecting & Rejecting Adversarial Examples

input perturbation (Euclidean distance)

97[Melis, Biggio et al., Is Deep Learning Safe for Robot Vision? ICCVW ViPAR 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-97-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista [S. Sabour at al., ICLR 2016]

Why Rejection (in Representation Space) Is Not Enough?

98](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-98-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

• Goal: to maximize classification error

• Knowledge: perfect / white-box attack

• Capability: injecting poisoning samples into TR

• Strategy: find an optimal attack point xc in TR that maximizes classification error

Poisoning Attacks against Machine Learning

Figure: two panels reporting classification error = 0.022 and classification error = 0.039, and a plot of the classification error as a function of the attack point xc

[Biggio, Nelson, Laskov. Poisoning attacks against SVMs. ICML, 2012] 105](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-105-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Poisoning is a Bilevel Optimization Problem

• Attacker’s objective

– to maximize generalization error on untainted data, w.r.t. poisoning point xc

• Poisoning problem against (linear) SVMs:

Loss estimated on validation data

(no attack points!)

Algorithm is trained on surrogate data

(including the attack point)

[Biggio, Nelson, Laskov. Poisoning attacks against SVMs. ICML, 2012]

[Xiao, Biggio, Roli et al., Is feature selection secure against training data poisoning? ICML, 2015]

[Munoz-Gonzalez, Biggio, Roli et al., Towards poisoning of deep learning..., AISec 2017]

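$\max_{x_c} \; L(D_{\text{val}}, f^\star) \quad \text{s.t.} \quad f^\star = \arg\min_{f} \mathcal{L}(D_{\text{tr}} \cup (x_c, y_c), f)$

$\max_{x_c} \; \sum_{j=1}^{m} \max(0, 1 - y_j f^\star(x_j)) \quad \text{s.t.} \quad f^\star = \arg\min_{w,b} \; \frac{1}{2} w^T w + C \sum_{i=1}^{n} \max(0, 1 - y_i f(x_i)) + C \max(0, 1 - y_c f(x_c))$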

106](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-106-2048.jpg)
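The bilevel structure from the slide above (an outer maximization over the attack point, an inner training problem that includes it) can be illustrated by brute force. This sketch swaps in a one-dimensional nearest-centroid classifier for the SVM, the 0-1 error for the hinge loss, and a grid search for the gradient-based solver, so it shows the structure of the problem rather than the attack from the cited papers. All data and names are made up.

```python
def train_centroids(data):
    """Inner problem: fit a nearest-centroid classifier (closed-form training)."""
    means = {}
    for label in (-1, +1):
        points = [x for x, y in data if y == label]
        means[label] = sum(points) / len(points)
    return means


def predict(means, x):
    """Assign x to the class with the closest centroid (ties go to class -1)."""
    return +1 if abs(x - means[+1]) < abs(x - means[-1]) else -1


def validation_error(means, val):
    """Outer objective: error on untainted validation data (no attack points)."""
    return sum(predict(means, x) != y for x, y in val) / len(val)


train = [(-2.0, -1), (-1.0, -1), (1.0, +1), (2.0, +1)]
val = [(-1.5, -1), (-0.5, -1), (0.5, +1), (1.5, +1)]
y_c = +1                                        # label of the poisoning point
candidates = [i * 0.5 for i in range(-20, 21)]  # feasible attack points in [-10, 10]

clean_error = validation_error(train_centroids(train), val)

best_xc, best_error = None, -1.0
for x_c in candidates:
    # Retrain on the training data plus the attack point, then evaluate on clean data.
    means = train_centroids(train + [(x_c, y_c)])
    error = validation_error(means, val)
    if error > best_error:
        best_xc, best_error = x_c, error

print("validation error without poisoning:", clean_error)  # 0.0
print("best attack point:", best_xc, "error:", best_error)  # -10.0, 0.5
```

A single mislabeled point placed far on the wrong side drags the positive centroid past the negative one, which is enough to misclassify every positive validation sample.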

![http://pralab.diee.unica.it @biggiobattista

Gradient-based Poisoning Attacks

• Gradient is not easy to compute

– The training point affects the classification function

• Trick:

– Replace the inner learning problem with its equilibrium (KKT) conditions

– This enables computing gradient in closed form

• Example for (kernelized) SVM

– similar derivation for Ridge, LASSO, Logistic Regression, etc.

107

Figure: path of the attack point from its initial location $x_c^{(0)}$ to the final location $x_c$

[Biggio, Nelson, Laskov. Poisoning attacks against SVMs. ICML, 2012]

[Xiao, Biggio, Roli et al., Is feature selection secure against training data poisoning? ICML, 2015]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-107-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Experiments on MNIST digits

Single-point attack

• Linear SVM; 784 features; TR: 100; VAL: 500; TS: about 2000

– ‘0’ is the malicious (attacking) class

– ‘4’ is the legitimate (attacked) one

Figure: initial attack point $x_c^{(0)}$ and final attack point $x_c$

108[Biggio, Nelson, Laskov. Poisoning attacks against SVMs. ICML, 2012]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-108-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Experiments on MNIST digits

Multiple-point attack

• Linear SVM; 784 features; TR: 100; VAL: 500; TS: about 2000

– ‘0’ is the malicious (attacking) class

– ‘4’ is the legitimate (attacked) one

109[Biggio, Nelson, Laskov. Poisoning attacks against SVMs. ICML, 2012]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-109-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Robust Regression with TRIM

• TRIM learns the model by retaining only training points with the smallest residuals

$\arg\min_{w, b, I} \; L(w, b, I) = \frac{1}{|I|} \sum_{i \in I} (f(x_i) - y_i)^2 + \lambda \Omega(w)$

$N = (1 + \alpha)\, n, \quad I \subset \{1, \dots, N\}, \quad |I| = n$

[Jagielski, Biggio et al., IEEE Symp. Security and Privacy, 2018] 114](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-114-2048.jpg)
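The idea on the slide above, retaining only the training points with the smallest residuals, can be sketched as an alternating procedure: fit the model on the current subset, then re-select the n points that the fitted model explains best. The version below is a simplified illustration for one-dimensional least squares with no regularization term and a deterministic initial subset; the data and function names are assumptions for this sketch.

```python
def fit_line(points):
    """Ordinary least squares for y = w * x + b (regularization term omitted)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    w = sxy / sxx
    return w, mean_y - w * mean_x


def trim(data, n, max_iter=100):
    """Alternate between fitting on the subset I and re-selecting the n smallest residuals."""
    subset = list(range(n))  # initial index set I (deterministic here for simplicity)
    for _ in range(max_iter):
        w, b = fit_line([data[i] for i in subset])
        by_residual = sorted(
            range(len(data)),
            key=lambda i: (w * data[i][0] + b - data[i][1]) ** 2,
        )
        new_subset = sorted(by_residual[:n])
        if new_subset == subset:
            break
        subset = new_subset
    return w, b, subset


# N = 6 points: one poisoning point followed by n = 5 clean points on y = x.
data = [(2.0, 10.0), (0.0, 0.0), (1.0, 1.0), (2.0, 2.0), (3.0, 3.0), (4.0, 4.0)]

w, b, kept = trim(data, n=5)
print("kept indices:", kept)  # [1, 2, 3, 4, 5]
print("model: w =", w, "b =", b)
```

The first fit is distorted by the poisoning point, but that point also has the largest residual, so it is dropped at the next selection step and the model is refit on clean data only. This alternation can stall in a poor local solution when poisoning points have high leverage, so the example should not be read as a guarantee.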

![http://pralab.diee.unica.it @biggiobattista

Experiments with TRIM (Loan Dataset)

• TRIM MSE is within 1% of original model MSE

Plot labels: No defense; Existing methods; Our defense. Annotation: Better defense

115[Jagielski, Biggio et al., IEEE Symp. Security and Privacy, 2018]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-115-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Attacks against Machine Learning

| Attacker’s Capability | Integrity | Availability | Privacy / Confidentiality |
|---|---|---|---|
| Attacker’s Goal | Misclassifications that do not compromise normal system operation | Misclassifications that compromise normal system operation | Querying strategies that reveal confidential information on the learning model or its users |
| Test data | Evasion (a.k.a. adversarial examples) | - | Model extraction / stealing; model inversion (hill-climbing); membership inference attacks |
| Training data | Poisoning (to allow subsequent intrusions), e.g., backdoors or neural network trojans | Poisoning (to maximize classification error) | - |

[Biggio & Roli, Wild Patterns, 2018 https://arxiv.org/abs/1712.03141]

Attacker’s Knowledge:

• perfect-knowledge (PK) white-box attacks

• limited-knowledge (LK) black-box attacks (transferability with surrogate/substitute learning models)

117](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-117-2048.jpg)

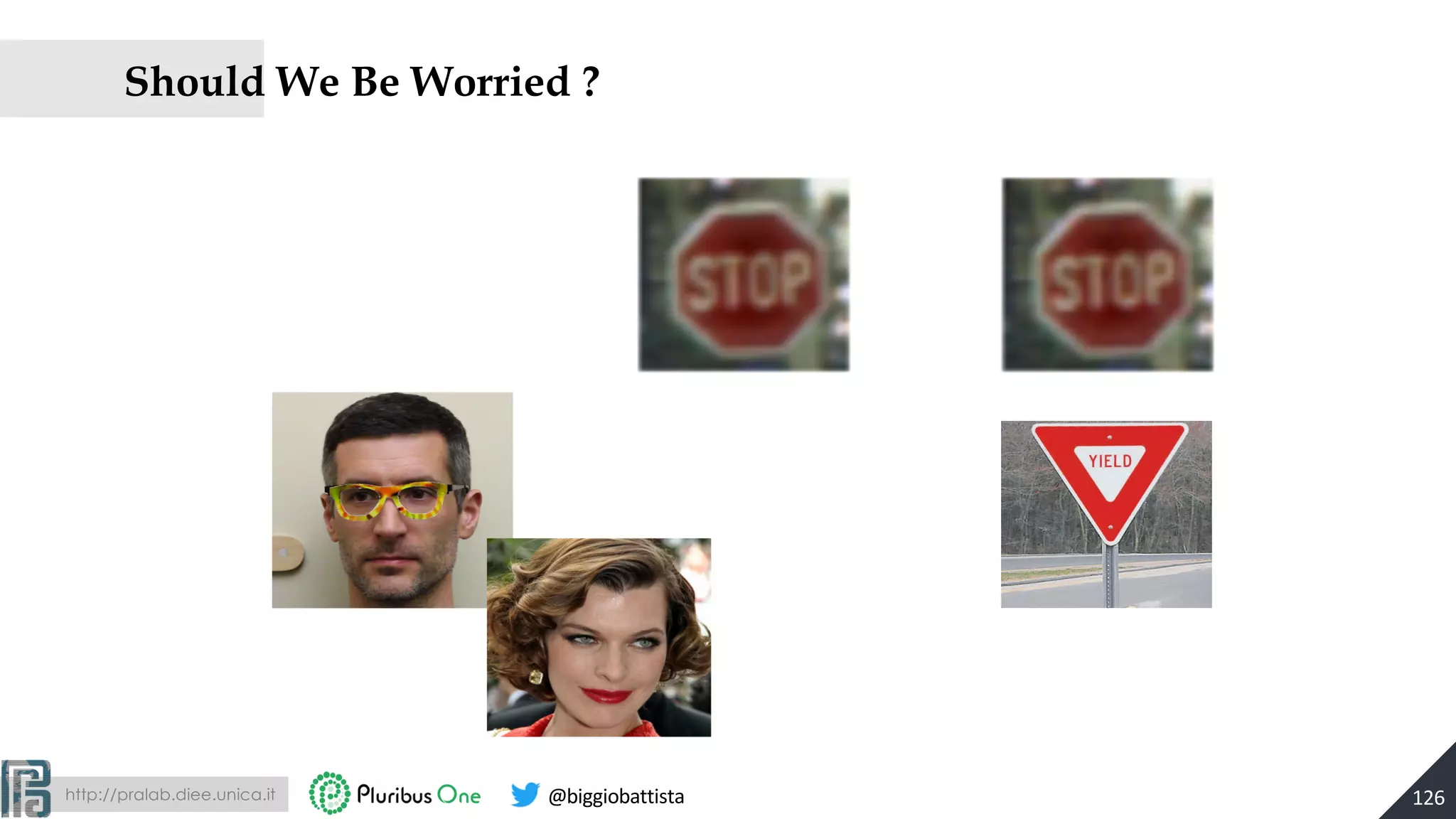

![http://pralab.diee.unica.it @biggiobattista

World Is Not Digital…

• …Previous cases of adversarial examples have a common characteristic: the adversary is able to precisely control the digital representation of the input to the machine learning tools…

122

[M. Sharif et al., ACM CCS 2016]

Figure: School Bus (x) + Adversarial Noise (r) → Ostrich (Struthio camelus)](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-122-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Adversarial Images in the Physical World

• Do adversarial images fool deep networks even when they operate in the physical world, for example, when images are taken with a cell-phone camera?

– Alexey Kurakin et al. (2016, 2017) explored the possibility of creating adversarial images for machine learning systems that operate in the physical world. They used images taken with a cell-phone camera as input to an Inception v3 image classification neural network

– They showed that in such a set-up, a significant fraction of adversarial images crafted using the original network are misclassified even when fed to the classifier through the camera

[Alexey Kurakin et al., ICLR 2017]

124](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-124-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista [M. Sharif et al., ACM CCS 2016]

Adversarial Glasses

The adversarial perturbation is applied only to the eyeglasses image region

125](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-125-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

No, We Should Not…

127

In this paper, we show experiments that suggest that a trained neural network classifies most of the pictures taken from different distances and angles of a perturbed image correctly. We believe this is because the adversarial property of the perturbation is sensitive to the scale at which the perturbed picture is viewed, so (for example) an autonomous car will misclassify a stop sign only from a small range of distances.

[arXiv:1707.03501; CVPR 2017]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-127-2048.jpg)

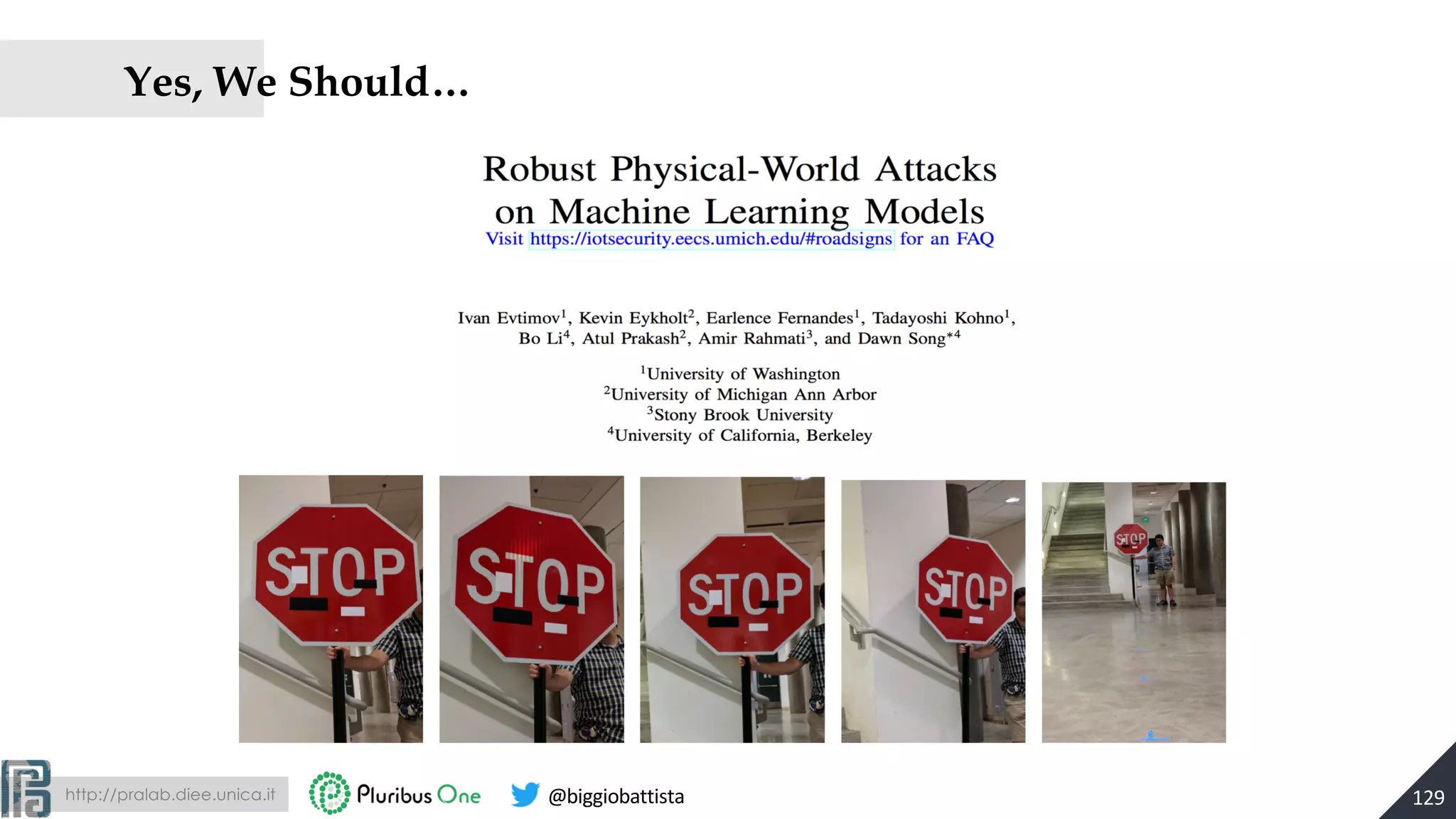

![http://pralab.diee.unica.it @biggiobattista

Yes, We Should...

128[Athalye et al., Synthesizing robust adversarial examples. ICLR, 2018]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-128-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Adversarial Road Signs

[Evtimov et al., CVPR 2017] 130](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-130-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Is This a Real Security Threat?

• Adversarial examples can exist in the physical world: we can fabricate concrete adversarial objects (glasses, road signs, etc.)

• But the effectiveness of attacks carried out with adversarial objects is still to be investigated through large-scale experiments in realistic security scenarios

• Gilmer et al. (2018) have recently discussed how realistic the security threat posed by adversarial examples is, pointing out that it should be carefully investigated

– Are indistinguishable adversarial examples a real security threat?

– For which real security scenarios are adversarial examples the best attack vector, better than attacking components outside the machine learning component?

– …

131

[Justin Gilmer et al., Motivating the Rules of the Game for

Adversarial Example Research, https://arxiv.org/abs/1807.06732]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-131-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

$f(x) \neq l$

Indistinguishable Adversarial Examples

The adversarial image x + r is visually hard to distinguish from x

…There is a torrent of work that views increased robustness to restricted perturbations as making these models more secure. While not all of this work requires completely indistinguishable modifications, many of the papers focus on specifically small modifications, and the language in many suggests or implies that the degree of perceptibility of the perturbations is an important aspect of their security risk…

133

[Justin Gilmer et al., Motivating the Rules of the Game

for Adversarial Example Research, arXiv 2018]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-133-2048.jpg)
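To make the notion of a small additive perturbation r concrete, the sketch below computes the smallest L2 perturbation that flips the decision of a linear classifier. It is only an illustration under stated assumptions: the classifier, its weights `w` and `b`, the input `x`, and the helper names are all hypothetical, and deep networks (the subject of the slide) have no such closed form.

```python
import numpy as np

# Hypothetical linear classifier: predicts class 1 when w.x + b > 0, else 0.
w = np.array([1.0, -2.0, 0.5])
b = 0.25

def predict(x):
    return int(w @ x + b > 0)

def minimal_perturbation(x, overshoot=1e-3):
    """Smallest L2 step r that moves x just across the boundary w.x + b = 0.
    The step is along w; the overshoot pushes the point slightly past the
    boundary so that the predicted label actually changes."""
    score = w @ x + b
    return -(1.0 + overshoot) * score * w / (w @ w)

x = np.array([2.0, 0.5, 1.0])     # hypothetical clean input
r = minimal_perturbation(x)

print("label of x:    ", predict(x))
print("label of x + r:", predict(x + r))
print("||r||_2 = %.4f, ||x||_2 = %.4f" % (np.linalg.norm(r), np.linalg.norm(x)))
```

The perturbation norm is small relative to the input norm, which is the sense in which x + r stays close to x. Whether closeness in a norm matches visual indistinguishability is exactly the question the quoted passage raises.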

![http://pralab.diee.unica.it @biggiobattista

Are Indistinguishable Perturbations a Real Security Threat?

…At the time of writing, we were unable to find a compelling example that required indistinguishability…

To have the largest impact, we should both recast future adversarial example research as a contribution to core machine learning and develop new abstractions that capture realistic threat models.

137

[Justin Gilmer et al., Motivating the Rules of the Game

for Adversarial Example Research, arXiv 2018]](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-137-2048.jpg)

![http://pralab.diee.unica.it @biggiobattista

Black Swans to the Fore

142

[Szegedy et al., Intriguing properties of neural networks, 2014]

After this “black swan”, the issue of security of DNNs came to the fore…

Not only in specialized scientific journals…](https://image.slidesharecdn.com/2019-mls-padua-biggio-advml-tutorial-191002124513/75/Wild-Patterns-A-Half-day-Tutorial-on-Adversarial-Machine-Learning-2019-International-Summer-School-on-Machine-Learning-and-Security-MLS-Padova-2019-142-2048.jpg)