$TITLE ECON 480 FINAL EXAM MATHEMATICAL PROGRAMMING QUESTION T.docx

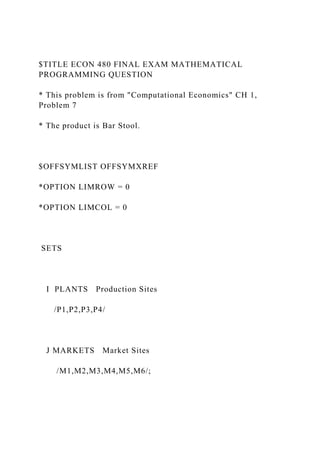

- 1. $TITLE ECON 480 FINAL EXAM MATHEMATICAL PROGRAMMING QUESTION
     * This problem is from "Computational Economics" CH 1, Problem 7
     * The product is Bar Stool.
     $OFFSYMLIST OFFSYMXREF
     *OPTION LIMROW = 0
     *OPTION LIMCOL = 0
     SETS
       I  PLANTS Production Sites  /P1,P2,P3,P4/
       J  MARKETS Market Sites     /M1,M2,M3,M4,M5,M6/;

- 2. *Data
     TABLE NETREV(I,J) Net Revenue in Dollars
            M1   M2   M3   M4   M5   M6
       P1   29   51   59   54   56   27
       P2   44   43   29   63   53   46
       P3   63   37   63   62   46   47
       P4   51   53   54   51   43   38;
     * The Net Revenues are different because the product is
     * sold at different prices in the six markets and the
     * transportation cost from each plant to each market is also different
     PARAMETERS
       CAPACITY(I) Capacity at each plant

- 3.   /P1 1200
        P2 1800
        P3 1100
        P4 1900/
       DEMAND(J) Demand at each market
       /M1 1000
        M2 1200
        M3  700
        M4 1500
        M5  500
        M6 1000/;
     VARIABLES
       X(I,J)   Monthly Shipments from plant i to market j
       TOTPROF  Objective Function Value;

- 4. POSITIVE VARIABLE X;
     EQUATIONS
       OBJFUNC      OBJECTIVE FUNCTION
       PLANCONS(I)  PLANT CONSTRAINT
       DEMCONS(J)   DEMAND CONSTRAINT;
     OBJFUNC ..      TOTPROF =E= SUM((I,J), NETREV(I,J) * X(I,J));
     PLANCONS(I) ..  SUM(J, X(I,J)) =L= CAPACITY(I);
     DEMCONS(J) ..   SUM(I, X(I,J)) =G= DEMAND(J);
     MODEL MAXPROF /OBJFUNC,PLANCONS,DEMCONS/;
     SOLVE MAXPROF USING LP MAXIMIZING TOTPROF;
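Before solving a model like the one above, it can help to confirm that the plant and demand constraints are compatible. The following is a minimal Python sketch of that check, not part of the original exam; it only sums the CAPACITY and DEMAND values listed in the model and does not solve the LP itself.

```python
# Values copied from the CAPACITY(I) and DEMAND(J) parameters in the model.
capacity = {"P1": 1200, "P2": 1800, "P3": 1100, "P4": 1900}
demand = {"M1": 1000, "M2": 1200, "M3": 700, "M4": 1500, "M5": 500, "M6": 1000}

total_capacity = sum(capacity.values())
total_demand = sum(demand.values())

# PLANCONS caps shipments out of each plant and DEMCONS sets a floor for each
# market, so the two can hold together only if capacity covers demand.
slack = total_capacity - total_demand
feasible = slack >= 0

print(f"capacity={total_capacity}, demand={total_demand}, slack={slack}, feasible={feasible}")
```

Because the objective is maximized and every net revenue is positive, any slack capacity would be expected to be shipped as well, which is why DEMCONS is a lower bound rather than an equality.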

- 5. NOT Transforming the Data Can Be Fatal to Your Analysis A case study, with real data, describes the need for data transformation.

- 6. Donald Wheeler stated in his second article "Transforming the Data Can Be Fatal to Your Analysis," "out of respect for those who are interested in learning how to better analyze data, I feel the need to further explain why the transformation of data can be fatal to your analysis." My motivation for writing this article is not only improved data analysis but formulating analyses so that a more significant business performance reporting question is also addressed within the assessment; i.e., a paradigm shift from the objectives of the traditional four-step Shewhart system, which Wheeler referenced in his article. The described statistical business performance charting methodology can, for example, reduce firefighting when the approach replaces organizational goal-setting red-yellow-green scorecards, which often have no structured plan for making improvements. This article will show, using real data, why an appropriate data transformation can be essential to

- 7. determine the best action or non-action to take when applying this overall system in both manufacturing and transactional processes. Should Traditional Control Charting Procedures be Enhanced? I appreciate the work of the many statistical-analysis icons. For example, more than 70 years ago Walter Shewhart introduced statistical control charting. W. Edwards Deming extended this work to the business system with his profound knowledge philosophy and introduced the terminology common and special cause variability. I also respect and appreciate the work that Wheeler has done over the years. For example, his book Understanding Variation has provided insight to many. However, Wheeler and I have a difference of opinion about the need to transform data when a transformation makes physical sense. The reason for writing this article is to provide additional information on the reasoning for my position. I hope that this supplemental explanation will provide readers with enough insight so that they can make the best logical decision about whether to consider data transformations.

- 8. In his last article, Wheeler commented: "However, rather than offering a critique of the points raised in my original article, he [Breyfogle] chose to ignore the arguments against transforming the data and to simply repeat his mantra of ‘transform, transform, transform.' " http://www.qualitydigest.com/inside/quality-insider-column/transforming-data-can-be-fatal-your-analysis.html In fact, I had agreed with Wheeler’s stated position that, "If a transformation makes sense both in terms of the original data and the objectives of the analysis, then it will be okay to use that transformation." What I did take issue with was his statement: "… Therefore, we do not have to pre-qualify our data before we place them on a process behavior chart. We do not need to check the data for normality,

- 9. nor do we need to define a reference distribution prior to computing limits. Anyone who tells you anything to the contrary is simply trying to complicate your life unnecessarily." In my article, I stated "I too do not want to complicate people’s lives unnecessarily; however, it is important that someone’s over-simplification does not cause inappropriate behavior." Wheeler kept criticizing my random data set parameter selection and the fact that I did not use real data. However, Wheeler failed to comment on the additional points I made relative to addressing a fundamental issue that was lacking in his original article, one that goes beyond the transformation question. This important issue is the reporting of process performance relative to customer requirement needs, i.e., a goal or specification limits. This article will elaborate more on the topic using real data which will lead to the same conclusion as my previous article: For some processes an appropriate transformation is a very important step in leading to the most suitable action or non-action. In addition, this article will describe how this metric reporting system can create performance statements,

- 10. offering a prediction statement of how the process is performing relative to customer needs. It is important to reiterate that appropriate transformation selection, when necessary, needs to be part of this overall system. In his second article, Wheeler quoted analysis steps from Shewhart’s Economic Control of Quality of Manufactured Product. These steps, which were initiated over seven decades ago, focus on an approach to identify out-of-control conditions. However, the step sequence did not address whether the process output was capable of achieving a desired performance output level, i.e., expected process non-conformance rate from a stable process. Wheeler referenced a study "… encompassing more than 1,100 probability models where 97.3 percent of these models had better than 97.5-percent coverage at three-sigma limits." From this statement, we could infer that Wheeler believes that industry should be satisfied with a greater than 2-percent false-signal rate. For processes that do not follow a normal distribution, this could

- 11. translate into huge business costs and equipment downtime searching for special-cause conditions that are in fact common-cause. After an organization chases phantom special-cause occurrences over some period of time, it is not surprising that they would abandon control charting altogether. Wheeler also points out how traditional control charting has been around for more than 70 years and how the limits have been thoroughly proven. I don't disagree with the three sampling standard deviation limits; however, how often is control charting really used throughout businesses? In the 1980s, there was a proliferation of control charts; however, look at us now. Has the usage of these charts continued and, if they are used, are they really applied with the intent originally envisioned by Shewhart? I suggest there is not the widespread usage of control charts that quality-tools training

- 12. classes would lead students to believe. But why is there not more frequent usage of control charts in both manufacturing and transactional processes? To address this underutilization, I am suggesting that while the four-step Shewhart methodology has its applicability, we now need to revisit how these concepts can better be used to address the needs of today's businesses, not only in manufacturing but transactional processes as well. To assess this tool-enhancement need, consider that the output of a process (Y) is a function of its inputs (Xs) and its step sequence, which can be expressed as Y=f(X). The primary purpose of Wheeler's described Shewhart four-step sequence is to identify when a special cause condition occurs so that an appropriate action can be immediately taken. This procedure is most applicable to the tracking of key process input variables (Xs) that are required to maintain a desired output process response for situations where the process has demonstrated that it provides a satisfactory level of performance for the customer-driven output, i.e., Y. However, from a business point of view, we need to go beyond what is currently academically

- 13. provided and determine what the enterprise needs most as a whole. One business-management need is an improved performance reporting system for both manufacturing and transactional processes. This enhanced reporting system needs to structurally evaluate and report the Y output of a process for the purpose of leading to the most appropriate action or non-action. An example of a transactional process that needs such a charting system is telephone hold time in a call center. For situations like this, there is a natural boundary: hold time cannot get below zero. For this type of situation, a log-normal distribution can often adequately describe call-center hold time. Is this distribution a perfect fit? No, but it makes physical sense

- 14. and is often an adequate representation of what physically happens; that is, a general common-cause distribution consolidation bounded by zero with a tail of hold times that could get long. From a business viewpoint, what is desired at a high level is a reporting methodology that describes what the customer experiences relative to hold time, the Y output for this process. This is an important business requirement need that goes beyond the four-step Shewhart process. I will now describe how to address this need through an enhancement to the Shewhart four-step control charting system. This statistical business performance charting (SBPC) methodology provides a high-level view of how the process is performing. With SBPC, we are not attempting to manage the process in real time. With this performance measurement system, we consider assignable-cause differences between sites, working shifts, hours of the day, and days of the week to be a source of common-cause input variability to the overall process; in other words, Deming's responsibility of management variability. With the SBPC system, we first evaluate the process for

- 15. stability. This is accomplished using an individuals chart where there is an infrequent subgrouping time interval so that input variability occurs between subgroups. For example, if we think that Monday's hold time could be larger than the other days of the week because of increased demand, we should consider selecting a weekly subgrouping frequency. With SBPC, we are not attempting to adjust the number of operators available to respond to phone calls in real time since the company would have other systems to do that. What SBPC does is assess how well these process-management systems are addressing the overall needs of its customers and the business as a whole. The reason for doing this is to determine which of the following actions or non-actions are most appropriate, as described in Table 1.

      Table 1: Statistical Business Performance Charting (SBPC) Action Options

      1. Is the process unstable or did something out of the ordinary occur, which requires action or no action?
      2. Is the process stable and meeting internal and external customer needs? If so, no action is required.
      3. Is the process stable but does not meet internal and external customer needs? If so, process improvement efforts are needed.

- 16. The four-step Shewhart model that Wheeler referenced focuses only on step number one. In my previous article, I used randomly generated data to describe the importance of considering a data transformation when there is a physical reason for such a consideration. I thought that it would be best to use random data since we knew the answer; however, Wheeler repeatedly criticized me for selecting a too-skewed distribution and not using real data. I will now use real data, which will lead to the same conclusion as my previous article. Real-data Example A process needs to periodically change from producing one

- 17. product to producing another. It is important for the changeover time to be as small as possible since the production line will be idle during changeover. The example data used in this discussion is a true enterprise view of a business process. This reports the time to change from one product to another on a process line. It includes six months of data from 14 process lines that involved three different types of changeouts, all from a single factory. The factory is consistently managed to rotate through the complete product line as needed to replenish stock as it is purchased by the customers. The corporate leadership considers this process to be predictable enough, as it is run today, to manage a relatively small finished goods inventory. With this process knowledge, what is the optimal method to report the process behavior with a chart? Figure 1 is an individuals chart of changeover time. From this control chart, which has no transformation as Wheeler suggests, nine incidents are noted that should have been investigated in real time. In addition, one would conclude that this process is not stable or is out of control. But, is

- 18. it?

      Figure 1: Individuals Chart of Changeover Time (Untransformed Data)

      One should note that in Figure 1 the lower control limit is a negative number, which makes no physical sense since changeover time cannot be less than zero. Wheeler makes the statement, "Whenever we have skewed data there will be a boundary value on one side that will fall inside the computed three-sigma limits. When this happens, the boundary value takes precedence over the computed limit and we end up with a one-sided chart." He also says, "The important fact about nonlinear transformations is not that they reduce the false-alarm rate, but

- 19. rather that they obscure the signals of process change." It seems to me that these statements can be contradictory. Consider that the response that we are monitoring is time, which has a zero boundary, and where a lower value is better. For many situations, our lower control limit will be zero with Wheeler’s guidelines (e.g., Figure 1). Consider that the purpose of an improvement effort is to reduce changeover time. A reduction in changeover time can be difficult to detect using this “one-sided” control chart, when the lower control limit is at the boundary condition, which is zero in this case. Wheeler's article makes no mention of what the process customer requirements are or the reporting of its capability relative to specifications, which is an important aspect of lean Six Sigma programs. Let's address that point now.
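To make the one-sided chart issue concrete, here is a minimal Python sketch of the usual individuals chart limit calculation (center line plus or minus 2.66 times the average moving range). The `times` values are hypothetical right-skewed changeover times invented for illustration, not the data behind Figure 1, and `individuals_limits` is an illustrative helper name.

```python
from statistics import mean

def individuals_limits(values):
    """Return (lcl, center, ucl) for an individuals (XmR) chart."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    # 2.66 = 3 / d2, where d2 = 1.128 for moving ranges of two points.
    half_width = 2.66 * mean(moving_ranges)
    return center - half_width, center, center + half_width

# Hypothetical, right-skewed changeover times in hours (assumed data).
times = [2.0, 3.5, 1.5, 9.0, 2.5, 4.0, 20.0, 3.0, 6.0, 2.0, 12.0, 5.0]

lcl, center, ucl = individuals_limits(times)

# Following the quoted guideline, the zero boundary replaces a negative limit,
# which leaves no room below the limit to signal an improvement.
reported_lcl = max(lcl, 0.0)

print(f"computed LCL={lcl:.2f}, reported LCL={reported_lcl:.2f}, "
      f"center={center:.2f}, UCL={ucl:.2f}")
```

With skewed data of this kind the computed lower limit falls below zero, so a chart drawn this way can only signal on the high side.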

- 20. The current process has engineering evaluating any change that takes longer than twelve hours. This extra step is expensive and distracts engineering from its core responsibility. The organization's current reporting system does not address how frequently this engineering intervention occurs. In his article, Wheeler made no mention of making such a computation for either normal or non-normal distributed situations. This need occurs frequently in industry when processes are to be assessed on how they are performing relative to specification requirements. Let's consider making this estimate from a normal probability plot of the data, as shown in Figure 2. This estimate would be similar to a practitioner manually calculating the value using a tabular z-value with a calculated sample mean and standard deviation.

      Figure 2: Probability Plot of the Untransformed Data

      We note from this plot how the data do not follow a straight line; hence, the normal distribution does

- 21. not appear to be a good model for this data set. Because of this lack-of-fit, the percentage-of-time estimate for exceeding 12 hours is not accurate; i.e., 46% (100 - 54 = 46). We need to highlight that technically we should not be making an assessment such as this because the process is not considered to be in control when plotting untransformed data. For some, if not most, processes that have a long tail, we will probably never appear to have an in-control process, no matter what improvements are made; however, does that make sense? The output of a process is a function of its steps and input variables. Doesn’t it seem logical to expect some level of natural variability from input variables and the execution of process steps? If we agree to this assumption, shouldn’t we expect a large percentage of

- 22. process output variability to have a natural state of fluctuation; i.e., be stable? To me this statement is true for most transactional and manufacturing processes, with the exception of things like naturally auto-correlated data situations such as the stock market. However, with traditional control charting methods, it is often concluded that the process is not stable even when logic tells us that we should expect stability. Why is there this disconnection between our belief and what traditional control charts tell us? The reason is that underlying control-chart-creation assumptions and practices are often not consistent with what occurs naturally in the real world. One of these practices is not using suitable transformations when they are needed to improve the description of process performance, for instance, when a boundary condition exists. It is important to keep in mind that the reason for process tracking is to determine which actions or non-actions are most appropriate, as described in Table 1. Let’s now return to our real-data example analysis.
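One way to apply a transformation for a zero-bounded response is to compute the individuals chart limits on the natural-log scale and convert them back to hours. The sketch below assumes that approach; the `times` values are hypothetical and `individuals_limits` is an illustrative helper, not a function from any charting package.

```python
import math
from statistics import mean

def individuals_limits(values):
    """Return (lcl, center, ucl) for an individuals (XmR) chart."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    half_width = 2.66 * mean(moving_ranges)  # 2.66 = 3 / 1.128
    return center - half_width, center, center + half_width

# Hypothetical, right-skewed changeover times in hours (assumed data).
times = [2.0, 3.5, 1.5, 9.0, 2.5, 4.0, 20.0, 3.0, 6.0, 2.0, 12.0, 5.0]

# Chart the logged values, then back-transform the limits to the hour scale.
log_limits = individuals_limits([math.log(t) for t in times])
lcl, center, ucl = (math.exp(v) for v in log_limits)

signals = [t for t in times if not lcl < t < ucl]
print(f"LCL={lcl:.2f} h, center={center:.2f} h, UCL={ucl:.2f} h, signals={signals}")
```

Because the exponential of any real number is positive, the back-transformed lower limit always respects the zero boundary, and the limits are asymmetric around the center in the same direction as the skew.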

- 23. For this type of bounded situation, often a log-normal distribution will fit the data well, since changeover time cannot physically go below a lower limit of zero, as in the previously described call-center situation. With the SBPC approach, we want first to assess process stability. If a process has a current stable region, we can consider that this process is predictable. Data from the latest region of stability can be considered a random sample of the future, given that the process will continue to operate as it has in the recent past's region of stability. When these continuous data are plotted on an appropriate probability plot coordinate system, a prediction statement can be made: What percentage of time will the changeover take longer than 12

- 24. hours? Figure 3 shows a control chart in conjunction with a probability plot. A netting-out of the process analysis results is described below the graphics: The process is predictable where about 38 percent of the time it takes longer than 12 hours. Unlike the previous non-transformed analysis, we would now conclude that the process is stable, i.e., in control. Unlike the non-transformed analysis, this analysis considers the skewed tails, which we expect from this process, to be the result of common-cause process variability and not a source for special cause investigation. Because of this, we conclude, for this situation, that the transformation provides an improved process discovery foundation model to build upon, when compared to a non-transformed analysis approach.
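The prediction statement can be approximated numerically as well as read from a probability plot. The following Python sketch compares a normal fit on the raw data (the z-value style calculation) with a log-normal fit for the share of changeovers longer than 12 hours. It assumes simple moment-based fits and uses hypothetical `times` values, so its percentages are illustrative only and are not the 46 and 38 percent figures reported in this article.

```python
import math
from statistics import NormalDist, mean, stdev

# Hypothetical, right-skewed changeover times in hours (assumed data).
times = [2.0, 3.5, 1.5, 9.0, 2.5, 4.0, 20.0, 3.0, 6.0, 2.0, 12.0, 5.0]
threshold_hours = 12.0

# Normal model fitted to the untransformed data.
normal_fit = NormalDist(mean(times), stdev(times))
p_normal = 1.0 - normal_fit.cdf(threshold_hours)

# Log-normal model: fit a normal distribution to the logged data and
# evaluate it at the logged threshold.
logs = [math.log(t) for t in times]
lognormal_fit = NormalDist(mean(logs), stdev(logs))
p_lognormal = 1.0 - lognormal_fit.cdf(math.log(threshold_hours))

print(f"normal estimate:     {100 * p_normal:.1f}% above {threshold_hours} h")
print(f"log-normal estimate: {100 * p_lognormal:.1f}% above {threshold_hours} h")
```

The two models can give noticeably different estimates for the same data, which is why the choice of probability plot coordinate system matters for the report-out.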

- 25. The process is predictable where about 38 percent of the time it takes longer than 12 hours.

      Figure 3: Report-out.

      Let’s now compare the report-outs of both the untransformed (Figure 1) and transformed data (Figure 3). Consider what actions your organization might take if presented each of these report-outs separately. The Figure 1 report can lead to attempts to explain common-cause events as though each occurrence has an assignable cause that needs to be addressed. Actions resulting from this line of thinking can lead to much frustration and unnecessary process-procedural

- 26. tampering that results in increased process-response variability, as Deming illustrated in his funnel experiment. When Figure 3's report-out format is used in our decision-making process, we would assess the options in Table 1 to determine which action is most appropriate. With this data-transformed analysis, number three in Table 1 would be the most appropriate action, assuming that we consider the 38 percent frequency of occurrence estimate above 12 hours excessive. Wheeler stated, "If you are interested in looking for assignable causes you need to use the process behavior chart (control chart) in real time. In a retrospective use of the chart you are unlikely to ever look for any assignable causes …" This statement is contrary to what is taught and applied in the analyze phase of lean Six Sigma’s define-measure-analyze-improve-control (DMAIC) process improvement project execution roadmap. Within the DMAIC analyze phase, the practitioner evaluates historical data statistically for the purpose of gaining insight into what might be done to improve his process improvement project’s

- 27. process. It can often be very difficult to determine assignable causes with the real-time search-for-signals approach that Wheeler suggests, especially when the false signal rate can be amplified in situations where an appropriate transformation was not made. Also, with this approach, we often do not have enough data to test that the hypothesis of a particular assignable cause is true; hence, we might think that we have identified an assignable cause from a signal, but this could have been a chance occurrence that did not, in fact, negatively impact our process. In addition, when we consider how organizations can have thousands of processes, the amount of resources to support this search-for-signal effort can be huge. Deming in Out of the Crisis stated, "I should estimate that in my experience most troubles and most possibilities for improvement add up to proportions something like this: 94 percent belong to the system (responsibility of management), 6 percent [are] special."
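The scale of the search-for-signal effort can be illustrated with simple arithmetic. The Python sketch below multiplies a per-point false-signal rate by the number of charted points; the 2.5 percent rate corresponds to the 97.5-percent coverage figure quoted earlier, while the process count and weekly charting frequency are hypothetical values chosen only for illustration.

```python
def expected_false_signals(processes, points_per_process, false_signal_rate):
    """Expected number of false signals across all charted points."""
    return processes * points_per_process * false_signal_rate

# Assumed scenario: 1,000 processes, each charted weekly for one year,
# with 2.5 percent of in-control points falling outside the limits.
annual_false_signals = expected_false_signals(
    processes=1000, points_per_process=52, false_signal_rate=0.025
)

print(f"expected false signals per year: {annual_false_signals:.0f}")
```

Under these assumptions, each of those signals would prompt an investigation that cannot find a true cause.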

- 28. With a search-for-signal strategy it seems as if we are trying to resolve the 6 percent of issues that Deming estimates. It would seem to me that it would be better to focus our efforts on how we can better address the 94 percent common-cause issues that Deming describes. To address this matter from a different point of view, consider extending a classical assignable cause investigation from special cause occurrences to determining what needs to be done to improve a process’ common-cause variability response if the process does not meet the needs of the customer or the business. I have found with the SBPC reporting approach that assignable causes that negatively impact process performance from a common-cause point of view can best be determined by collecting data over some period of time to test hypotheses that assess differences

- 29. between such factors as machines, operators, day of the week, raw material lots, and so forth. When undergoing process improvement efforts, the team can use collected data within the most recent region of stability to test out compiled hypothesis statements that it thinks could affect the process output level. These analyses can provide guiding light insight to process-improvement opportunities. This is a more efficient analytical discovery approach than a search-for-signals strategy where the customer needs are not defined or addressed relative to current process performance. For the example SBPC plot in Figure 3, the team discovered through hypothesis tests that there was a significant difference in the output as a function of the type of change, the shift that made the change, and the number of performed tests made during the change. This type of information helps the team determine where to focus its efforts in determining what should be done differently to improve the process. Improvement to the system would be demonstrated by a statistically significant shift of the SBPC report-out to a new-improved level of

- 30. stability. This system of analysis and discovery would apply for both processes that need data transformation and those that don't; that is, the SBPC system highlights and does not obscure the signals of process change. Detection of an Enterprise Process Change Wheeler stated, "However, in practice, it is not the false-alarm rate that we are concerned with, but rather the ability to detect signals of process changes. And that is why I used real data in my article. There we saw that a nonlinear transformation may make the histogram look more bell-shaped, but in addition to distorting the original data, it also tends to hide all of the signals contained within those data."

- 31. Let's now examine how well a shift can be detected with our real-data example, using both non-transformed and transformed data control charts. A traditional test for a process control chart is the average run length until an out-of-control indication is detected, typically for a one standard deviation shift in the mean. This does not translate well to cycle-time-based data where there are already values near the natural limit of zero. For our analysis, we will assume a 30-percent reduction in the average cycle time to be somewhat analogous to a shift of one standard deviation in the mean of a normally distributed process. In our sample data, there were 590 changeovers in the six-month period. The 30-percent reduction in cycle time was introduced after point 300. A comparison of the transformed and untransformed process behavior charting provides a clear example of the benefits of the transformation, noting that, for the non-transformed report-out, a lower control limit of zero, per Wheeler's suggestion, was included as a lower bound reference line. In Figure 4a, the two charts show the untransformed data analysis, the upper chart appearing to

- 32. have become stable after the simulated process change. When a staging has been created (lower chart in Figure 4a), the new chart stage identifies four special cause incidents that were considered common-cause events in the transformed data set, as shown in the lower chart in Figure 4b.

      Figure 4a: Non-transformed Analysis of a Process Shift
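A proportional reduction in cycle time behaves differently on the two scales, which helps explain the staged charts. The Python sketch below assumes the 30-percent reduction is applied as a multiplication by 0.7 (the article does not state how the simulated change was introduced) and uses hypothetical `before` values. It shows that on the log scale the change is a pure shift of the center line with unchanged limit width, while on the raw scale the staged limits shrink in proportion.

```python
import math
from statistics import mean

def individuals_limits(values):
    """Return (lcl, center, ucl) for an individuals (XmR) chart."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = mean(values)
    half_width = 2.66 * mean(moving_ranges)  # 2.66 = 3 / 1.128
    return center - half_width, center, center + half_width

# Hypothetical changeover times in hours before the change (assumed data).
before = [2.0, 3.5, 1.5, 9.0, 2.5, 4.0, 20.0, 3.0, 6.0, 2.0, 12.0, 5.0]
after = [0.7 * t for t in before]  # assumed form of the 30-percent reduction

raw_before = individuals_limits(before)
raw_after = individuals_limits(after)
log_before = individuals_limits([math.log(t) for t in before])
log_after = individuals_limits([math.log(t) for t in after])

raw_width_ratio = (raw_after[2] - raw_after[0]) / (raw_before[2] - raw_before[0])
log_width_change = (log_after[2] - log_after[0]) - (log_before[2] - log_before[0])
log_center_shift = log_after[1] - log_before[1]

print(f"raw limit width ratio: {raw_width_ratio:.3f}")
print(f"log limit width change: {log_width_change:.3f}")
print(f"log center shift: {log_center_shift:.3f} (ln 0.7 = {math.log(0.7):.3f})")
```

On the log scale the staged chart keeps the same sensitivity before and after the change, so the skewed tail is judged against the same spread in both stages.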

- 33. Figure 4b: Transformed Analysis of a Process Shift

      The two transformed charts in Figure 4b show a change in the process with the introduction of the special-cause indications after the simulated change was implemented. When staging is introduced into this charting, the special-cause indications are eliminated; i.e., the process is considered stable after the change. Using Wheeler’s guidelines, a simple reduction in process cycle time would appear to be the removal of special causes. This is fine in the short term, but as we collect more data on the new process and introduce staging into the data charting we would surely, for skewed process performance, return to a situation where special-cause signals would recur, as shown in the lower chart in Figure 4a. We

- 34. should highlight that these special cause events appear as common-cause variability in the transformed-data-set analysis shown in the lower chart in Figure 4b. If you follow a guideline of examining a behavioral chart that has an appropriate transformation, you would have noted a special cause occurring when the simulated process change was interjected. From this analysis, the success of the process improvement effort would have been recognized and the process behavior chart limits would be reset to new limits that reflect the new process response level. Since the transformed data set process is stable, we can report out a best estimate for how the process is performing relative to the 12-hour cycle time criterion. In Figure 5, the SBPC description provides an estimate of how the process performed before and after the change, where 100 - 63 = 37% and 100 - 87 = 13%. Since the process has a recent region of stability, the data from this recent region can provide a predictive estimate of future performance unless something changes within the process

- 35. and/or its inputs; i.e., our estimate is that 13 percent of the cycle times will be above 12 hours. The process has been predictable since observation 300 where now about 13% of the time it takes longer than 12 hours for a changeover. This 13% value is a reduction from about 37% before the new process change was made.

      Figure 5: SBPC Report-out Describing Impact of Process Change.

      I wonder if much of the dissatisfaction and lack of use of

- 36. business control charting derive from the use of non-transformed data. Immediately after the change, the process looks to be good, and the improvement effort is recognized as a success. However, in a few weeks or months after the control chart has been staged, the process will show to be out of control again because the original data is no longer the primary driver for the control limits. The organization will assume the problem has returned and possibly consider the earlier effort to now be a failure. In addition, Wheeler’s four-step Shewhart process made no mention of how to assess how well a process is performing relative to customer needs, a very important aspect in the real business world. Conclusions The purpose of traditional individuals charting is to identify in a timely fashion when special-cause conditions occur so that corrective actions can be taken. The application of this technique is most

- 37. beneficial when tracking the inputs to a process that has an output level which is capable of meeting customer needs. False signals can occur if the process measurement by nature is not normally distributed, for example, in processes that cannot be below zero. Investigation into these false signals can be expensive and lead to much frustration when no reason is found for out-of-control conditions. The four-step Shewhart process that Wheeler suggested has its application; however, a more pressing issue for businesses is in the area of high-level predictive performance measurements. SBPC provides an enhanced control charting system that addresses these needs; e.g., an individuals chart in conjunction with an appropriate probability plot for continuous data. Appropriate data transformation considerations need to be part of the overall SBPC implementation process. With SBPC, we are not limited to identifying out-of-control

- 38. conditions but also are able to report the capability of the process in regions of stability in terms that everyone can understand. With this form of reporting, when there is a recent region of stability, we can consider data from this region to be a random sample of the future. With the statistical business performance charting approach, we might be able to report that our process has been stable for the last 14 weeks with a prediction that 10 percent of our incoming calls will take longer than a goal of one minute. I expect that the one real issue behind this entire discussion is the idea of "what is good enough?" Wheeler shared a belief that a control charting method is "good enough" for a business even if it allows up to 2.5 percent of process measures to trigger a cause and corrective action effort that will not find a true cause. Wheeler goes so far as to relate the 95 percent confidence concept from hypothesis testing to imply that up to 5 percent false special cause detections are acceptable. Using the above-described concept of transforming process data where the transformation is appropriate for the process-data type will lead to process behavior charting that matches the sensitivity and false-cause

- 39. detection that we have all learned to expect when tracking normally distributed data in a typical manufacturing environment. Why would anyone want to have a process behavior chart that will be interpreted differently for each use in an organization? The answer should be clear: use transformations when they are appropriate and then your organization can interpret all control charts in the same manner. Why be "good enough" when you have the ability to be correct? The "to transform or not transform" issue addressed in this paper led to SBPC reporting and its advantages over the classical control-charting approach described by Wheeler. However, the potential for SBPC predictive reporting has much larger implications than reporting a widget

- 40. manufacturing process output. Traditional organizational performance measurement reporting systems have a table of numbers, stacked bar charts, pie charts, and red-yellow-green goal-based scorecards that provide only historical data and make no predictive statements. Using this form of metric reporting to run a business is not unlike driving a car by only looking at the rear view mirror, a dangerous practice. When predictive SBPC system reporting is used to track interconnected business process map functions, an alternative forward-looking dashboard performance reporting system becomes available. With this metric system, organizations can systematically evaluate future expected performance and make appropriate adjustments if they don't like what they see, not unlike looking out a car's windshield and turning the steering wheel or applying the brake if they don't like where they are headed. How SBPC can be integrated within a business system that analytically/innovatively determines strategies with the alignment of improvement projects that

- 41. positively impact the overall business will be described in a later article. ABOUT THE AUTHOR Forrest Breyfogle (New Paradigms). CEO and president of Smarter Solutions Inc., Forrest W. Breyfogle III is the creator of the integrated enterprise excellence (IEE) management system, which takes lean Six Sigma and the balanced scorecard to the next level. A professional engineer, he’s an ASQ fellow who serves on the board of advisors for the University of Texas Center for Performing Excellence. He received the 2004 Crosby

- 42. Medal for his book, Implementing Six Sigma.

- 43. Non-normal data: To Transform or Not to Transform Sometimes you need to transform non-normal data. In “Do You Have Leptokurtophobia?” Don Wheeler stated, “‘But the software suggests transforming the data!’ Such advice is simply another piece of confusion. The fallacy of transforming the data is as follows: “The first principle for understanding data is that no data have meaning apart from their context. Analysis begins with context, is driven by context, and ends with the results being interpreted in the context of the original data. This principle requires that there

- 44. must always be a link between what you do with the data and the original context for the data. Any transformation of the data risks breaking this linkage. If a transformation makes sense both in terms of the original data and the objectives of the analysis, then it will be okay to use that transformation. Only you as the user can determine when a transformation will make sense in the context of the data. (The software cannot do this because it will never know the context.) Moreover, since these sensible transformations will tend to be fairly simple in nature, they do not tend to distort the data.” I agree with Wheeler in that data transformations that make no physical sense can lead to the wrong action or nonaction; however, his following statement concerns me. “Therefore, we do not have to

- 45. pre-qualify our data before we place them on a process behavior chart. We do not need to check the data for normality, nor do we need to define a reference distribution prior to computing limits. Anyone who tells you anything to the contrary is simply trying to complicate your life unnecessarily.” I, too, do not want to complicate people’s lives unnecessarily; however, it is important that someone’s oversimplification does not cause inappropriate behavior. The following illustrates, from a high level, or what I call a 30,000-foot-level, when and how to apply transformations and present results to others so that the data analysis leads to the most appropriate action or nonaction. Statistical software makes the application of transformations simple.

- 46. Why track a process? There are three reasons for statistical tracking and reporting of transactional and manufacturing process outputs: 1. Is the process unstable or did something out of the ordinary occur, which requires action or no action? 2. Is the process stable and meeting internal and external customer needs? If so, no action is required. http://www.qualitydigest.com/inside/quality-insider-column/do-you-have-leptokurtophobia.html

- 47. 3. Is the process stable but does not meet internal and external customer needs? If so, process improvement efforts are needed. W. Edwards Deming, in his book, Out of the Crisis (Massachusetts Institute of Technology, 1982) stated, “We shall speak of faults of the system as common causes of trouble, and faults from fleeting events as special causes…. Confusion between common causes and special causes leads to frustration of everyone, and leads to greater variability and to higher costs, exactly contrary to what is needed. I should estimate that in my experience, most troubles and most possibilities for improvement add up to proportions something like this: 94 percent belong to the

- 48. system (responsibility of management), 6 percent special.” With this perspective, the second portion of item No. 1 could be considered a special-cause occurrence, while items No. 2 and 3 could be considered common-cause occurrence. A tracking system is needed for determining which of the three above categories best describes a given situation. Is the individuals control chart robust to non-normality? The following will demonstrate how an individuals control chart is not robust to non-normally distributed data. The implication of this is that an erroneous decision could be made relative to the three listed reasons, if an appropriate transformation is not made.

- 49. To enhance the process of selecting the most appropriate action or nonaction from the three listed reasons, an alternate control charting approach will be presented, accompanied by a procedure to describe process capability/performance reporting in terms that are easy to understand and visualize. Let’s consider a hypothetical application. A panel’s flatness, which historically had a 0.100 in. upper specification limit, was reduced by the customer to 0.035 in. Consider, for purpose of illustration, that the customer considered a manufacturing nonconformance rate above 1 percent to be unsatisfactory. Physical limitations are that flatness measurements cannot go below zero, and experience has shown

- 50. that common-cause variability for this type of situation often follows a log-normal distribution. The person who was analyzing the data wanted to examine the process at a 30,000-foot-level view to determine how well the shipped parts met customers’ needs. She thought that there might be differences between production machines, shifts of the day, material lot-to-lot thickness, and several other input variables. Because she wanted typical variability of these inputs as a source of

- 51. common-cause variability relative to the overall dimensional requirement, she chose to use an individuals control chart that had a daily subgrouping interval. She chose to track the flatness of one randomly selected, daily-shipped product during the last several years that the product had been produced. She understood that a log-normal distribution might not be a perfect fit for a 30,000-foot-level assessment, since a multimodal distribution could be present if there were a significant difference between machines, etc. However, these issues could be checked out later since the log-normal distribution might be close enough for this customer-product-receipt point of view. To model this situation, consider that 1,000 points were randomly generated from a log-normal

- 52. distribution with a location parameter of two, a scale parameter of one, and a threshold of zero (i.e., log normal 2.0, 1.0, 0). The distribution from which these samples were drawn is shown in figure 1. A normal probability plot of the 1,000 sample data points is shown in figure 2. Figure 1: Distribution From Which Samples Were Selected Figure 2: Normal Probability Plot of the Data
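The sampling step described above can be sketched in a few lines of Python. This is a minimal illustration, not the author's procedure: it assumes that "log normal 2.0, 1.0, 0" means the natural log of the data is normal with mean 2.0 and standard deviation 1.0, the seed is arbitrary, and sample skewness is used only as a rough numeric stand-in for the probability plots in figures 2 and 3.

```python
import math
import random
import statistics


def skewness(values):
    """Sample skewness: the average cubed z-score (population form)."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return sum(((v - mean) / sd) ** 3 for v in values) / len(values)


rng = random.Random(480)  # arbitrary seed, used only for repeatability

# Assumption: location 2.0 and scale 1.0 are the mean and standard
# deviation of ln(x); the threshold of zero means no shift is applied.
raw = [rng.lognormvariate(2.0, 1.0) for _ in range(1000)]
logged = [math.log(x) for x in raw]

print(f"skewness of raw data: {skewness(raw):.2f}")
print(f"skewness of ln(data): {skewness(logged):.2f}")
```

A strongly positive skew for the raw values and a skew near zero for the logged values is what a curved normal probability plot and a straight log-normal probability plot would lead one to expect.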

- 53. From figure 2, we reject the null hypothesis of normality both statistically, because of the low p-value, and visually, since the data on the normal probability plot do not follow a straight line. This is also logically consistent with the problem setting, where we do not expect a normal distribution for the output of such a process having a lower boundary of zero. A log-normal probability plot of the data is shown in figure 3.

- 54. Figure 3: Log-Normal Probability Plot of the Data From figure 3, we fail to reject the null hypothesis that the data are from a log-normal distribution, both statistically, since the p-value is not below our criterion of 0.05, and visually, since the data on the log-normal probability plot tend to follow a straight line. Hence, it is reasonable to model the distribution of this variable as log normal. If the individuals control chart is robust to data non-normality, an individuals control chart of the randomly generated log-normal data should be in statistical control. In the most basic sense, using the simplest run rule (a point is “out of control” when it is beyond the control limits), we would expect such data to give a false alarm on the average three or

- 55. four times out of 1,000 points. Further, we would expect false alarms below the lower control limit to be as likely as false alarms above the upper control limit. Figure 4 shows an individuals control chart of the randomly generated data. Figure 4: Individuals Control Chart of the Random Sample Data The individuals control chart in figure 4 shows many out-of-control points beyond the upper control

- 56. limit. In addition, the individuals control chart shows a physical lower boundary of zero for the data, which is well within the lower control limit of -22.9. If no transformation were needed when plotting non-normal data in a control chart, then we would expect to see a random scatter pattern within the control limits, which is not what the individuals control chart shows. Figure 5 shows a control chart using a Box-Cox transformation with a lambda value of zero, the appropriate transformation for log-normally distributed data. This control chart is much better behaved than the control chart in figure 4. Almost all 1,000 points in this individuals control chart are in statistical control. The number of false alarms is consistent with the design and definition of

- 57. the individuals control chart control limits. Figure 5: Individuals Control Chart With a Box-Cox Transformation Lambda Value of Zero Determining actions to take Previously three decision-making action options were described, where the first option was: 1. Is the process unstable or did something out of the ordinary occur, which requires action or no action?
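The contrast between figures 4 and 5 can be approximated with a short simulation. The sketch below is an illustration under stated assumptions rather than the author's analysis: limits are computed as the mean plus or minus 2.66 times the average moving range, only rule one (a point beyond the limits) is checked, the log-normal parameterization is the natural-log one, and the seed is arbitrary, so the counts will differ from the article's.

```python
import math
import random
import statistics


def individuals_limits(values):
    """Return (lcl, center, ucl) for an individuals (XmR) chart.

    Limits are center +/- 2.66 * average moving range (2.66 = 3 / 1.128).
    """
    center = statistics.fmean(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    half_width = 2.66 * statistics.fmean(moving_ranges)
    return center - half_width, center, center + half_width


def count_beyond_limits(values):
    """Count points outside the control limits (rule one only)."""
    lcl, _, ucl = individuals_limits(values)
    return sum(1 for v in values if v < lcl or v > ucl)


rng = random.Random(480)  # arbitrary seed
# Assumption: location 2.0 and scale 1.0 describe ln(x); threshold is zero.
raw = [rng.lognormvariate(2.0, 1.0) for _ in range(1000)]
# A Box-Cox transformation with lambda = 0 is the natural log.
transformed = [math.log(x) for x in raw]

raw_lcl, _, raw_ucl = individuals_limits(raw)
raw_signals = count_beyond_limits(raw)
transformed_signals = count_beyond_limits(transformed)

print(f"raw data: LCL = {raw_lcl:.1f}, UCL = {raw_ucl:.1f}, signals = {raw_signals}")
print(f"ln(data): signals = {transformed_signals}")
```

With untransformed data the lower limit falls below zero, where no flatness reading can occur, so every rule-one signal appears above the upper limit.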

- 58. For organizations that did not consider transforming data to address this question, as illustrated in figure 4, many investigations would need to be made where common-cause variability was being reacted to as though it were special cause. This can lead to much organizational firefighting and frustration, especially when considered on a plantwide or corporate basis with other control chart metrics. If data are not from a normal distribution, an individuals control chart can generate false signals, leading to unnecessary tampering with the process. For organizations that did consider transforming data to address this question, as illustrated in figure 5, there is no overreaction to common-cause variability as though it were special cause. For the transformed data analysis, let’s next address the other

- 59. questions: 2. Is the process stable and meeting internal and external customer needs? If so, no action is required. 3. Is the process stable but does not meet internal and external customer needs? If so, process improvement efforts are needed. When a process has a recent region of stability, we can make a statement not only about how the

- 60. process has performed in the stable region but also about the future, assuming nothing will change in the future either positively or negatively relative to the process inputs or the process itself. However, to do this, we need to have a distribution that adequately fits the data from which this estimate is to be made. For the previous specification limit of 0.100 in., figure 6 shows a good distribution fit and a best-estimate process capability/performance nonconformance rate of 0.5 percent (100.0 - 99.5). For this situation, we would respond positively to item number two since the percent nonconformance is below 1 percent; i.e., we determined that the process is stable and meeting internal and external customer needs of a less than 1-percent nonconformance rate; hence, no action is required.

- 61. However, from figure 6 we also note that we expect the nonconformance rate to increase to about 6.3 percent (100 - 93.7) with the new specification limit of 0.035 in. Because of this, we would now respond positively to item number three, since the nonconformance percentage is above the 1-percent criterion. That is, we determined that the process is stable but does not meet internal and external customer needs; hence, process improvement efforts are needed. This metric improvement need would be “pulling” for the creation of an improvement project.
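The two nonconformance estimates can be cross-checked against the population from which the sample was drawn. The sketch below is hedged: it assumes the simulated values are in thousandths of an inch (so 0.100 in. corresponds to 100 and 0.035 in. to 35), which the article does not state but which is the only scale on which a log normal (2.0, 1.0, 0) population gives rates of this size. The function name `percent_above` is illustrative only.

```python
import math
from statistics import NormalDist


def percent_above(spec_limit, location, scale):
    """Percent of a log-normal population (threshold zero) above spec_limit."""
    z = (math.log(spec_limit) - location) / scale
    return 100.0 * (1.0 - NormalDist().cdf(z))


# Assumption: data are in thousandths of an inch.
old_spec = percent_above(100.0, location=2.0, scale=1.0)  # 0.100 in.
new_spec = percent_above(35.0, location=2.0, scale=1.0)   # 0.035 in.

print(f"beyond 0.100 in.: {old_spec:.2f} percent")
print(f"beyond 0.035 in.: {new_spec:.2f} percent")
```

These population values, roughly 0.5 percent and 6 percent, sit close to the 0.5 and 6.3 percent read from the fitted sample in figure 6; the small gap is what one would expect from estimating the fit on 1,000 points.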

- 62. Figure 6: Log-Normal Plot of Data and Nonconformance Rate Determination for Specifications of 0.100 in. and 0.035 in.

- 63. Figure 7: Predictability Assessment Relative to a Specification of 0.035 in. It is important to present the results from this analysis in a format that is easy to understand, such as described in figure 7. With this approach, we demonstrate process predictability using a control chart in the left corner of the report-out and then use, when appropriate, a probability plot to describe graphically the variability of the continuous-response process with its demonstrated predictability statement. With this form of reporting, I suggest including a box at the bottom of the plots that nets out how the process is performing; e.g., with the new specification requirement of 0.035 in., our process is predictable with an approximate nonconformance rate of 6.3 percent.

- 64. A lean Six Sigma improvement project that follows a project define-measure-analyze-improve-control execution roadmap could be used to determine what should be done differently in the process so that the new customer requirements are met. Within this project it might be determined in the analyze phase that there is a statistically significant difference in production machines that now needs to be addressed because of the tightened 0.035 tolerance. This statistical difference between machines was probably also prevalent before the new specification requirement; however, this difference was not of practical importance since the customer requirement of 0.100 was being met at the specified customer frequency level of a less than 1-percent nonconformance rate.

- 65. Upon satisfactory completion of an improvement project, the 30,000-foot-level control chart would need to shift to a new level of stability that had a process capability/performance metric that is satisfactory relative to a customer 1 percent maximum nonconformance criterion. Generalized statistical assessment The specific distribution used in the prior example, log normal (2.0, 1.0, 0), has an average run length (ARL) for false rule-one errors of 28 points. The single sample used showed 33 out-of-control

- 66. points, close to the roughly 36 (1,000/28) that an ARL of 28 implies for 1,000 points. If we consider a less skewed log-normal distribution, log normal (4, 0.25, 0), the ARL for false rule-one errors rises to 101. Note that a normal distribution will have a false rule-one error ARL of around 250. The log-normal (4, 0.25, 0) distribution passes a normality test over half the time with samples of 50 points. In one simulation, a majority, 75 percent, of the false rule-one errors occurred on the samples that tested as non-normal. This result reinforces the conclusion that normality or a near-normal distribution is required for a reasonable use of an individuals chart or a significantly higher false rule-one error rate will occur. Conclusions
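The run lengths quoted in the generalized assessment can be approximated analytically. The sketch below is one way to do so under explicit assumptions, not the method the author used: control limits are taken as the population mean plus or minus (3 / 1.128) times the expected moving range, the expected moving range of two independent log-normal values is 2 × mean × erf(scale / 2), and limits are treated as known rather than estimated from a sample. It therefore lands near, but not exactly on, the quoted 28 and 101.

```python
import math
from statistics import NormalDist


def theoretical_arl(location, scale):
    """Approximate rule-one ARL of an individuals chart on log-normal data.

    Assumes a threshold of zero and limits based on long-run values of the
    mean and the average moving range.
    """
    mean = math.exp(location + scale ** 2 / 2.0)
    # Expected |X1 - X2| for two independent log-normal draws.
    expected_mr = 2.0 * mean * math.erf(scale / 2.0)
    half_width = (3.0 / 1.128) * expected_mr
    lcl, ucl = mean - half_width, mean + half_width

    std_normal = NormalDist()
    p_above = 1.0 - std_normal.cdf((math.log(ucl) - location) / scale)
    # A limit at or below zero can never be crossed by positive data.
    p_below = std_normal.cdf((math.log(lcl) - location) / scale) if lcl > 0 else 0.0
    return 1.0 / (p_above + p_below)


arl_skewed = theoretical_arl(2.0, 1.0)   # log normal (2.0, 1.0, 0)
arl_mild = theoretical_arl(4.0, 0.25)    # log normal (4, 0.25, 0)

print(f"ARL, log normal (2.0, 1.0, 0): {arl_skewed:.0f}")
print(f"ARL, log normal (4, 0.25, 0):  {arl_mild:.0f}")
```

A shorter ARL means more frequent false signals, so the more skewed distribution is the one that generates more unnecessary investigations.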

- 67. The output of a process is a function of its steps and input variables. Doesn’t it seem logical to expect some level of natural variability from input variables and the execution of process steps? If we agree to this presumption, shouldn’t we expect a large percentage of process output variability to have a natural state of fluctuation, that is, to be stable? To me this statement is true for many transactional and manufacturing processes, with the exception of things like naturally auto-correlated data situations such as the stock market. However, with traditional control charting methods, it is often concluded that the process is not stable even when logic tells us that we should expect stability. Why is there this disconnection between our belief and what traditional control charts tell us? The

- 68. reason is that often underlying control-chart-creation assumptions are not valid in the real world. Figures 4 and 5 illustrate one of these points where an appropriate data transformation is not made. The reason for tracking a process can be expressed as determining which actions or nonactions are most appropriate. 1. Is the process unstable or did something out of the ordinary occur, which requires action or no action?

- 69. 2. Is the process stable and meeting internal and external customer needs? If so, no action is required. 3. Is the process stable but does not meet internal and external customer needs? If so, process improvement efforts are needed. This article described why appropriate transformations from a physical point of view need to be a part of this decision-making process. The box at the bottom of figure 7 describes the state of the examined process in terms that everyone can understand; i.e., the process is predictable with an estimated 6.3-percent nonconformance rate. An organization gains much when this form of scorecard-value-chain reporting is used throughout

- 70. its enterprise and is part of its decision-making process and improvement project selection. ABOUT THE AUTHOR Forrest Breyfogle (New Paradigms). CEO and president of Smarter