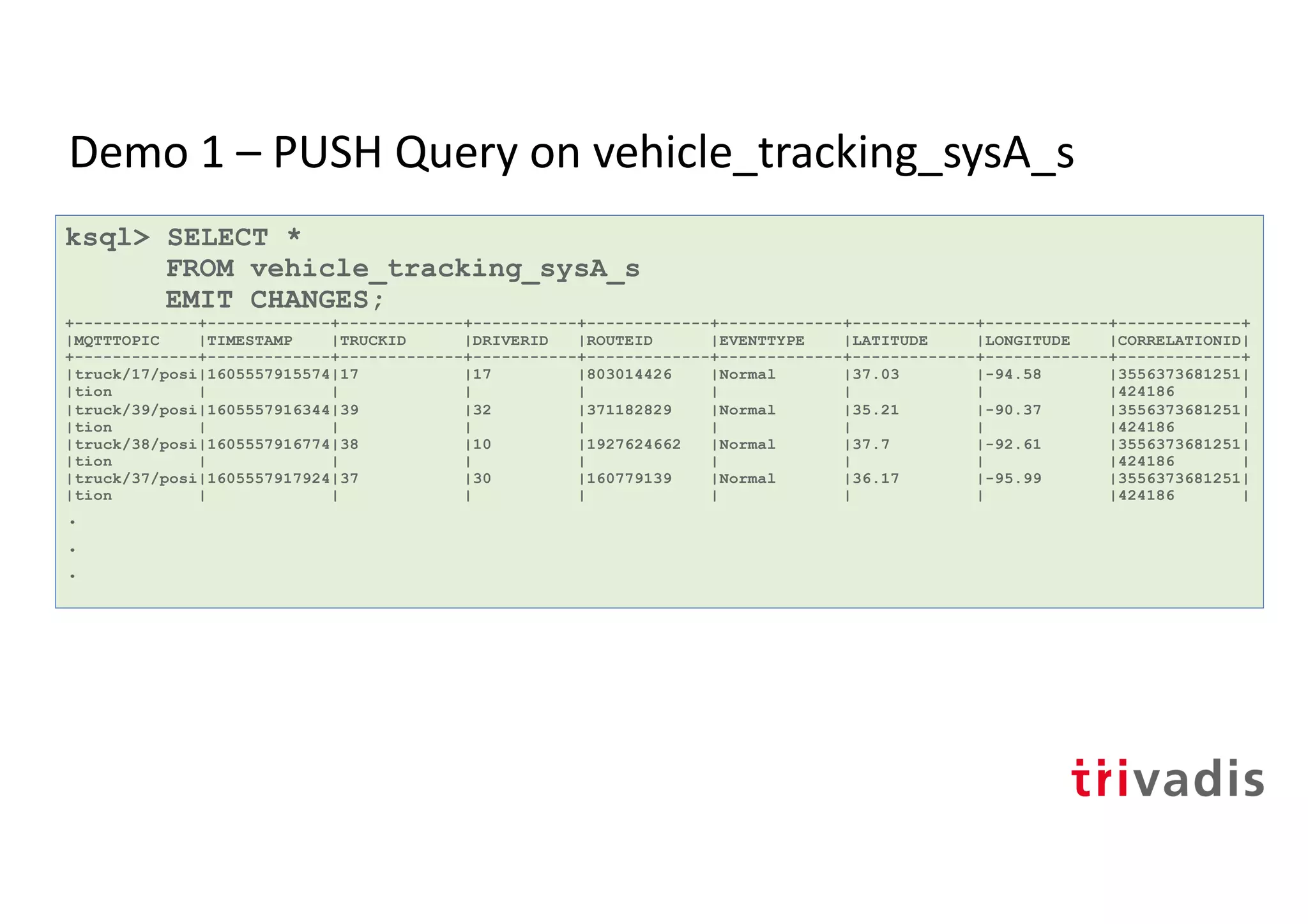

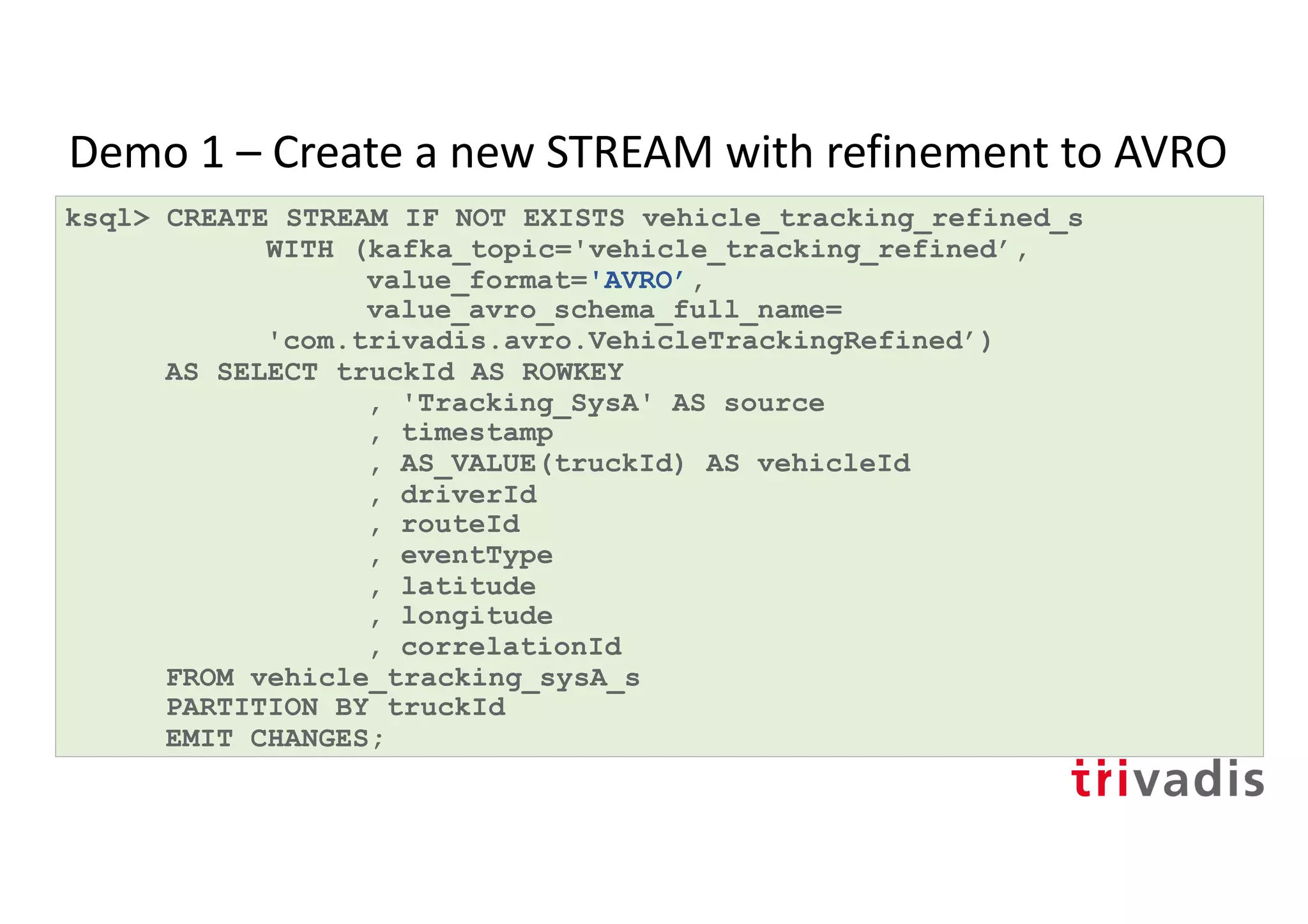

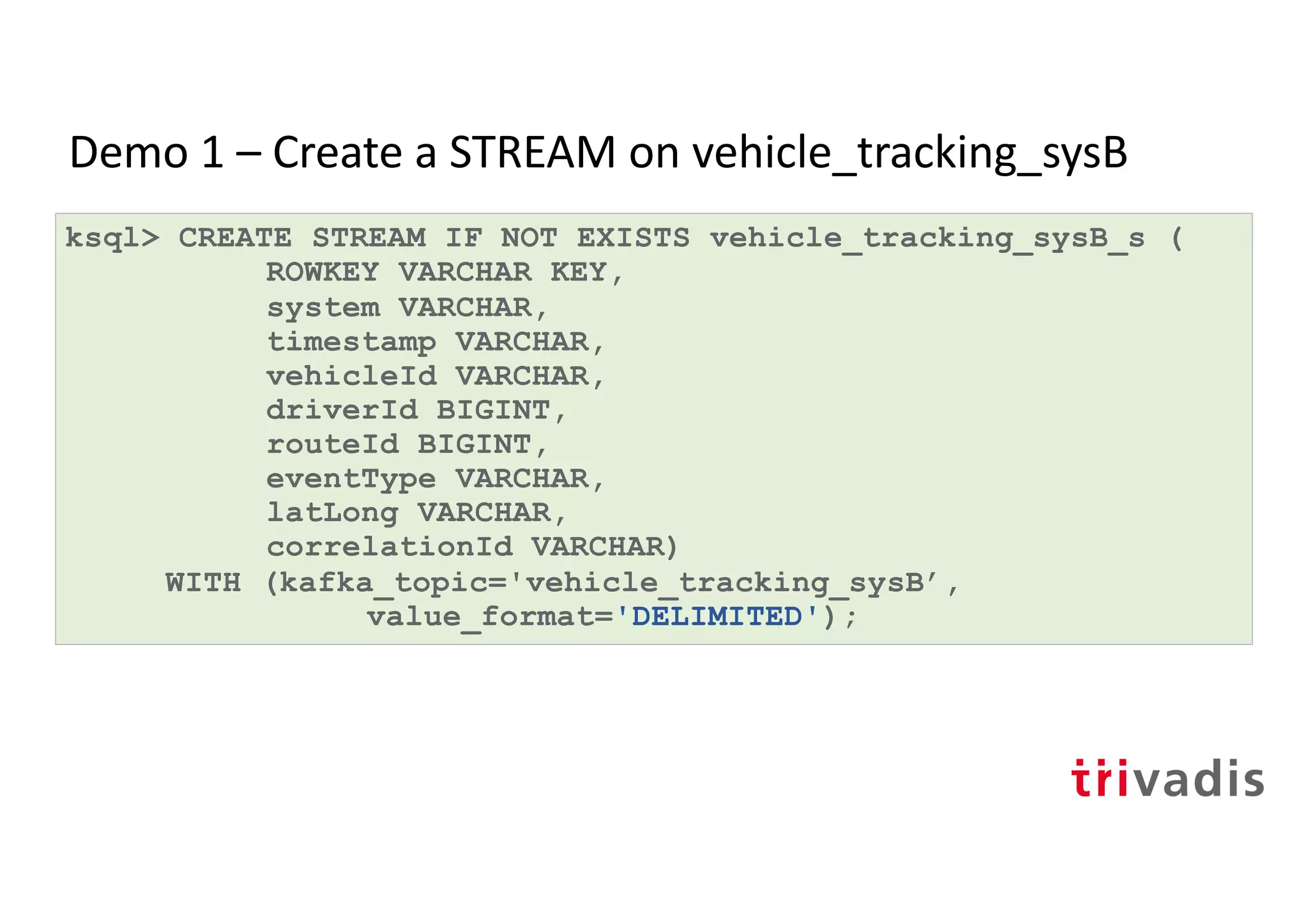

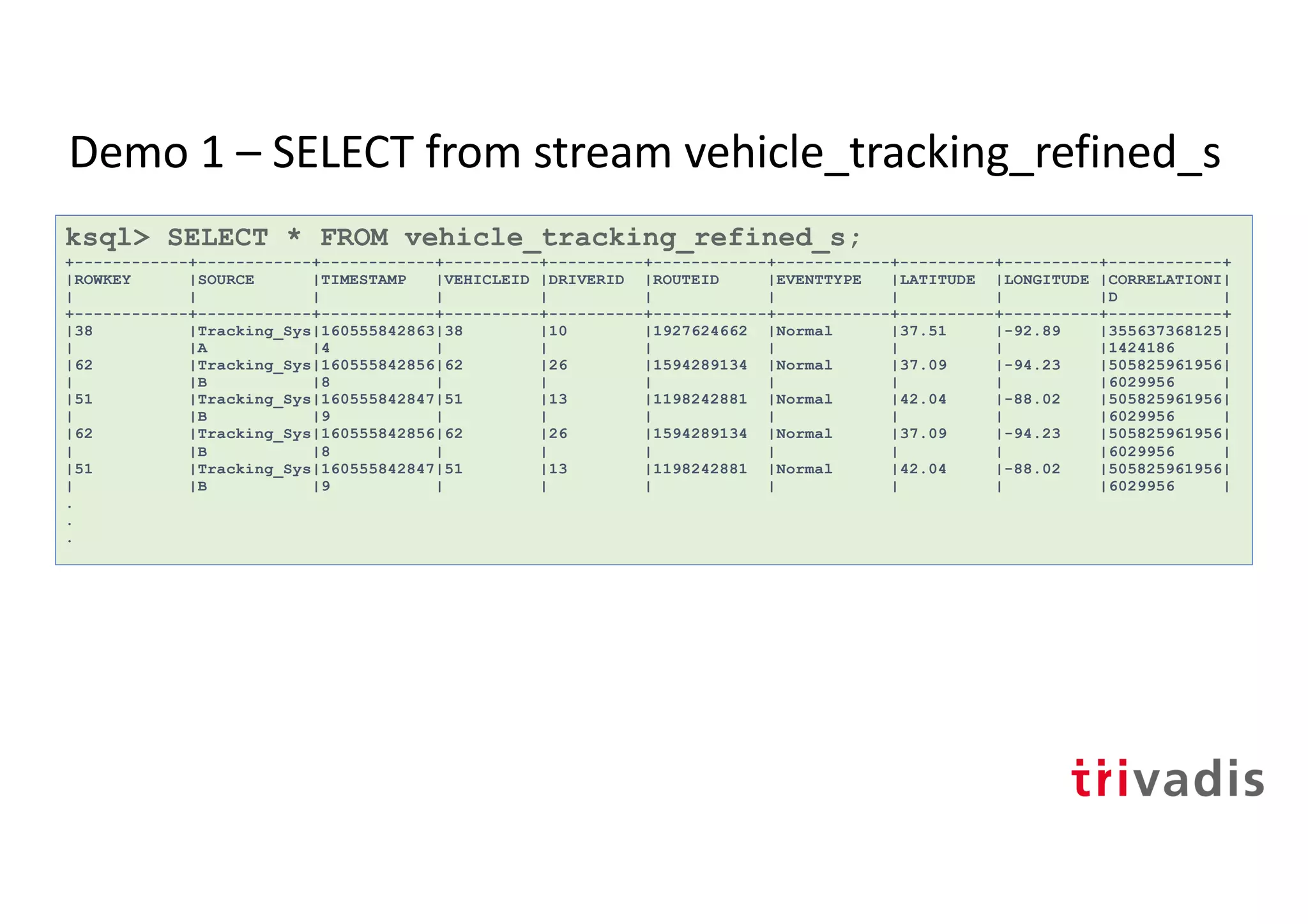

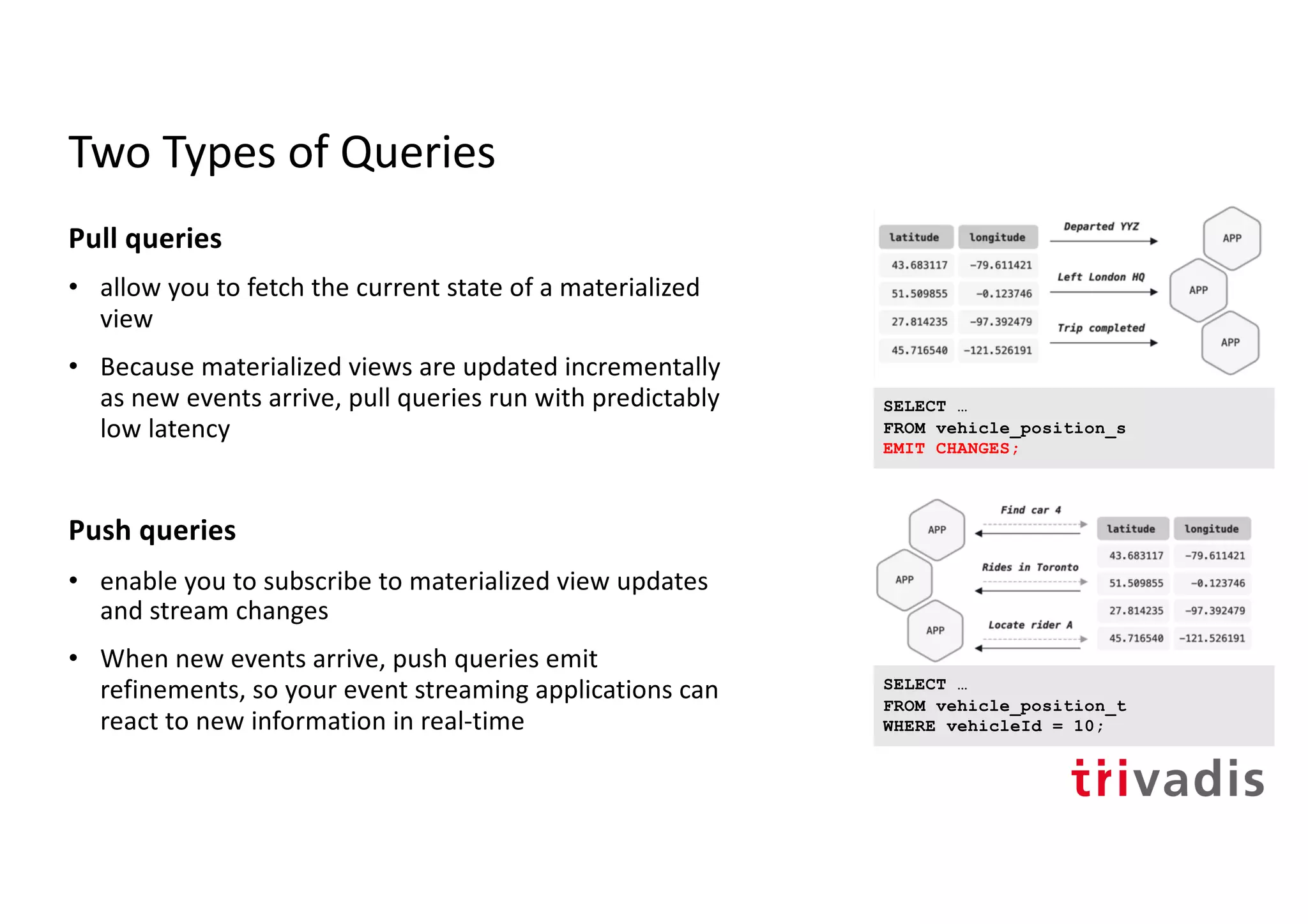

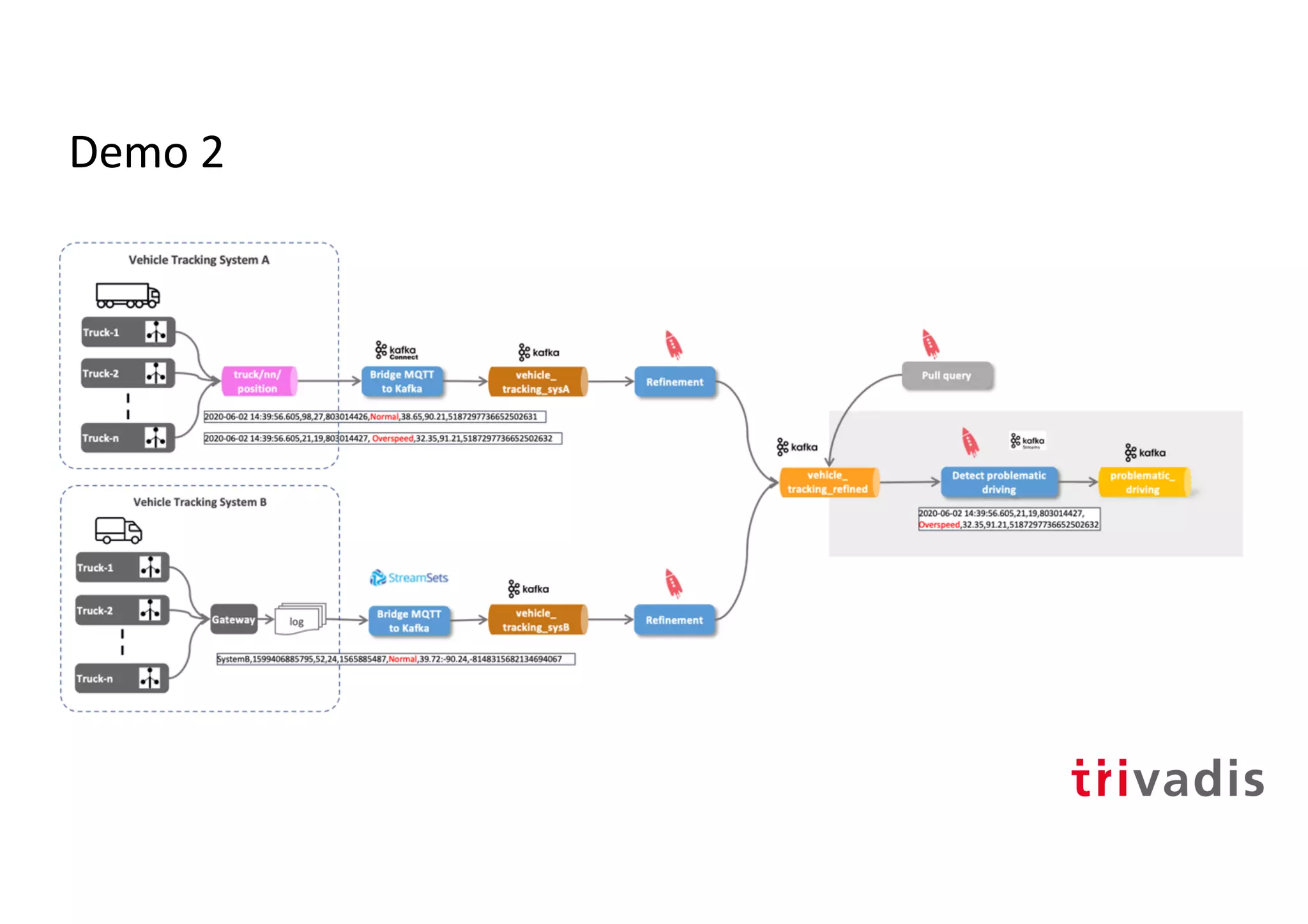

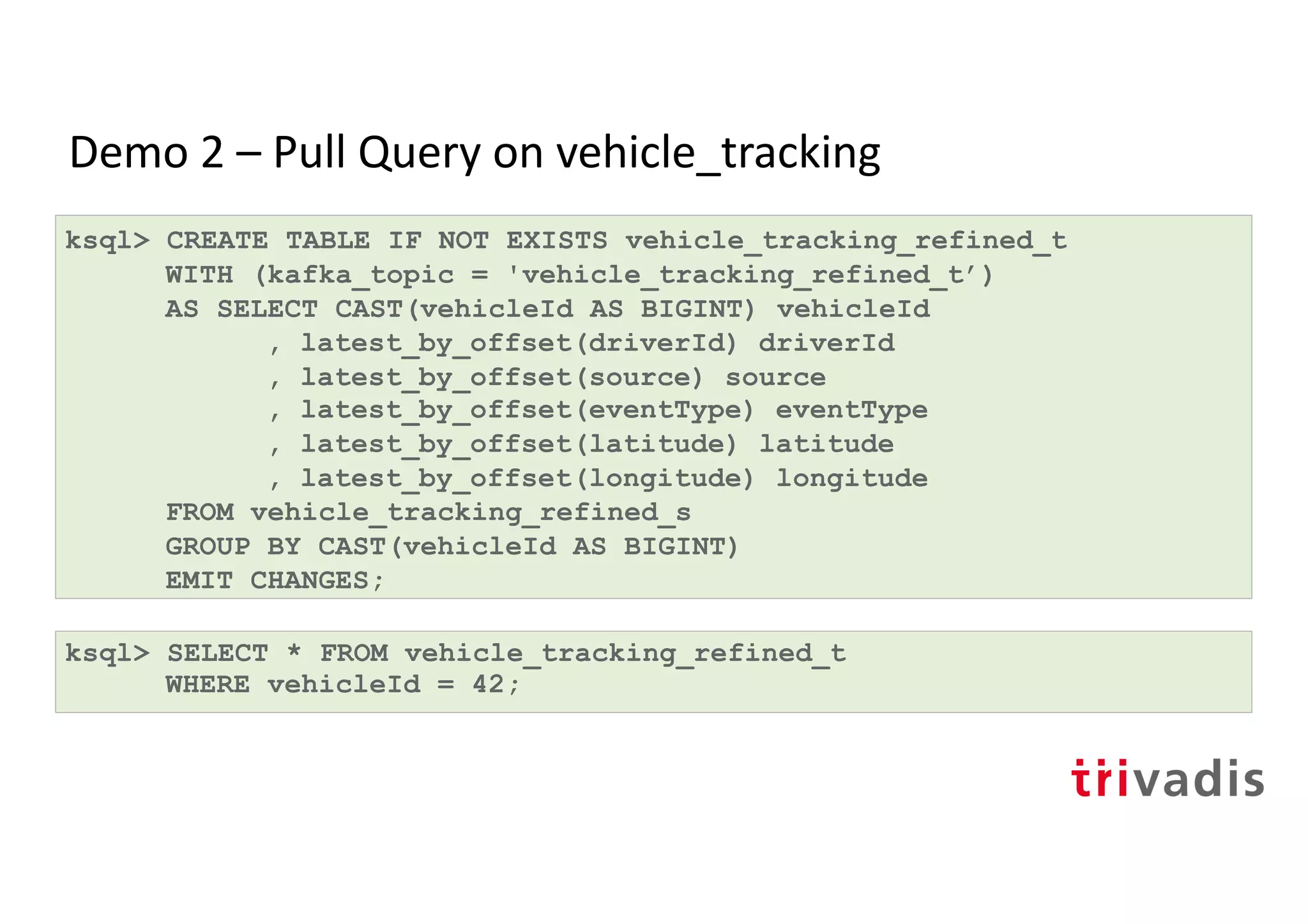

The document outlines the capabilities and features of ksqlDB, a streaming SQL engine for Apache Kafka that lets users process streams of data in a SQL dialect with minimal coding. It describes concepts such as streams, tables, and push/pull queries for real-time data handling, as well as how to create connectors and enrich data. It also walks through a series of examples demonstrating stream and table creation, queries, and data transformations.

![Demo 1 – INSERT INTO existing stream SELECT form other

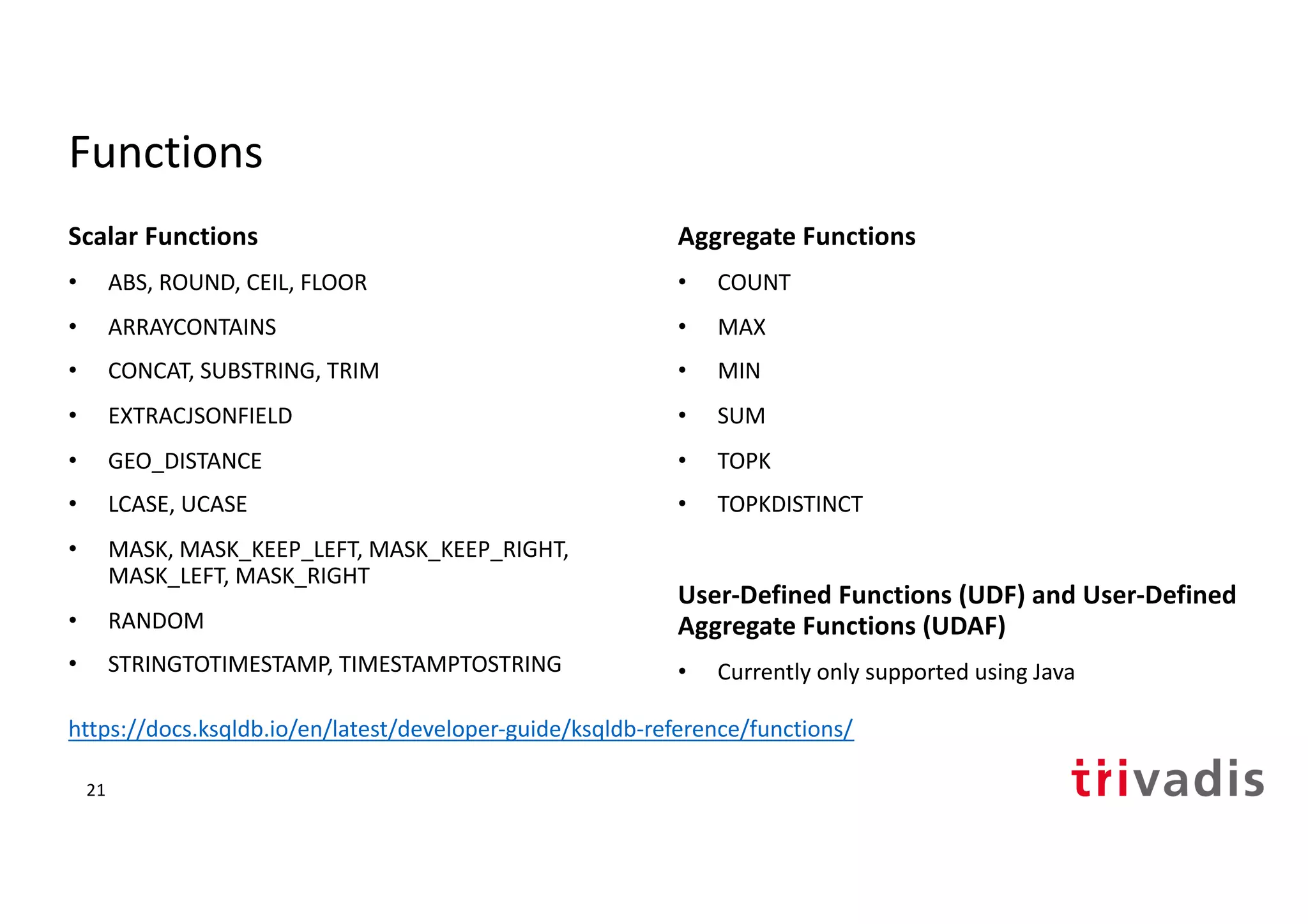

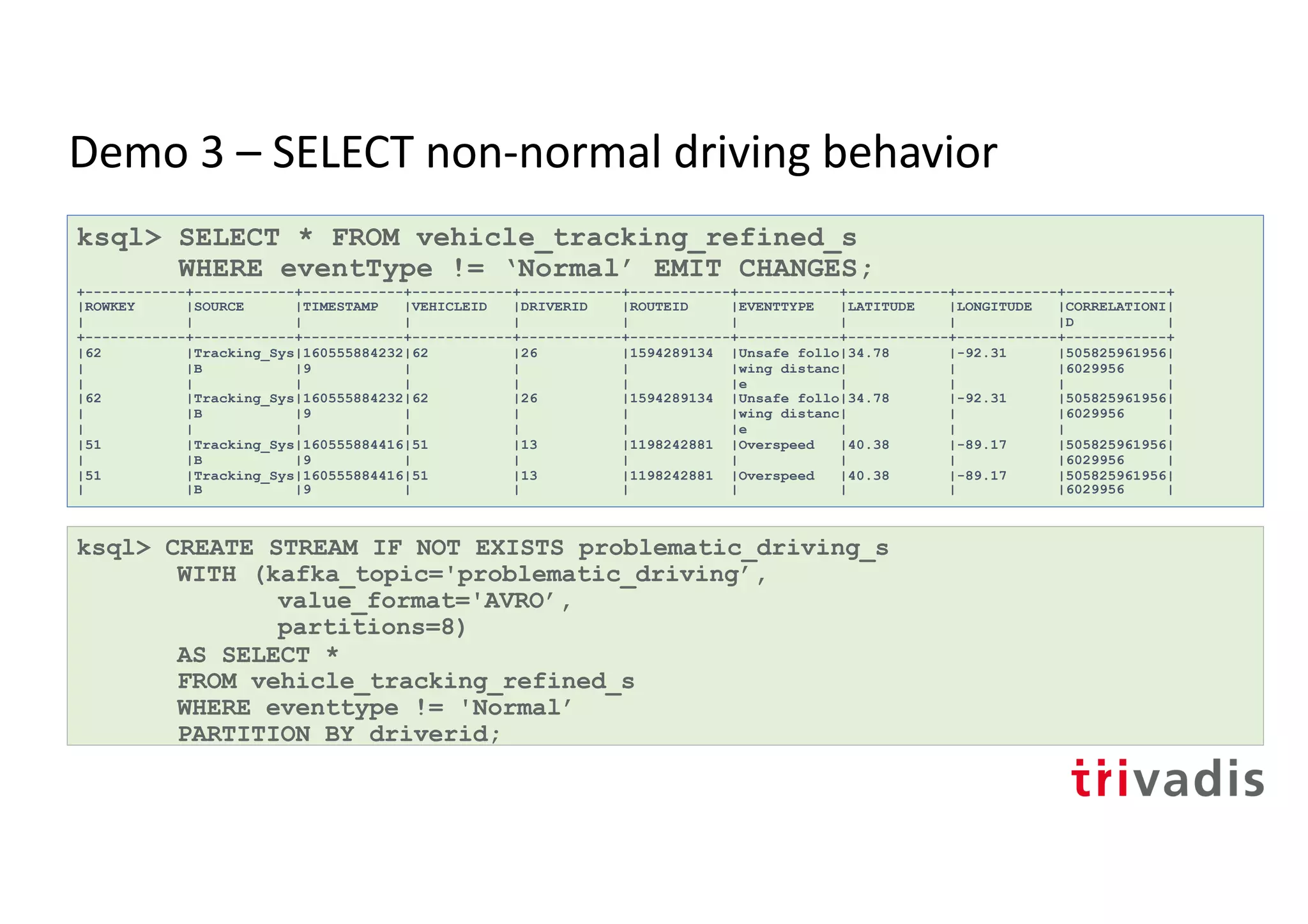

stream

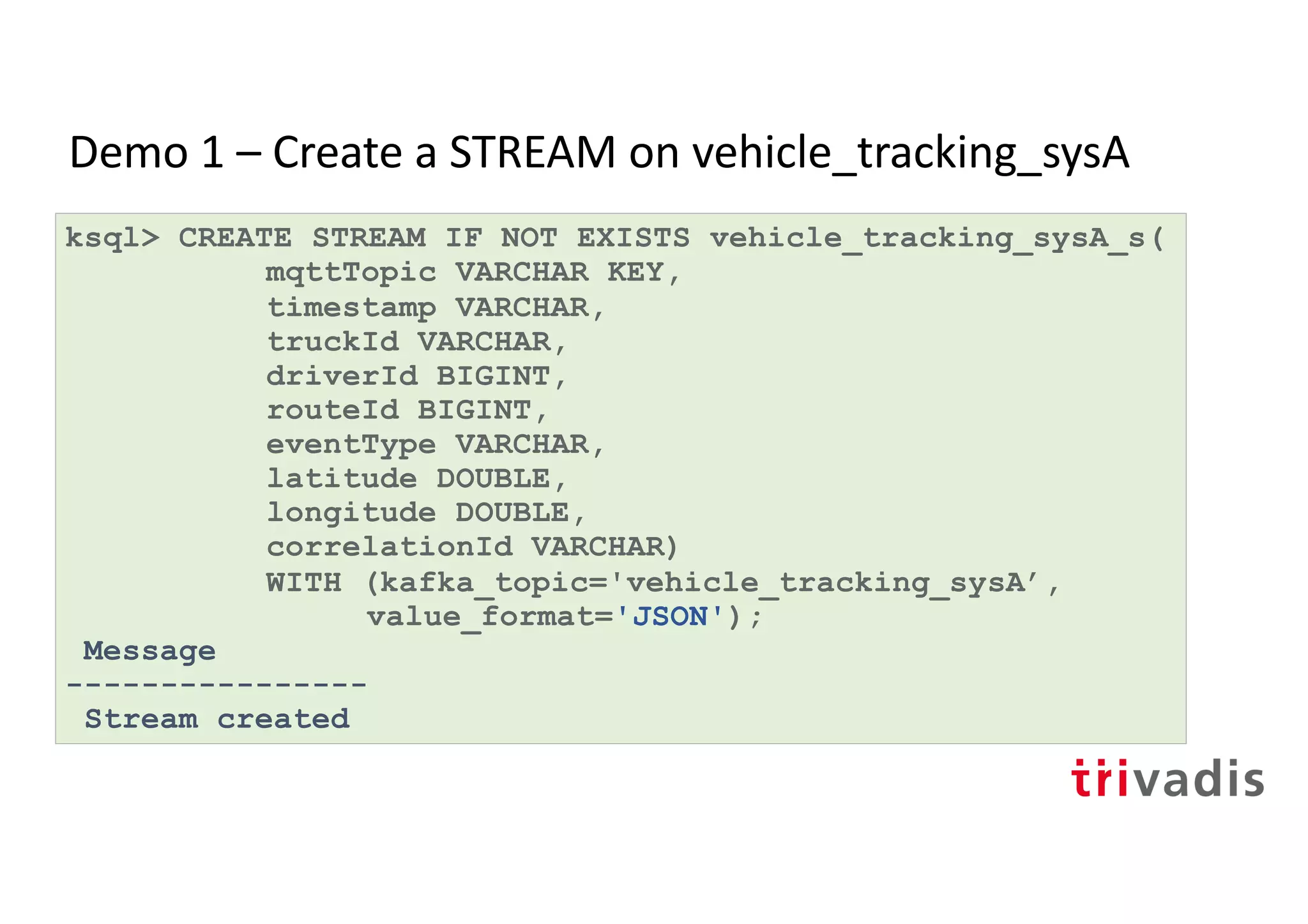

ksql> INSERT INTO vehicle_tracking_refined_s

SELECT ROWKEY

, 'Tracking_SysB' AS source

, timestamp

, vehicleId

, driverId

, routeId

, eventType

, CAST(split(latLong,':')[1] AS DOUBLE) AS latitude

, CAST(split(latLong,':')[2] AS DOUBLE) AS longitude

, correlationId

FROM vehicle_tracking_sysB_s

EMIT CHANGES;](https://image.slidesharecdn.com/ksqldb-201118112142/75/ksqlDB-Stream-Processing-simplified-15-2048.jpg)
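Once Demo 1's INSERT INTO query is running, one way to confirm that system B's events are arriving in the refined stream is a push query filtered on the `source` column that the demo adds. This is a minimal sketch, assuming the demo statements ran successfully; the `SET` only makes the CLI session read the stream from the beginning instead of waiting for new events.

```sql
-- Read from the start of the topic instead of only new records (CLI session setting).
SET 'auto.offset.reset' = 'earliest';

-- Show only the rows copied over from system B by the INSERT INTO query.
SELECT vehicleId, latitude, longitude, correlationId
FROM vehicle_tracking_refined_s
WHERE source = 'Tracking_SysB'
EMIT CHANGES
LIMIT 5;
```

Because of `LIMIT 5` the query ends on its own after five rows; without it, press CTRL-C to stop it.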

![CREATE STREAM

Create a new stream, backed by a Kafka topic, with the specified columns and properties

Supported column data types:

• BOOLEAN, INTEGER, BIGINT, DOUBLE, VARCHAR or STRING

• ARRAY<ArrayType>

• MAP<VARCHAR, ValueType>

• STRUCT<FieldName FieldType, ...>

Supports the following serialization formats: DELIMITED (CSV), JSON, AVRO

• KSQL adds the implicit columns ROWTIME and ROWKEY to every stream

CREATE STREAM stream_name ( { column_name data_type } [, ...] )

WITH ( property_name = expression [, ...] );

](https://image.slidesharecdn.com/ksqldb-201118112142/75/ksqlDB-Stream-Processing-simplified-17-2048.jpg)
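As an illustration, the system B stream read by Demo 1 could be declared roughly as follows. The topic name, the column types and the JSON format are assumptions, not taken from the demo. The key column is declared explicitly because ksqlDB 0.10 and later no longer add `ROWKEY` implicitly; on older KSQL versions, which add it automatically, that line is not needed.

```sql
-- Hypothetical declaration of the system B source stream used in Demo 1.
CREATE STREAM vehicle_tracking_sysB_s (
    rowkey        VARCHAR KEY,  -- explicit key column (needed on ksqlDB 0.10+)
    timestamp     VARCHAR,
    vehicleId     BIGINT,
    driverId      VARCHAR,
    routeId       BIGINT,
    eventType     VARCHAR,
    latLong       VARCHAR,      -- 'latitude:longitude', split apart by Demo 1
    correlationId VARCHAR
) WITH (
    KAFKA_TOPIC  = 'vehicle_tracking_sysB',  -- assumed topic name; must already exist
    VALUE_FORMAT = 'JSON'
);
```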

![SELECT (Push Query)

Pushes a continuous stream of updates from a ksqlDB stream or table to the client

The result of this statement is not persisted in a Kafka topic; it is only printed to the console

This is a continuous query; to stop it in the CLI, press CTRL-C

• from_item is one of the following: stream_name, table_name

SELECT select_expr [, ...]

FROM from_item

[ LEFT JOIN join_table ON join_criteria ]

[ WINDOW window_expression ]

[ WHERE condition ]

[ GROUP BY grouping_expression ]

[ HAVING having_expression ]

EMIT output_refinement

[ LIMIT count ];](https://image.slidesharecdn.com/ksqldb-201118112142/75/ksqlDB-Stream-Processing-simplified-18-2048.jpg)
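A push query can also aggregate on the fly. The sketch below assumes the `vehicle_tracking_refined_s` stream from Demo 1 and counts events per vehicle in one-minute tumbling windows; the window size and the `LIMIT` are arbitrary choices for illustration.

```sql
-- Continuously emit an updated count per vehicle and one-minute window.
SELECT vehicleId,
       COUNT(*) AS event_count
FROM vehicle_tracking_refined_s
WINDOW TUMBLING (SIZE 1 MINUTE)
GROUP BY vehicleId
EMIT CHANGES
LIMIT 10;  -- stop after ten updates instead of waiting for CTRL-C
```

Each new event re-emits the updated count for its window, so the same vehicle can appear several times in the output.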

![CREATE STREAM … AS SELECT …

Create a new stream along with the corresponding Kafka topic, and continuously write the result of the SELECT query into the stream and its topic

WINDOW clause can only be used if the from_item is a stream

CREATE STREAM stream_name

[WITH ( property_name = expression [, ...] )]

AS SELECT select_expr [, ...]

FROM from_stream [ LEFT | FULL | INNER ]

JOIN [join_table | join_stream]

[ WITHIN [(before TIMEUNIT, after TIMEUNIT) | N TIMEUNIT] ] ON join_criteria

[ WHERE condition ]

[PARTITION BY column_name];](https://image.slidesharecdn.com/ksqldb-201118112142/75/ksqlDB-Stream-Processing-simplified-20-2048.jpg)
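Enriching a stream with reference data is a typical use of the JOIN form. This is a minimal sketch, assuming Demo 1's `vehicle_tracking_refined_s` and a hypothetical table `driver_t` whose VARCHAR primary key `id` holds the same values as the stream's `driverId`; the new stream and topic names are likewise made up.

```sql
-- Persistent query: every tracking event gets the driver's name attached.
CREATE STREAM vehicle_tracking_enriched_s
  WITH (KAFKA_TOPIC = 'vehicle_tracking_enriched')
AS SELECT t.driverId,
          t.vehicleId,
          d.first_name,
          d.last_name,
          t.latitude,
          t.longitude
FROM vehicle_tracking_refined_s t
LEFT JOIN driver_t d ON t.driverId = d.id;
```

With LEFT JOIN, events whose driver is not in `driver_t` are still emitted, with NULL name columns; an INNER JOIN would drop them instead.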

![INSERT INTO … SELECT …

Stream the result of the SELECT query into an existing stream and its underlying topic

The schema and partitioning column produced by the query must match the stream’s schema and key

If they are incompatible with the stream, the statement returns an error

stream_name and from_item must both refer to a stream. Tables are not supported!

CREATE STREAM stream_name ...;

INSERT INTO stream_name

SELECT select_expr [, ...]

FROM from_stream

[ WHERE condition ]

[ PARTITION BY column_name ];](https://image.slidesharecdn.com/ksqldb-201118112142/75/ksqlDB-Stream-Processing-simplified-21-2048.jpg)
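A common pattern is to create the target stream from the first source with CREATE STREAM … AS SELECT and then append further sources with INSERT INTO, as Demo 1 does. The stream names below are hypothetical, and both sources are assumed to have `vehicleId BIGINT` and `eventType VARCHAR` columns, so the schema and key produced by the two queries match.

```sql
-- 1) Create the merged stream (and its topic) from the first source.
CREATE STREAM vehicle_events_all_s AS
SELECT vehicleId, eventType
FROM vehicle_events_sysA_s
PARTITION BY vehicleId;

-- 2) Append the second source: same columns, types and key as step 1.
INSERT INTO vehicle_events_all_s
SELECT vehicleId, eventType
FROM vehicle_events_sysB_s
PARTITION BY vehicleId;
```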

![SELECT (Pull Query)

Pulls the current value from the materialized table and terminates

The result of this statement isn't persisted in a Kafka topic and is printed out only in the console

Pull queries let you fetch the current state of a materialized view

They're a great match for request/response flows and can be used with the ksqlDB REST API

SELECT select_expr [, ...]

FROM aggregate_table

WHERE key_column = key

[ AND window_bounds ];](https://image.slidesharecdn.com/ksqldb-201118112142/75/ksqlDB-Stream-Processing-simplified-25-2048.jpg)
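Pull queries need a materialized table to read from, typically one built by an aggregation. This sketch assumes ksqlDB 0.10 or later (where the key column keeps its own name instead of `ROWKEY`) and Demo 1's `vehicle_tracking_refined_s` with a BIGINT `vehicleId`; the table name and the key value 42 are hypothetical.

```sql
-- Persistent query that materializes a running count per vehicle.
CREATE TABLE vehicle_event_counts_t AS
SELECT vehicleId,
       COUNT(*) AS event_count
FROM vehicle_tracking_refined_s
GROUP BY vehicleId;

-- Pull query: return the current count for one vehicle, then terminate.
SELECT vehicleId, event_count
FROM vehicle_event_counts_t
WHERE vehicleId = 42;
```

For request/response use, the same pull query can be submitted through the REST API's `/query` endpoint instead of the CLI.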

![CREATE CONNECTOR

Create a new connector in the Kafka Connect cluster with the configuration passed in the WITH clause

Kafka Connect is an open source component of Apache Kafka that simplifies loading and exporting data between Kafka and external systems

ksqlDB provides functionality to manage and integrate with Connect

CREATE { SOURCE | SINK } CONNECTOR [IF NOT EXISTS] connector_name

WITH ( property_name = expression [, ...] );

Source: ksqlDB Documentation](https://image.slidesharecdn.com/ksqldb-201118112142/75/ksqlDB-Stream-Processing-simplified-32-2048.jpg)
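For example, a JDBC source connector that copies a database table into Kafka could be created from ksqlDB as below. This assumes the Confluent JDBC connector plugin is installed in the Connect cluster that ksqlDB is configured to use; the connection URL, credentials, table and connector names are placeholders.

```sql
-- Source connector: poll the (hypothetical) driver table and write new rows to Kafka.
CREATE SOURCE CONNECTOR jdbc_driver_source WITH (
    'connector.class'          = 'io.confluent.connect.jdbc.JdbcSourceConnector',
    'connection.url'           = 'jdbc:postgresql://postgres:5432/fleet',  -- placeholder
    'connection.user'          = 'fleet',                                  -- placeholder
    'connection.password'      = 'fleet-secret',                           -- placeholder
    'table.whitelist'          = 'driver',
    'mode'                     = 'incrementing',  -- detect new rows by an increasing id
    'incrementing.column.name' = 'id',
    'topic.prefix'             = 'jdbc_'          -- rows go to topic jdbc_driver
);

-- List the connectors known to the Connect cluster and their state.
SHOW CONNECTORS;
```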

![CREATE TABLE

Create a new table with the specified columns and properties

Supports same data types as CREATE STREAM

KSQL adds the implicit columns ROWTIME and ROWKEY to every table as well

KSQL currently has the following requirements for creating a table from a Kafka topic

• message key must also be present as a field/column in the Kafka message value

• message key must be in VARCHAR aka STRING format

CREATE TABLE table_name ( { column_name data_type } [, ...] )

WITH ( property_name = expression [, ...] );](https://image.slidesharecdn.com/ksqldb-201118112142/75/ksqlDB-Stream-Processing-simplified-33-2048.jpg)
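A table declaration mirrors CREATE STREAM. The sketch below uses ksqlDB 0.10+ syntax, where the key column is marked `PRIMARY KEY` and read from the Kafka message key, so the KSQL requirement above to duplicate the key in the message value no longer applies; it still uses a VARCHAR key. Table, topic and column names are hypothetical.

```sql
-- Hypothetical driver reference table: latest row per driver id.
CREATE TABLE driver_t (
    id         VARCHAR PRIMARY KEY,  -- taken from the Kafka message key
    first_name VARCHAR,
    last_name  VARCHAR,
    available  BOOLEAN
) WITH (
    KAFKA_TOPIC  = 'driver',  -- assumed existing topic, keyed by driver id
    VALUE_FORMAT = 'JSON'
);
```

Each new message for an existing key replaces that driver's row, and a message with a null value (tombstone) deletes it.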