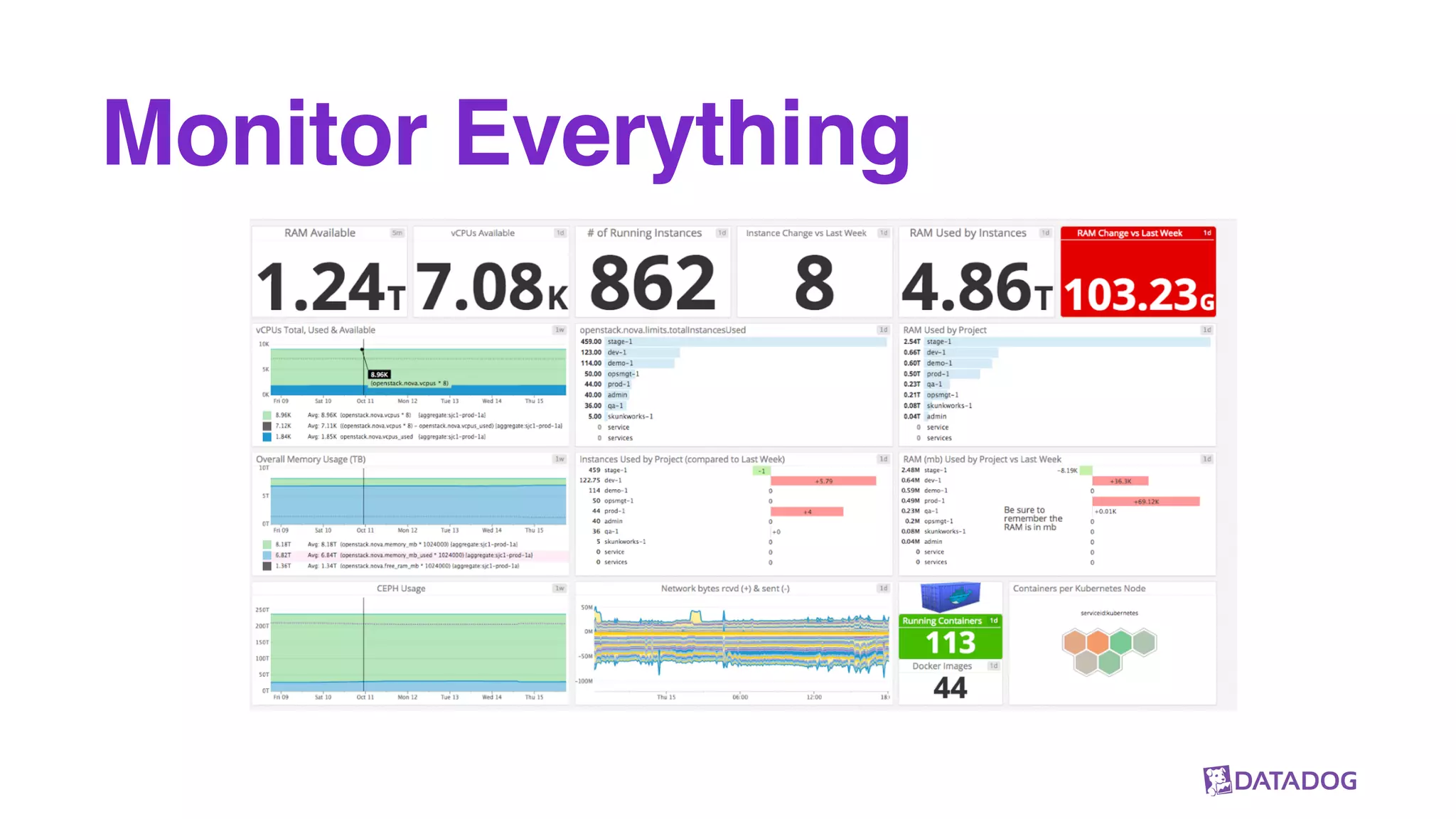

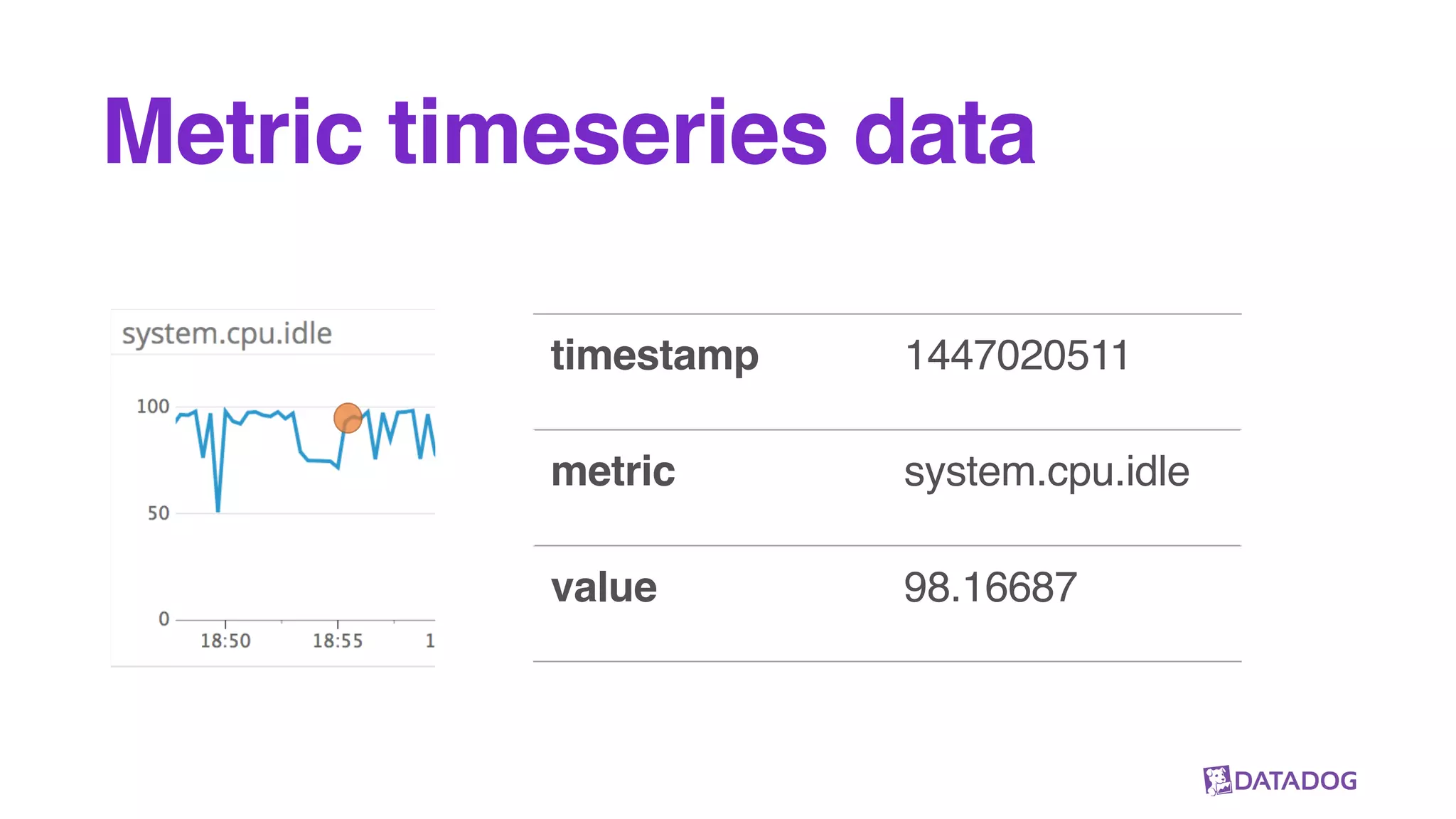

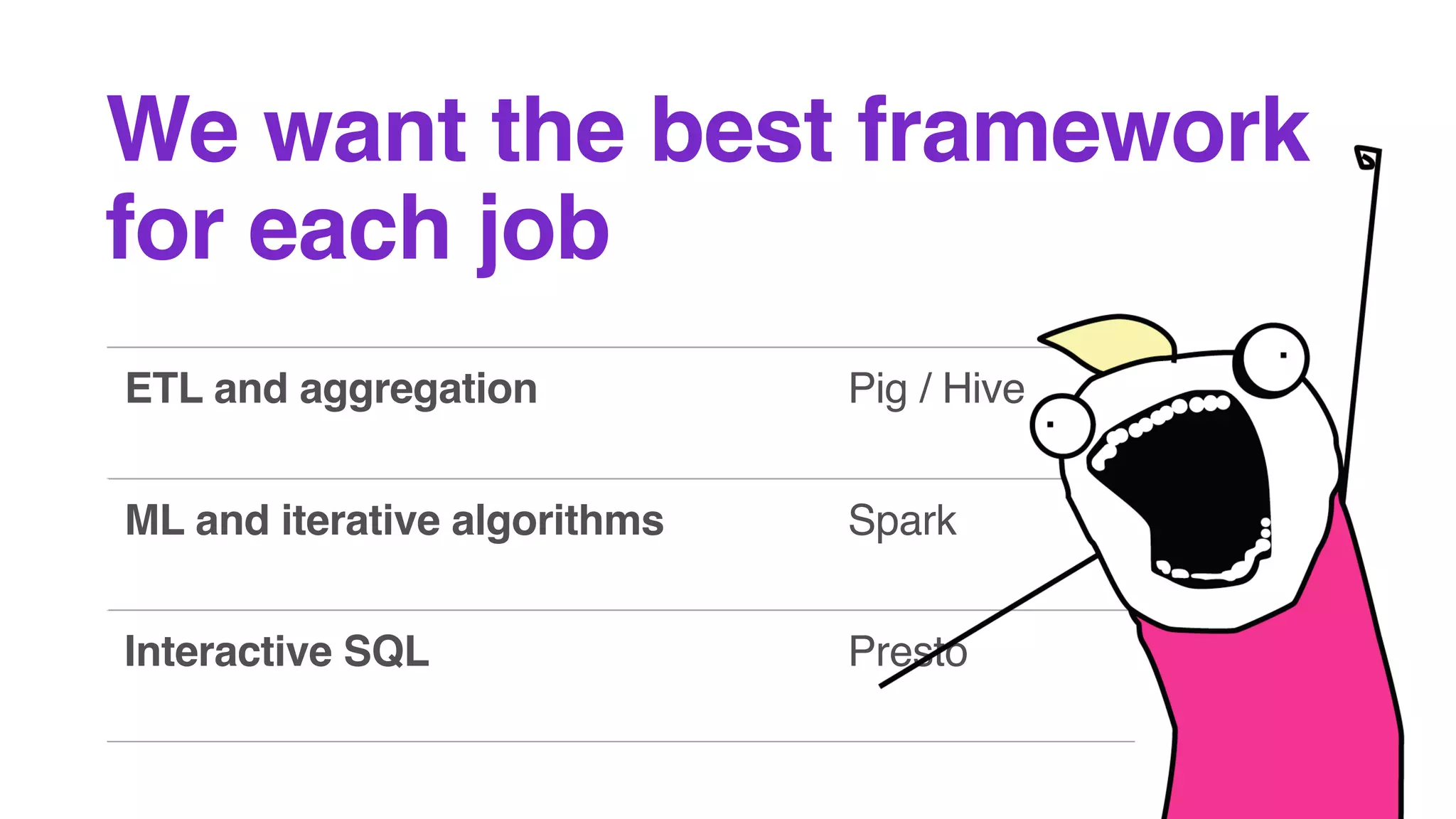

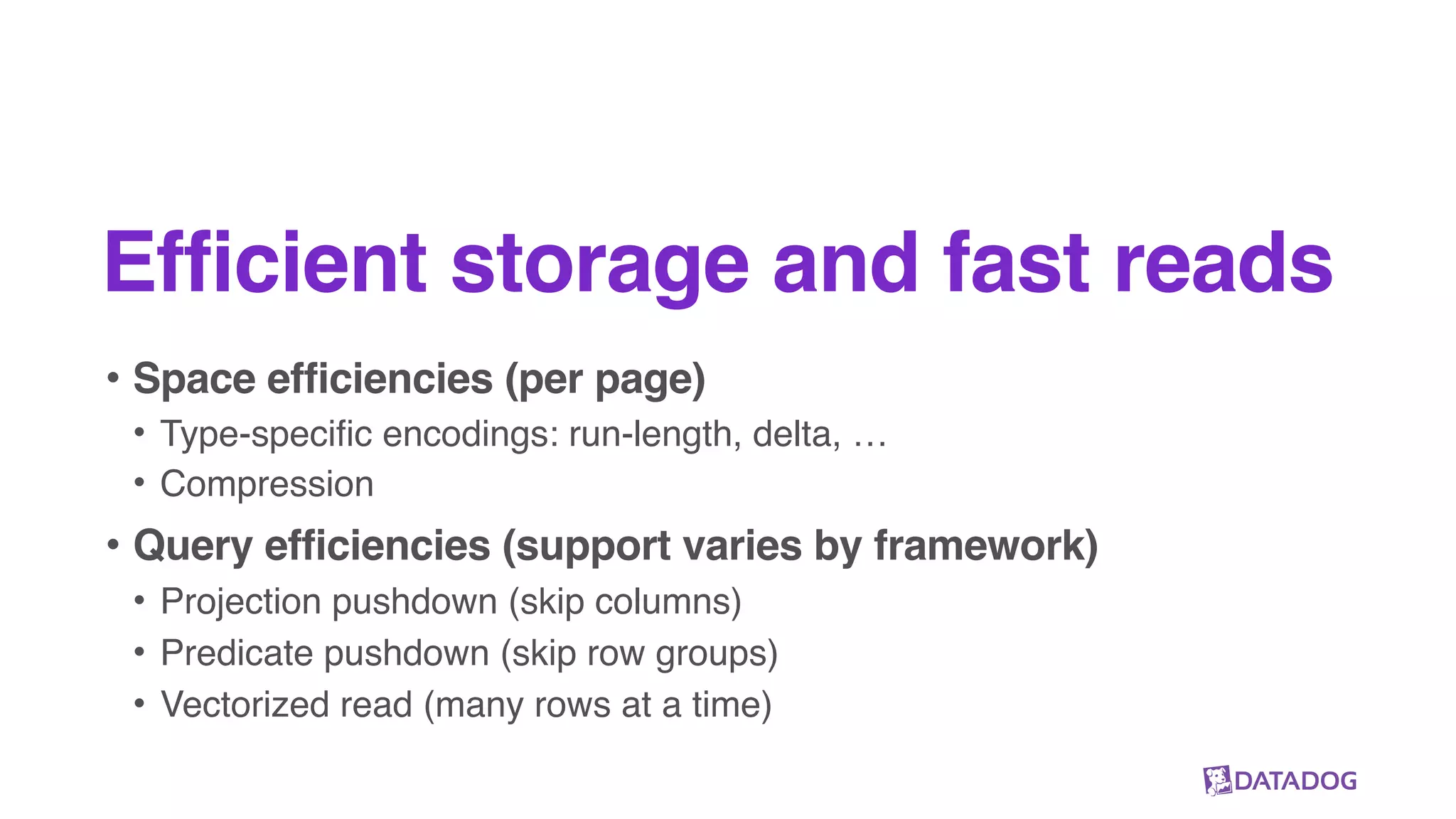

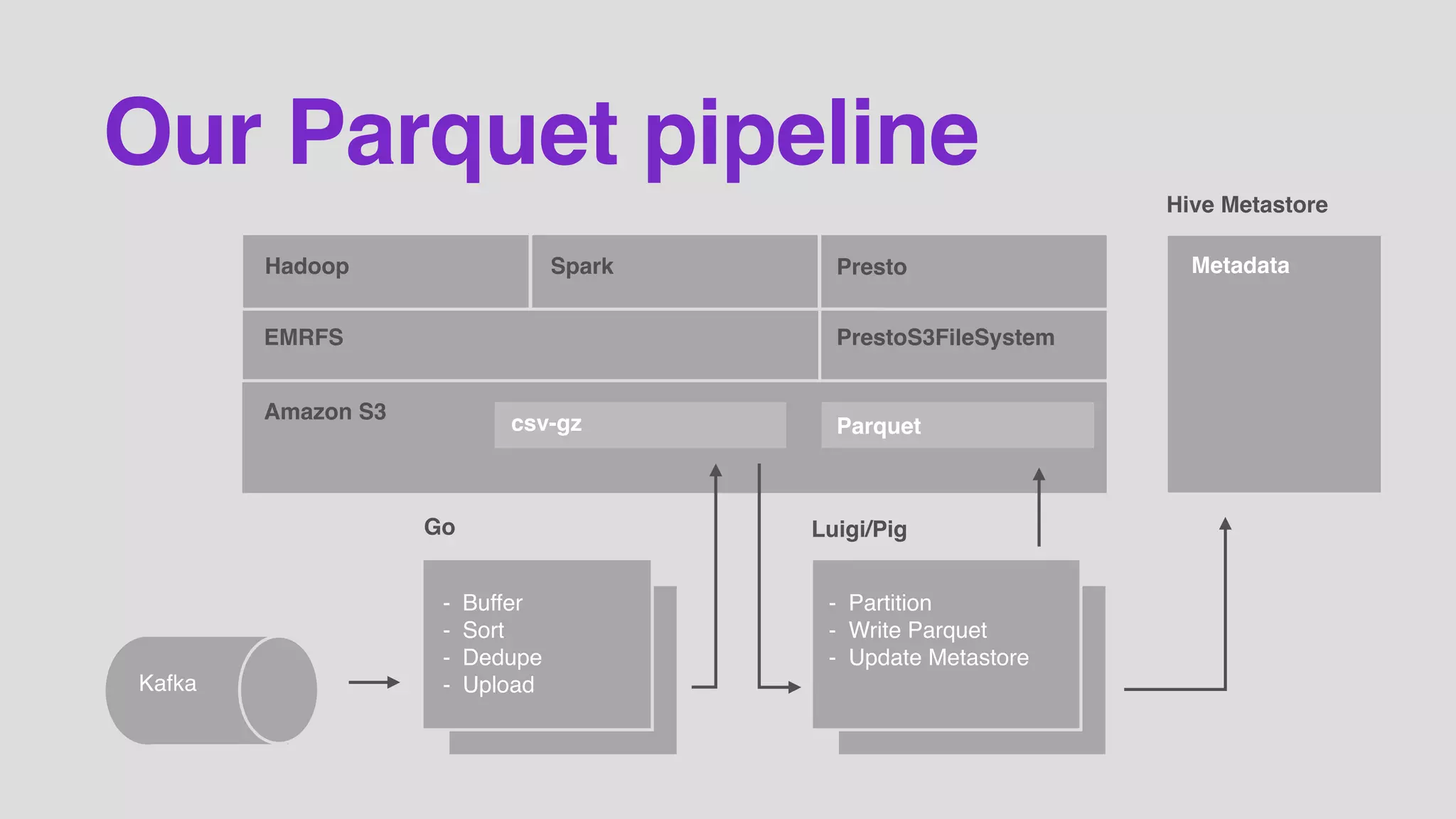

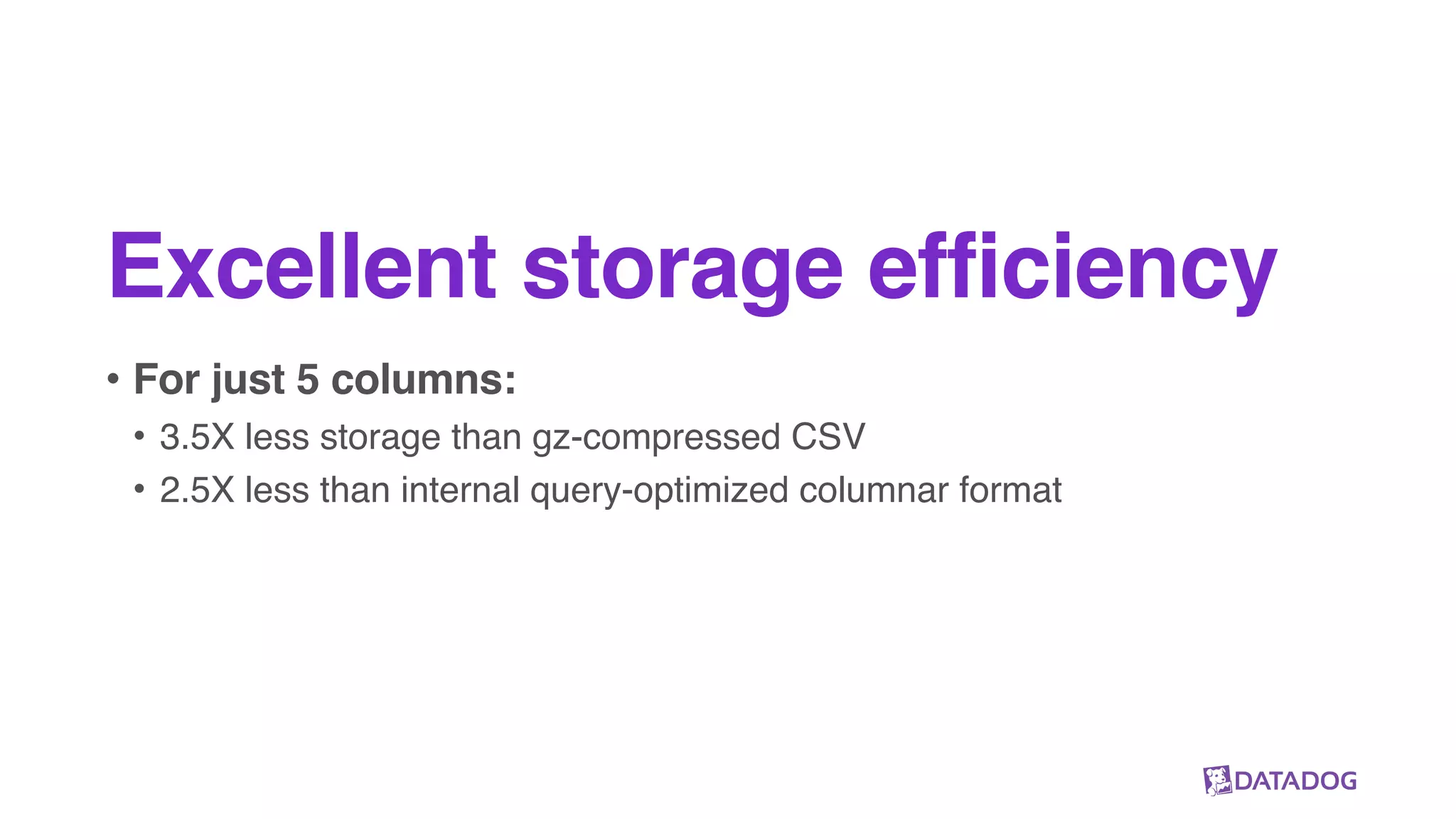

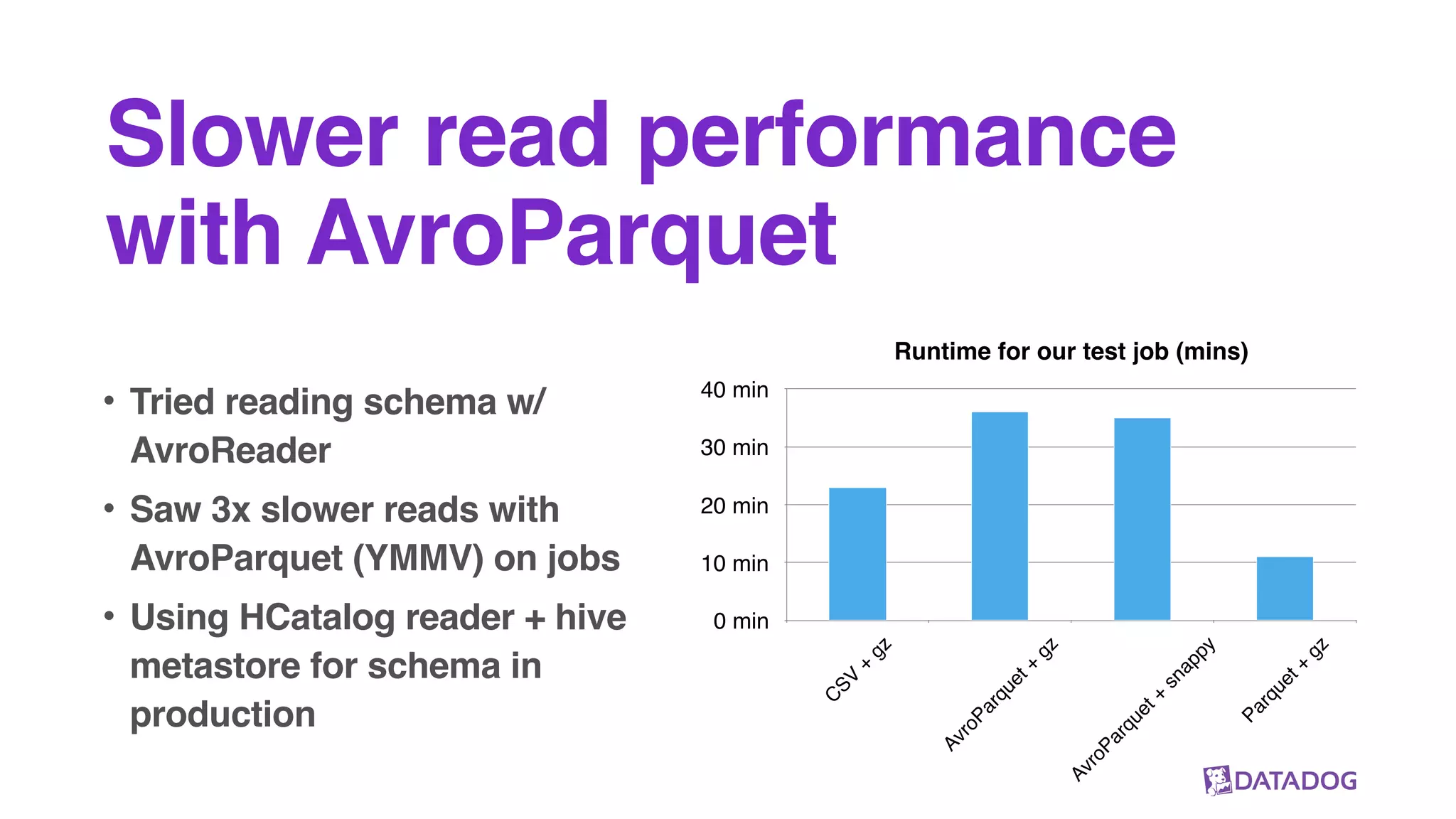

The document outlines how Datadog uses Parquet to collect and process large volumes of metrics data from cloud applications efficiently. It covers the data pipeline, the benefits of Parquet, and production insights on storage efficiency and read performance. The discussion emphasizes the importance of separating compute from storage and of using a standard data format for optimal performance.