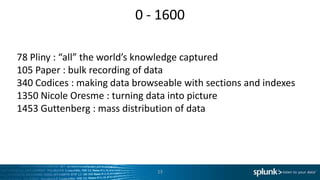

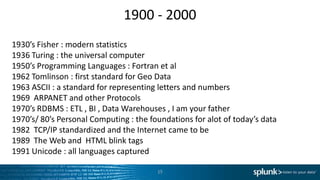

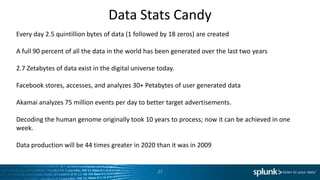

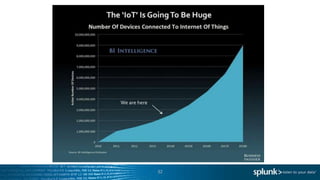

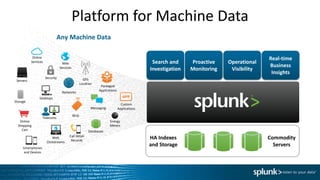

This document provides a brief history of data from ancient times to the present day. It discusses how humans began counting and recording data visually more than 20,000 years ago. Written language emerged around 3500 BC, allowing data to be recorded and transmitted. Major developments include the first library, built in 1250 BC to store data en masse; the origin of maps in 1150 BC; and the use of numbers and logic to derive insights between 100 BC and 350 BC. Significant milestones from the 1600s through the 1900s include the development of statistics, computers, programming languages, data standards, and the internet. Today's "big data" landscape is characterized by the volume, variety, velocity, and veracity of the data being created. The future will involve understanding data through insights.