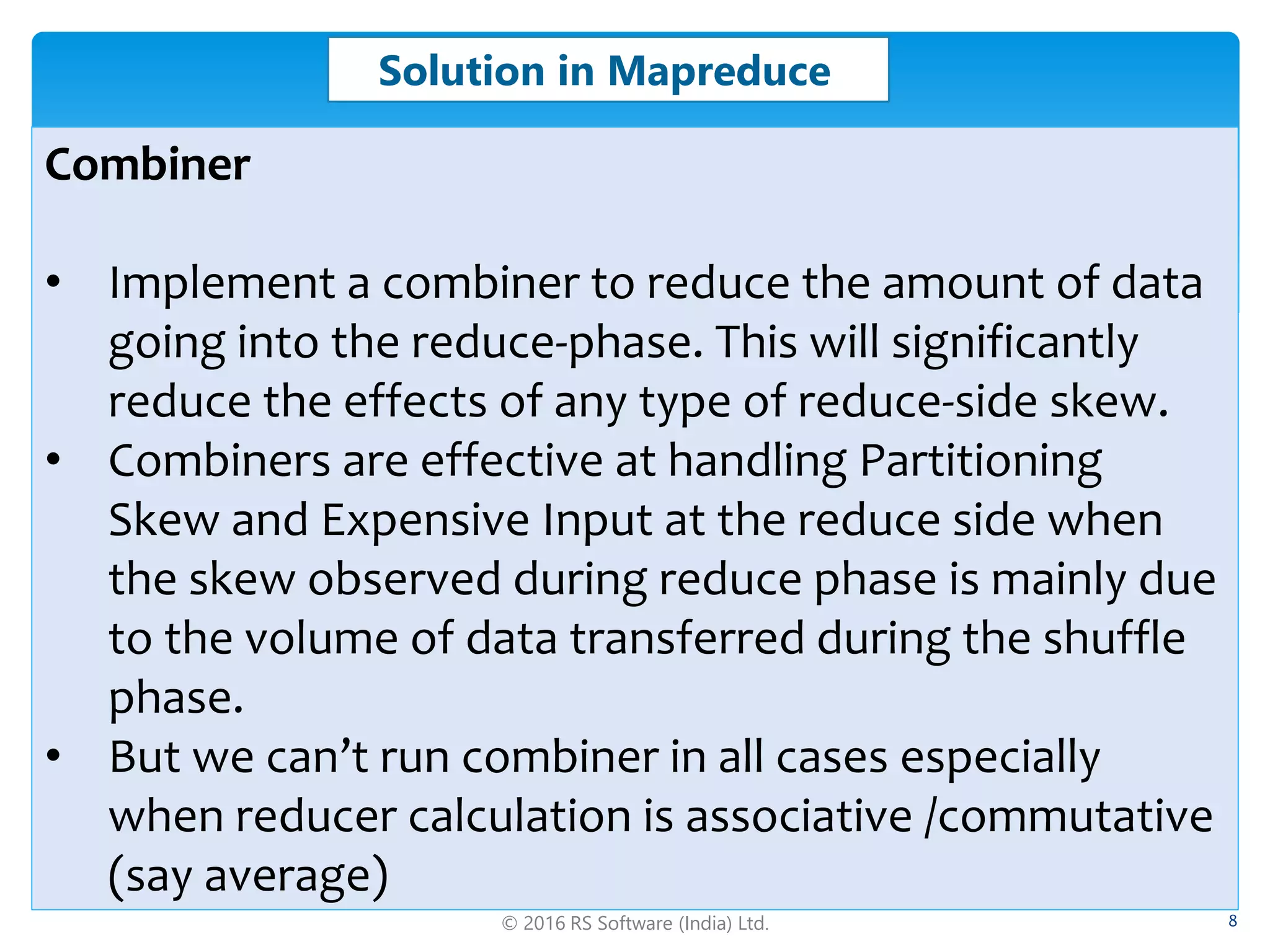

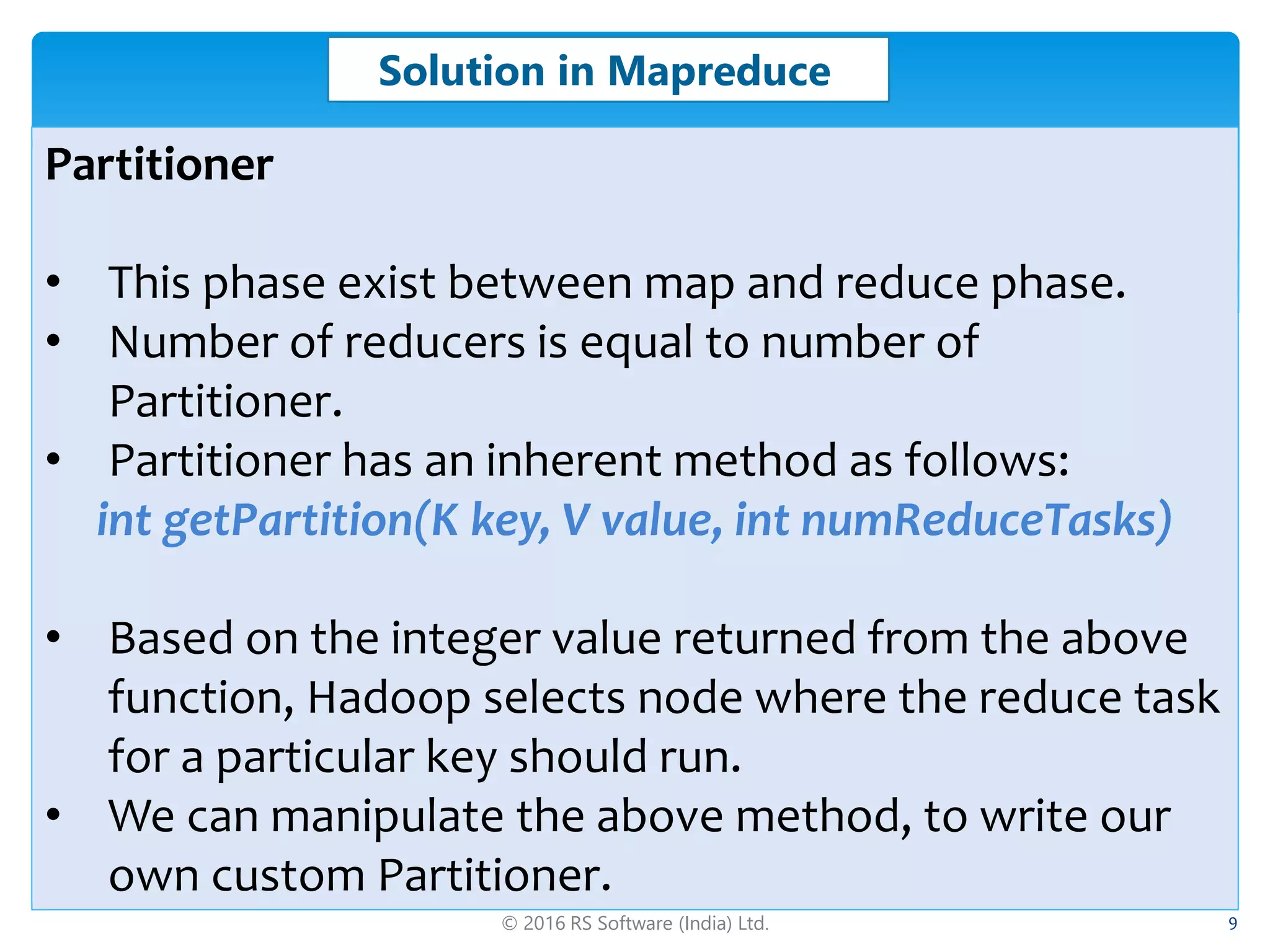

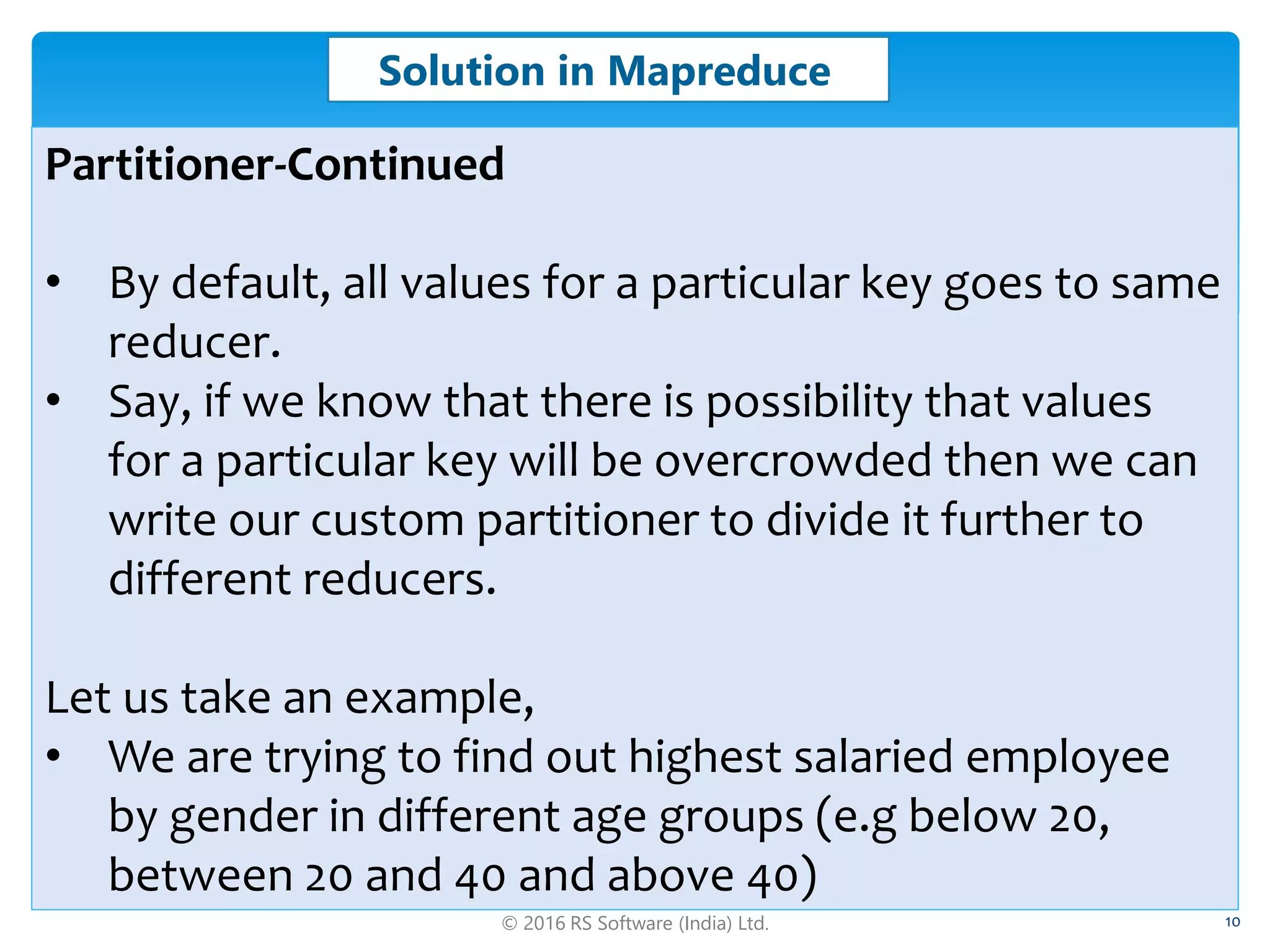

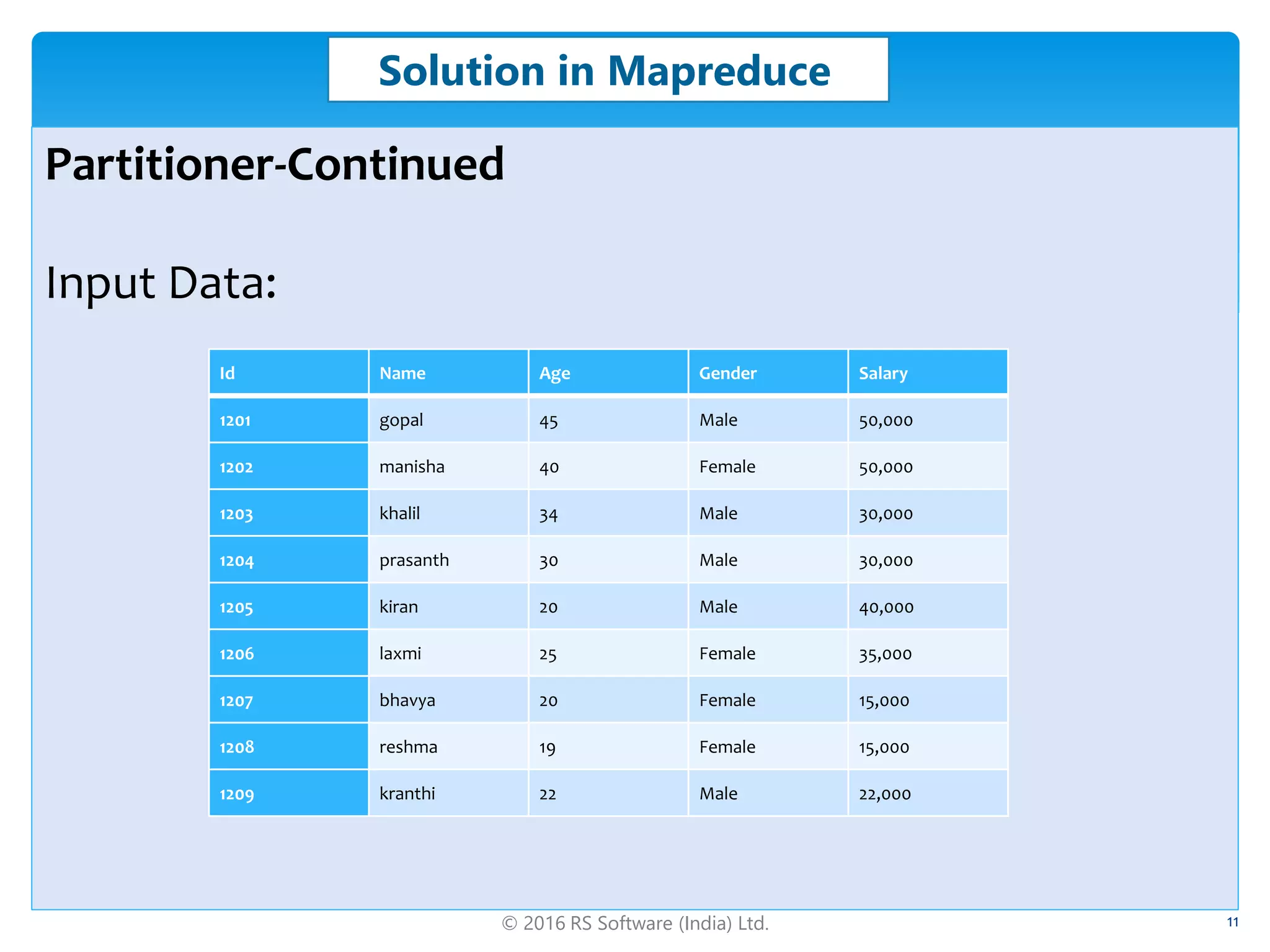

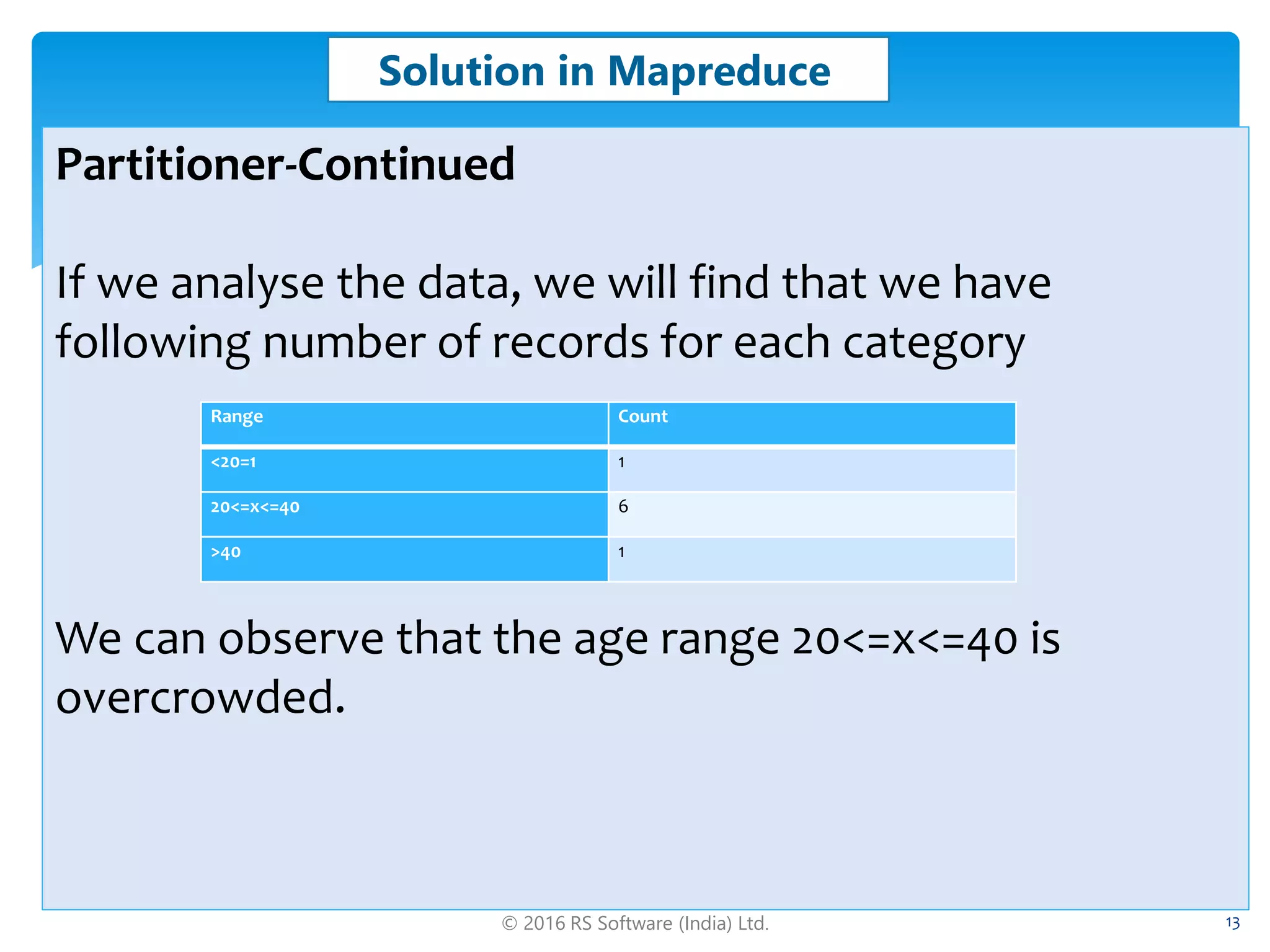

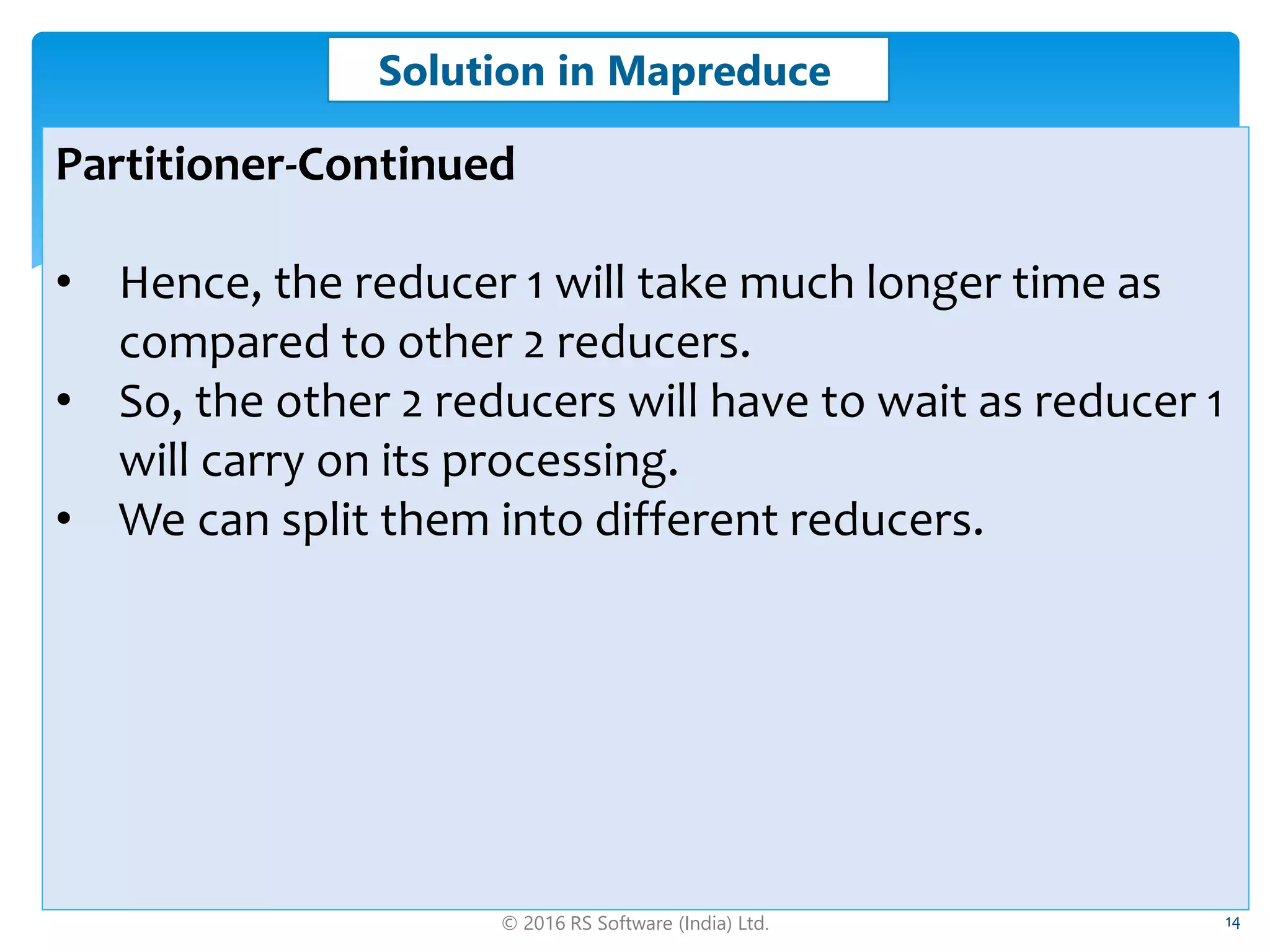

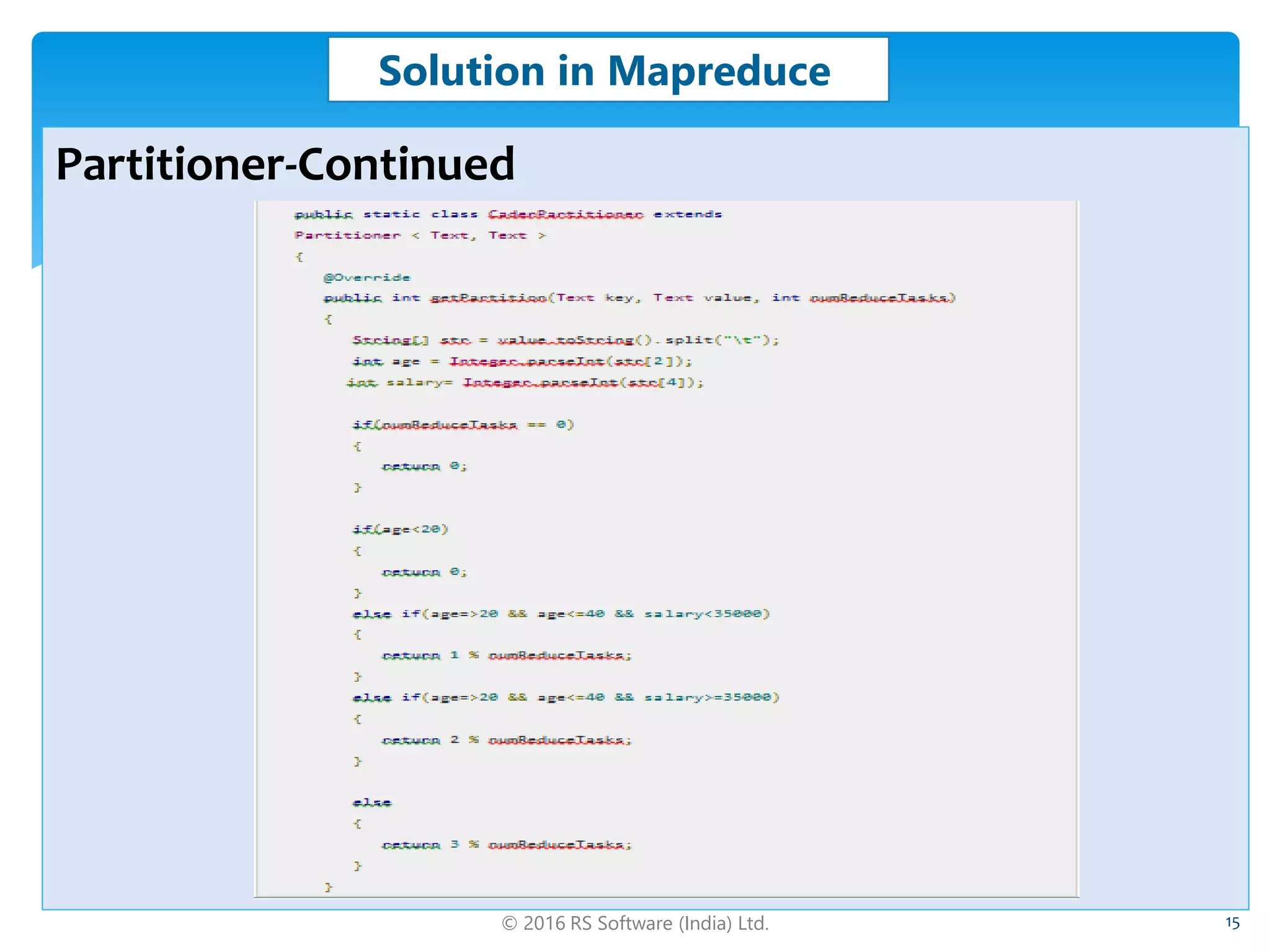

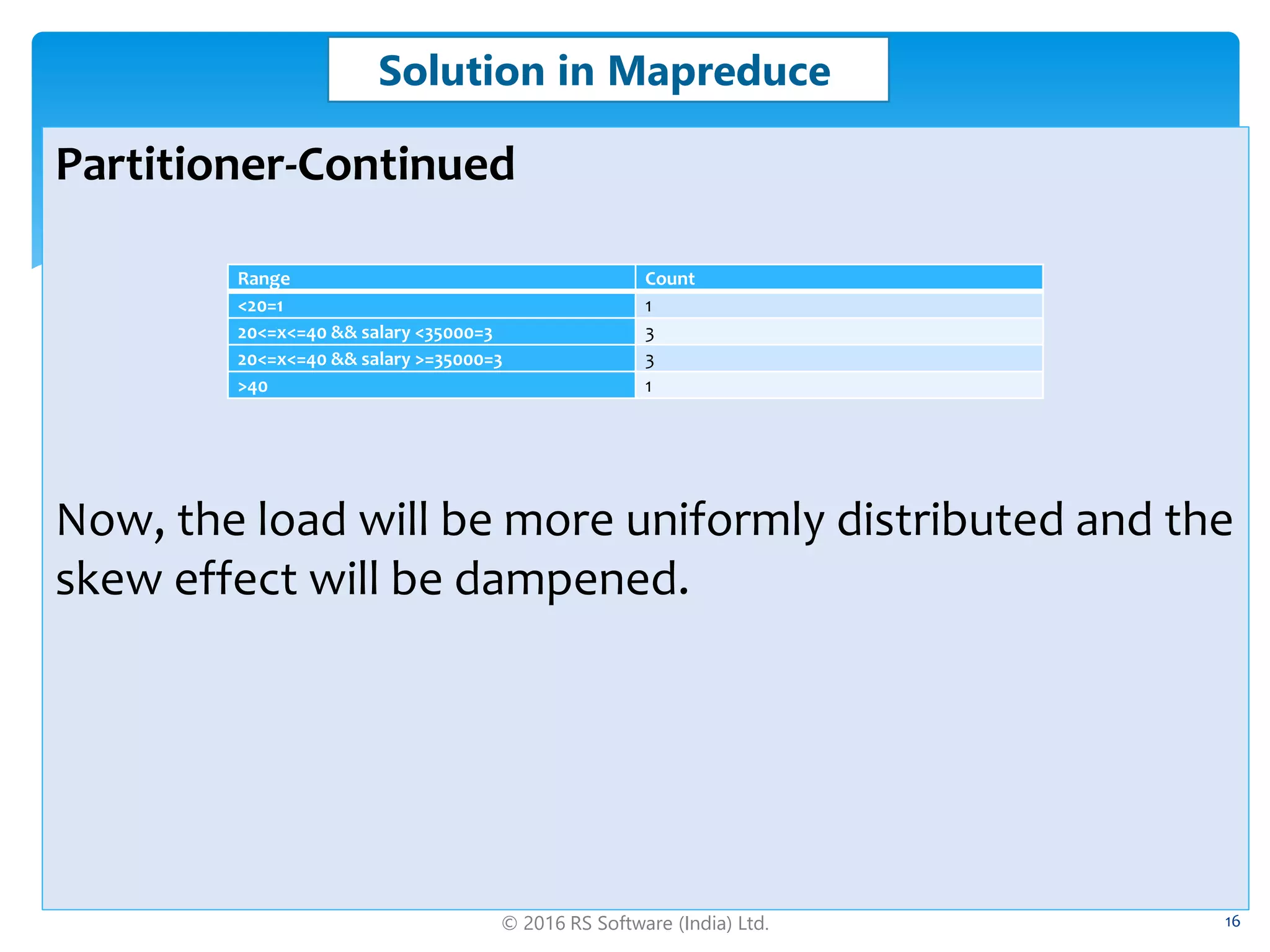

The document discusses data skew in big data processing. It defines skewness and its types: negative (left) skew, positive (right) skew, and the symmetric normal distribution, which has zero skew. It then addresses the problems skew causes in Hadoop data processing and focuses on mitigating it with combiners and custom partitioners. Finally, it covers solutions in Hive and Pig, explaining how skewed tables and skew joins improve performance in data analytics.
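The summary above names combiners and custom partitioners as Hadoop remedies for skew. The Python sketch below is a simulation under stated assumptions, not Hadoop code. It assumes word-count style `(key, 1)` map output, uses `zlib.crc32` as a deterministic stand-in for Hadoop's default hash partitioning, and assumes the hot key is known in advance (`HOT_KEYS` is hypothetical). The custom partitioner shown uses one common strategy, round-robin salting of the hot key, which may differ from the approach in the slides.

```python
from collections import Counter, defaultdict
from zlib import crc32

# Hypothetical: keys assumed to be known in advance as heavily skewed.
HOT_KEYS = {"hot"}


def hash_partition(key: str, num_reducers: int) -> int:
    """Deterministic stand-in for Hadoop's default hash partitioning."""
    return crc32(key.encode("utf-8")) % num_reducers


def combine(records):
    """Combiner: pre-sum values per key on the map side."""
    totals = Counter()
    for key, value in records:
        totals[key] += value
    return list(totals.items())


def shuffle(map_outputs, num_reducers, use_combiner=False, salt_hot_keys=False):
    """Route each mapper's (key, value) records to reducers; return records per reducer."""
    reducers = defaultdict(list)
    hot_seq = 0  # round-robin counter, advanced only for hot keys
    for records in map_outputs:
        if use_combiner:
            records = combine(records)
        for key, value in records:
            if salt_hot_keys and key in HOT_KEYS:
                # Custom partitioner: spread the hot key across all reducers.
                r = hot_seq % num_reducers
                hot_seq += 1
            else:
                r = hash_partition(key, num_reducers)
            reducers[r].append((key, value))
    return reducers


def reduce_all(reducers):
    """Sum per key on each reducer, then merge the partial sums that salting leaves behind."""
    final = Counter()
    for records in reducers.values():
        partial = Counter()
        for key, value in records:
            partial[key] += value
        final.update(partial)  # Counter.update adds counts
    return dict(final)


def loads(reducers, num_reducers):
    """Number of records each reducer must process."""
    return [len(reducers.get(r, [])) for r in range(num_reducers)]


# Example: 4 mappers, each emitting one very frequent key and three rare ones.
mappers = [[("hot", 1)] * 1000 + [("a", 1), ("b", 1), ("c", 1)] * 10 for _ in range(4)]
for label, options in [("plain", {}), ("combiner", {"use_combiner": True}),
                       ("salted", {"salt_hot_keys": True})]:
    print(label, loads(shuffle(mappers, 4, **options), 4))
```

Without either remedy, one reducer receives all 4000 `hot` records. The combiner shrinks each mapper's output to one record per key, and salting splits the hot key evenly across reducers at the cost of a final merge of partial sums.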

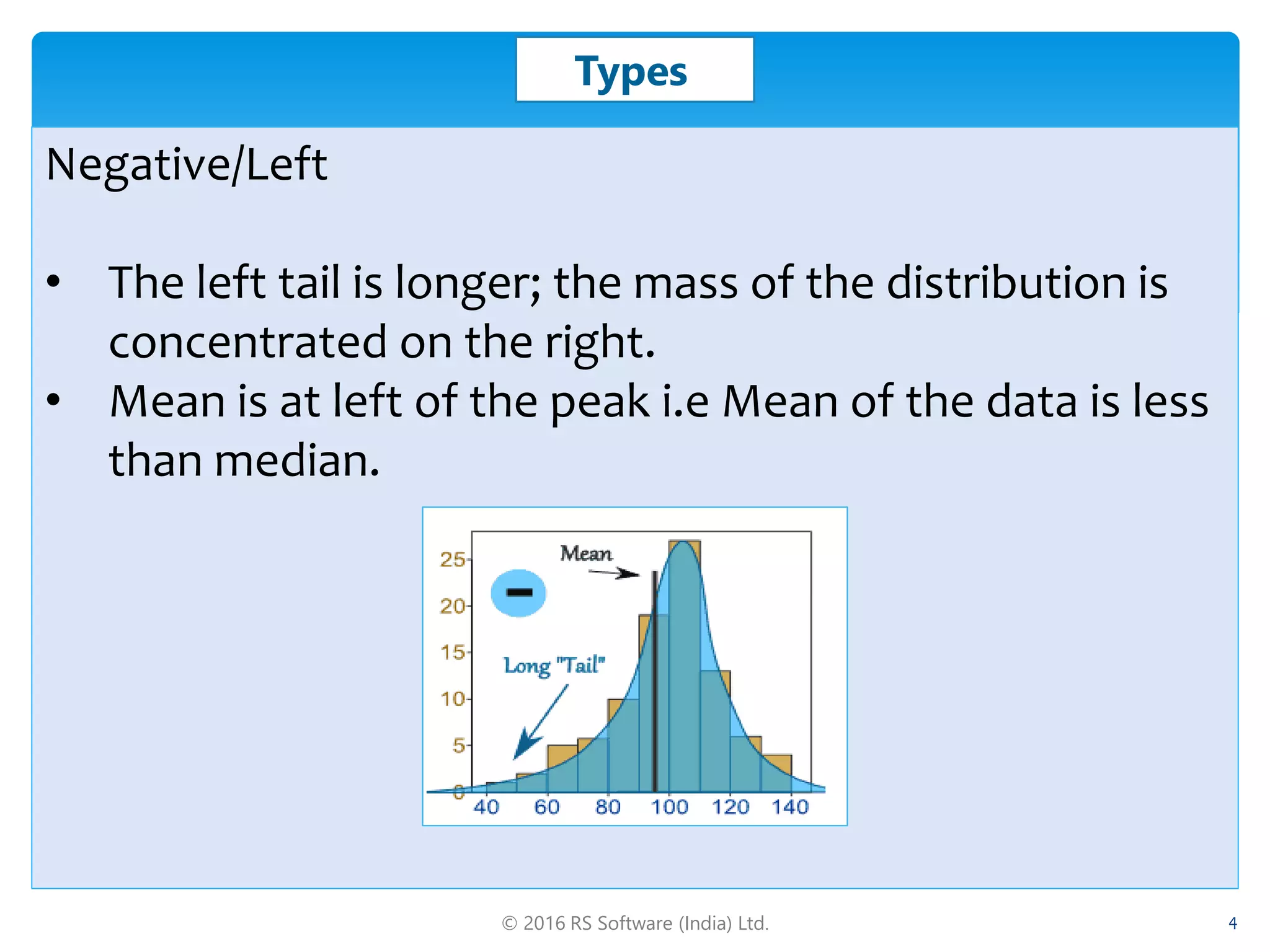

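![Solution in Hive: skewed table](https://image.slidesharecdn.com/bigdataskew-160731052539/75/Big-data-skew-18-2048.jpg)

© 2016 RS Software (India) Ltd.

Solution in Hive

Skewed table

• A skewed table is a special type of table in which the values that appear very often (heavy skew) are split out into separate files, while the rest of the values go to another file. With STORED AS DIRECTORIES, each skewed value is written to its own subdirectory (list bucketing).

• Syntax, where the values listed after ON are the heavily skewed values of the key column (with several key columns, each value becomes a tuple such as ('v1', 1)):

`create table <T> (schema) skewed by (key) on ('v1', 'v2') [STORED AS DIRECTORIES];`

• Example, declaring 'x1' as a heavily skewed value of column c1:

`create table T (c1 string, c2 string) skewed by (c1) on ('x1');`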
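To make the skewed-table layout concrete, the sketch below simulates in plain Python (not Hive) how the slide's example table T could be split: rows whose c1 equals the declared skewed value 'x1' go to their own group, and all other rows share one group, so a lookup on a value only has to read one group. The directory names, including `default`, are hypothetical and are not claimed to match Hive's on-disk naming.

```python
from collections import defaultdict

DEFAULT_DIR = "default"  # hypothetical name for the group holding non-skewed values


def layout_skewed_table(rows, skew_col, skewed_values):
    """Place rows with a skewed value in their own directory, all others in DEFAULT_DIR."""
    dirs = defaultdict(list)
    for row in rows:
        value = row[skew_col]
        name = f"{skew_col}={value}" if value in skewed_values else DEFAULT_DIR
        dirs[name].append(row)
    return dict(dirs)


def lookup(dirs, skew_col, skewed_values, value):
    """Read only the directory that can contain rows with skew_col == value."""
    name = f"{skew_col}={value}" if value in skewed_values else DEFAULT_DIR
    return [row for row in dirs.get(name, []) if row[skew_col] == value]


# Example mirroring the slide: table T (c1, c2) skewed by c1 on ('x1').
rows = [{"c1": "x1", "c2": str(i)} for i in range(1000)]
rows += [{"c1": v, "c2": "0"} for v in ("x2", "x3", "x4")]
dirs = layout_skewed_table(rows, "c1", {"x1"})
print({name: len(group) for name, group in dirs.items()})  # {'c1=x1': 1000, 'default': 3}
```

A query on a rare value such as 'x3' scans only the small `default` group instead of the 1000 'x1' rows, which is the benefit the slide attributes to splitting out heavily skewed values.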