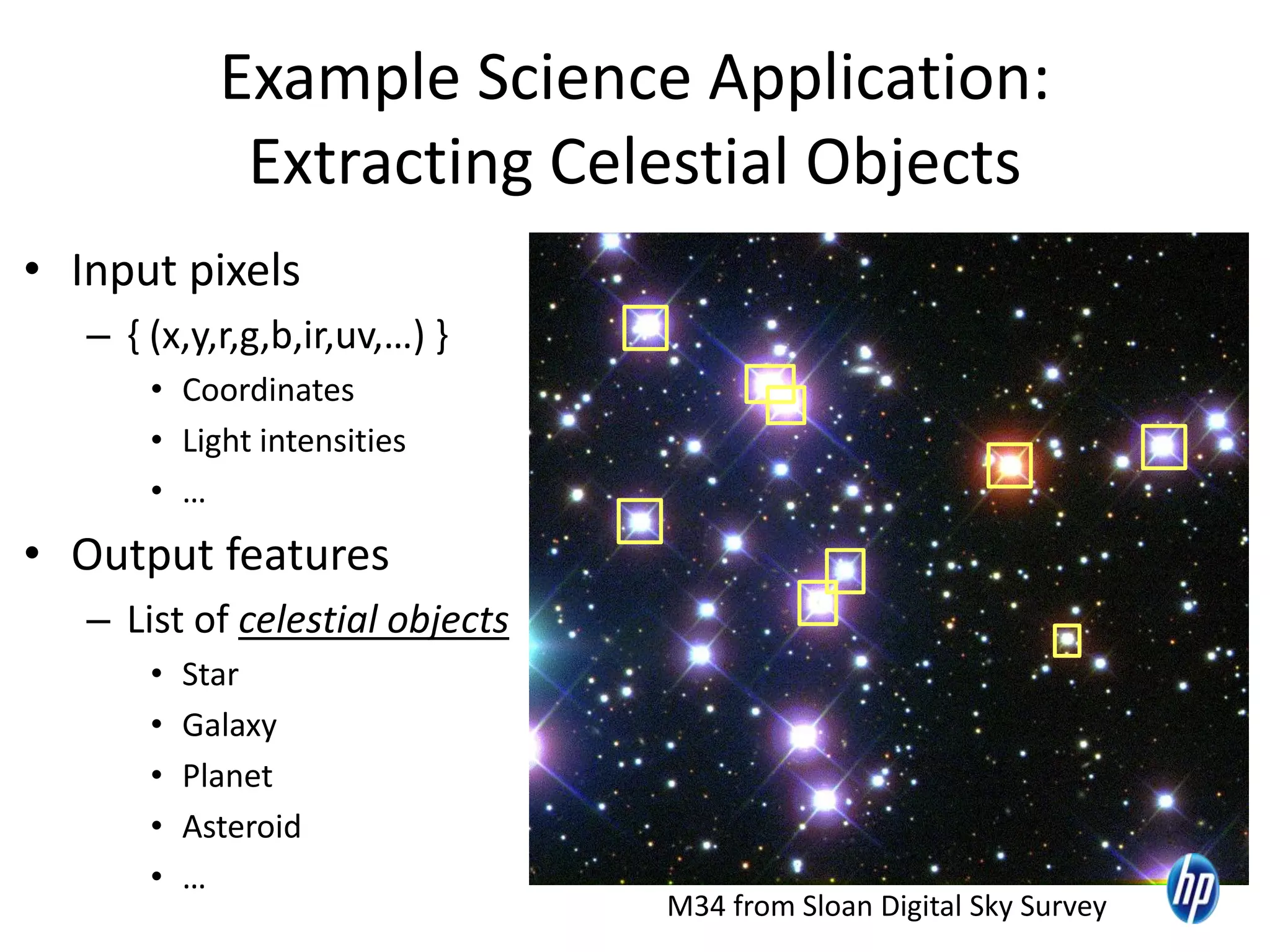

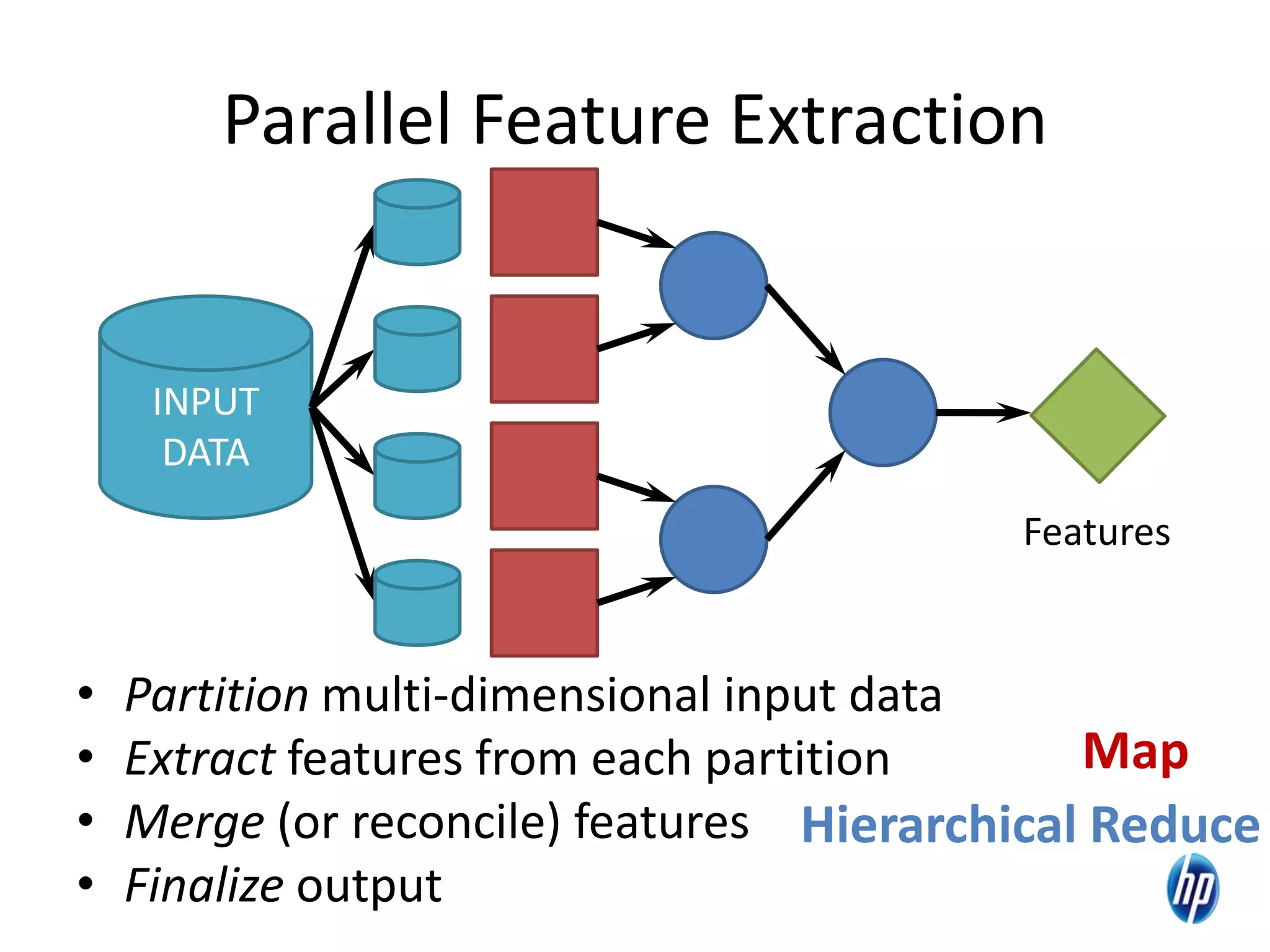

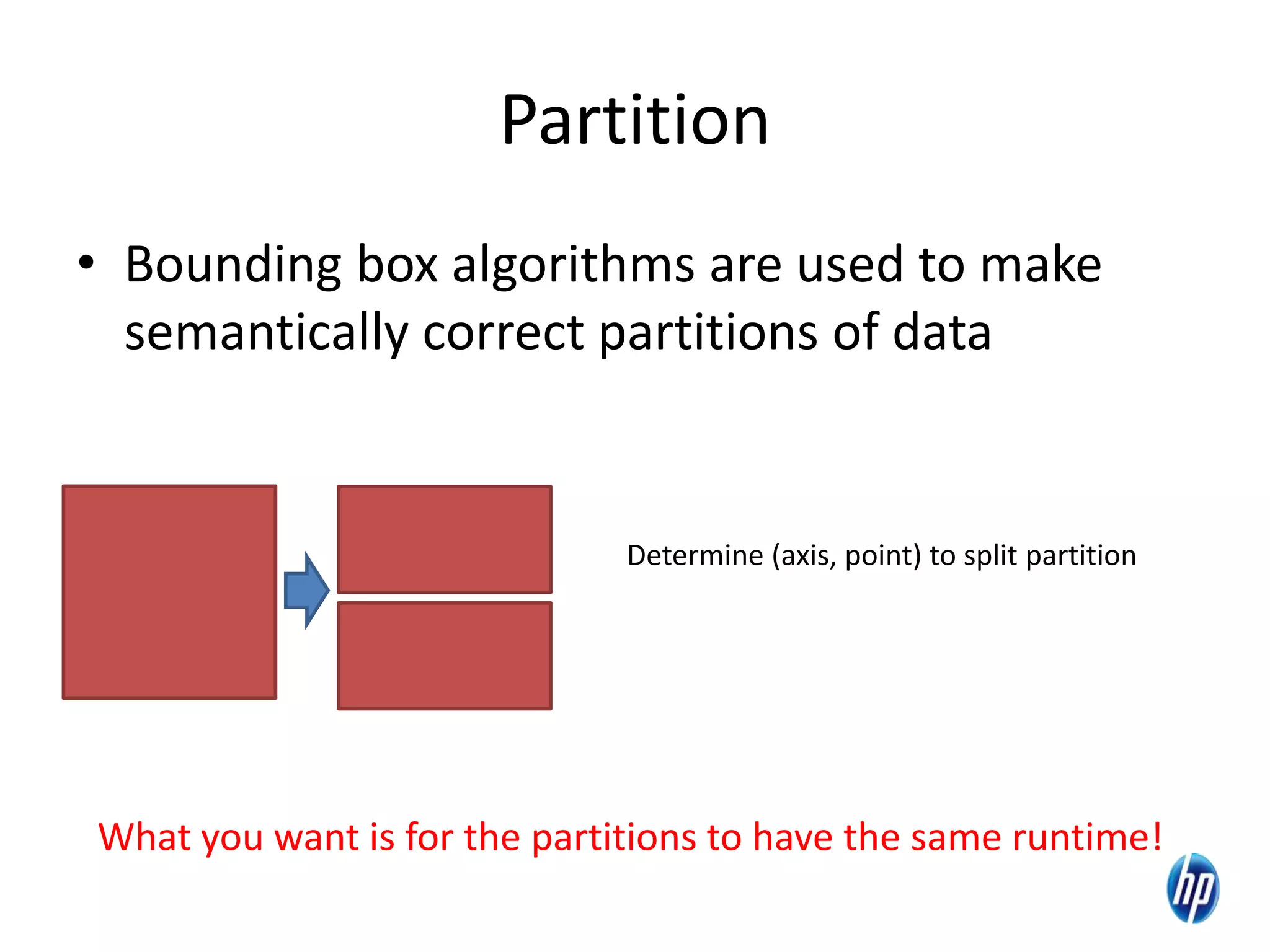

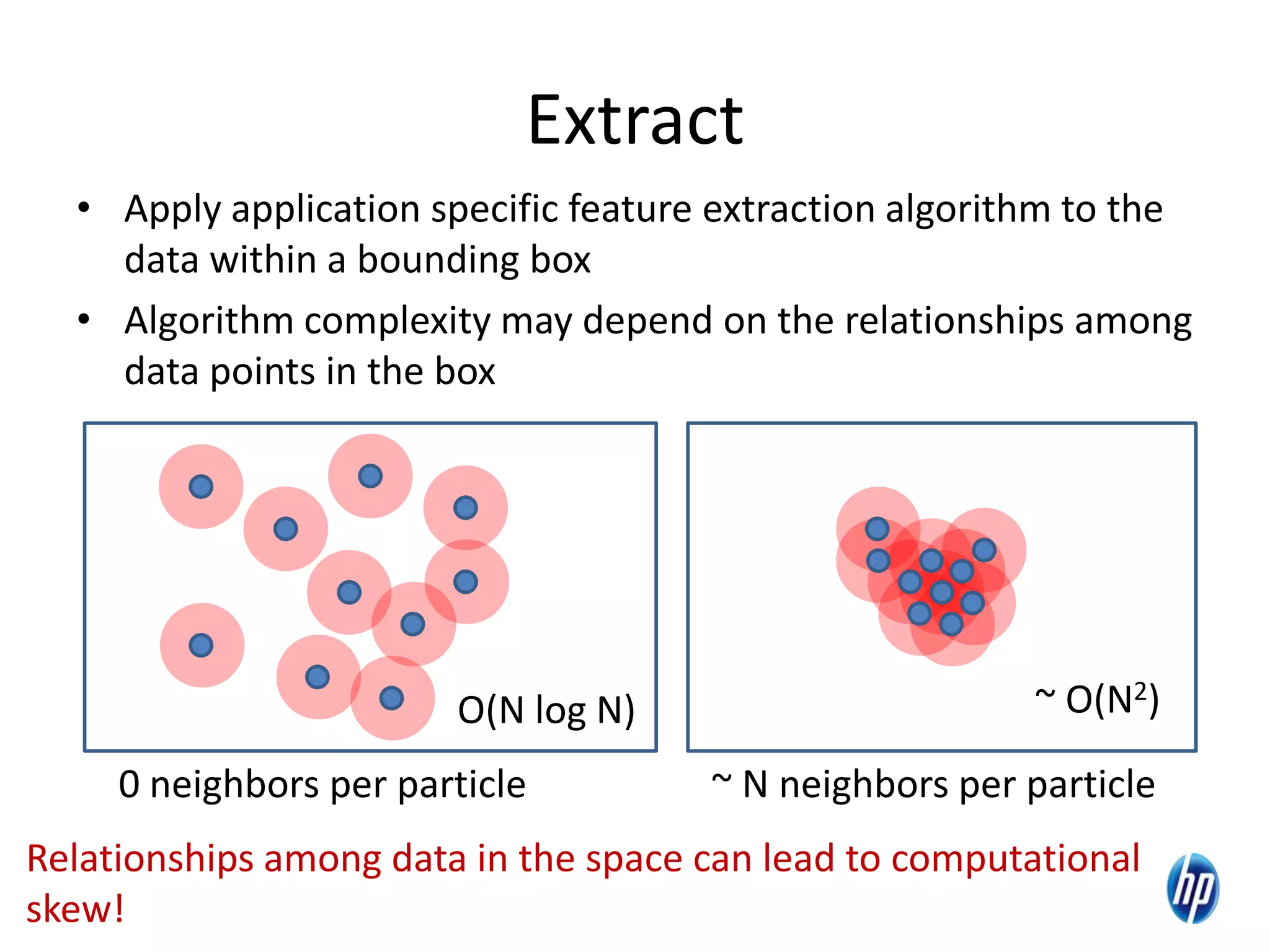

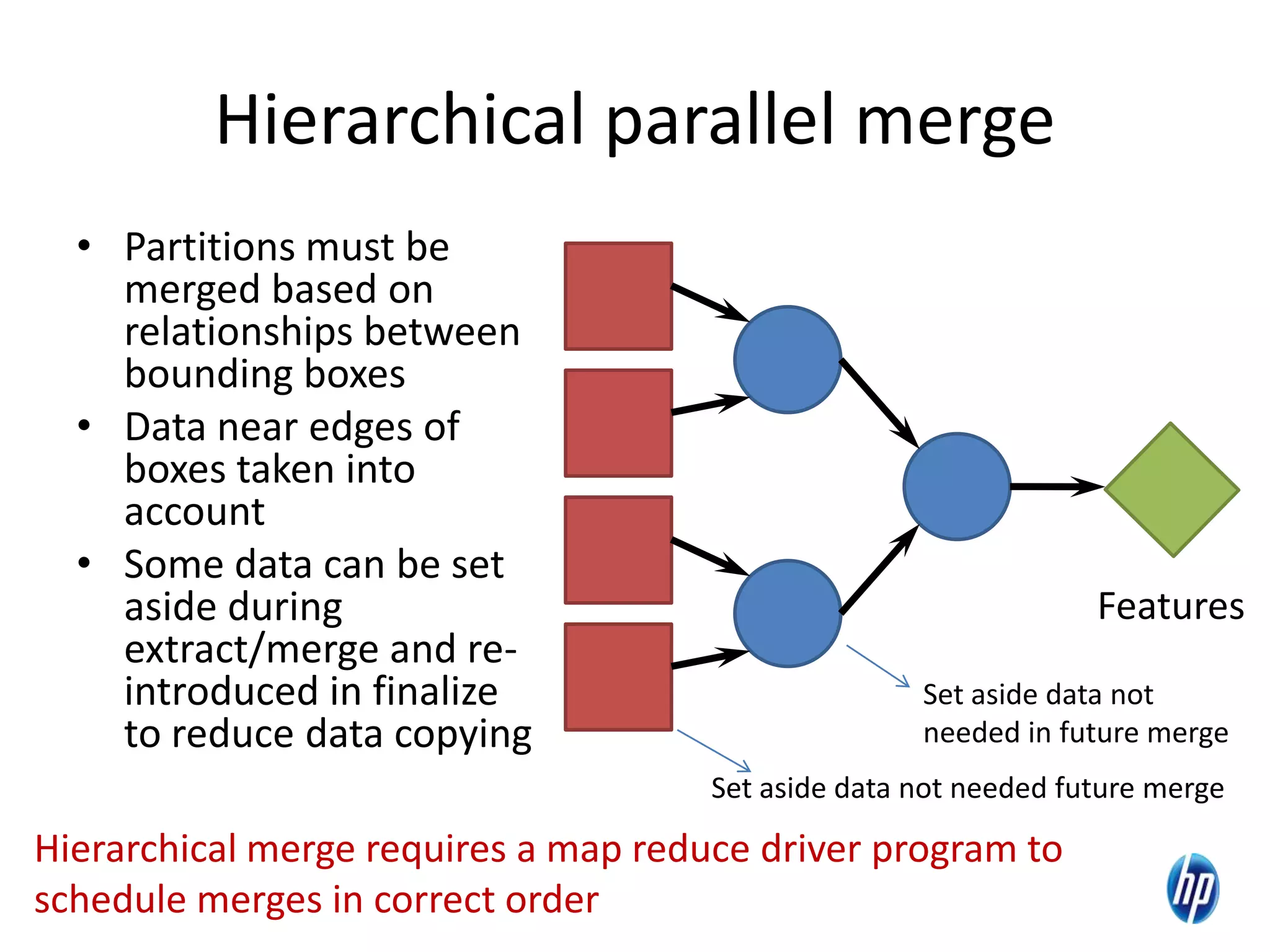

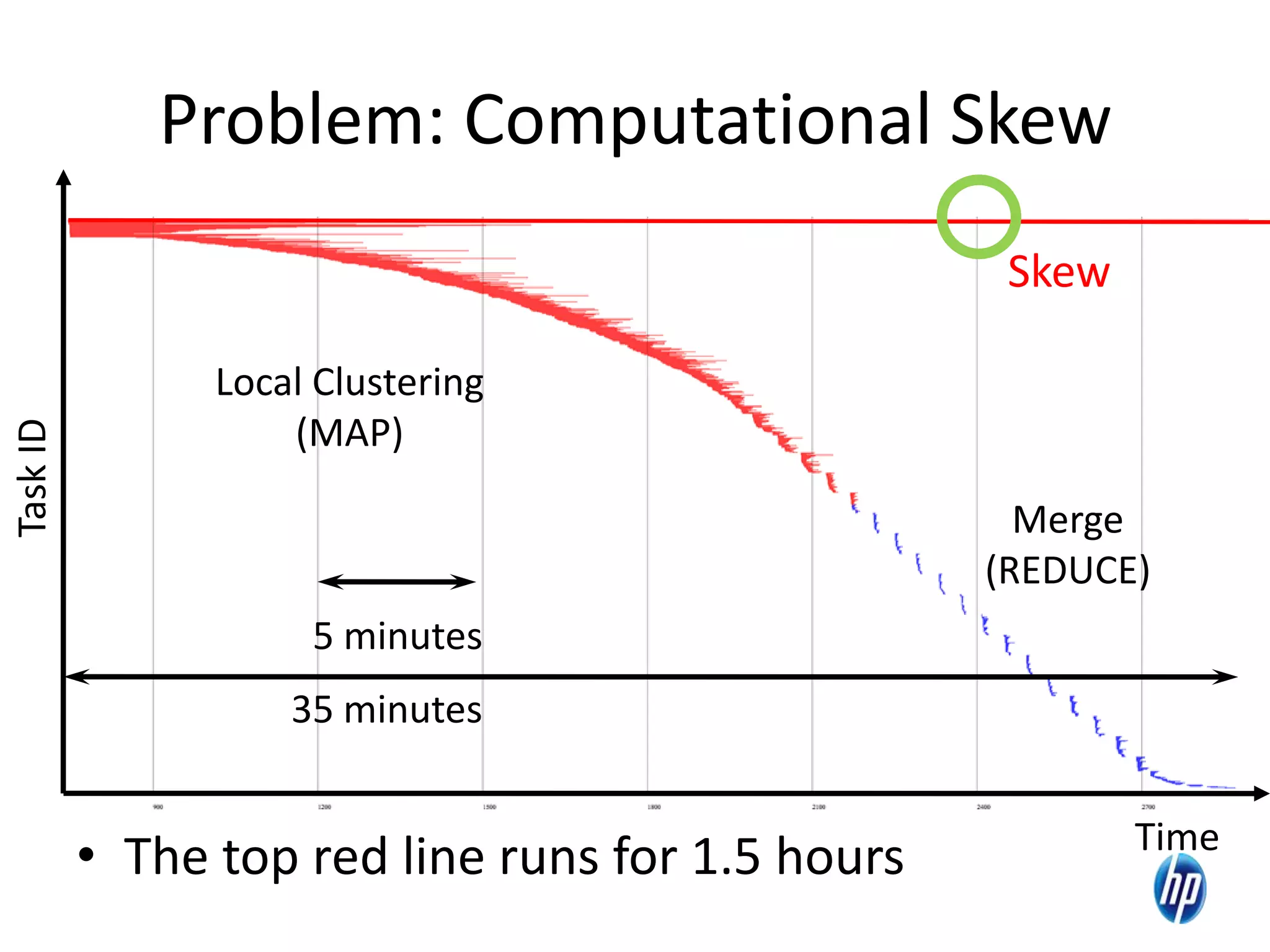

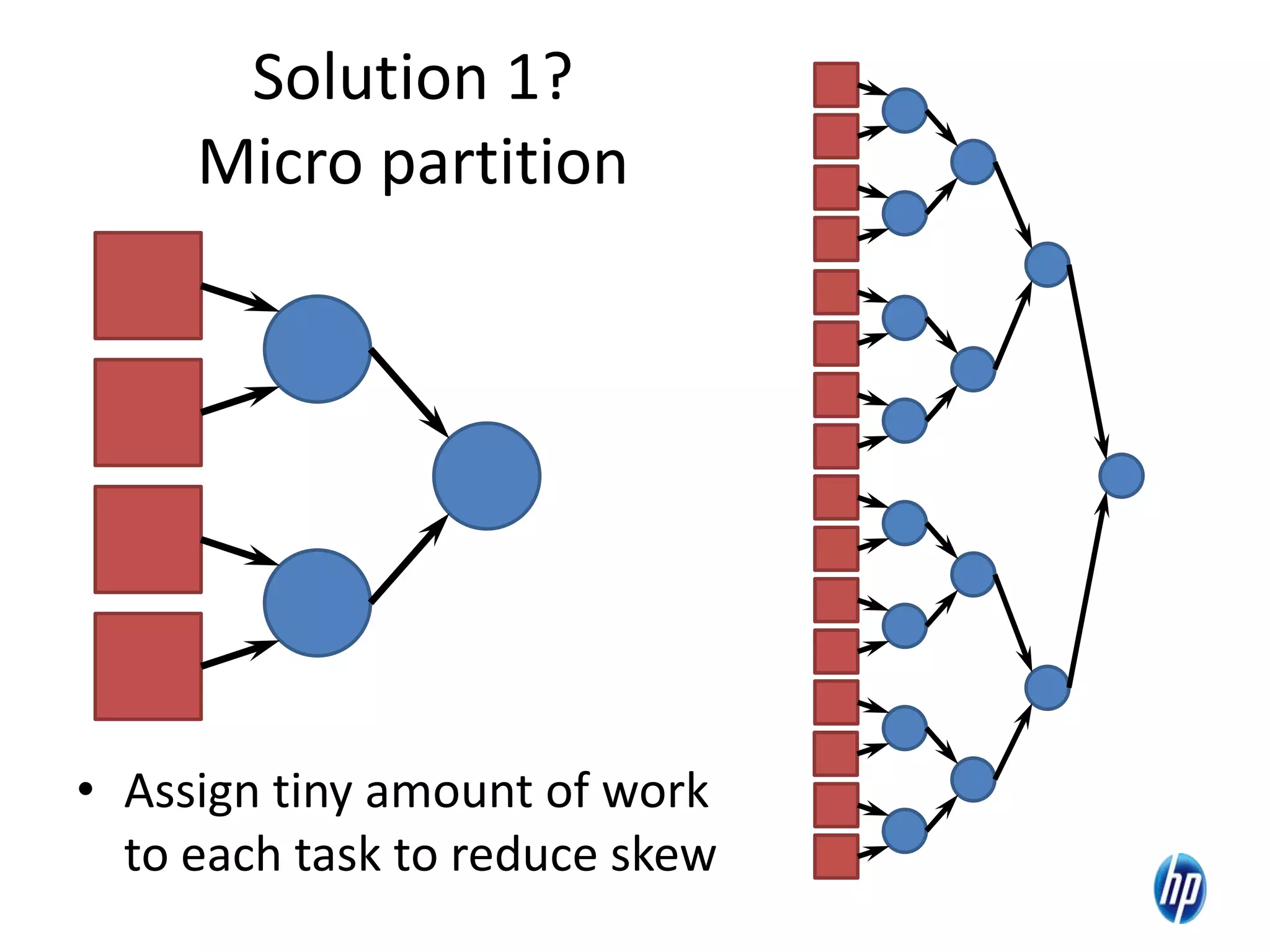

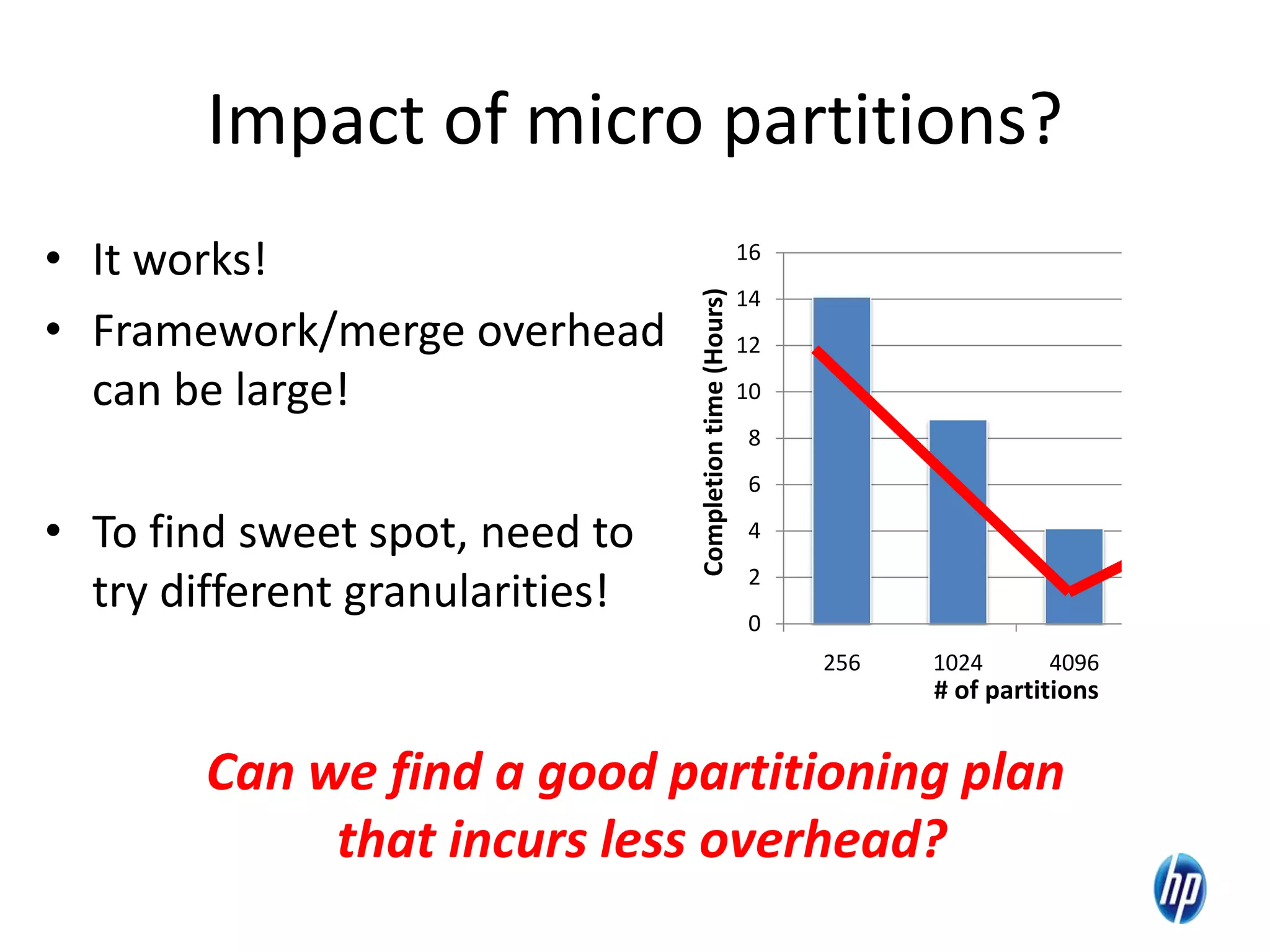

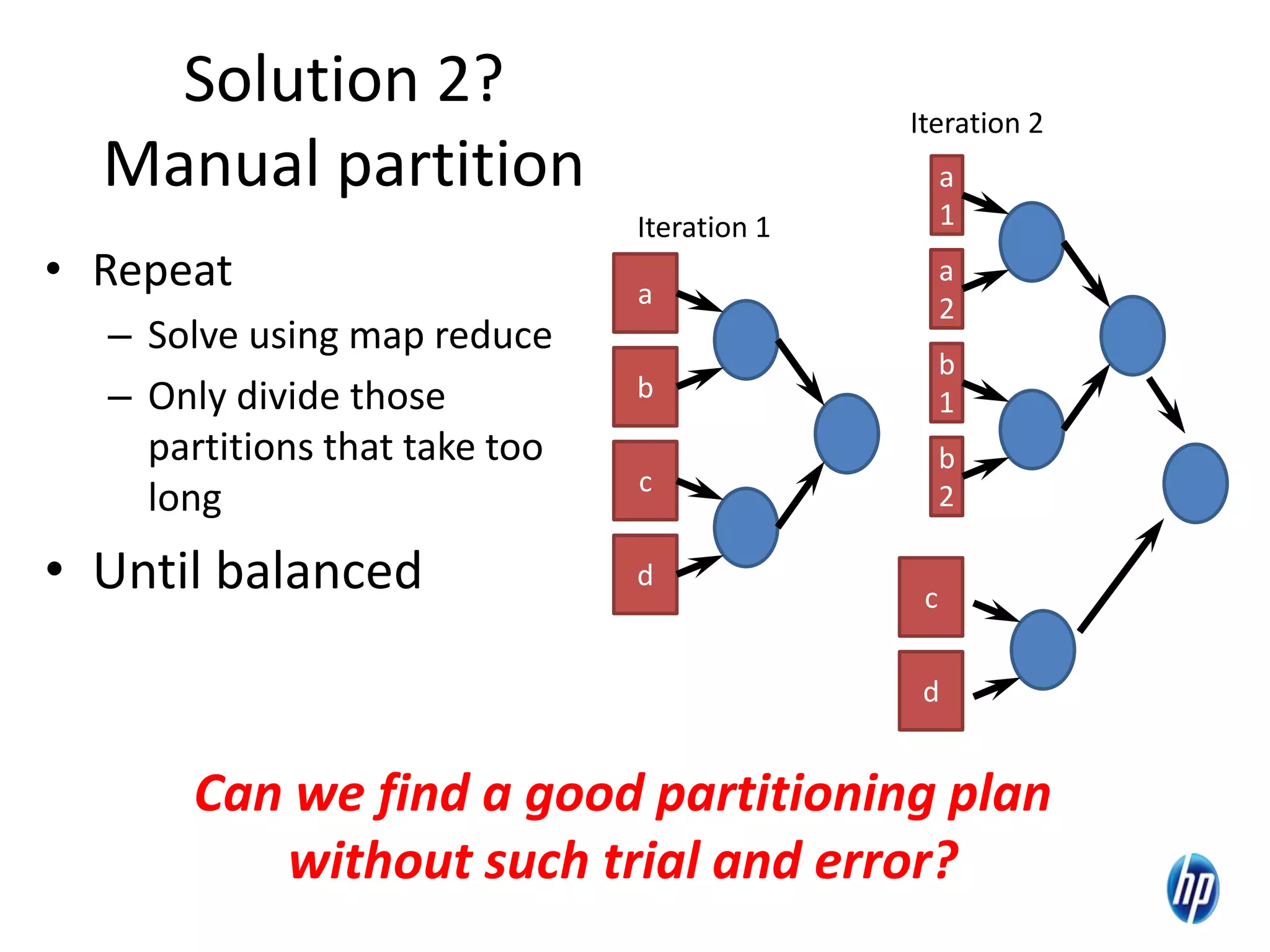

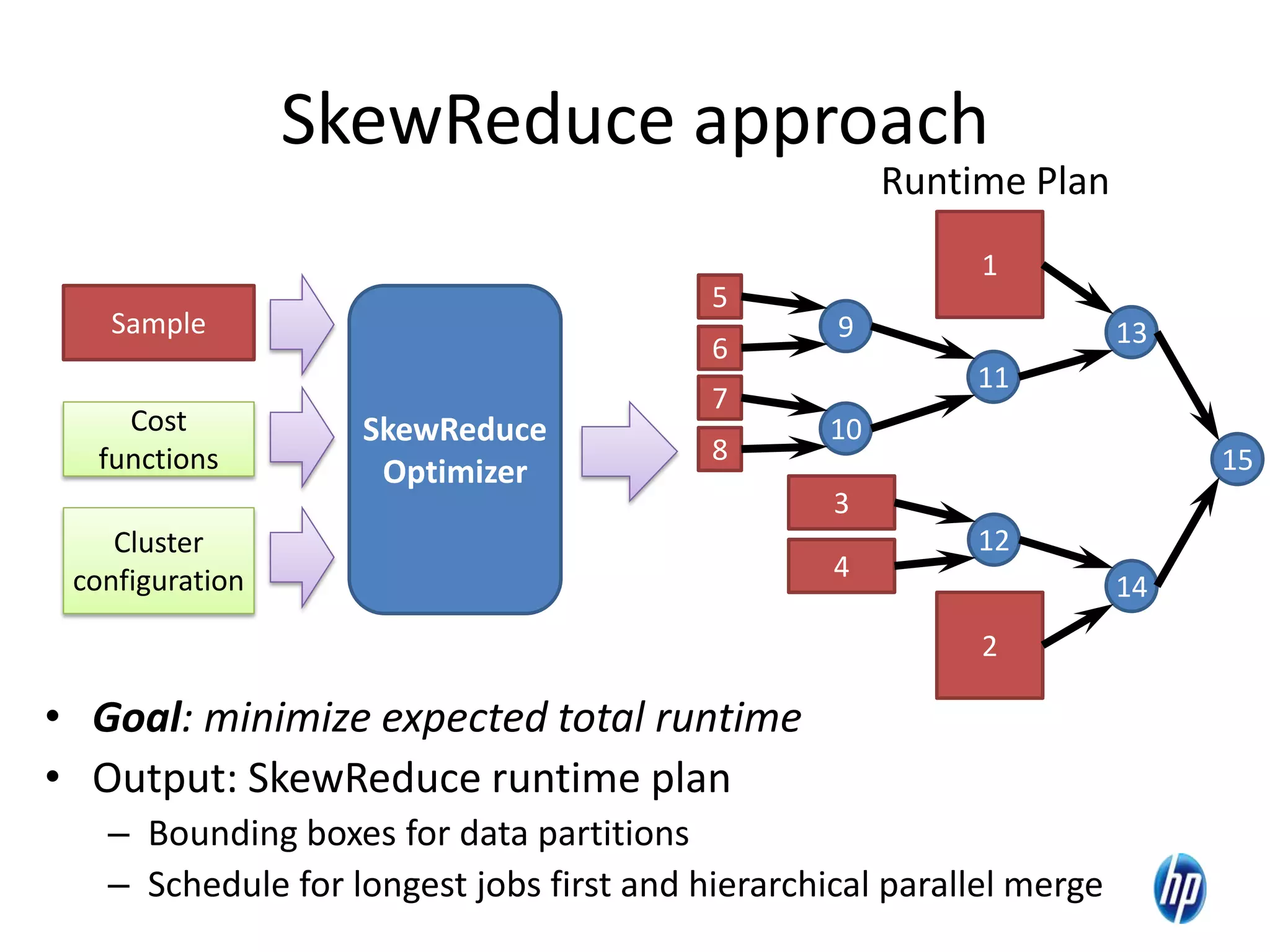

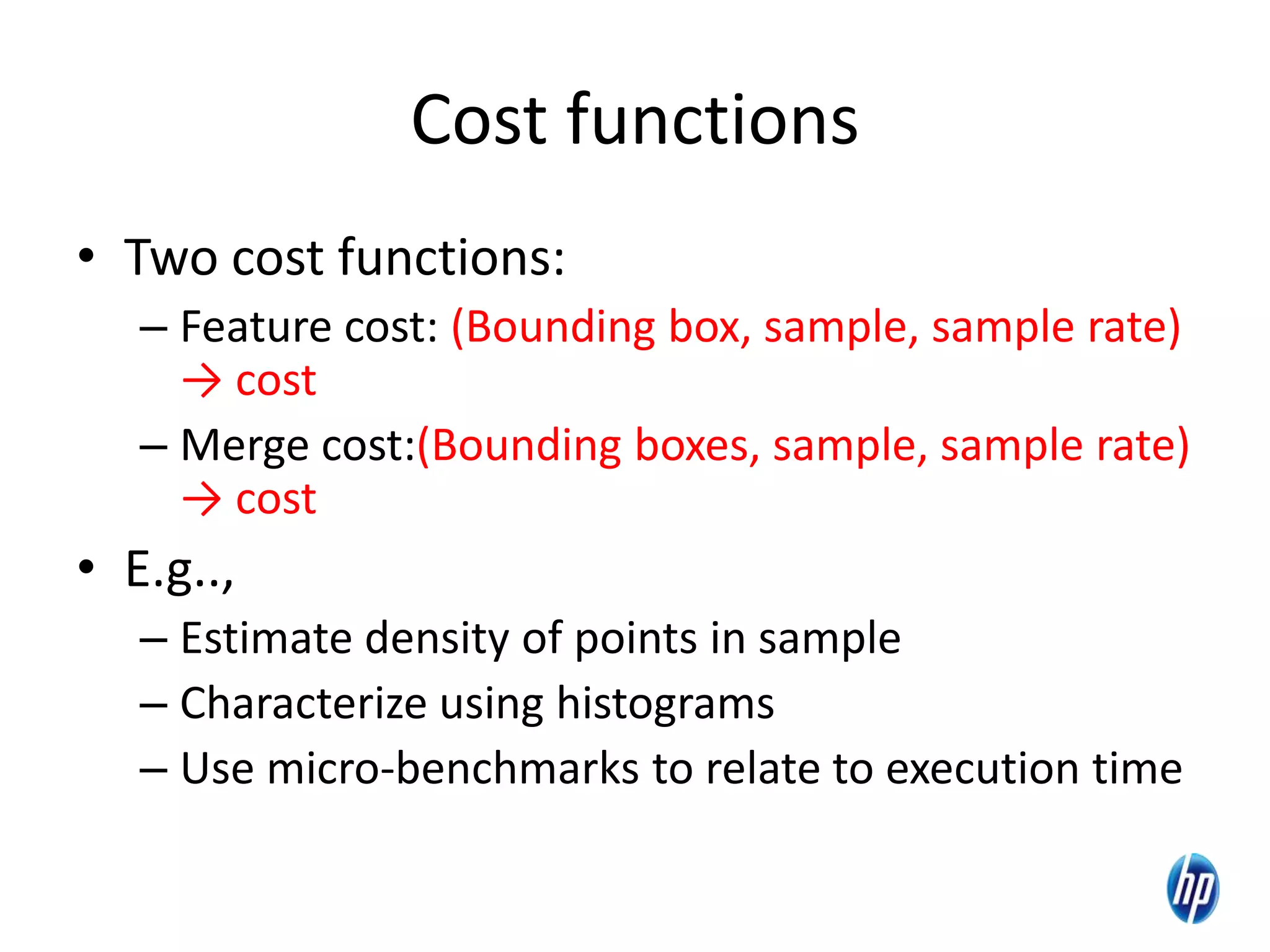

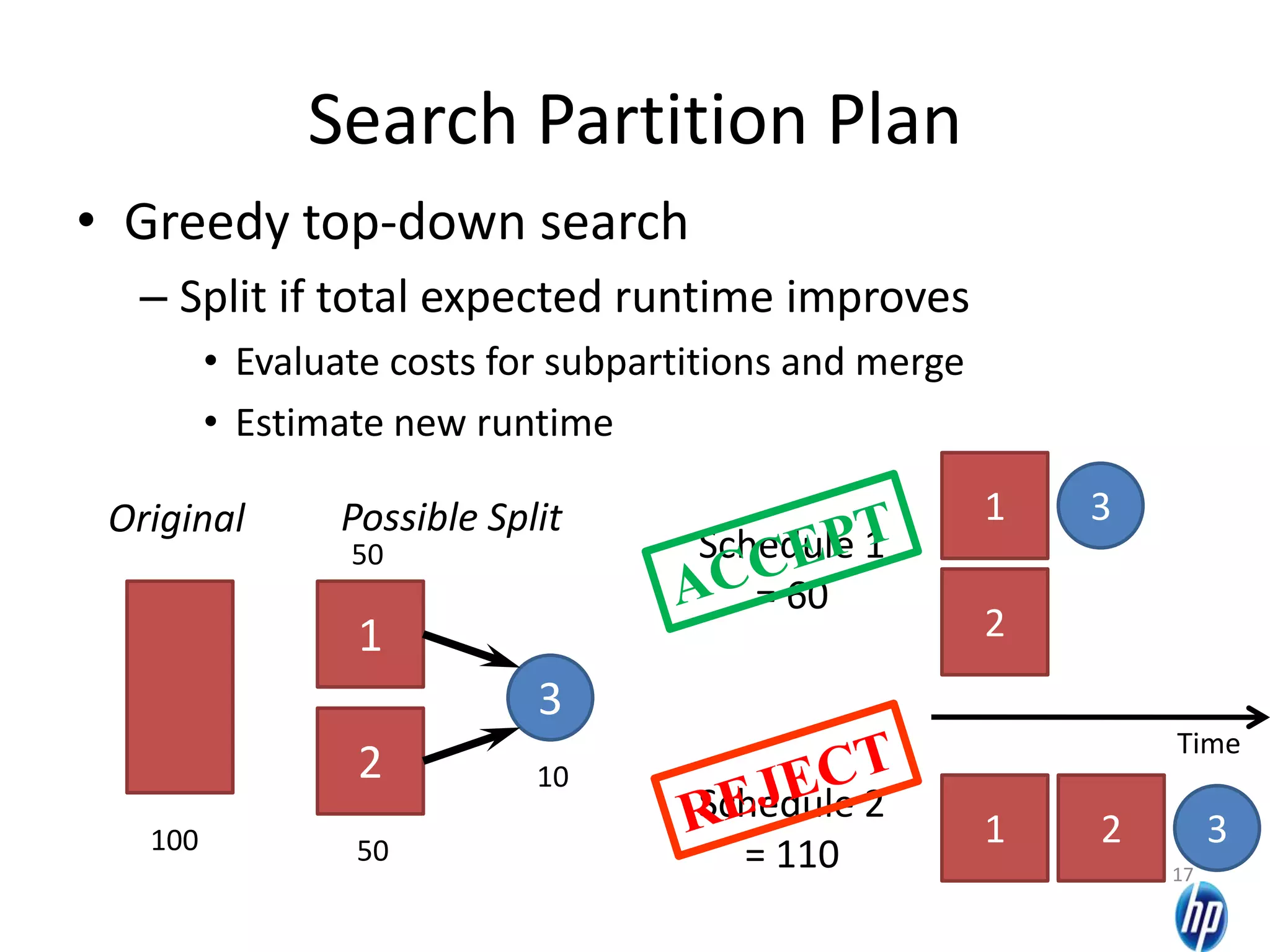

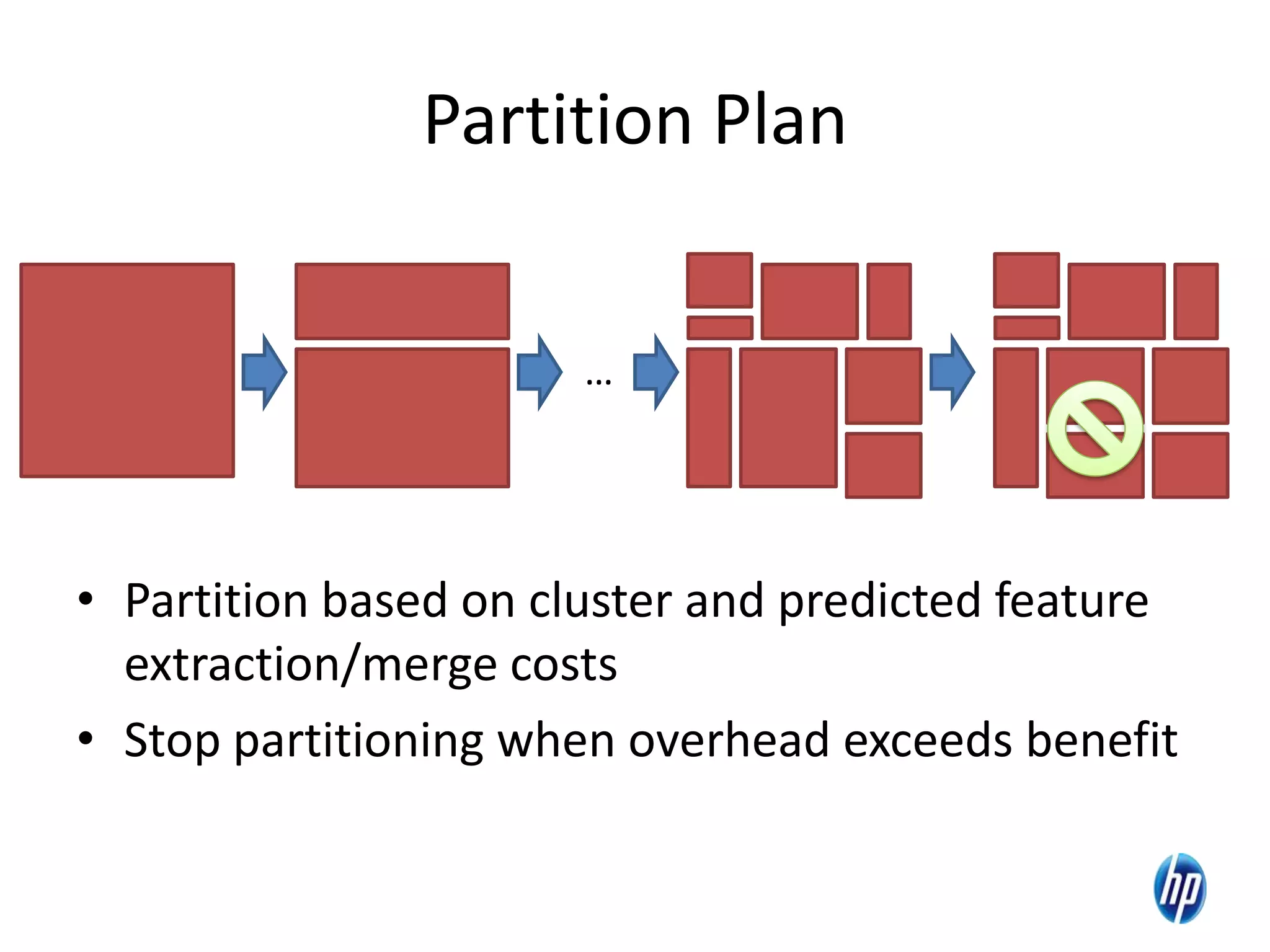

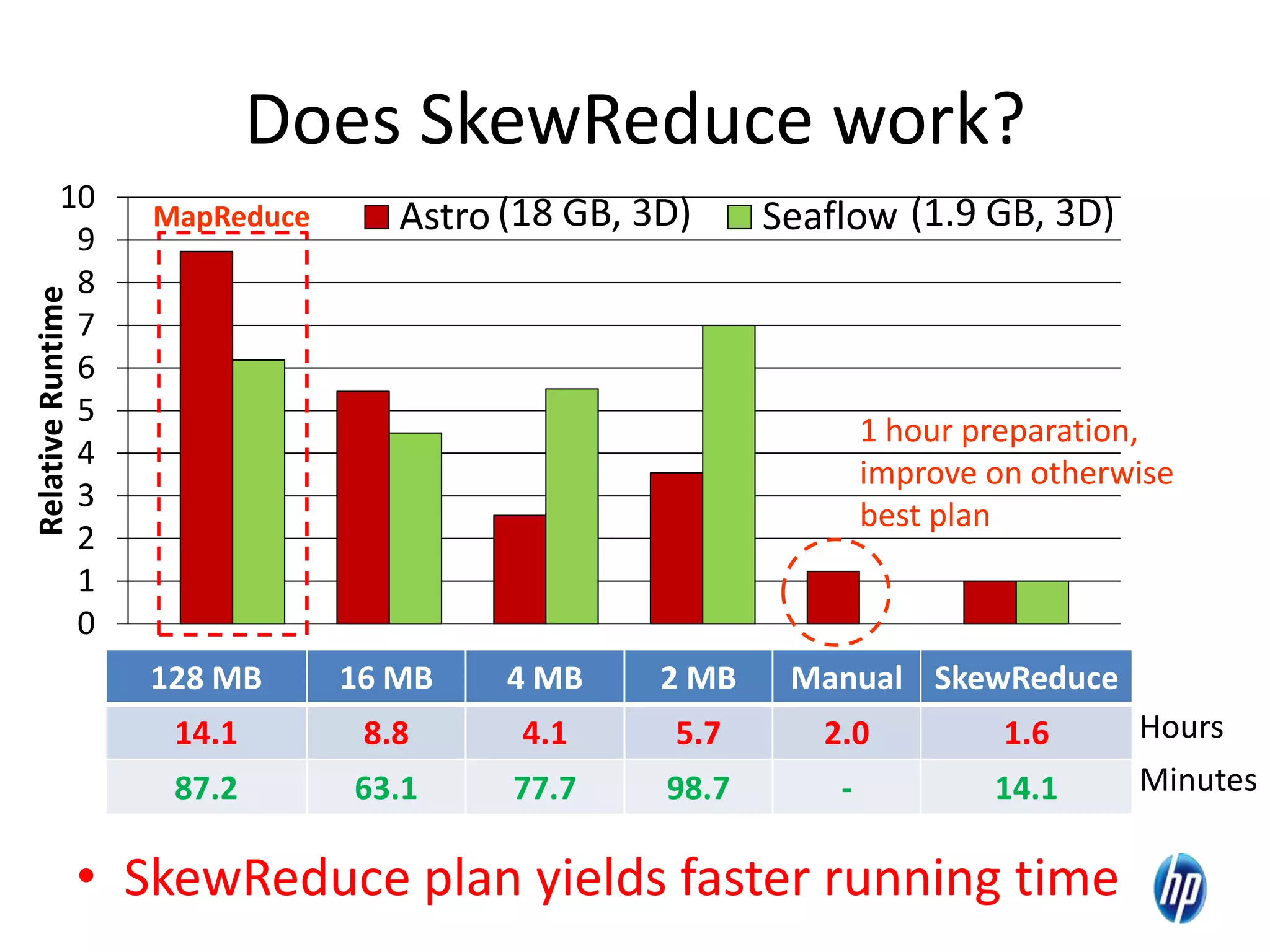

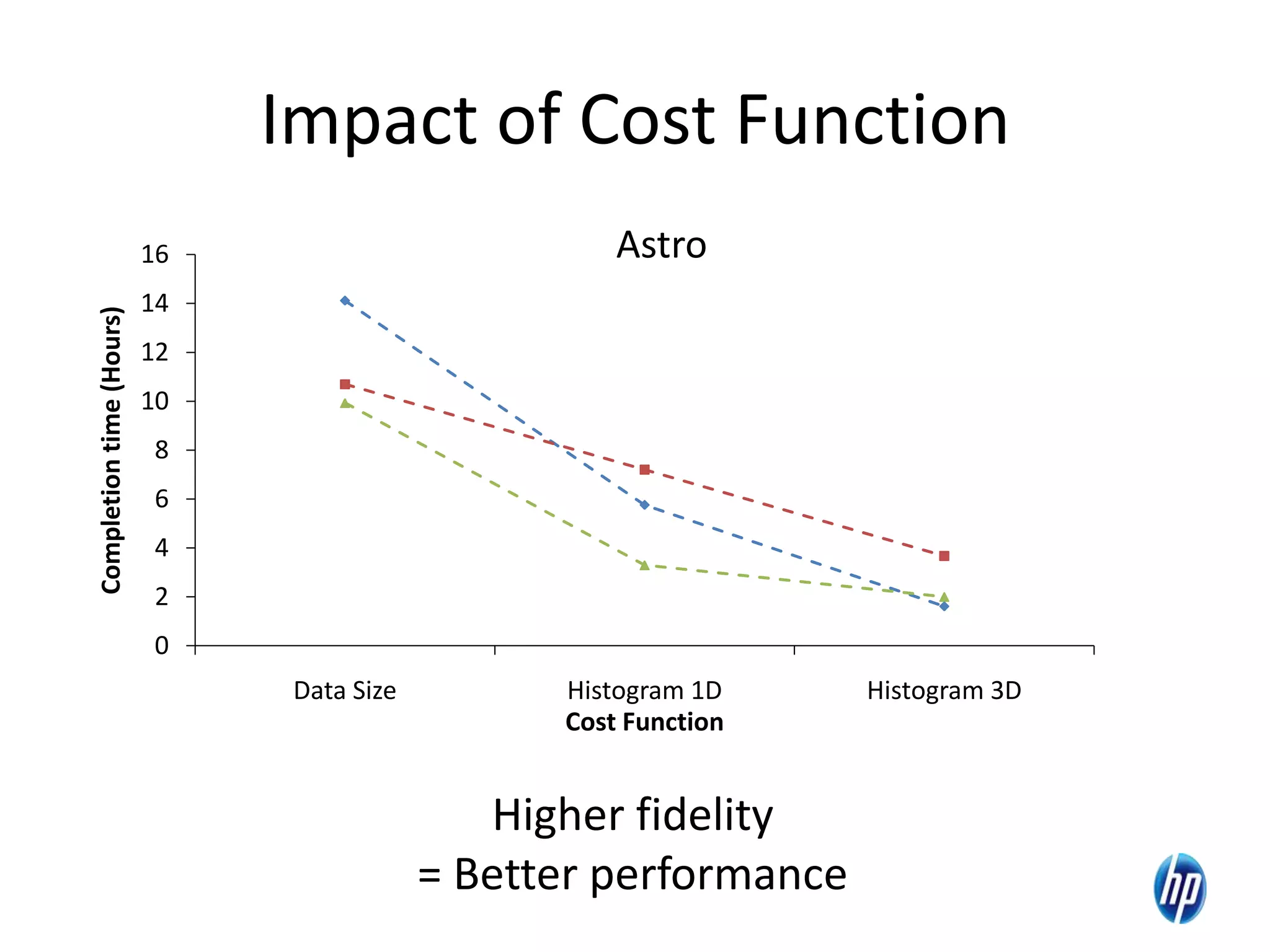

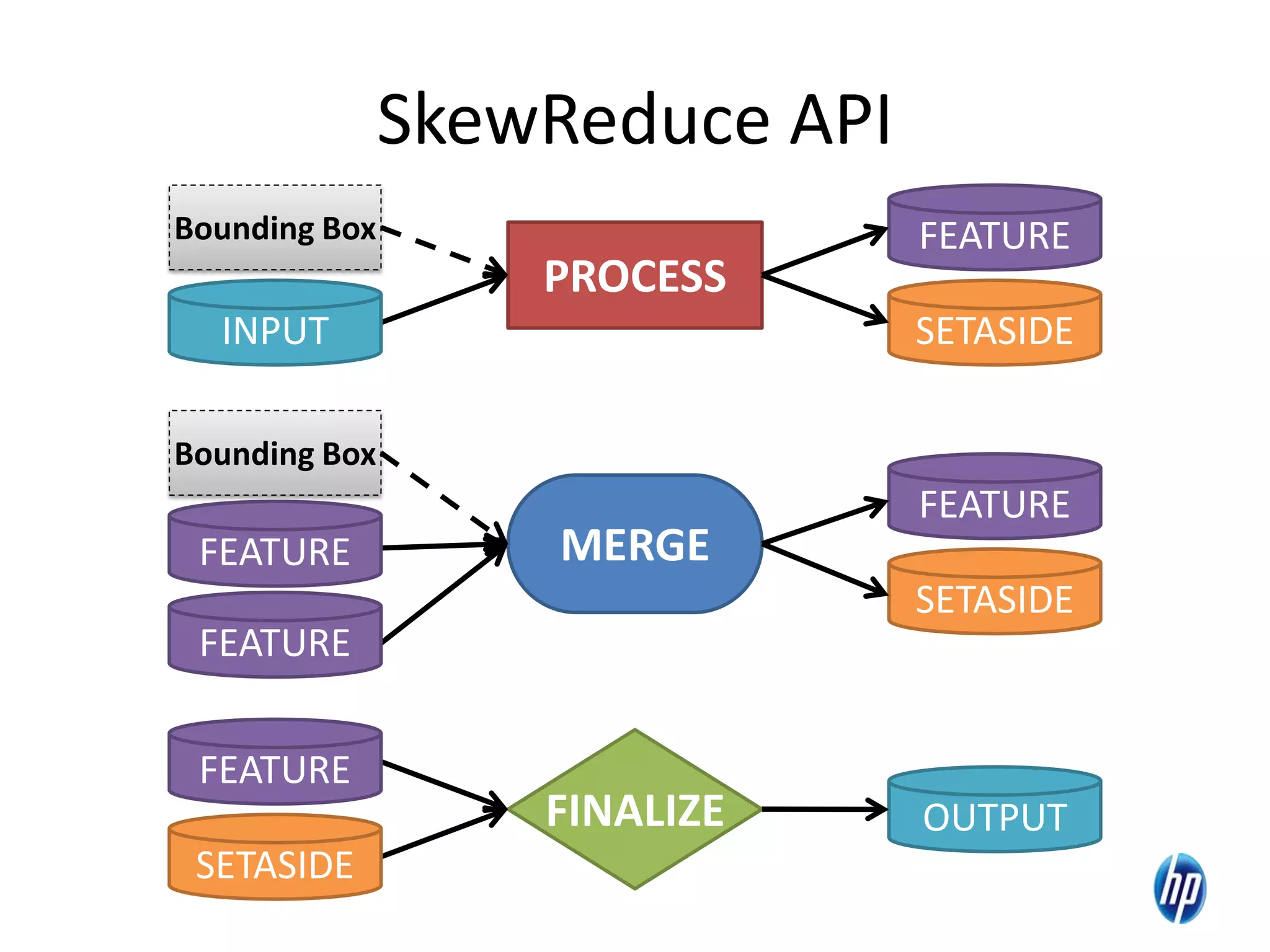

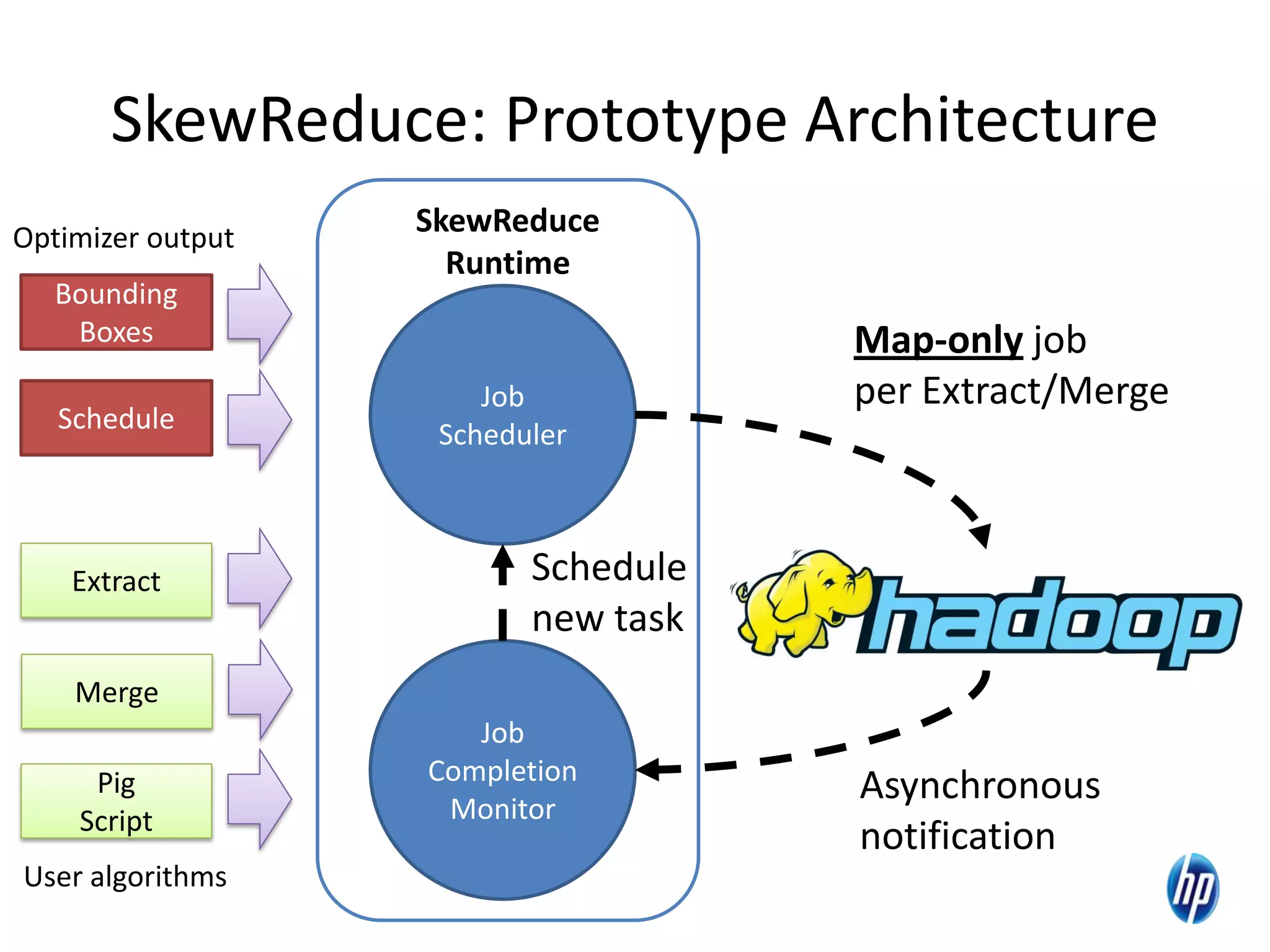

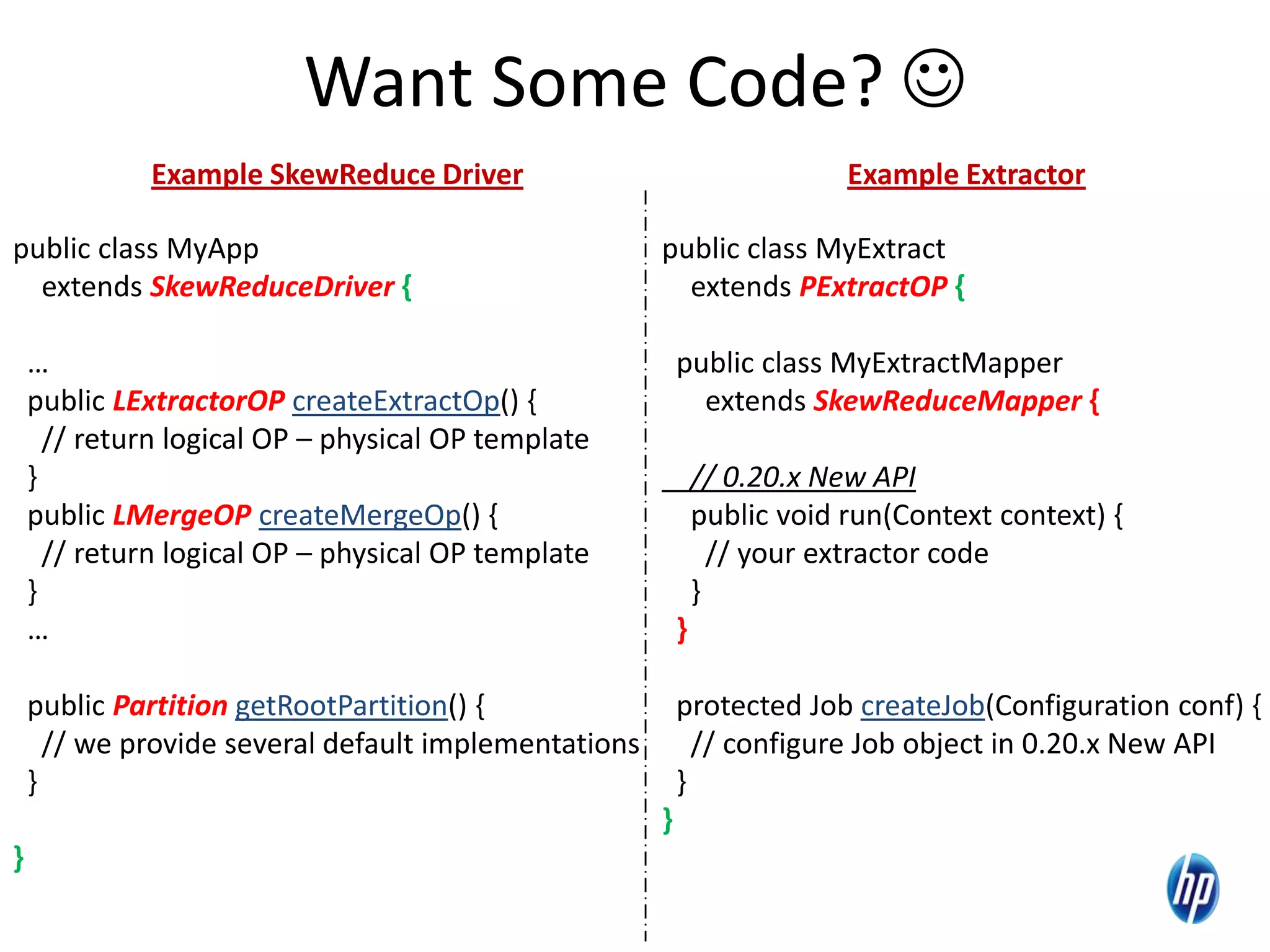

The document examines computational skew in scientific data analysis on Hadoop and MapReduce, where some partitions take far longer to process than others, and argues that effective data partitioning is key to performance. It introduces SkewReduce, an approach that automatically derives data-partitioning and merge plans to reduce processing time across a range of scientific applications. The evaluation reports significant runtime reductions, supporting the approach's effectiveness against computational skew on large datasets.
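To make the idea of cost-aware partitioning concrete, here is a minimal Python sketch. It is purely illustrative and is not the algorithm or API described in the document: the names `estimate_cost` and `partition_by_cost`, the quadratic cost model, and the median-split rule are all assumptions chosen for brevity. The sketch repeatedly bisects whichever partition is estimated to be most expensive, so that dense regions end up in smaller pieces.

```python
import heapq


def estimate_cost(points):
    # Hypothetical cost model (an assumption): work grows quadratically
    # with the number of points in a partition, as in pairwise comparisons.
    return len(points) ** 2


def partition_by_cost(points, max_partitions, cost_fn=estimate_cost):
    """Bisect the most expensive partition until max_partitions is reached."""
    points = sorted(points)
    if not points:
        return []
    # heapq is a min-heap, so costs are negated to pop the largest first.
    # The integer counter breaks ties so the lists are never compared.
    heap = [(-cost_fn(points), 0, points)]
    counter = 1
    while len(heap) < max_partitions:
        neg_cost, _, part = heapq.heappop(heap)
        if len(part) < 2:
            # The most expensive partition cannot be split further; stop.
            heapq.heappush(heap, (neg_cost, counter, part))
            break
        mid = len(part) // 2
        for half in (part[:mid], part[mid:]):
            heapq.heappush(heap, (-cost_fn(half), counter, half))
            counter += 1
    # Return partitions ordered by their smallest element.
    return sorted((part for _, _, part in heap), key=lambda p: p[0])


if __name__ == "__main__":
    for part in partition_by_cost(list(range(8)), 4):
        print(part)
```

A real planner would estimate cost from a data sample and a domain-specific cost function rather than from partition size alone, and would also need a plan for merging the partial results; the sketch leaves both out.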