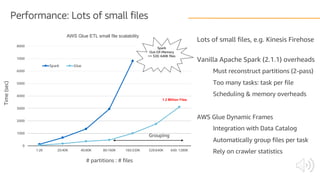

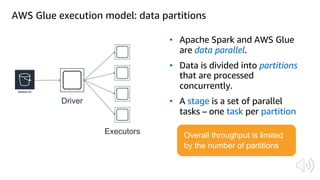

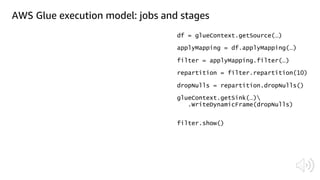

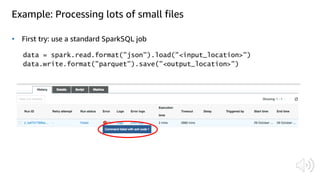

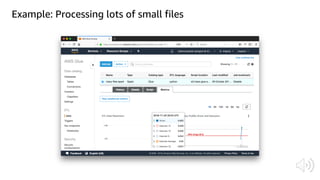

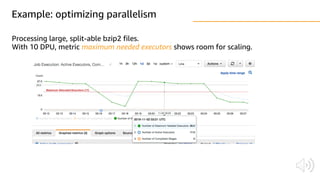

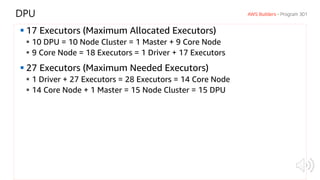

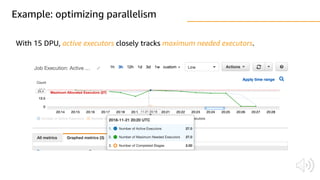

The document discusses AWS Glue, a fully managed ETL service. It provides an overview of Glue's programming environment and data processing model. It then walks through several ways to optimize Glue job performance: processing many small files, processing a few large files, increasing parallelism with JDBC partitions, improving Python performance, and using the new Python shell job type.

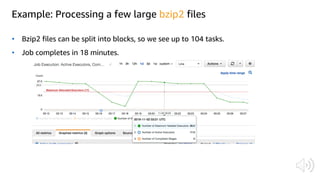

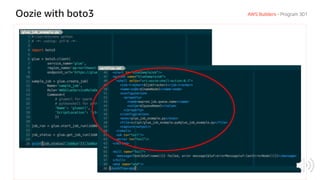

![Basics of ETL Job Programming](https://image.slidesharecdn.com/awsbuildersaws301effectiveawsgluetaehyunkim-190306061037/85/AWS-Builders-Effective-AWS-Glue-20-320.jpg)

Basics of ETL Job Programming

1. Initialize
2. Read
3. Transform data
4. Write

```python
import sys

from awsglue.context import GlueContext
from awsglue.utils import getResolvedOptions
from pyspark.context import SparkContext

# Resolve job arguments; --TempDir is the job's temporary S3 directory
args = getResolvedOptions(sys.argv, ["TempDir"])

# 1. Initialize
glueContext = GlueContext(SparkContext.getOrCreate())

# 2. Read: create a DynamicFrame from a Data Catalog table
ds0 = glueContext.create_dynamic_frame.from_catalog(
    database="mysql", table_name="customer",
    transformation_ctx="ds0")

# 3. Transform: implement data transformations here
ds1 = ds0  # ...

# 4. Write the DynamicFrame to a Data Catalog table (Redshift stages data in TempDir)
ds2 = glueContext.write_dynamic_frame.from_catalog(
    frame=ds1, database="redshift",
    table_name="customer_dim",
    redshift_tmp_dir=args["TempDir"],
    transformation_ctx="ds2")
```

![Relationalize() transform: semi-structured schema to relational schema](https://image.slidesharecdn.com/awsbuildersaws301effectiveawsgluetaehyunkim-190306061037/85/AWS-Builders-Effective-AWS-Glue-26-320.jpg)

Relationalize() transform

Figure: a semi-structured schema (nested fields and arrays) mapped to a relational schema (flattened columns such as C.X and C.Y with a foreign key FK, plus a separate table with PK, Offset, and Value columns).

- Transforms and adds new columns, types, and tables on the fly
- Tracks keys and foreign keys across runs
- SQL on the relational schema is orders of magnitude faster than JSON processing
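To make the slide's idea concrete, here is a minimal pure-Python sketch of what relationalizing means. It is not Glue's implementation or API. Nested objects become dotted columns such as `C.X` and `C.Y`. Each array is moved to a child table, and the parent row stores that table's key as a foreign key. The function names `relationalize` and `to_sqlite`, the `root_<field>` table naming, and the `id`/`offset`/`value` child columns are all hypothetical. The sketch assumes arrays hold scalar values, and its keys are unique only within a single call: unlike the real transform, it does not track keys across runs. The in-memory SQLite join at the end shows how the foreign key reconnects array elements to their parent rows.

```python
import sqlite3
from typing import Any


def relationalize(
    records: list[dict[str, Any]], root: str = "root"
) -> dict[str, list[dict[str, Any]]]:
    """Hypothetical sketch: flatten nested records into a root table plus
    one child table per array field (not the Glue Relationalize API)."""
    tables: dict[str, list[dict[str, Any]]] = {root: []}
    next_key = 1  # keys are unique only within this call (no cross-run tracking)

    def flatten(obj: dict[str, Any], prefix: str, row: dict[str, Any]) -> None:
        nonlocal next_key
        for name, val in obj.items():
            col = f"{prefix}{name}"
            if isinstance(val, dict):
                # Nested object: recurse, producing dotted columns such as C.X
                flatten(val, col + ".", row)
            elif isinstance(val, list):
                # Array: store a foreign key in the parent row and move the
                # elements (assumed scalar) into a child table
                key = next_key
                next_key += 1
                row[col] = key
                child = tables.setdefault(f"{root}_{col}", [])
                for offset, item in enumerate(val):
                    child.append({"id": key, "offset": offset, "value": item})
            else:
                row[col] = val

    for record in records:
        row: dict[str, Any] = {}
        flatten(record, "", row)
        tables[root].append(row)
    return tables


def to_sqlite(tables: dict[str, list[dict[str, Any]]]) -> sqlite3.Connection:
    """Load each flattened table into an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    for name, rows in tables.items():
        # Union of column names across rows, in first-seen order
        cols = list(dict.fromkeys(c for r in rows for c in r))
        if not cols:
            continue  # skip tables with no rows (e.g. only empty arrays)
        col_sql = ", ".join(f'"{c}"' for c in cols)  # quote names like "C.X"
        marks = ", ".join("?" for _ in cols)
        conn.execute(f'CREATE TABLE "{name}" ({col_sql})')
        conn.executemany(
            f'INSERT INTO "{name}" ({col_sql}) VALUES ({marks})',
            [tuple(r.get(c) for c in cols) for r in rows],
        )
    return conn


records = [
    {"A": 1, "B": "b1", "C": {"X": 10, "Y": 20}, "D": ["d1", "d2"]},
    {"A": 2, "B": "b2", "C": {"X": 11, "Y": 21}, "D": ["d3"]},
]
tables = relationalize(records)
conn = to_sqlite(tables)

# Rejoin array elements to their parent rows through the foreign key
joined = conn.execute(
    'SELECT r."A", d."value" FROM "root" AS r '
    'JOIN "root_D" AS d ON r."D" = d."id" '
    'ORDER BY r."A", d."offset"'
).fetchall()
print(joined)  # [(1, 'd1'), (1, 'd2'), (2, 'd3')]
```

In an actual Glue job you would instead apply the built-in `Relationalize` transform to a DynamicFrame. It returns a `DynamicFrameCollection` with one frame per resulting table.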