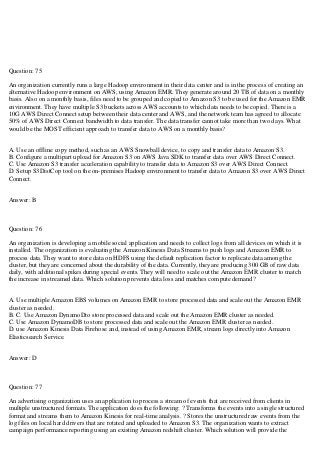

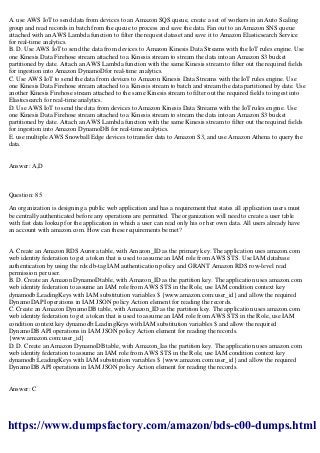

The introduction of BDS-C00 dumps has satisfied many candidates who are eager to pass the AWS Certified Big Data - Specialty exam. If you are searching for dependable material, you are in the right place: visit us at DumpsFactory and download your study material. You can download free demo questions that show you what the material is like before you use it. The BDS-C00 exam dumps are also available in PDF form for your convenience.

DumpsFactory has become a highly trusted provider of exam material. We give priority to our users and try to make their work easier by every means. We also provide an online testing engine so you can improve your knowledge by practicing with the software. Repetition will help you memorize the answers. The testing engine also works as a simulator and gives you a feel for the actual exam, so you don't get nervous in an unfamiliar situation during the test.

Your money is also secure: it will be refunded if, unfortunately, you fail your exam. We make this promise because of our confidence in the BDS-C00 exam dumps.