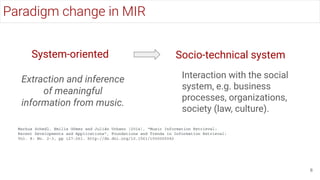

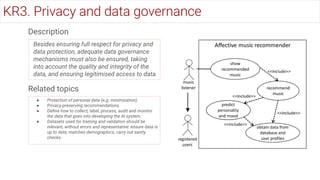

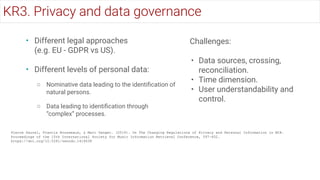

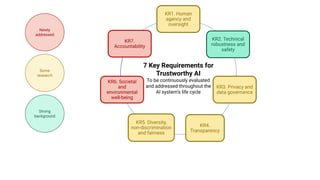

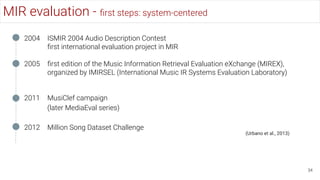

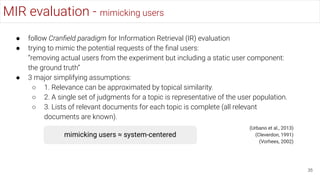

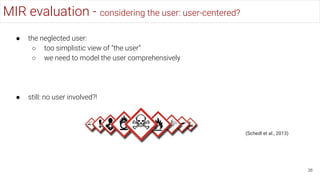

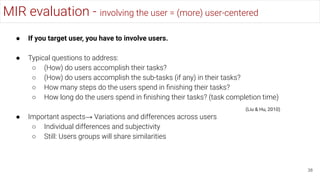

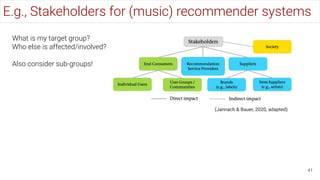

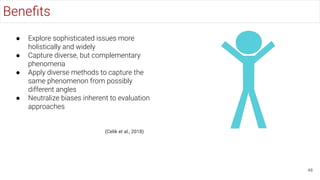

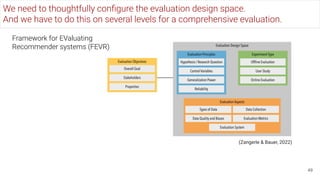

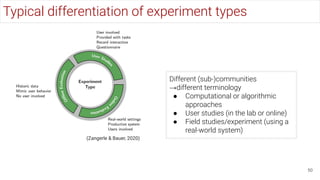

The document outlines a tutorial on trustworthy music information retrieval (MIR), emphasizing the ethical and legal considerations necessary for AI systems applied to music. It discusses the importance of human agency, technical robustness, privacy, transparency, diversity, societal impact, and accountability throughout the AI system's lifecycle. Various evaluation strategies for MIR systems that account for multiple stakeholder perspectives and user-centered approaches are also highlighted.

![Recommendation on the Ethics of AI (UNESCO, 2020)

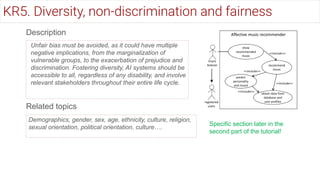

“AI actors should promote social justice and safeguard fairness and non-discrimination of any kind [...]. This implies an inclusive approach to ensuring that the benefits of AI technologies are available and accessible to all, taking into consideration the specific needs of different age groups, cultural systems, different language groups, persons with disabilities, girls and women, and disadvantaged, marginalized and vulnerable people or people in vulnerable situations.” (Section III.2, Article 28)](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-61-320.jpg)

![Agreement and Perceptions

- Listeners trained in classical music tend to disagree more on perceived emotions than those not trained [Schedl et al., 2018]
- Preference, familiarity, and lyrics comprehension increase agreement for emotions corresponding to quadrants Q1 and Q3 [Gómez-Cañón et al., 2020]
- Current emotional state of users influences their perception of music similarity [Flexer et al., 2021]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-108-320.jpg)

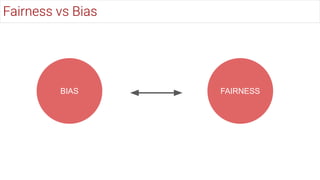

![The ML community has begun to address claims of algorithmic bias under the rubric of fairness, accountability, and transparency.

We should clearly define:

● Assumptions
● The goals or principles we build on
● Methods used to evaluate the ability to meet those goals
● How to assess the relevance and appropriateness of those goals to the social problem in question

What is fair? Fair for who?

[*] Redistribution and Rekognition: A Feminist Critique of Algorithmic Fairness [West, 2020]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-121-320.jpg)

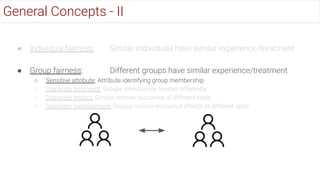

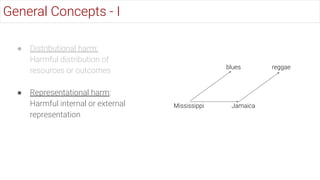

![General Concepts - I

● Distributional harm: harmful distribution of resources or outcomes
● Representational harm: harmful internal or external representation

[Figure labels: Popularity, Recommended]

Fairness in Information Access Systems [Ekstrand et al., 2022]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-123-320.jpg)

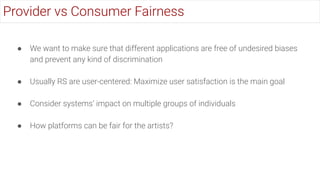

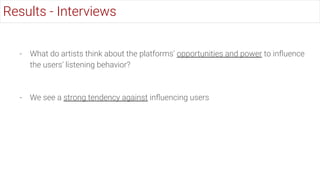

![What is fair in Music recommendation?

● The leading questions are:
○ How do artists feel affected by current systems?
○ What do artists want to see improved in the future?
○ What concrete ideas or even algorithmic decisions could be adopted in future systems?

What is fair? Exploring the artists' perspective on the fairness of music streaming platforms [Ferraro et al., 2021]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-134-320.jpg)

![Methodology - Semi-structured interviews and Qualitative Content Analysis

Design of semi-structured interviews → Documents preparation → Selected participants based on diversity → Conducting interviews → Interview transcription → Coding → Analysis of results

Qualitative content analysis [Mayring, 2004]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-135-320.jpg)

![“[...] the population of the world is 50% women. So it would be ridiculous if the system wouldn’t recommend them.”](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-141-320.jpg)

![Gender Imbalance in Music Recsys

Based on the interviews, we focus on the topic of gender balance.

● Qualitative approach:
○ Artists express that platforms should promote gender balance
● Quantitative approach:
○ Using 2 datasets of user listening data, evaluate how users’ listening history differs from the collaborative filtering (CF) recommendations
○ What is the long-term effect on user consumption?

Break the Loop: Gender Imbalance in Music Recommenders [Ferraro et al., 2021]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-142-320.jpg)

![Gender Imbalance in Music Recsys - Results

- 3 sources of unfairness:
  - Representation: proportion similar to that in users’ history
  - Exposure: users have to put in more effort to reach the first female artist in the recommendations
  - Utility: the model makes more “mistakes” for female artists than for male artists

Similar results in:
Exploring Artist Gender Bias in Music Recommendation [Shakespeare et al., 2020]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-144-320.jpg)
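The representation and exposure notions on the results slide above can be illustrated with a minimal Python sketch. It is not the evaluation code of the cited studies: the function names `female_share` and `first_female_rank`, the string labels `"female"` and `"male"`, and the toy lists are all assumptions made for illustration, and the utility notion (per-group model error) is not covered here.

```python
from typing import Optional, Sequence


def female_share(genders: Sequence[str]) -> float:
    """Representation: fraction of items whose artist is labelled 'female'."""
    if not genders:
        return 0.0
    return sum(g == "female" for g in genders) / len(genders)


def first_female_rank(ranked_genders: Sequence[str]) -> Optional[int]:
    """Exposure: 1-based position of the first female artist in a ranked list.

    Returns None when the list contains no female artist.
    """
    for rank, gender in enumerate(ranked_genders, start=1):
        if gender == "female":
            return rank
    return None


# Hypothetical toy data: artist gender labels for one user's listening
# history and for that user's ranked recommendation list.
history = ["male", "male", "female", "male"]
recs = ["male", "male", "male", "female", "male"]

history_share = female_share(history)  # 0.25
rec_share = female_share(recs)         # 0.2
first_rank = first_female_rank(recs)   # 4
```

Comparing `history_share` with `rec_share` gives a rough view of representation, while `first_rank` approximates how far a user has to scroll before meeting a female artist. Averaging both over many users would be the natural next step.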

![References - Simulations

- Exploring Longitudinal Effects of Session-based Recommendations. In Proceedings of the 14th ACM Conference on Recommender Systems [Ferraro, Jannach & Serra, 2020]
- Consumption and performance: understanding longitudinal dynamics of recommender systems via an agent-based simulation framework [Zhang et al., 2019]
- Longitudinal impact of preference biases on recommender systems’ performance [Zhou, Zhang, & Adomavicius, 2021]
- The Dual Echo Chamber: Modeling Social Media Polarization for Interventional Recommending [Donkers & Ziegler, 2021]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-152-320.jpg)

![References - Fairness for Users

- Exploring User Opinions of Fairness in Recommender Systems [Smith et al., 2020]
- Fairness and Transparency in Recommendation: The Users’ Perspective [Sonboli et al., 2021]
- The multisided complexity of fairness in recommender systems [Sonboli et al., 2022]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-153-320.jpg)

![References - Unfairness for users

- The Unfairness of Popularity Bias in Music Recommendation: A Reproducibility Study [Kowald, Schedl & Lex, 2020]
- Support the underground: characteristics of beyond-mainstream music listeners [Kowald et al., 2021]
- Analyzing Item Popularity Bias of Music Recommender Systems: Are Different Genders Equally Affected? [Lesota et al., 2021]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-154-320.jpg)

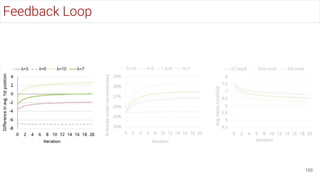

![Music Record Labels

Bias and Feedback Loops in Music Recommendation: Studies on Record Label Impact [Knees, Ferraro & Hübler, 2022]](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-155-320.jpg)

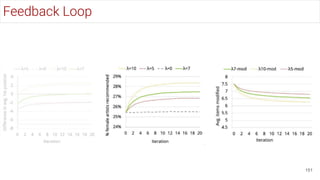

![Cover Song Identification

Assessing Algorithmic Biases for Musical Version Identification [Yesiler et al., 2022]

- Multiple stakeholders: composers and other artists
- Compare 5 systems
- Use different attributes of the songs/artists

They identify algorithmic biases in cover song identification systems that may lead to differences in performance that (dis)favor different groups of artists, e.g.:

- Tracks released after 2000 work better
- A larger gap between release years leads to worse performance
- Learning-based systems perform better for underrepresented artists](https://image.slidesharecdn.com/trustworthymir-ismir2022tutorial-221205091000-9e83d8b2/85/TRUSTWORTHY-MIR-ISMIR-2022-Tutorial-pdf-158-320.jpg)
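One simple way to look for performance differences of the kind described above is to break a per-query score down by a song or artist attribute and compare group averages. The sketch below is only an illustration under stated assumptions, not the procedure of the cited study: `group_means` and `max_gap` are hypothetical helpers, the scores are made-up per-query values, and the group labels `"after_2000"` and `"before_2000"` are invented for the example.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Hashable, Sequence


def group_means(scores: Sequence[float],
                groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Average a per-query score separately for each attribute group."""
    buckets = defaultdict(list)
    for score, group in zip(scores, groups):
        buckets[group].append(score)
    return {group: mean(values) for group, values in buckets.items()}


def max_gap(per_group: Dict[Hashable, float]) -> float:
    """Largest difference in mean score between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)


# Hypothetical per-query scores (e.g. average precision) and the release-era
# group of each query track. These numbers are invented for illustration.
scores = [1.0, 0.5, 0.5, 0.0]
groups = ["after_2000", "after_2000", "before_2000", "before_2000"]

per_group = group_means(scores, groups)  # {'after_2000': 0.75, 'before_2000': 0.25}
gap = max_gap(per_group)                 # 0.5
```

A large `gap` only flags a difference worth investigating. Whether it reflects an algorithmic bias depends on group sizes, the data distribution, and a suitable significance test.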
