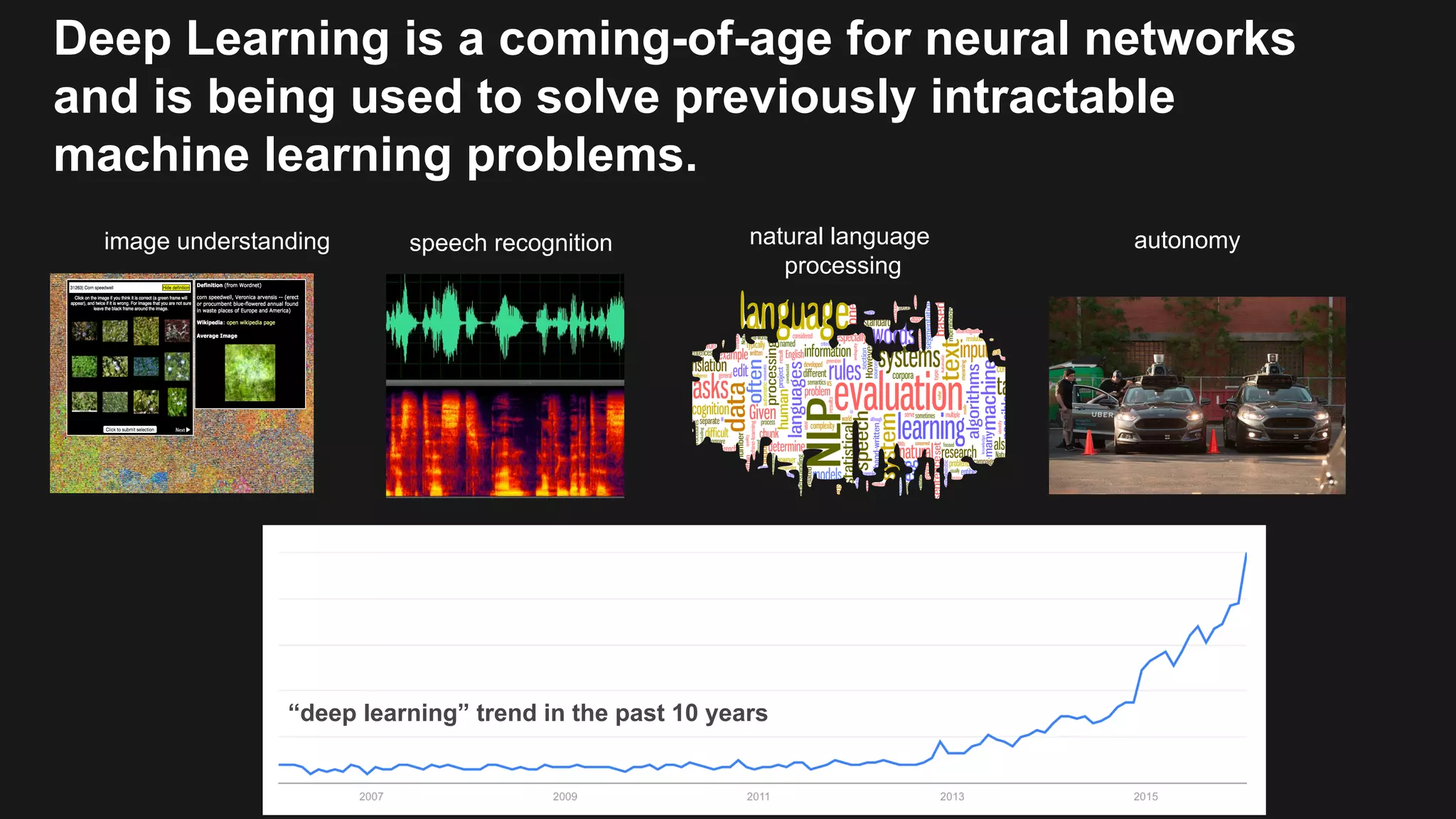

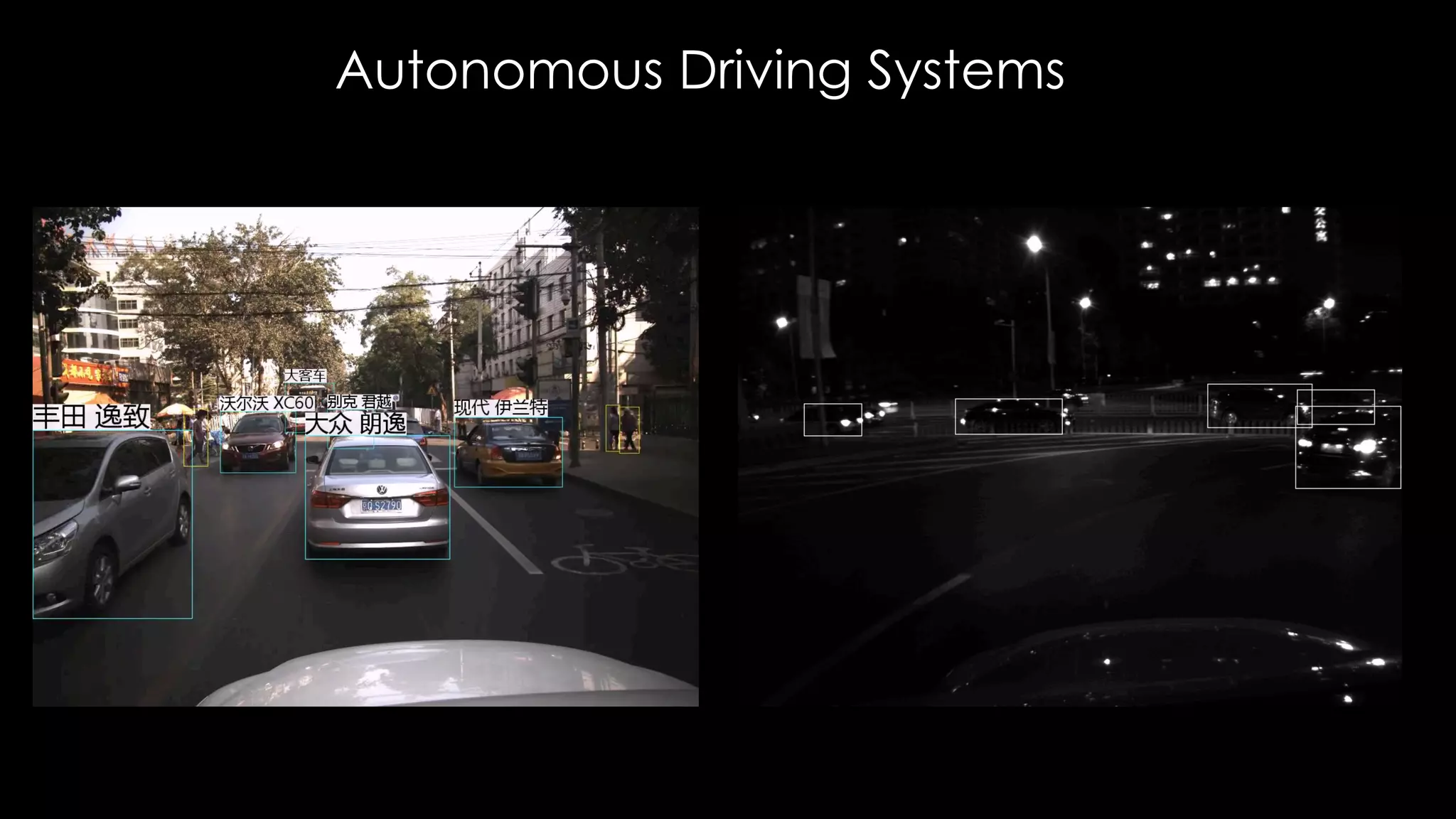

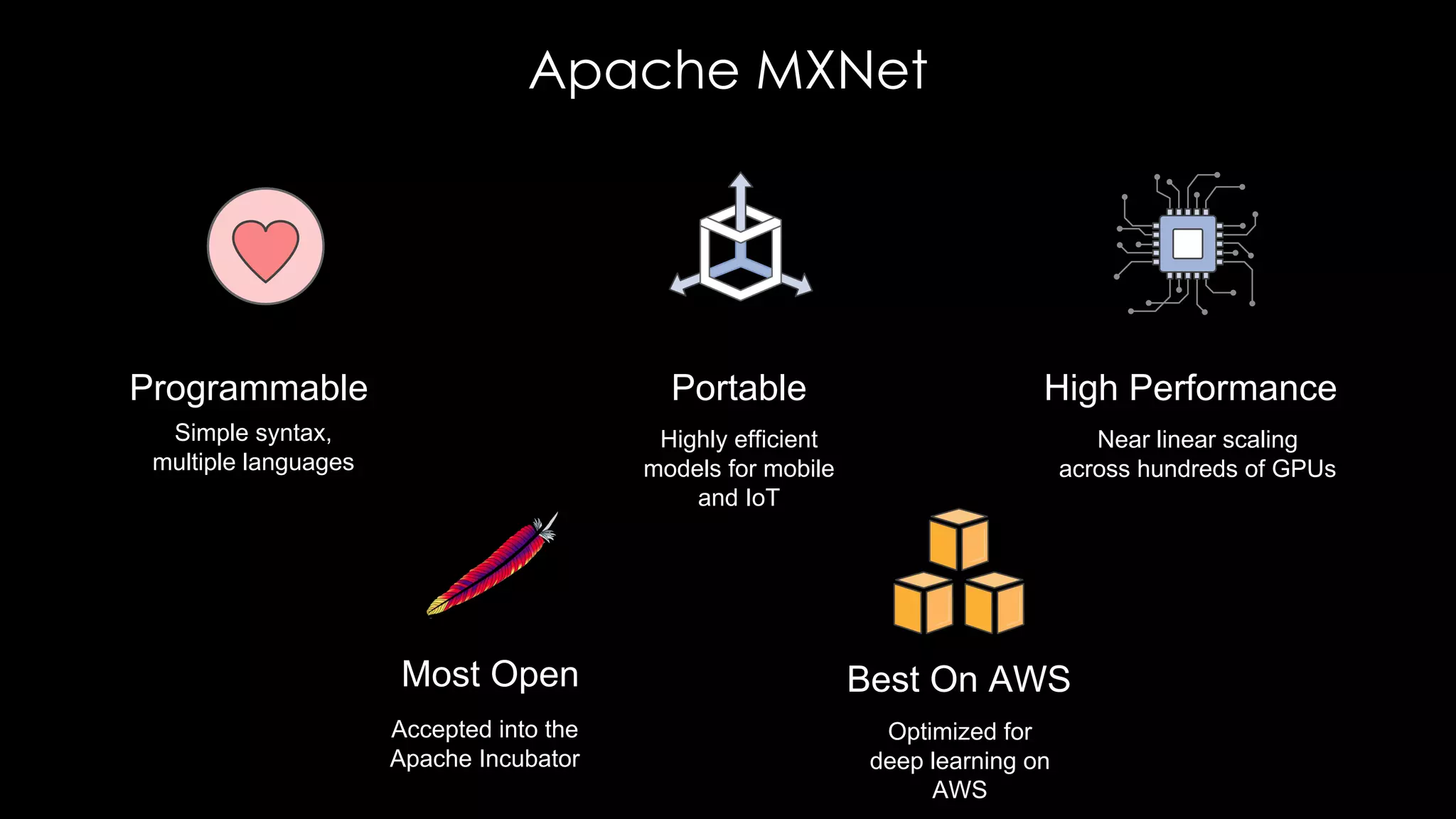

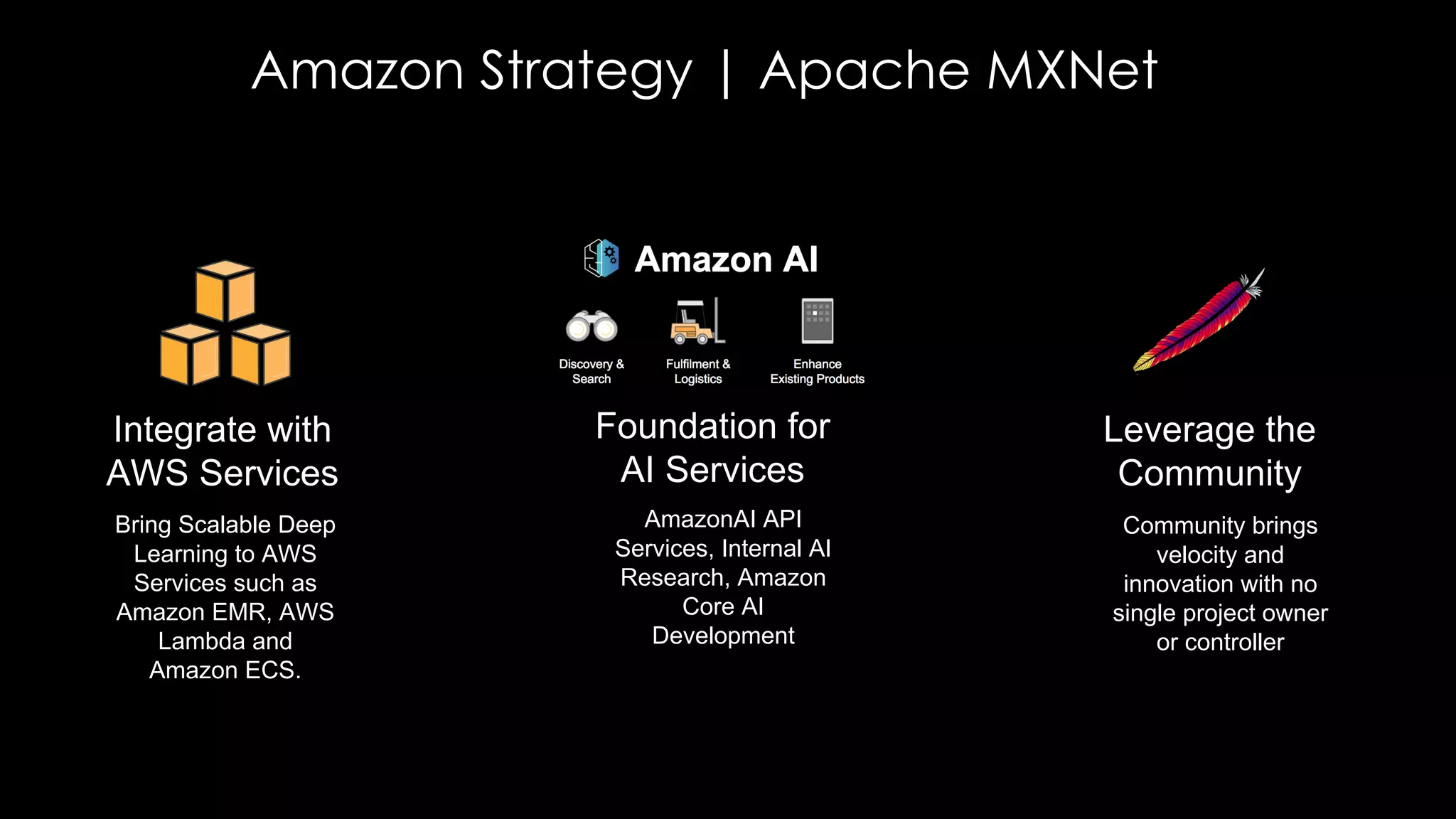

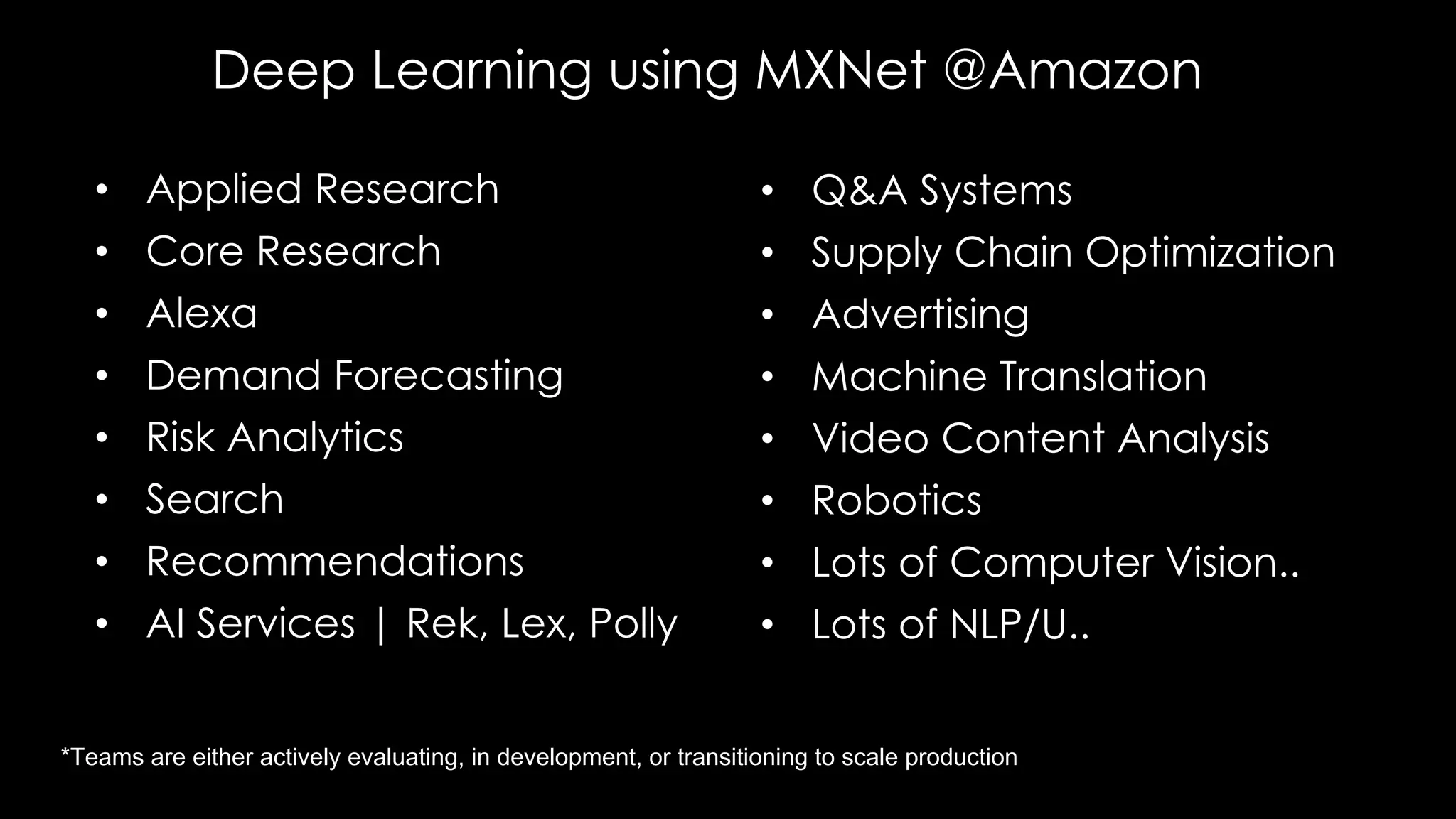

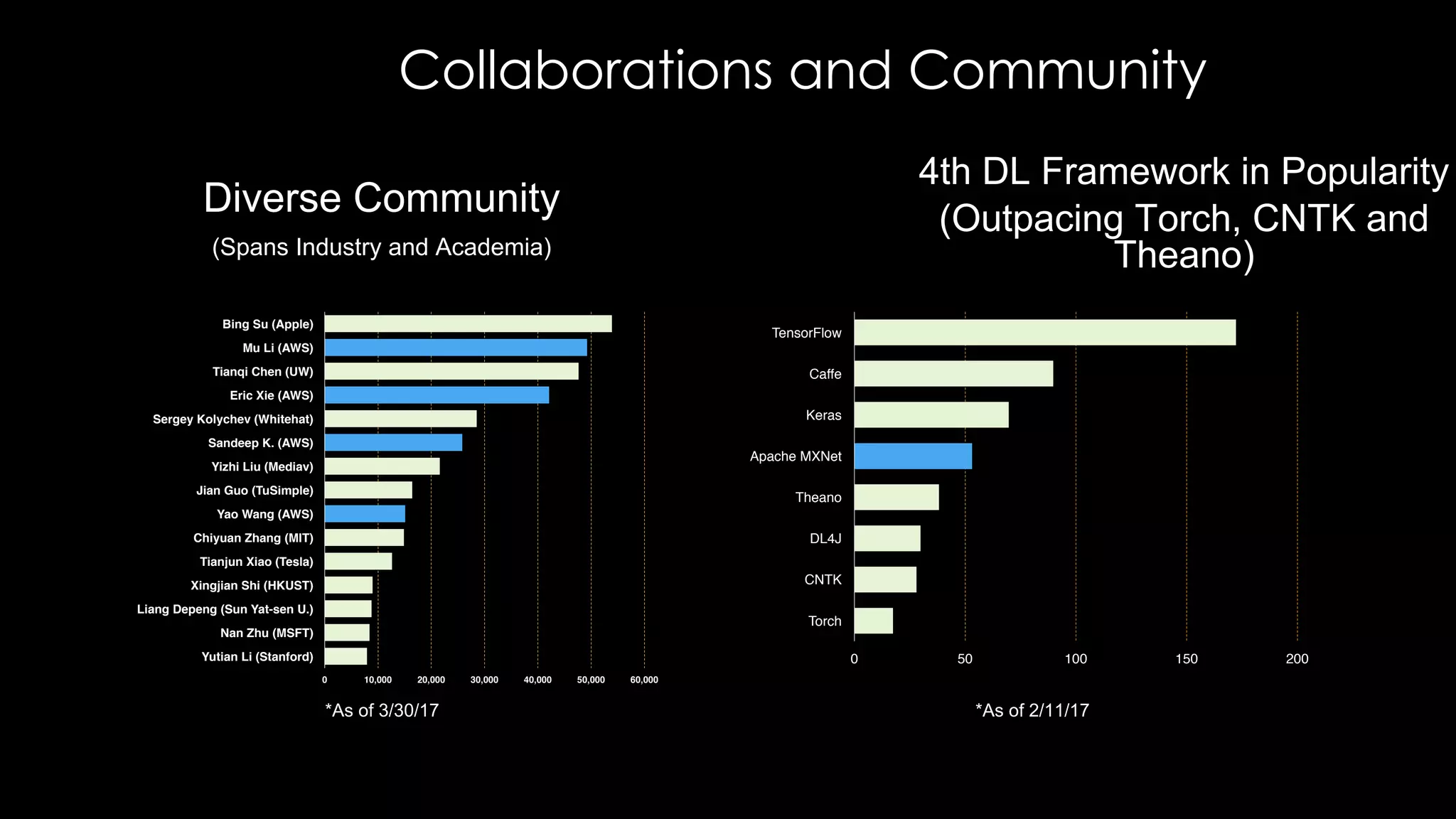

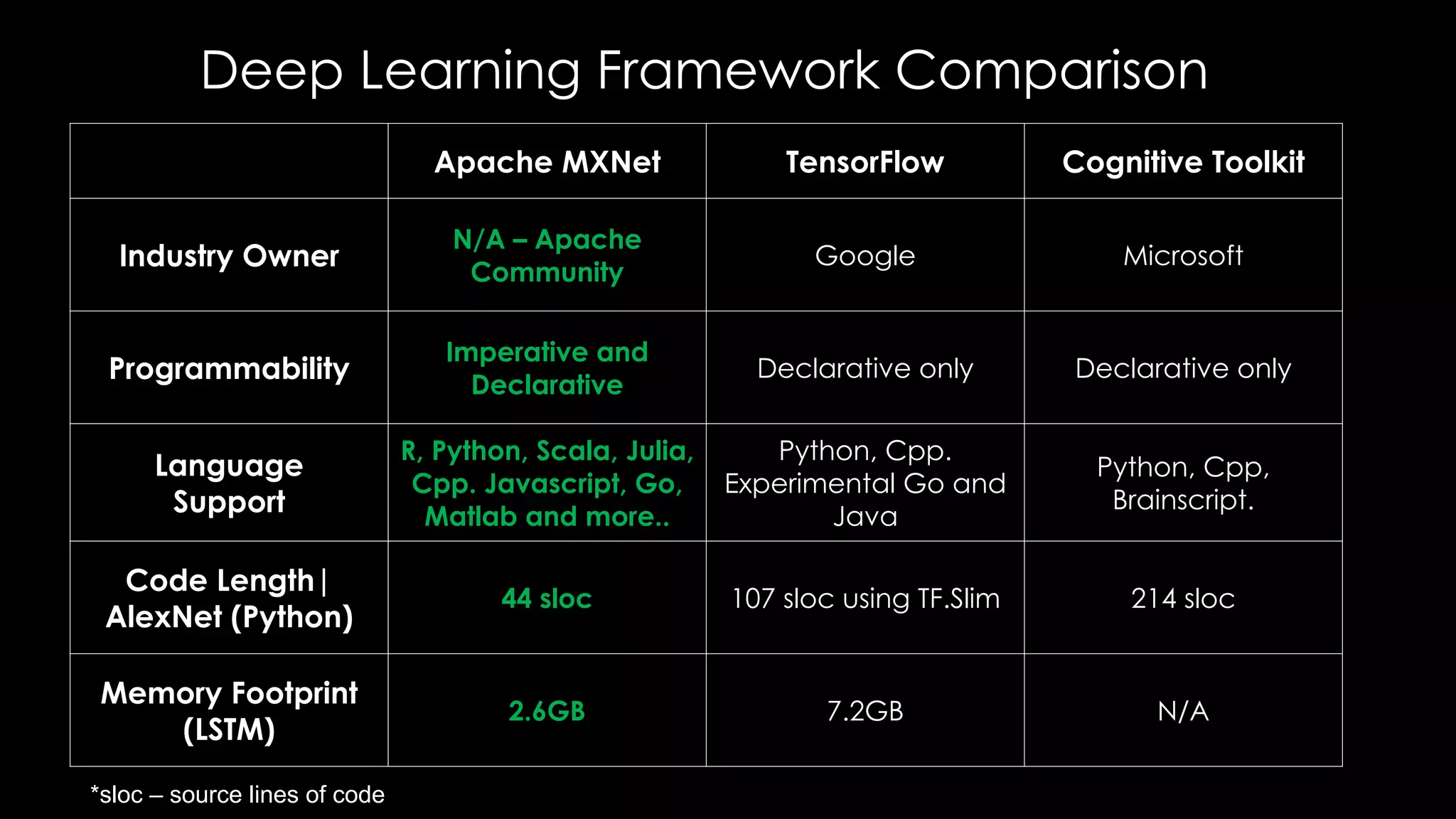

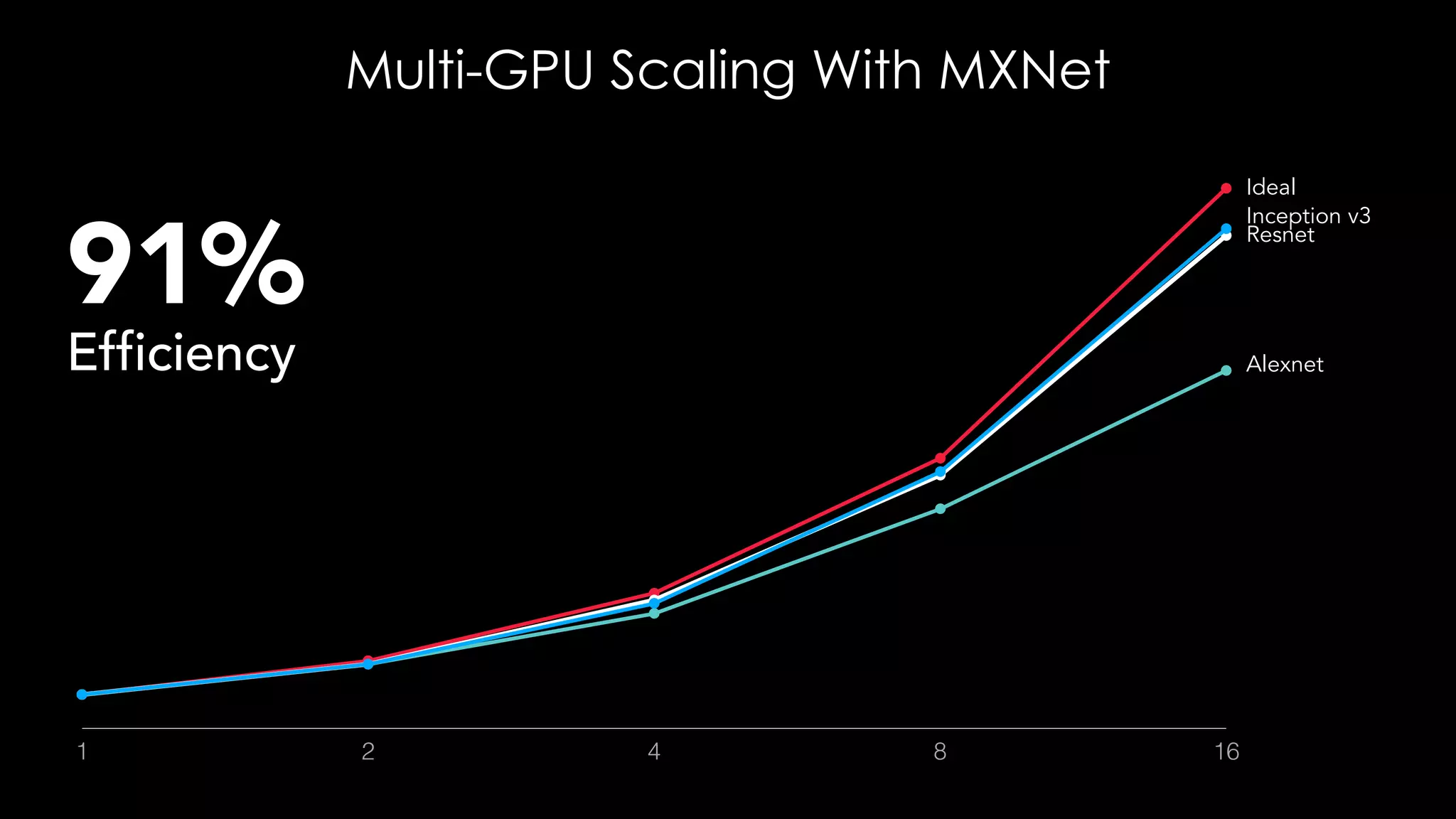

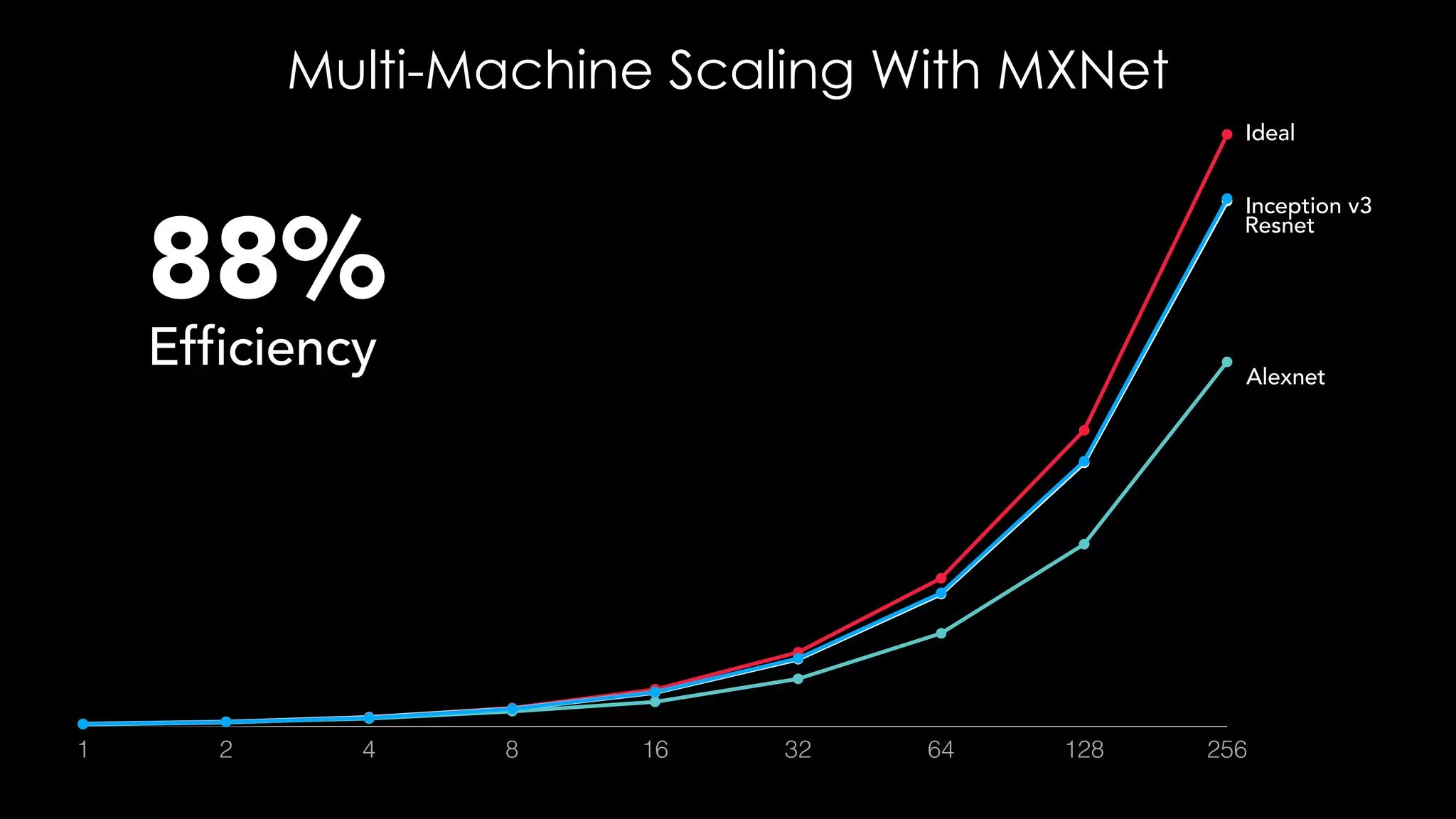

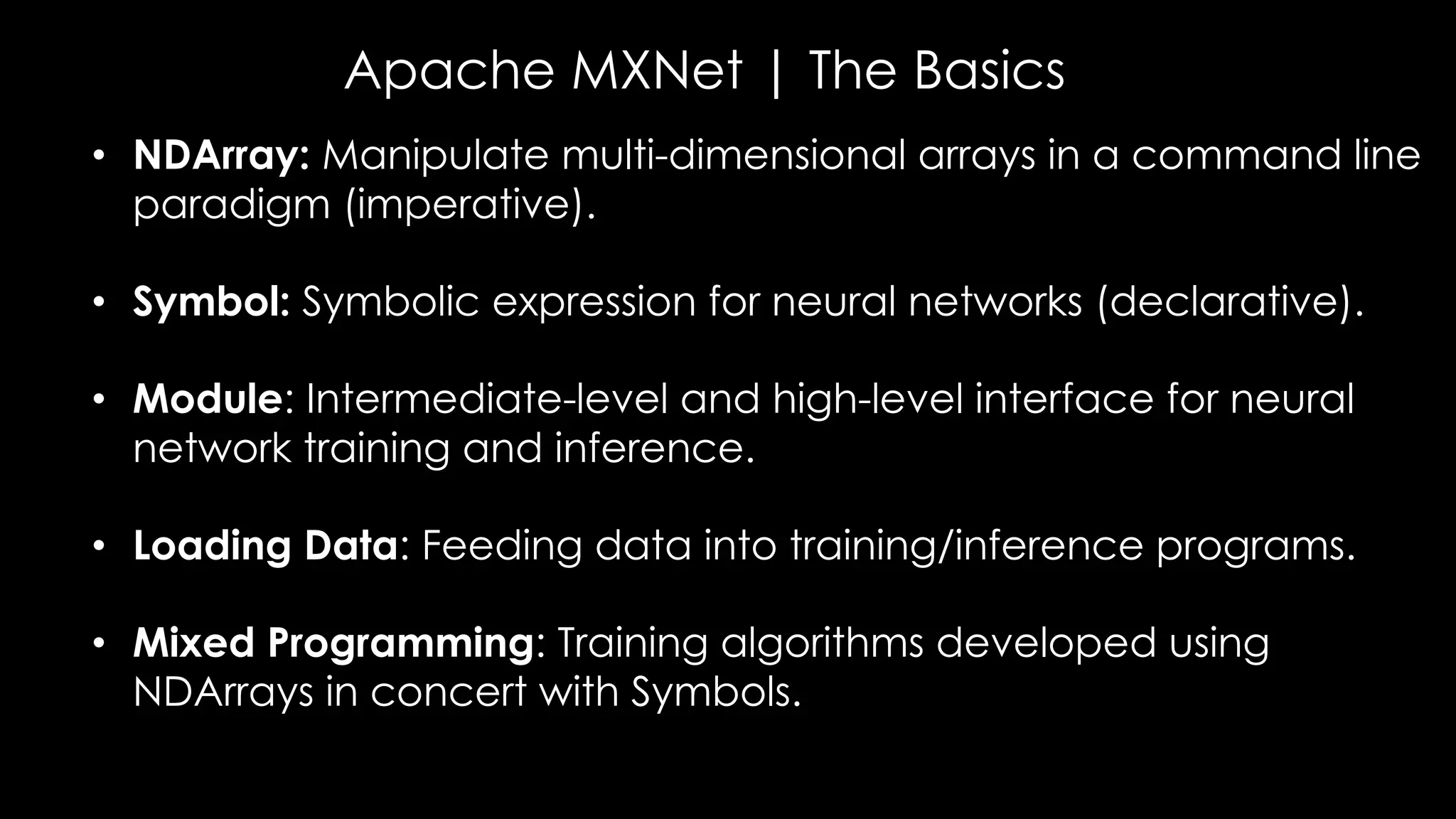

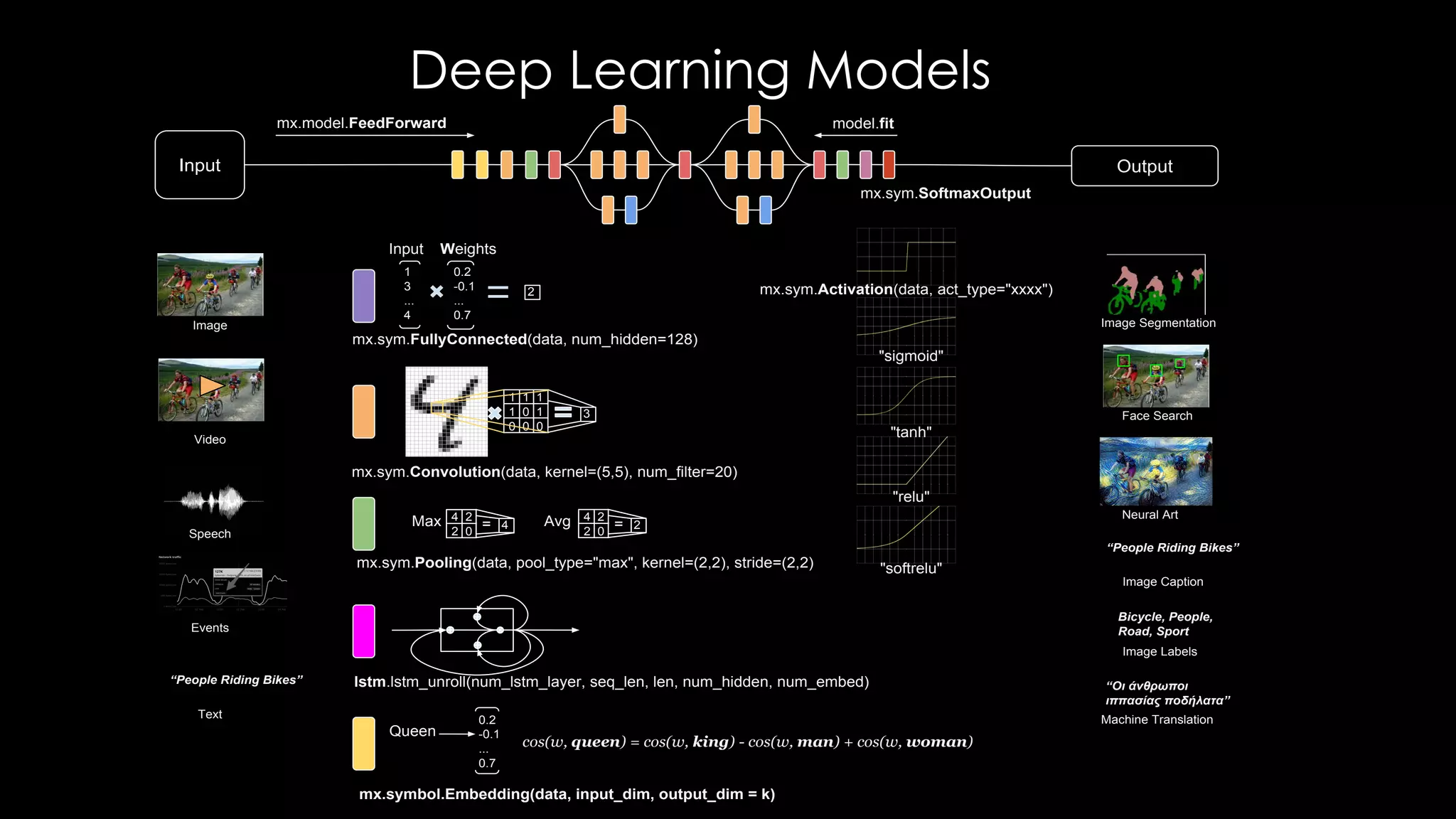

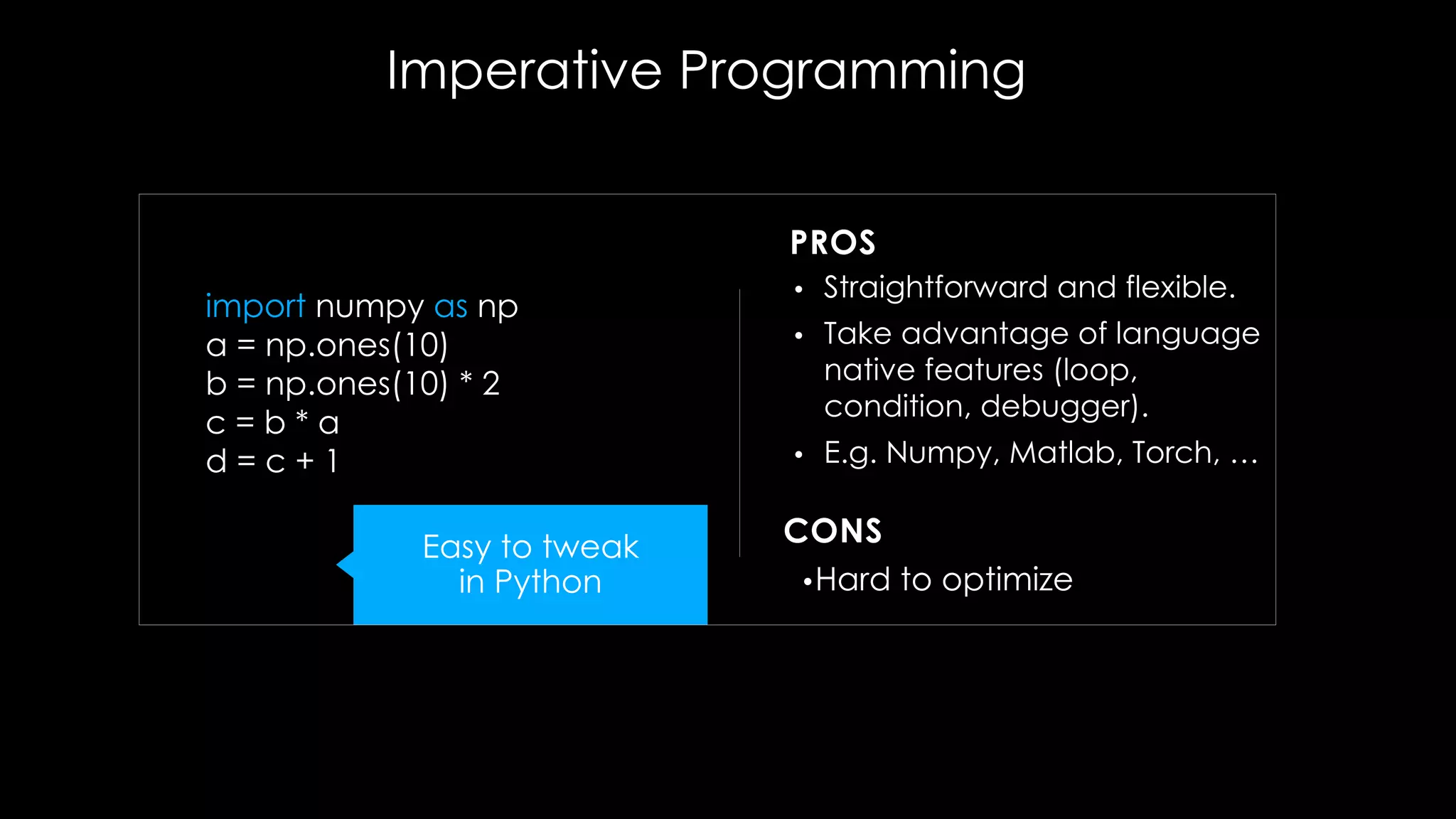

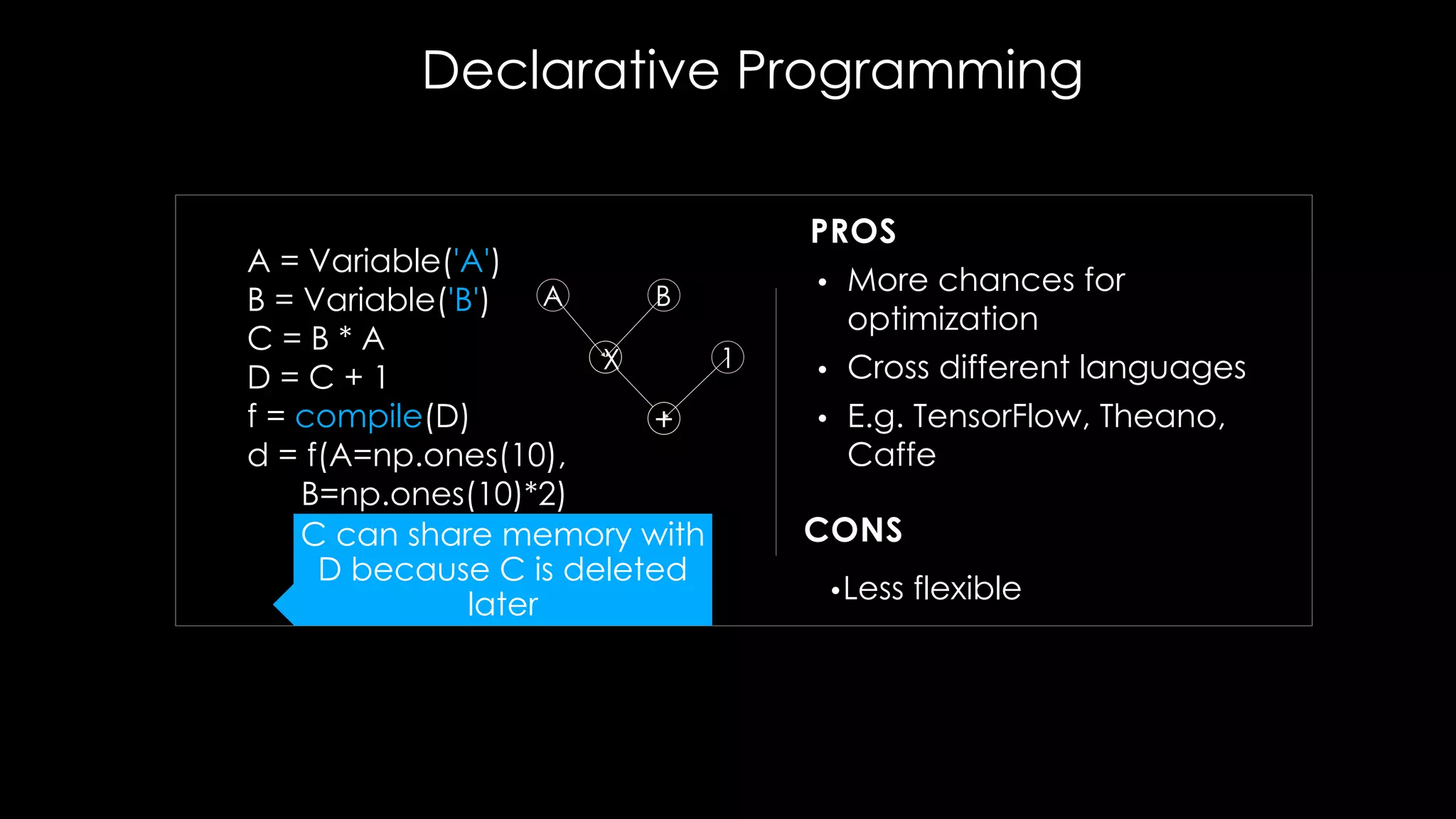

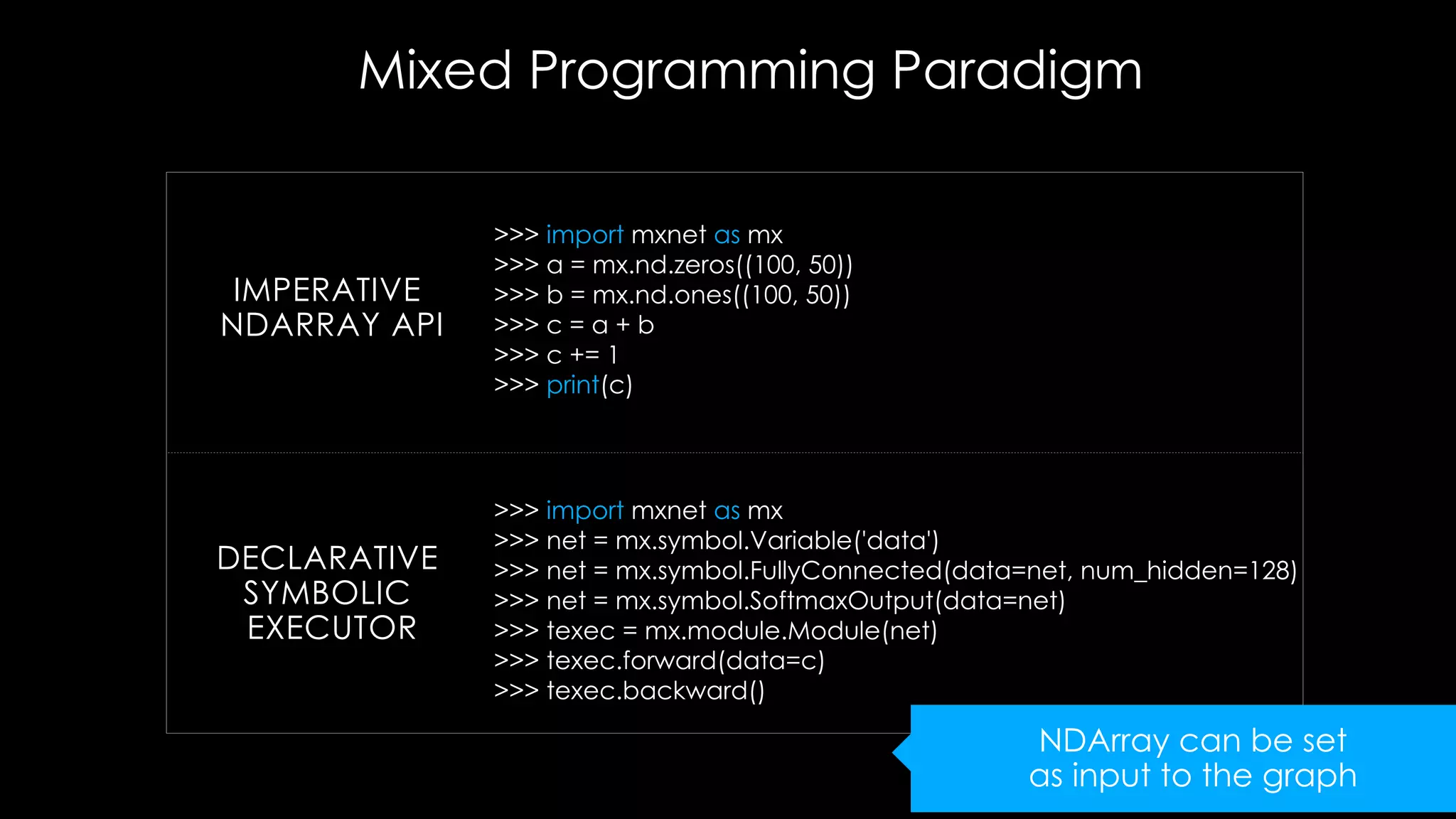

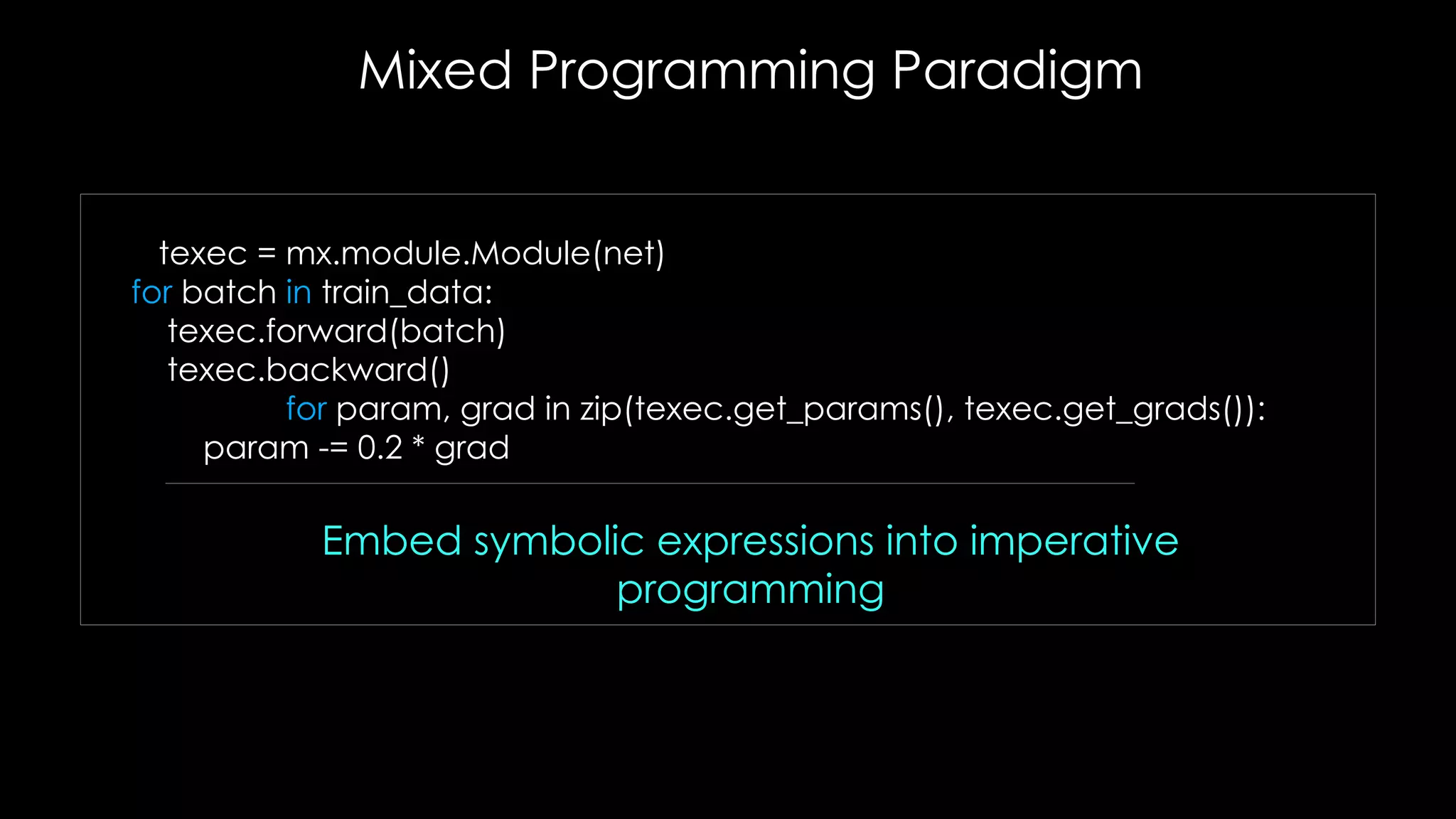

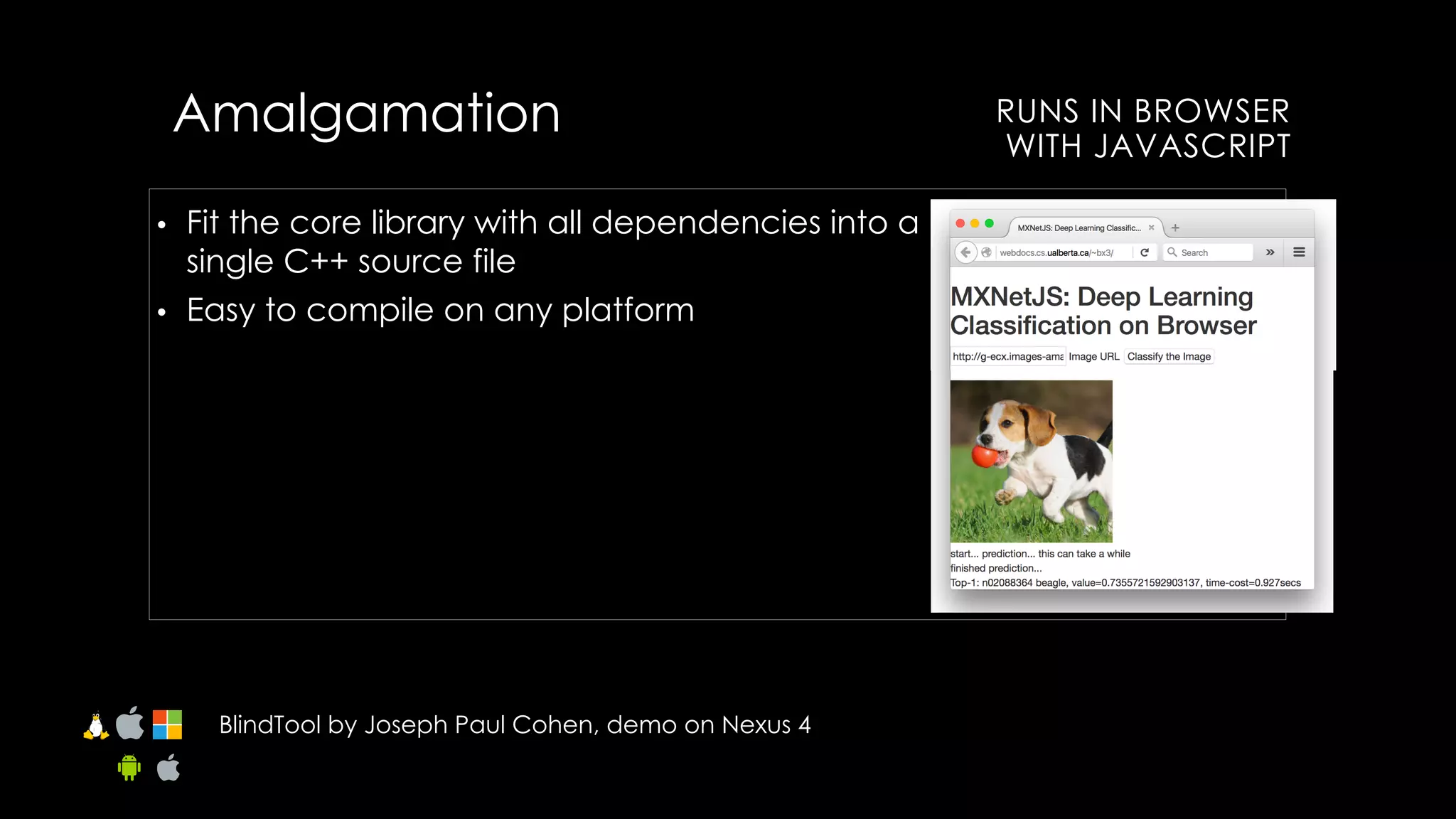

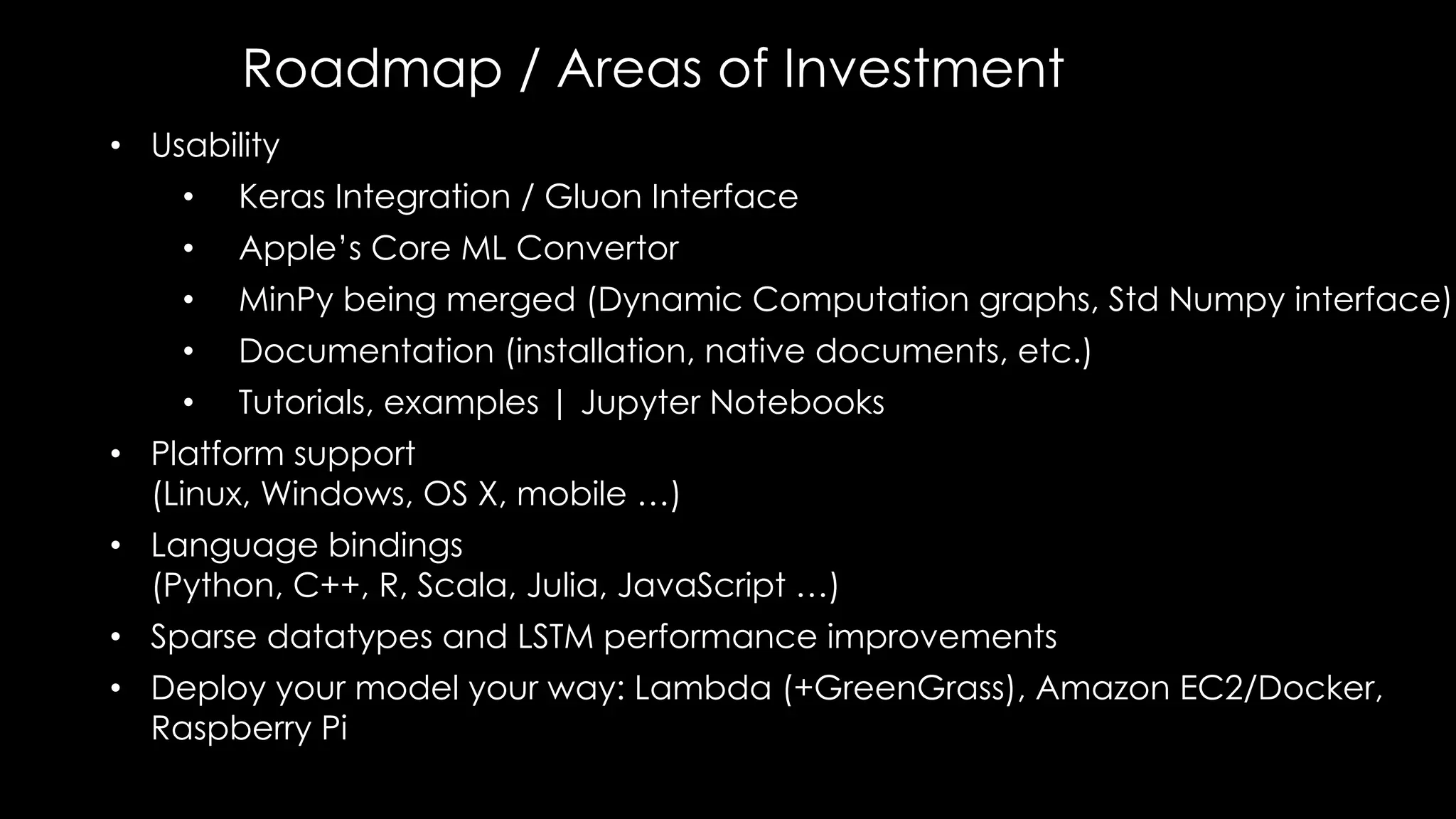

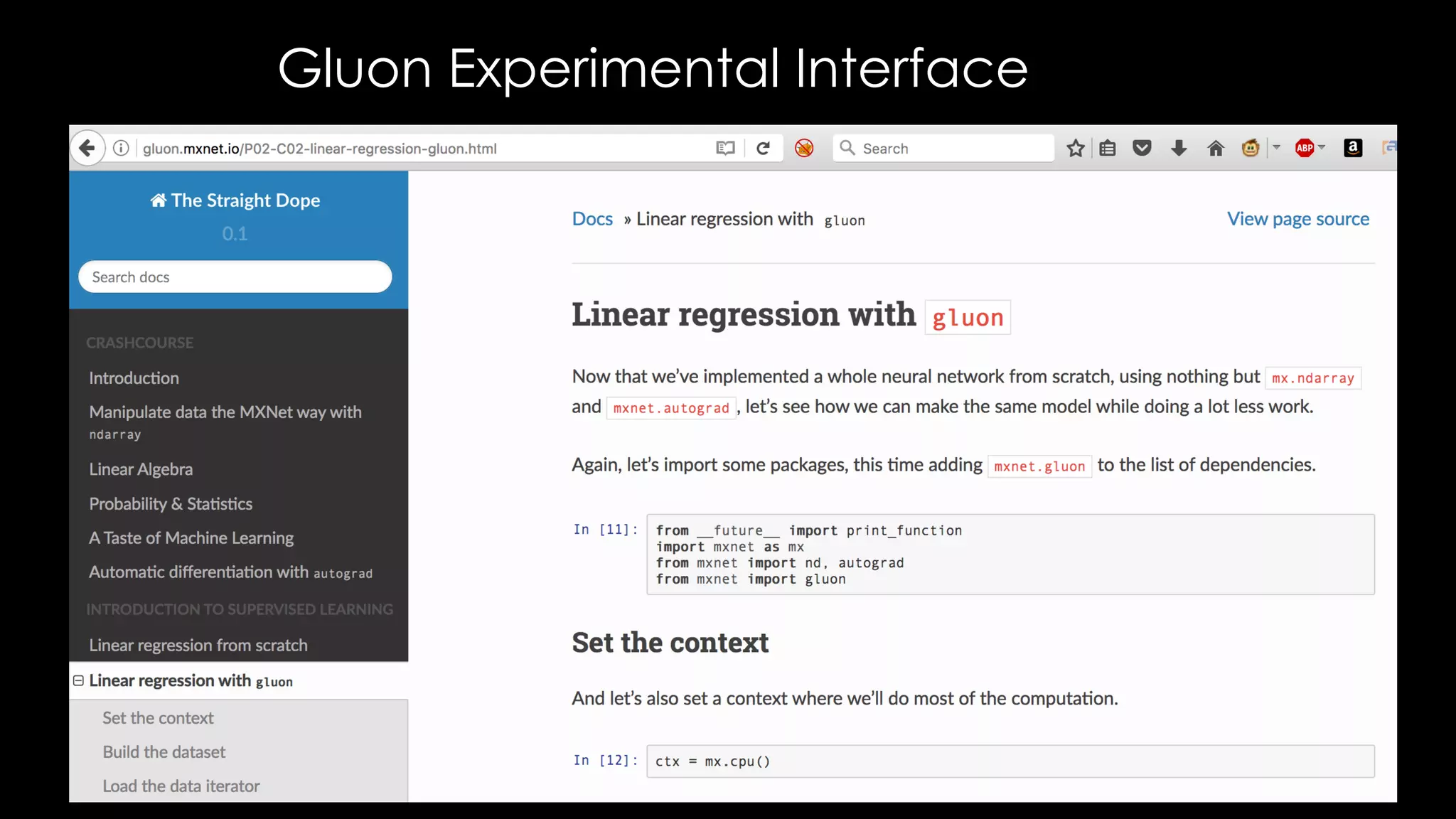

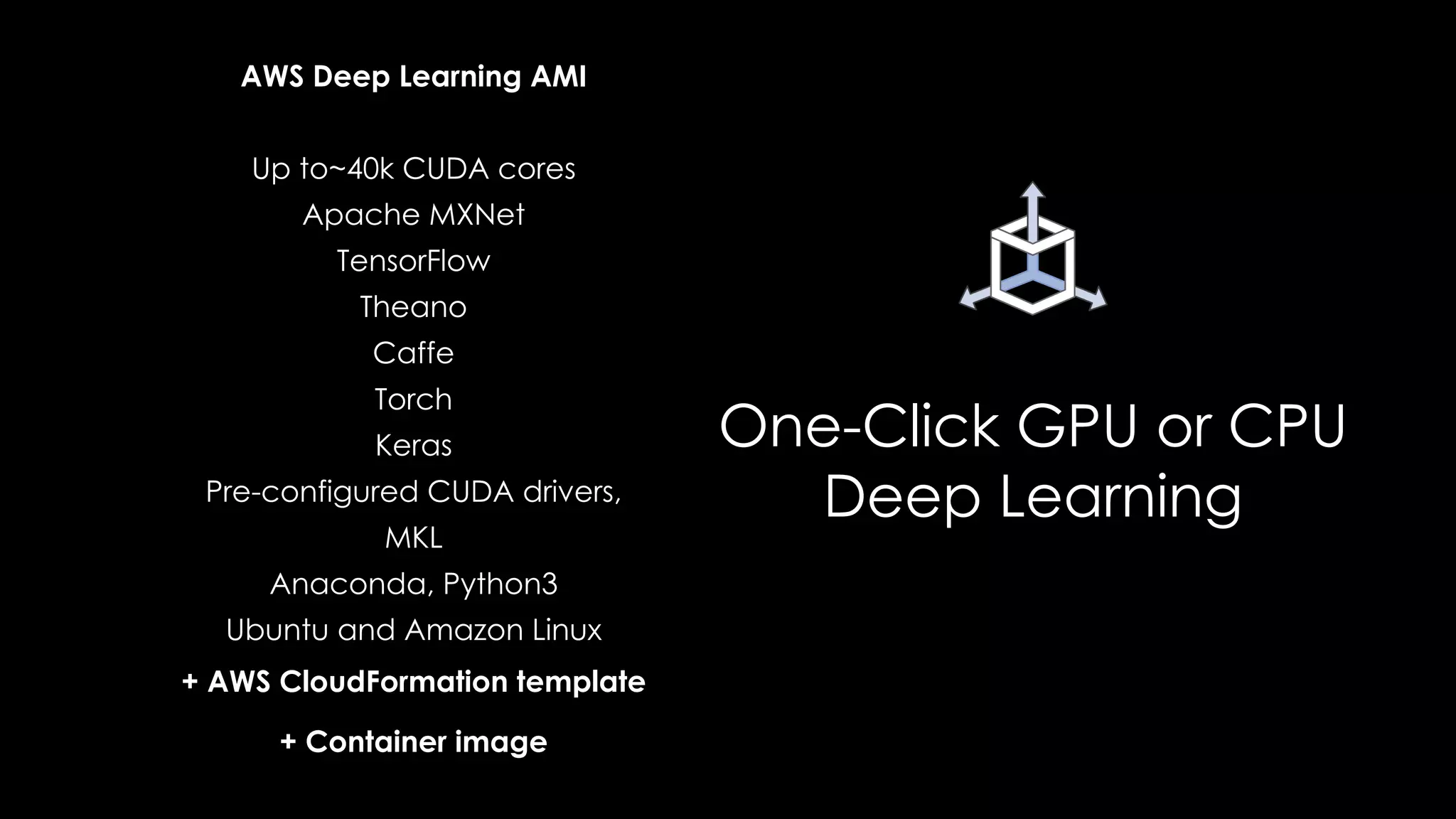

This document provides an overview of Apache MXNet and deep learning on AWS. It begins with an introduction to deep learning applications and trends, then discusses MXNet features such as scalability and language support, along with framework comparisons. It also covers how MXNet is used on AWS, including integration with AWS services and AI research. The document concludes with developer resources such as notebooks, documentation, and tools for building models with MXNet.