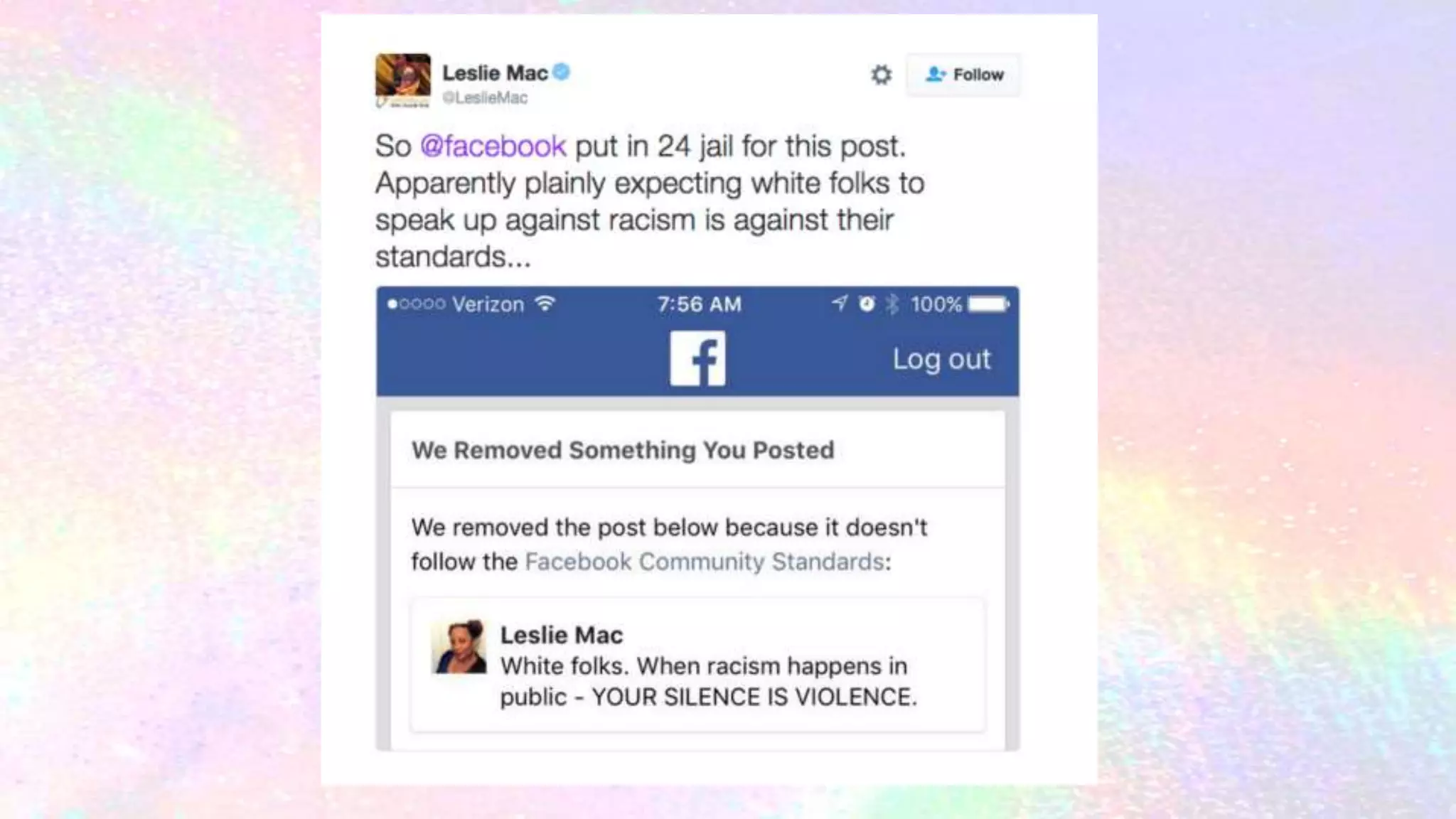

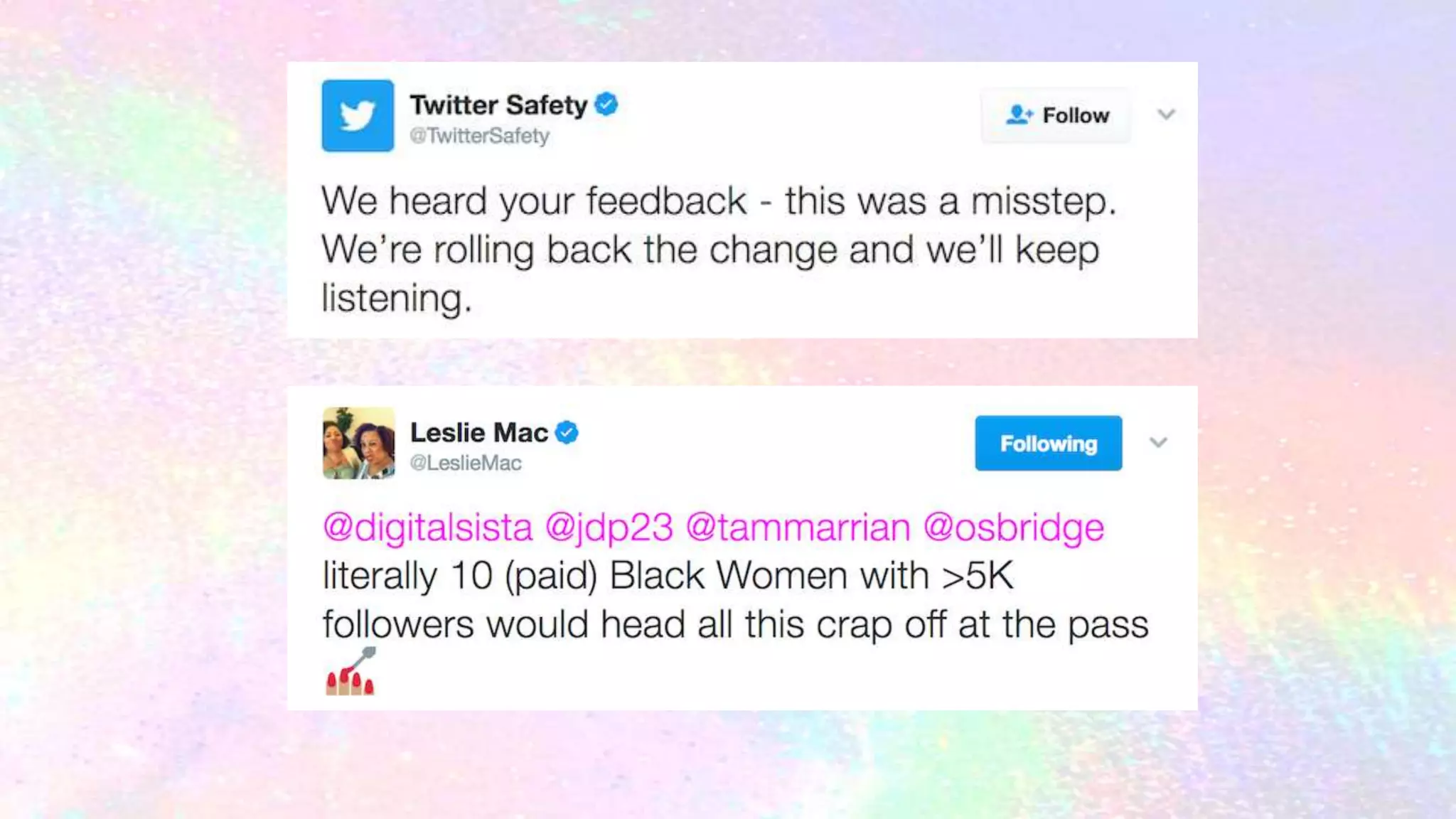

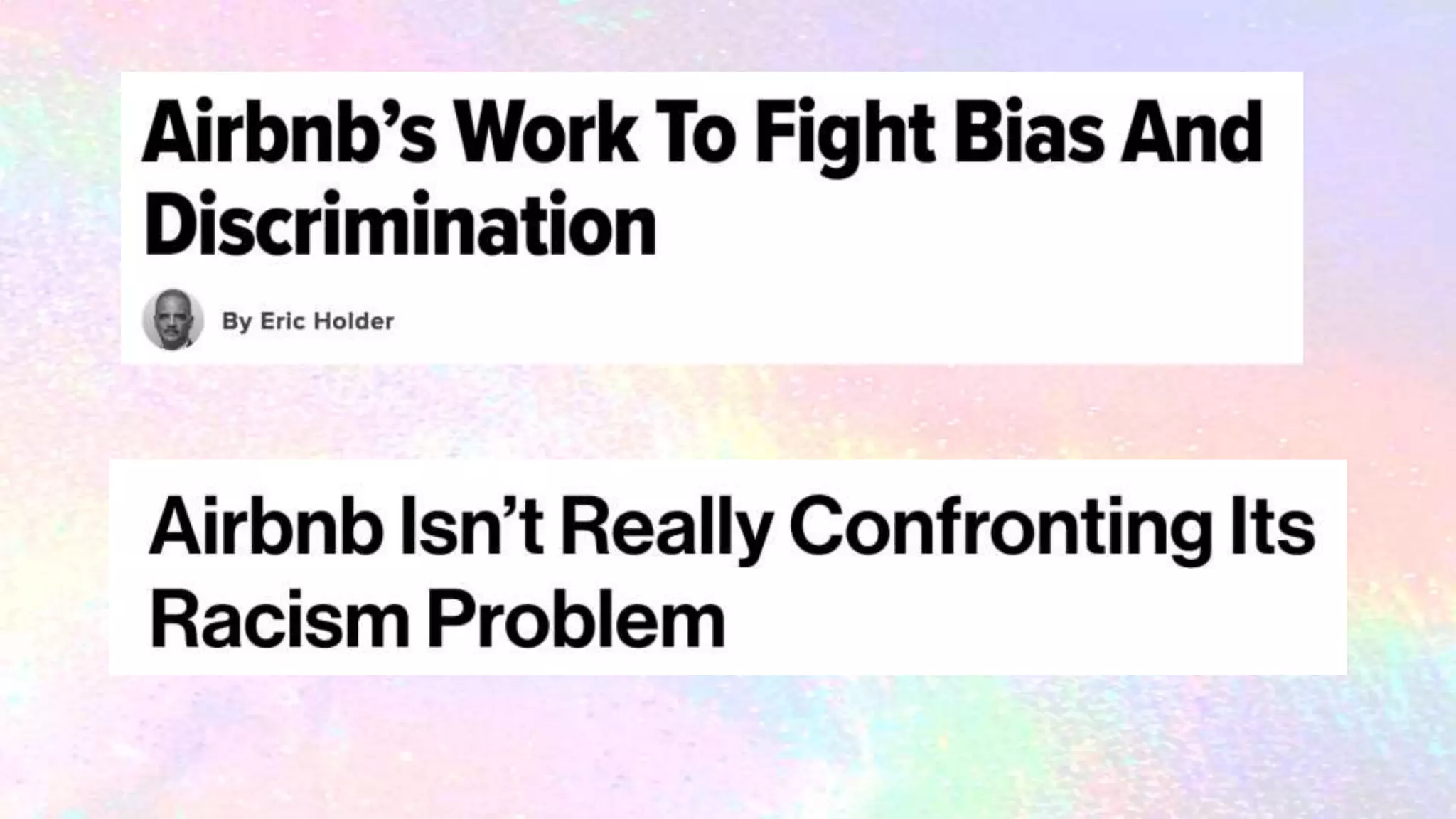

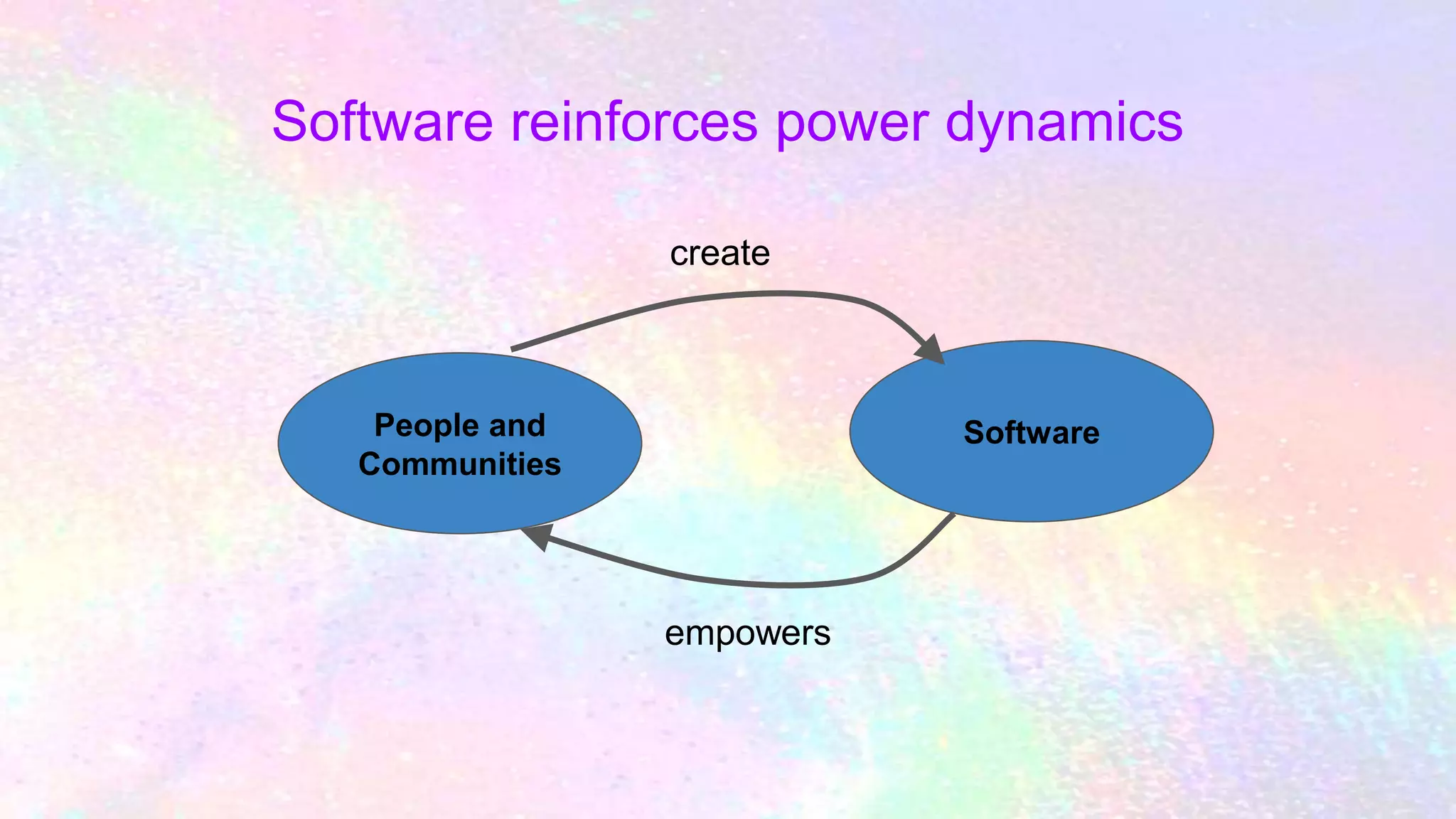

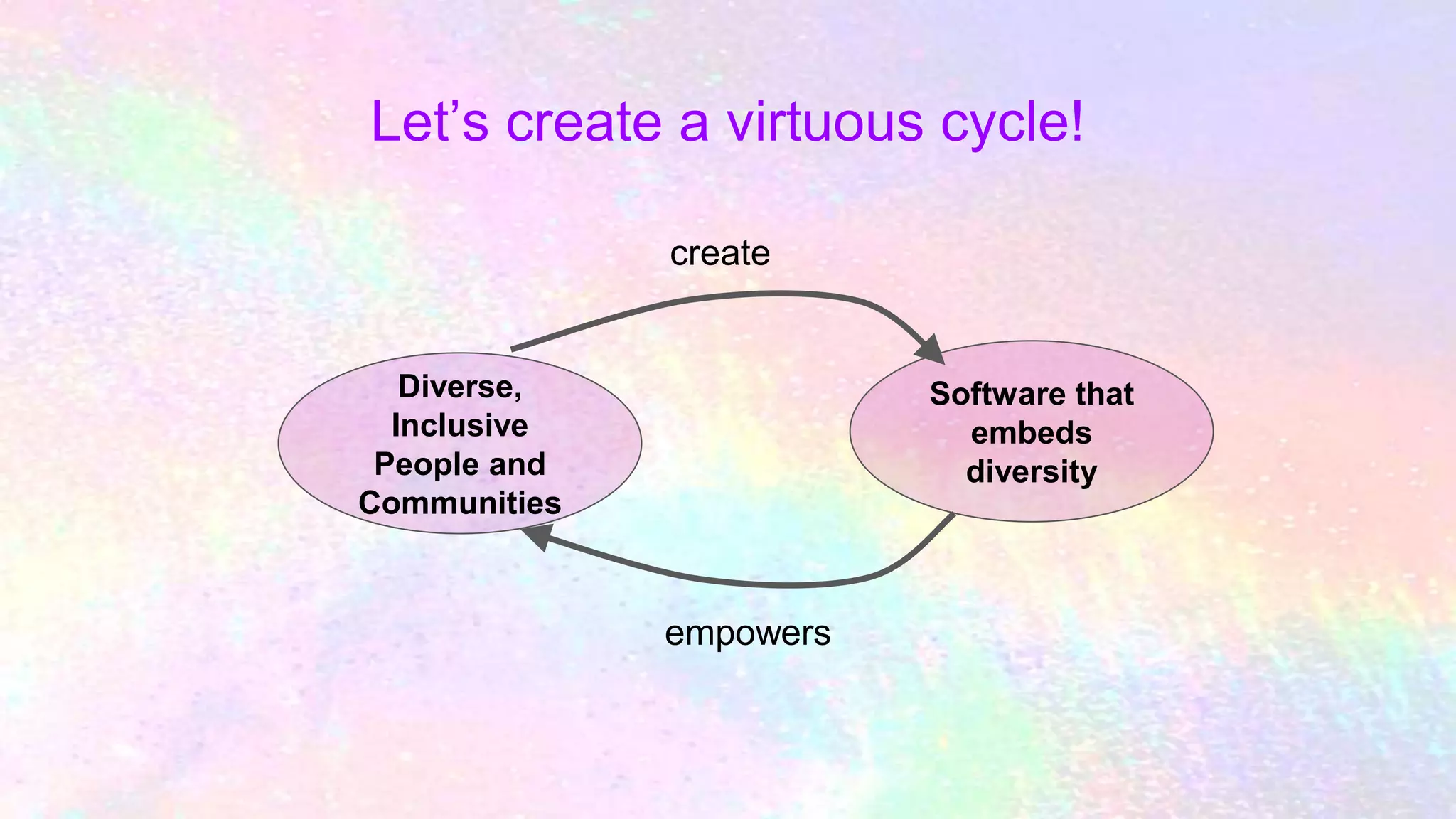

This document discusses best practices and emerging techniques for creating diversity-friendly software. It addresses three aspects of diversity: the people who create software, the processes they use, and support for diversity in the software itself. Best practices include diverse representation on teams, an inclusive culture, equitable policies, design for accessibility, and optional self-identification. The emerging techniques discussed are gender HCI, threat modeling for harassment, and avoiding algorithmic bias. The challenges covered are a lack of priority given to diversity and a lack of knowledge; the recommended responses are to prioritize diversity and to budget for training. The document advocates starting with the needs of highly marginalized groups and creating a virtuous cycle in which diverse, inclusive teams create empowering software.