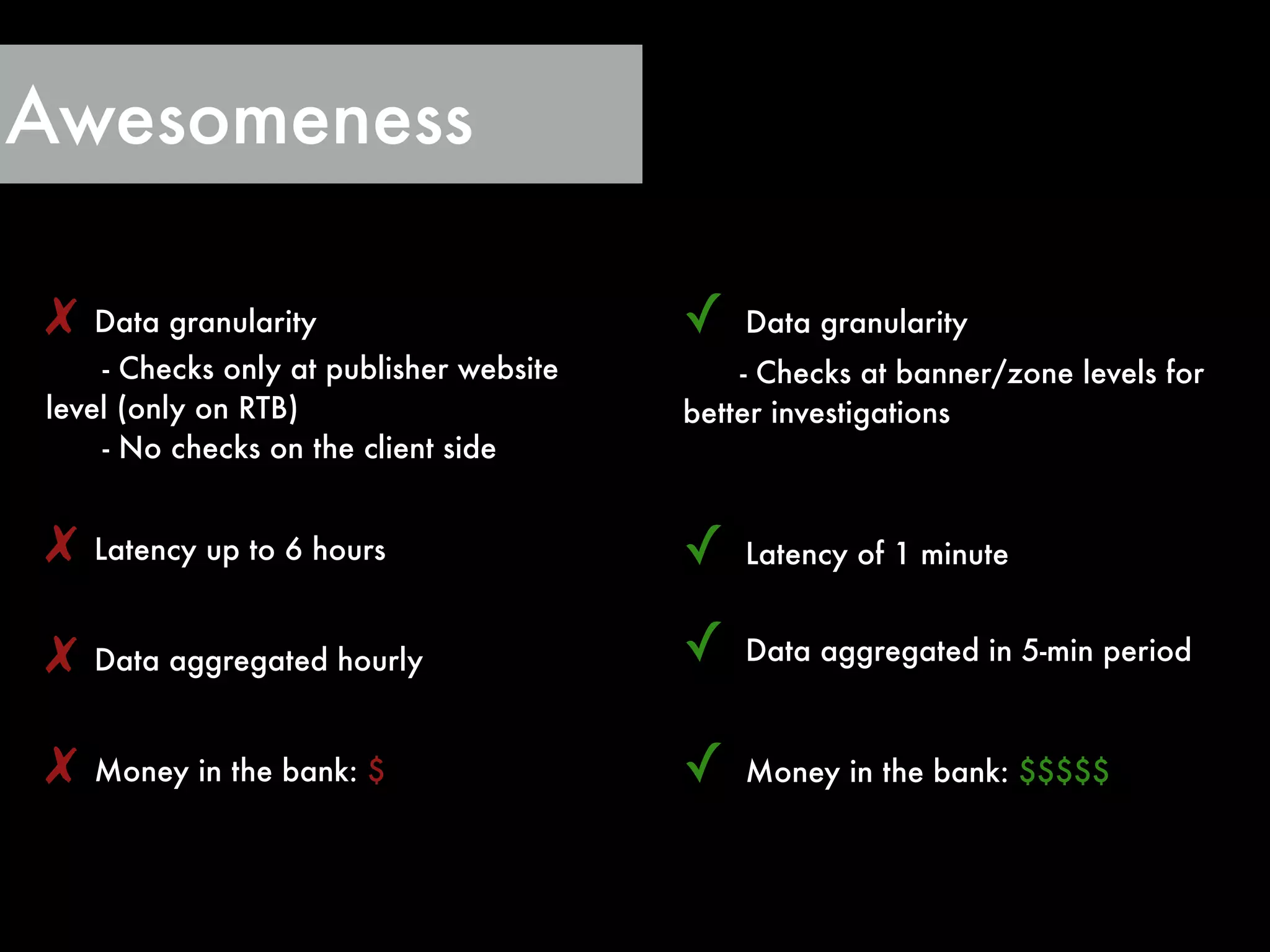

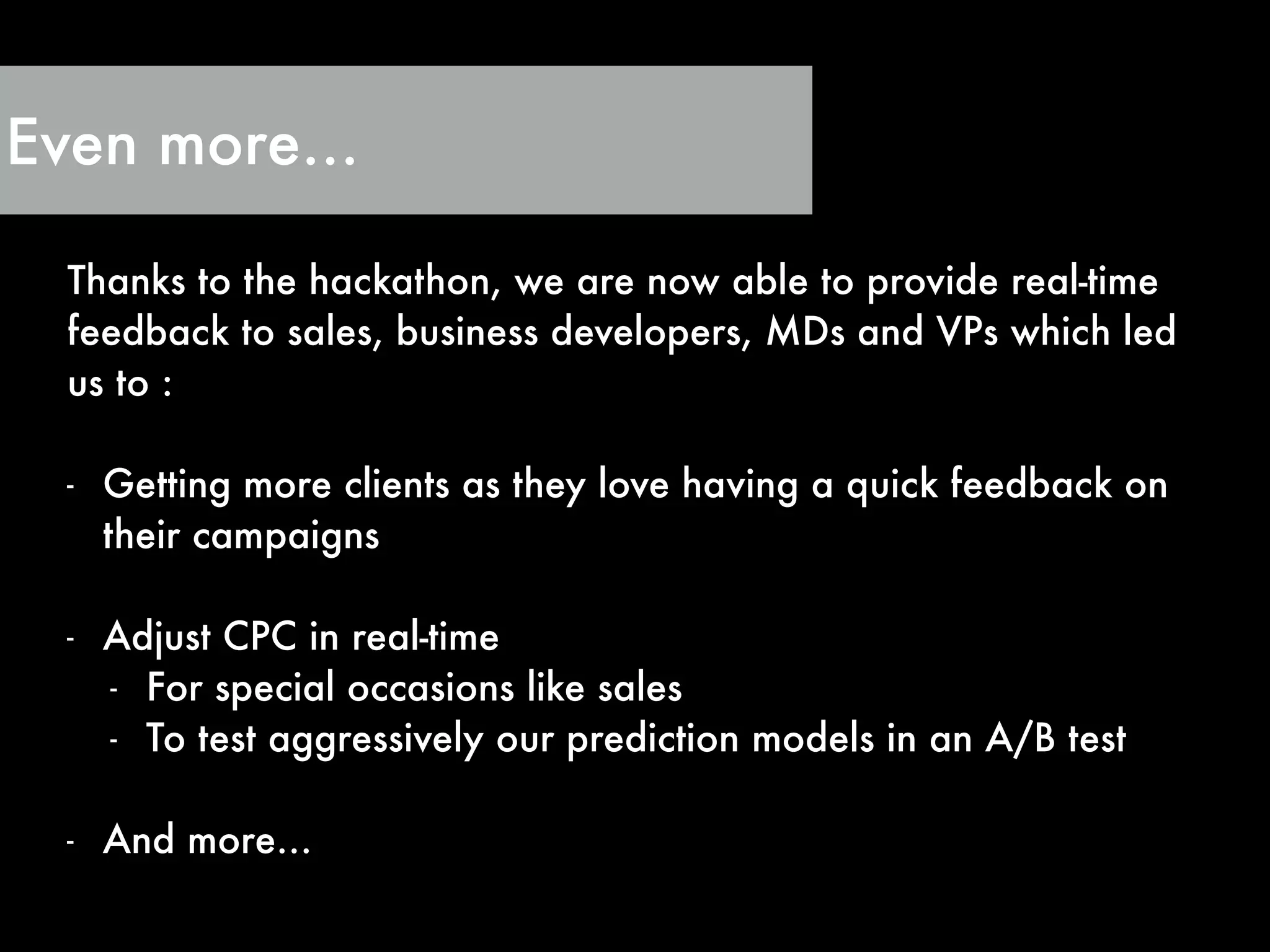

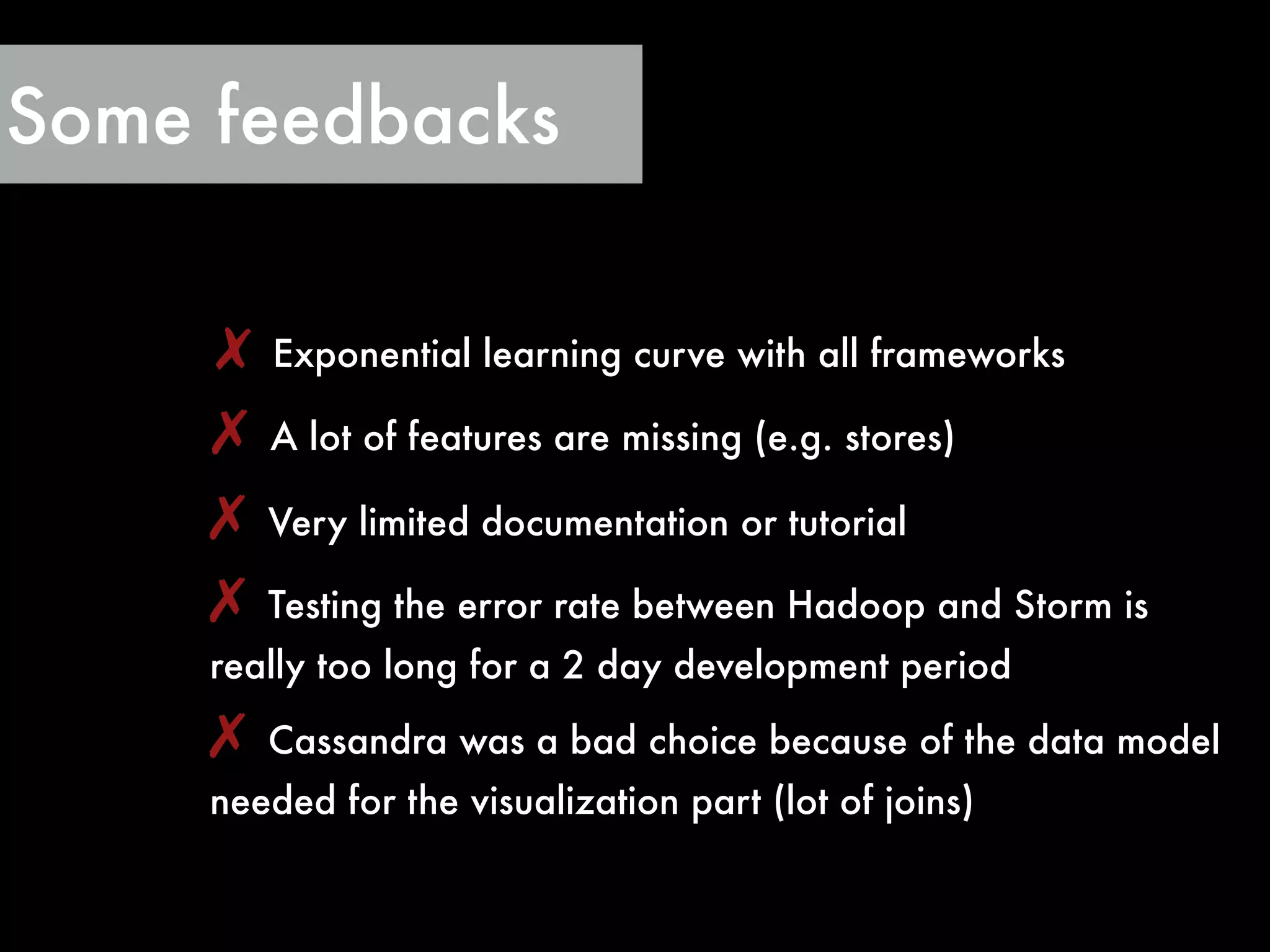

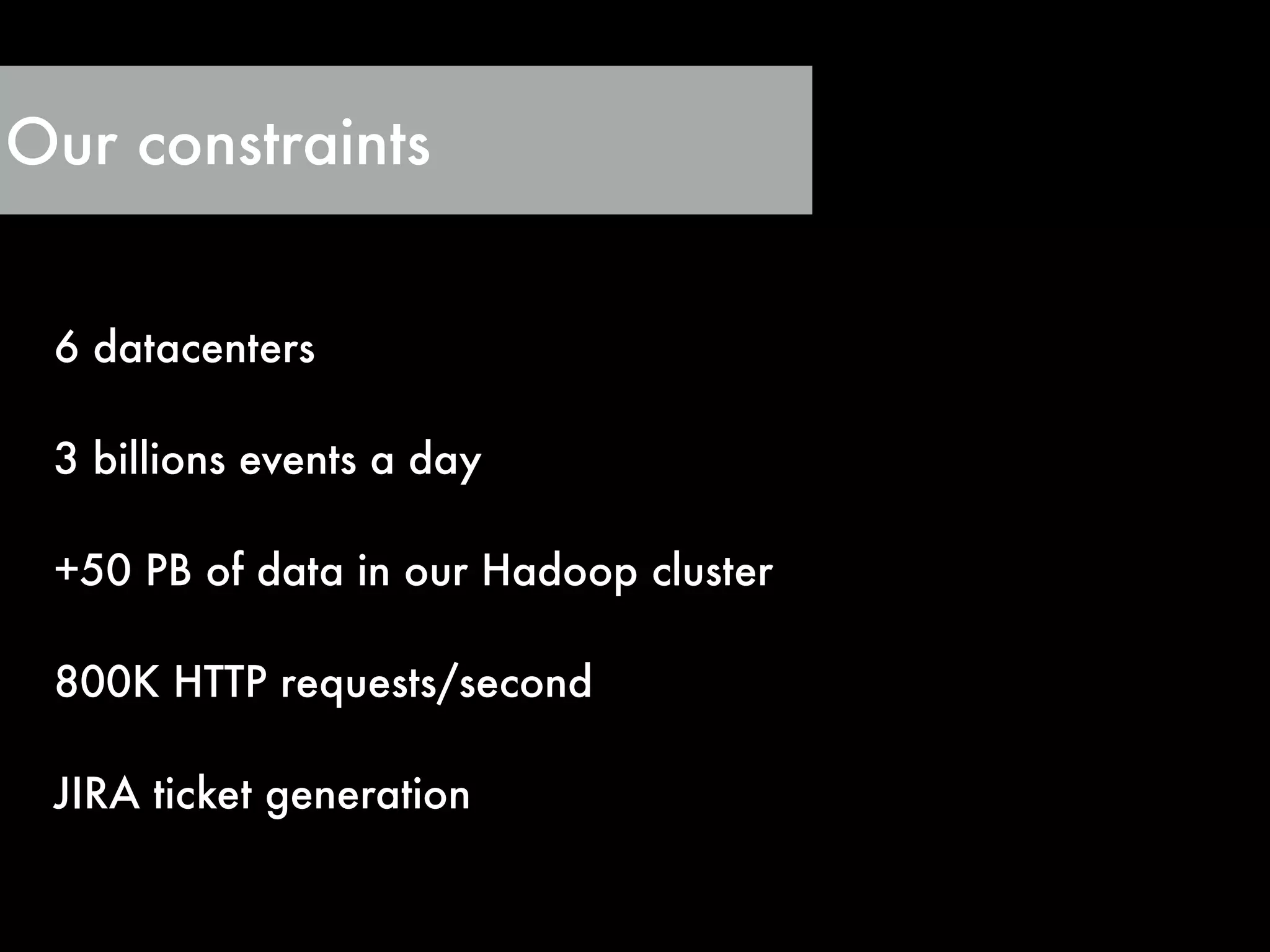

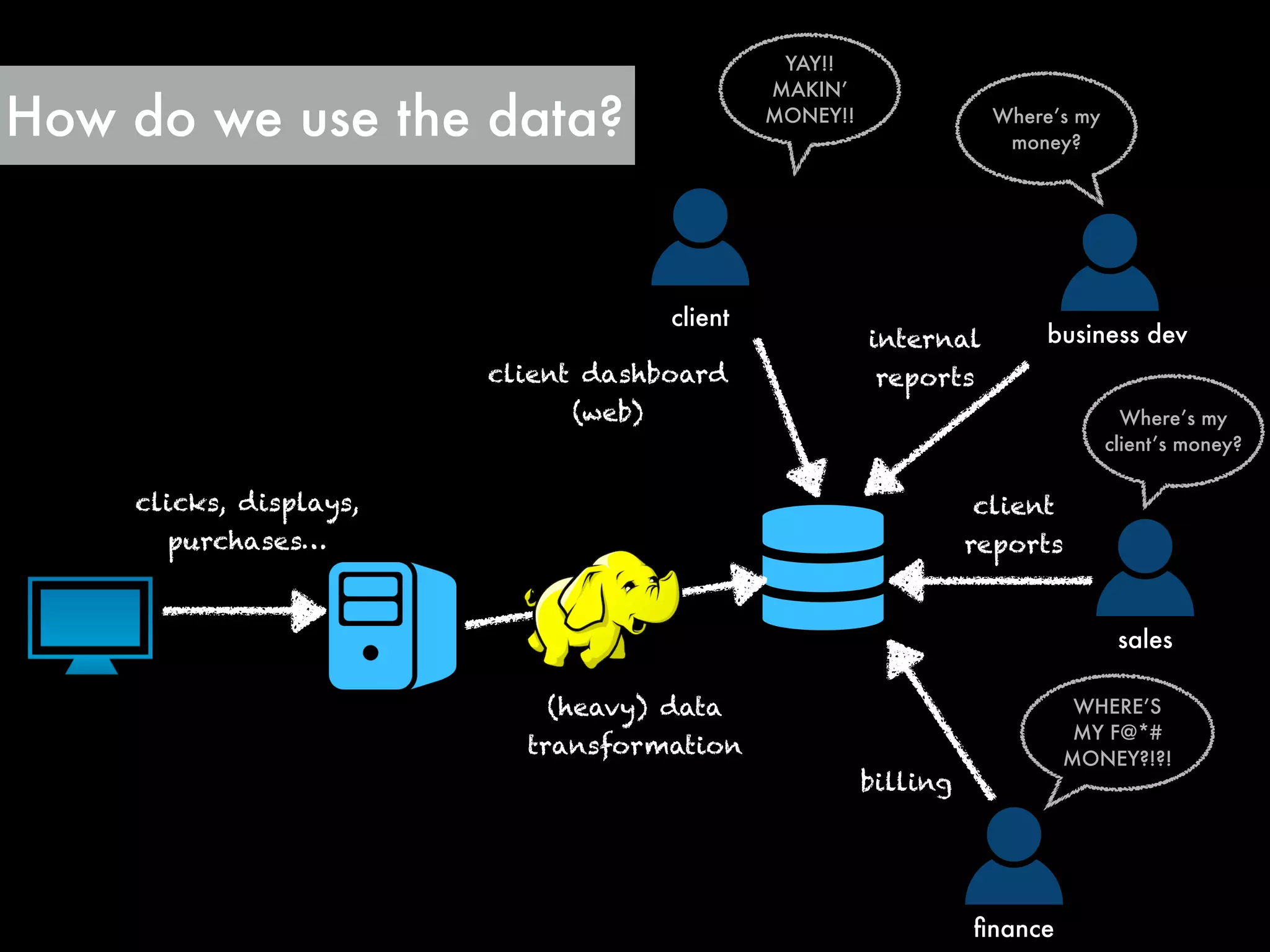

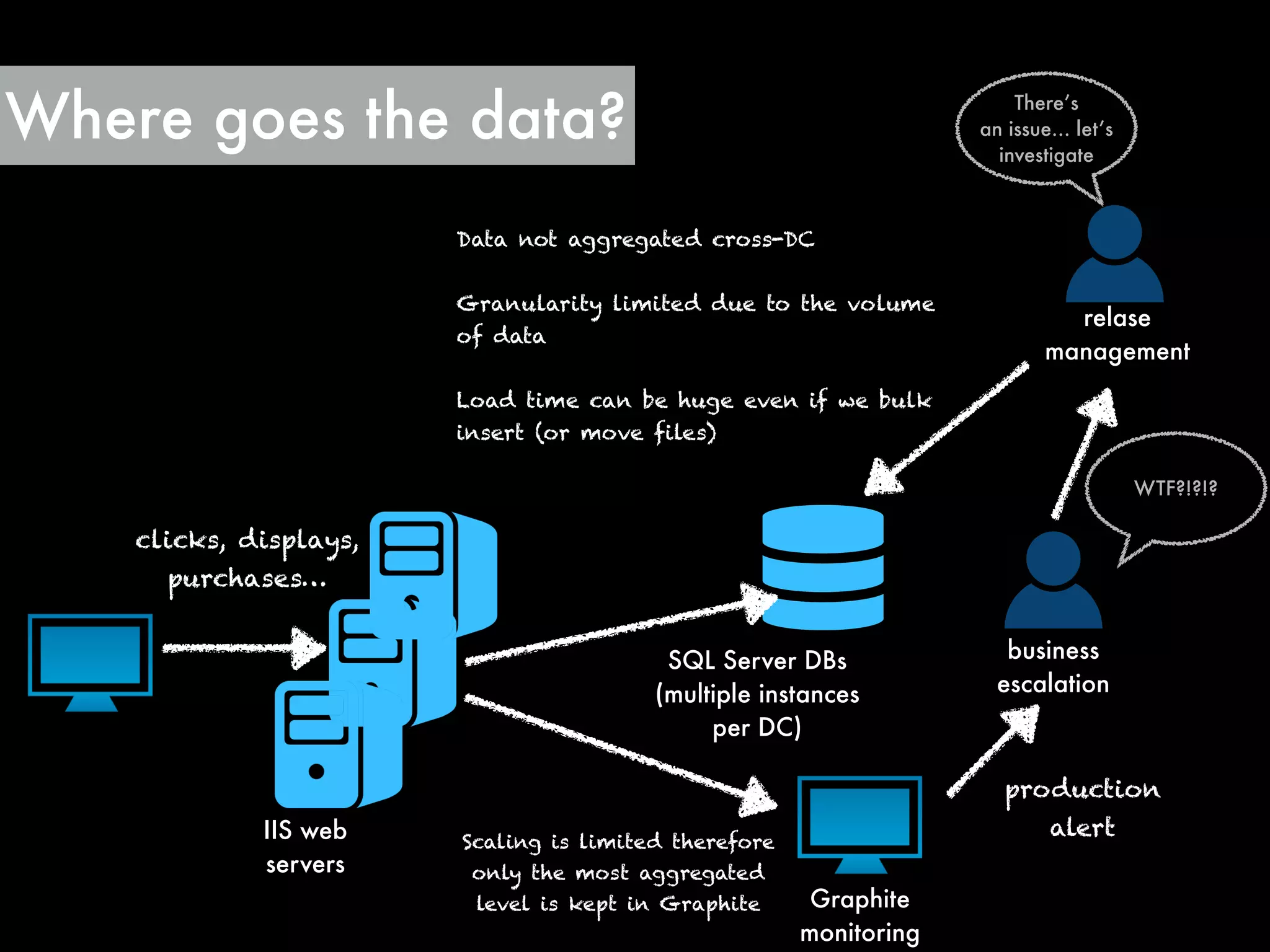

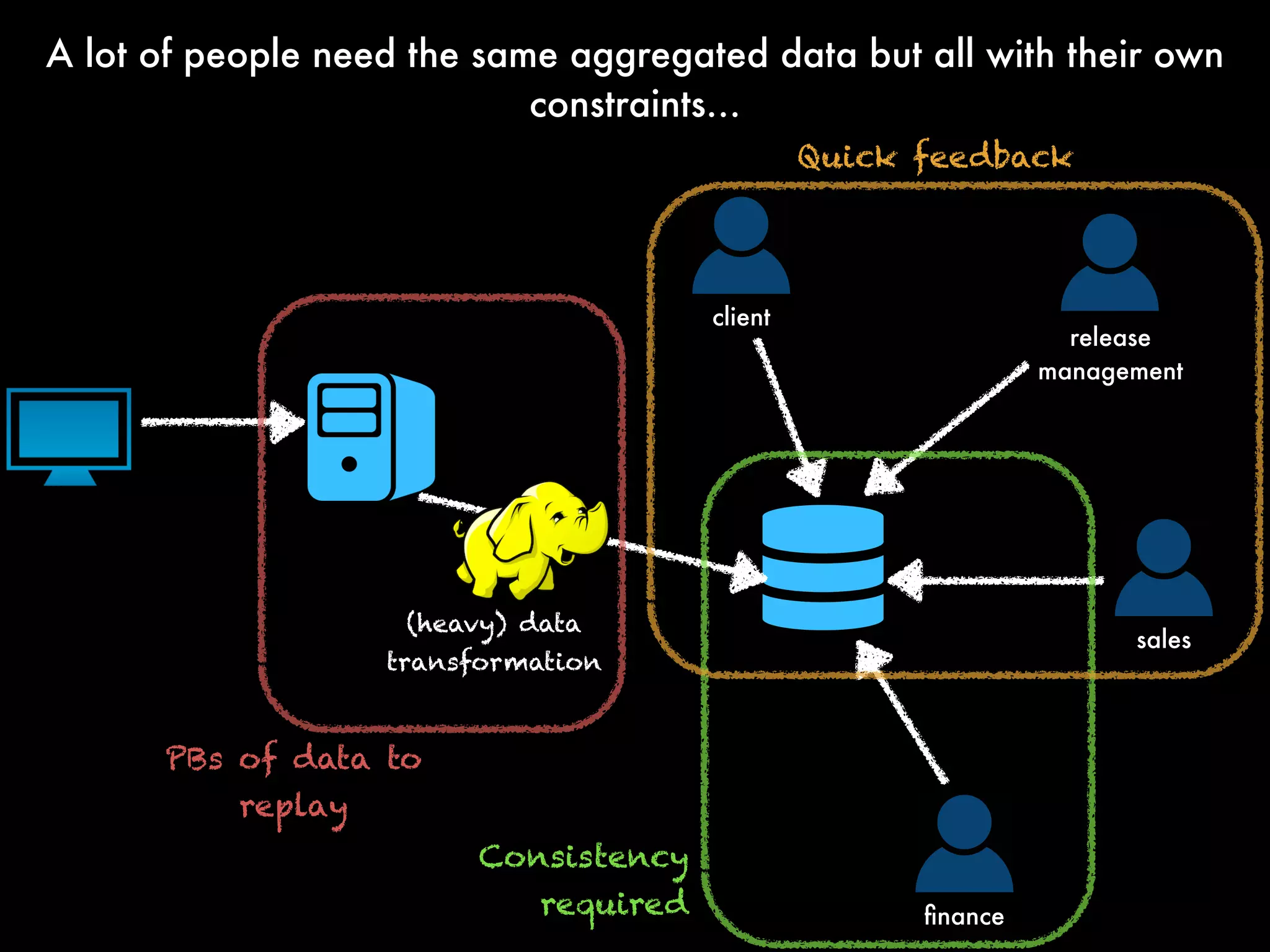

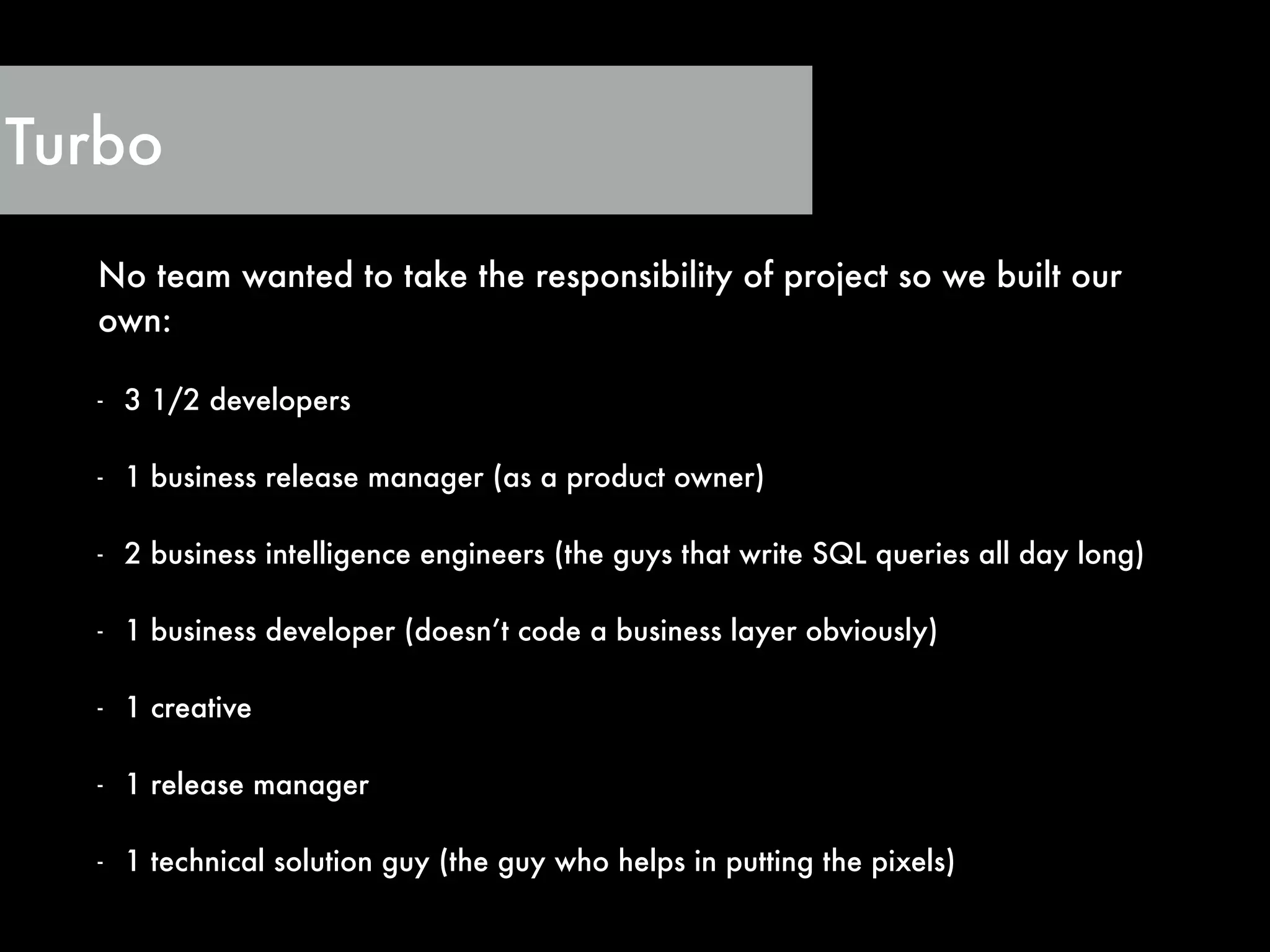

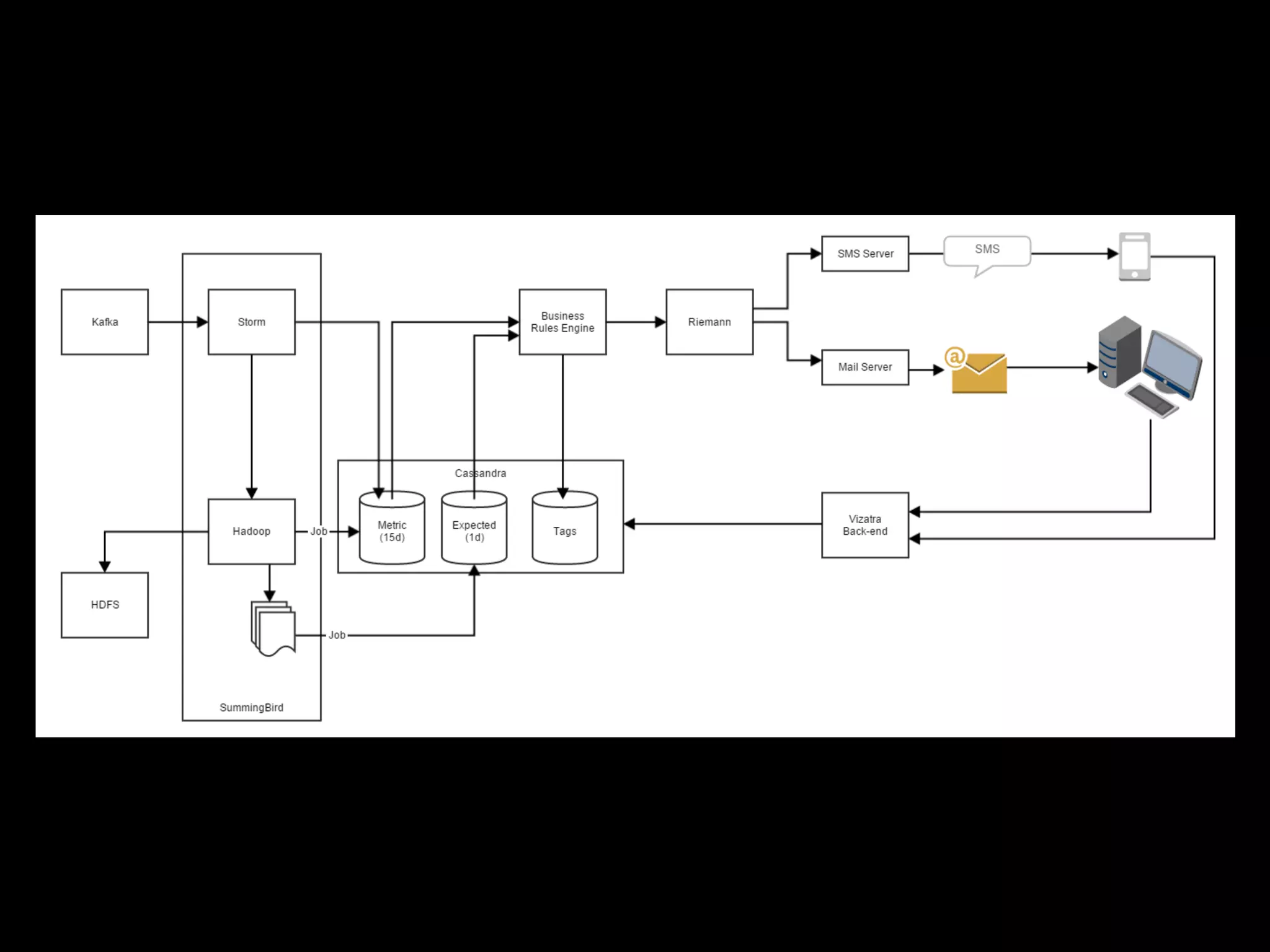

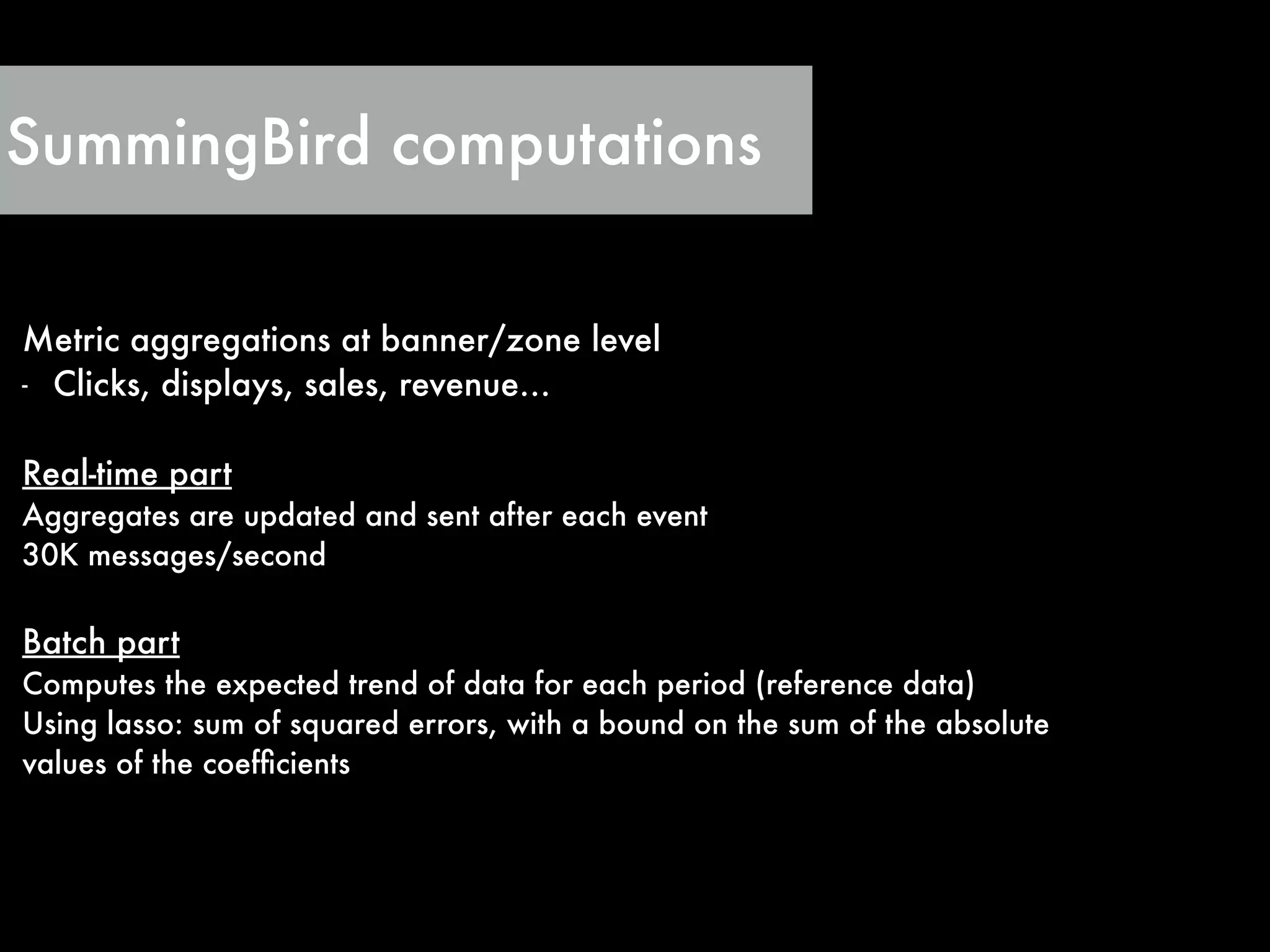

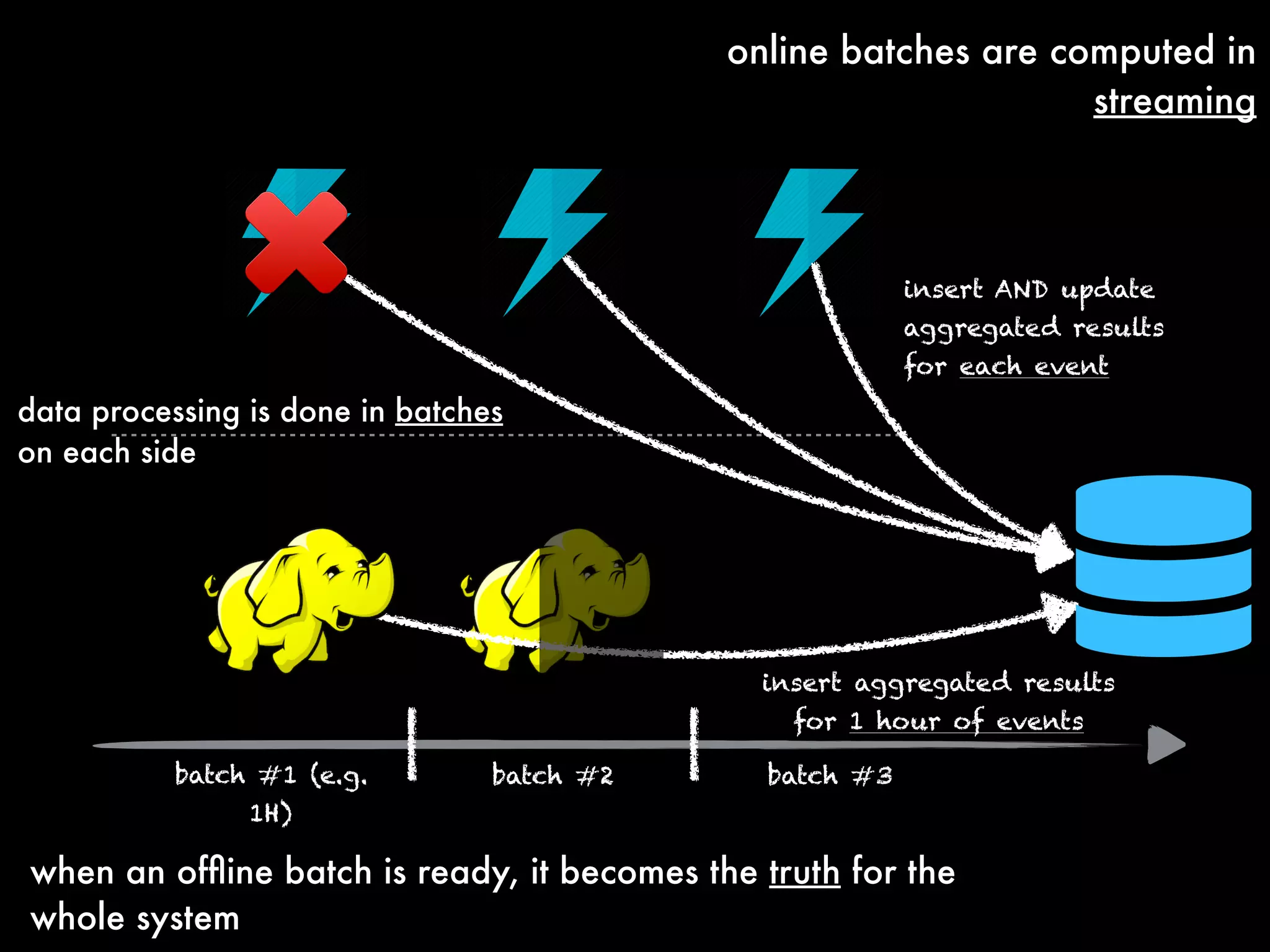

The presentation describes how a software engineering team cut the time needed to get results from their long Hadoop batch jobs from six hours to one minute, in a project completed in two days. It explains the hybrid stream-batch processing architecture they built with tools such as Summingbird, which provides near-real-time feedback and aggregation over large data sets while coping with data lag and infrastructure constraints. The project, which started at a hackathon, improved operational efficiency and client engagement by making analytics and reporting available sooner.
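The hybrid idea can be illustrated with a small sketch. A batch layer aggregates events up to a batch boundary, a streaming layer aggregates events after it, and a read merges the two partial results. Because the aggregation is an associative sum, the merged view equals a full recomputation over all events. This is a hypothetical model in plain Scala: `Event`, `totalsByKey`, `merge` and `hybridView` are illustrative names and the data is made up. It is not Criteo's or Summingbird's implementation.

```scala
// Hypothetical sketch of a hybrid (batch + streaming) aggregation.
// Names and data are illustrative; this is not the Summingbird API.

// An event carries a key, a timestamp in milliseconds and a count.
final case class Event(key: String, timeMs: Long, count: Long)

// Per-key sum: the same associative aggregation is used by both layers.
def totalsByKey(events: Seq[Event]): Map[String, Long] =
  events.groupBy(_.key).map { case (k, es) => k -> es.map(_.count).sum }

// Merge two partial aggregates by adding the values of matching keys.
def merge(a: Map[String, Long], b: Map[String, Long]): Map[String, Long] =
  b.foldLeft(a) { case (acc, (k, v)) => acc.updated(k, acc.getOrElse(k, 0L) + v) }

// Batch layer: events before the boundary (e.g. covered by the last batch run).
// Streaming layer: events from the boundary onward (updated continuously).
def hybridView(events: Seq[Event], batchBoundaryMs: Long): Map[String, Long] = {
  val (batchPart, streamPart) = events.partition(_.timeMs < batchBoundaryMs)
  merge(totalsByKey(batchPart), totalsByKey(streamPart))
}

val events = Seq(
  Event("campaignA", 1000L, 3L),
  Event("campaignB", 2000L, 1L),
  Event("campaignA", 5000L, 2L),
  Event("campaignC", 6000L, 4L)
)

val view = hybridView(events, batchBoundaryMs = 4000L)
// view contains campaignA -> 5, campaignB -> 1, campaignC -> 4
```

The design choice that makes this work is associativity. Summingbird relies on the same property, because values aggregated with its `sumByKey` must form a semigroup. That is what allows a batch result and an online result for the same key to be combined when they are read.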

![Summingbird job model: a job defined as def job = source.map { /* your job here */ }.store() is executed on a Platform[T]; the platform's P#Source redirects input to the job, and the job sends its results to the P#Store](https://image.slidesharecdn.com/bigdataberlin-sofian-150504112334-conversion-gate01/75/From-6-hours-to-1-minute-in-2-days-How-we-managed-to-stream-our-long-Hadoop-batches_-Sofian-Dhamaa_Criteo-23-2048.jpg)
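The diagram shows Summingbird's central abstraction. A job is written once against a generic platform `P`, which supplies the `P#Source` that feeds the job and the `P#Store` that receives its results. The same job can therefore target different platforms; Summingbird supports both batch (Scalding on Hadoop) and streaming (Storm). The sketch below models that idea in plain Scala under stated assumptions. `Platform`, `InMemoryPlatform` and `wordCountJob` are hypothetical names, not the Summingbird API. The platform's source and store types are also higher-kinded type parameters rather than the slide's `P#Source`/`P#Store` projections, because Scala 3 no longer supports type projections on abstract types.

```scala
import scala.collection.mutable

// Hypothetical sketch, not the Summingbird API: a platform knows how to read
// a Src[T] and how to write into a Sto[K, V]; the job logic stays portable.
trait Platform[Src[_], Sto[_, _]] {
  def execute[T, K](source: Src[T], job: T => Seq[(K, Long)], store: Sto[K, Long]): Unit
}

// In-memory platform: the source is a Seq and the store is a mutable Map.
// Values emitted for the same key are summed into the store.
object InMemoryPlatform extends Platform[Seq, mutable.Map] {
  def execute[T, K](source: Seq[T], job: T => Seq[(K, Long)], store: mutable.Map[K, Long]): Unit =
    for (item <- source; (k, v) <- job(item))
      store.update(k, store.getOrElse(k, 0L) + v)
}

// The job is written once, generically over the platform's Src/Sto types,
// so it does not depend on where it runs.
def wordCountJob[Src[_], Sto[_, _]](platform: Platform[Src, Sto])(
    source: Src[String], store: Sto[String, Long]): Unit =
  platform.execute[String, String](
    source,
    line => line.split("\\s+").toSeq.filter(_.nonEmpty).map(word => word -> 1L),
    store)

// Usage: run the word count on the in-memory platform.
val counts = mutable.Map.empty[String, Long]
wordCountJob[Seq, mutable.Map](InMemoryPlatform)(Seq("to be or", "not to be"), counts)
```

You could swap `InMemoryPlatform` for another hypothetical `Platform` implementation, for instance one backed by a batch engine or one by a stream processor. `wordCountJob` itself would stay untouched, which is the property the diagram illustrates.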