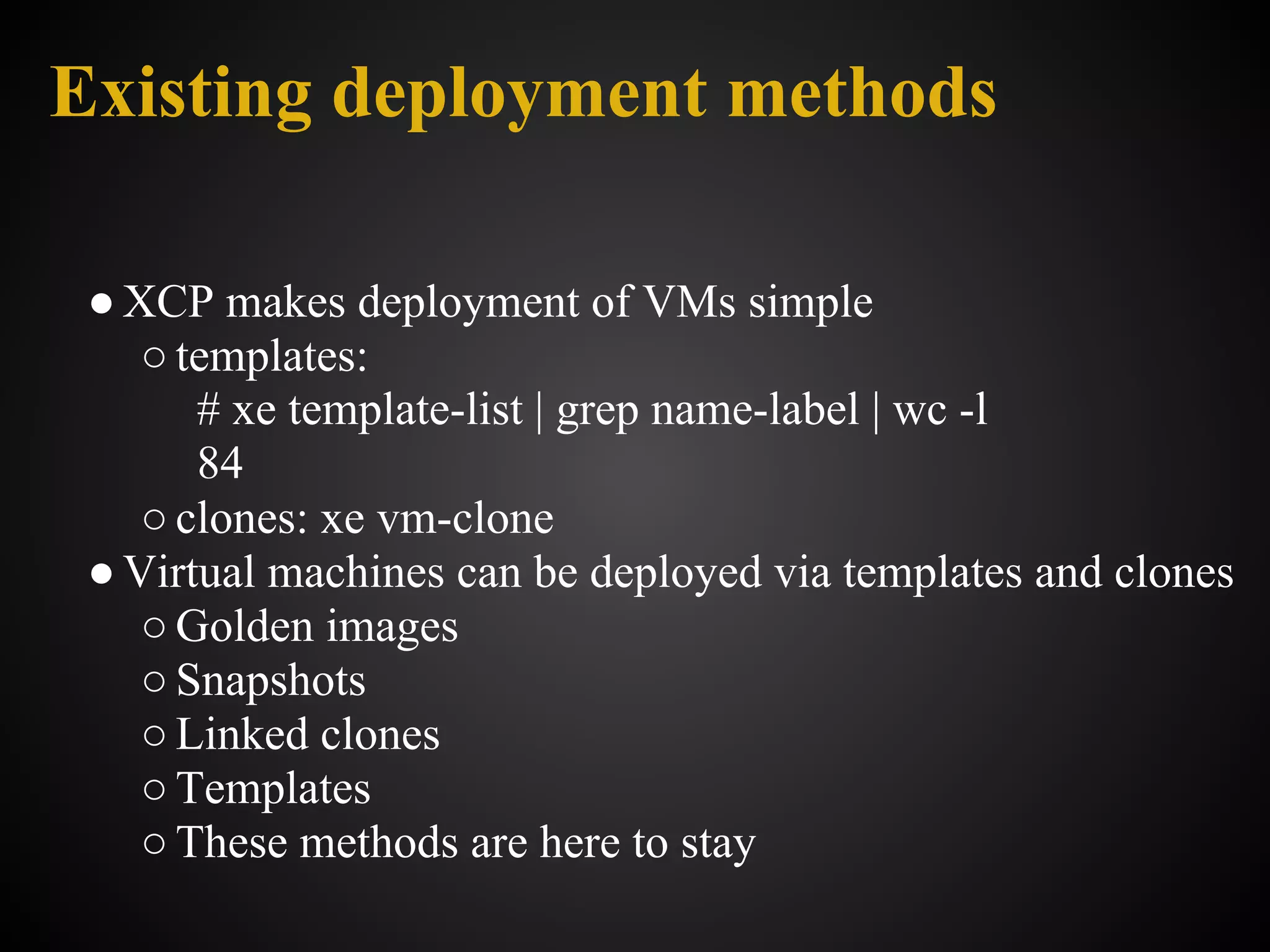

Here are some common existing deployment methods for virtual machines:

- Manual installation from ISO - Booting a virtual machine from an installation ISO and manually installing an operating system through the graphical user interface. Good for one-off deployments but not scalable.

- Scripted installation - Using scripts to automate the installation process. Better than manual but still requires customizing for each new virtual machine.

- Templates - Creating a "golden image" template virtual machine with a pre-installed and configured operating system. New virtual machines can be quickly deployed by cloning the template. Allows consistent deployments but still requires customizing each template.

- Configuration management - Using configuration management tools like Puppet, Chef, or Ansible to declare the desired configuration of each virtual machine.
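The template approach maps directly onto XenAPI. The sketch below assumes an authenticated `session.xenapi` proxy (like the ones created in the slides that follow) and a template whose name label is known. `VM.get_by_name_label`, `VM.clone`, `VM.provision`, `VM.set_is_a_template` and `VM.start` are XenAPI calls; the function name `deploy_from_template` and the labels are hypothetical. The `provision` step follows the pattern of the XenAPI SDK's install example and matters for templates that describe their disks in `other_config`; whether your templates need it is an assumption.

```python
def deploy_from_template(xenapi, template_label, new_name):
    """Clone a template VM and boot the copy (hypothetical helper).

    `xenapi` is assumed to be an authenticated `session.xenapi` proxy.
    """
    # Name labels are not unique, so refuse to guess on zero or several matches.
    matches = xenapi.VM.get_by_name_label(template_label)
    if len(matches) != 1:
        raise ValueError("expected one VM named %r, found %d"
                         % (template_label, len(matches)))
    template = matches[0]

    # Clone the template; the copy starts out as a template too.
    vm = xenapi.VM.clone(template, new_name)

    # Create any disks described by the template, then mark the copy as a
    # normal VM so it can be started.
    xenapi.VM.provision(vm)
    xenapi.VM.set_is_a_template(vm, False)

    # start_paused=False, force=False
    xenapi.VM.start(vm, False, False)
    return vm
```

Per-clone customisation (hostname, addresses) still has to happen after this step, which is the limitation the list above points out.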

![What it is
import xmlrpclib
x=xmlrpclib.Server("https://localhost")
sessid=x.session.login_with_password("root","pass")['Value']
# go forth, that's all you needed to begin
allvms=x.VM.get_all_records(sessid)['Value']](https://image.slidesharecdn.com/oscon2012slideset-120720200537-phpapp01/75/Oscon-2012-From-Datacenter-to-the-Cloud-Featuring-Xen-and-XCP-102-2048.jpg)
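The `['Value']` indexing works because XenAPI's XML-RPC interface wraps every result in a struct with a `Status` field plus either `Value` (on success) or `ErrorDescription` (on failure). A minimal Python 3 sketch of the same calls, where `xmlrpclib` has become `xmlrpc.client`; the helper `unwrap`, the exception class `XenAPIError` and the function `list_vm_names` are hypothetical names, and the URL and credentials are placeholders.

```python
import xmlrpc.client


class XenAPIError(Exception):
    """Raised when a call returns Status 'Failure' (hypothetical class)."""


def unwrap(result):
    """Return result['Value'] on success; raise with ErrorDescription otherwise."""
    if result.get("Status") == "Success":
        return result["Value"]
    raise XenAPIError(result.get("ErrorDescription"))


def list_vm_names(url, user, password):
    # A host with a self-signed certificate may need an ssl context passed
    # to ServerProxy via its `context` argument.
    server = xmlrpc.client.ServerProxy(url)
    session = unwrap(server.session.login_with_password(user, password))
    try:
        records = unwrap(server.VM.get_all_records(session))
        # The result maps OpaqueRefs to records; templates and the control
        # domain are included.
        return sorted(r["name_label"] for r in records.values())
    finally:
        server.session.logout(session)
```

Checking `Status` turns a failed login into an exception carrying the XenAPI error code instead of a `KeyError` on `'Value'`.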

![API Architecture: Target the right destination
import XenAPI
host="x"
username="y"
password="p"
try:
    session=XenAPI.Session('https://'+host)
    session.login_with_password(username, password)
except XenAPI.Failure as e:
    if e.details[0]=='HOST_IS_SLAVE':
        session=XenAPI.Session('https://'+e.details[1])
        session.login_with_password(username, password)
    else:
        raise
s=session.xenapi](https://image.slidesharecdn.com/oscon2012slideset-120720200537-phpapp01/75/Oscon-2012-From-Datacenter-to-the-Cloud-Featuring-Xen-and-XCP-120-2048.jpg)
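The retry on the slide generalises to a small helper: log in to any pool member and, if it answers `HOST_IS_SLAVE`, reconnect to the master address carried in the error details. In this sketch the session and failure classes are passed in (with the real bindings they would be `XenAPI.Session` and `XenAPI.Failure`) so the logic can be exercised without a live pool; the function name `login_to_master` is hypothetical.

```python
def login_to_master(session_cls, failure_cls, host, username, password):
    """Log in to `host`, following one HOST_IS_SLAVE redirect to the master.

    session_cls / failure_cls are expected to behave like XenAPI.Session and
    XenAPI.Failure (the failure exposes a `details` list).
    """
    session = session_cls("https://" + host)
    try:
        session.login_with_password(username, password)
    except failure_cls as e:
        # Any other failure (bad password, host down) is not ours to handle.
        if not e.details or e.details[0] != "HOST_IS_SLAVE":
            raise
        # details[1] holds the address of the pool master.
        session = session_cls("https://" + e.details[1])
        session.login_with_password(username, password)
    return session
```

Following only one redirect keeps a misconfigured pool from looping forever.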

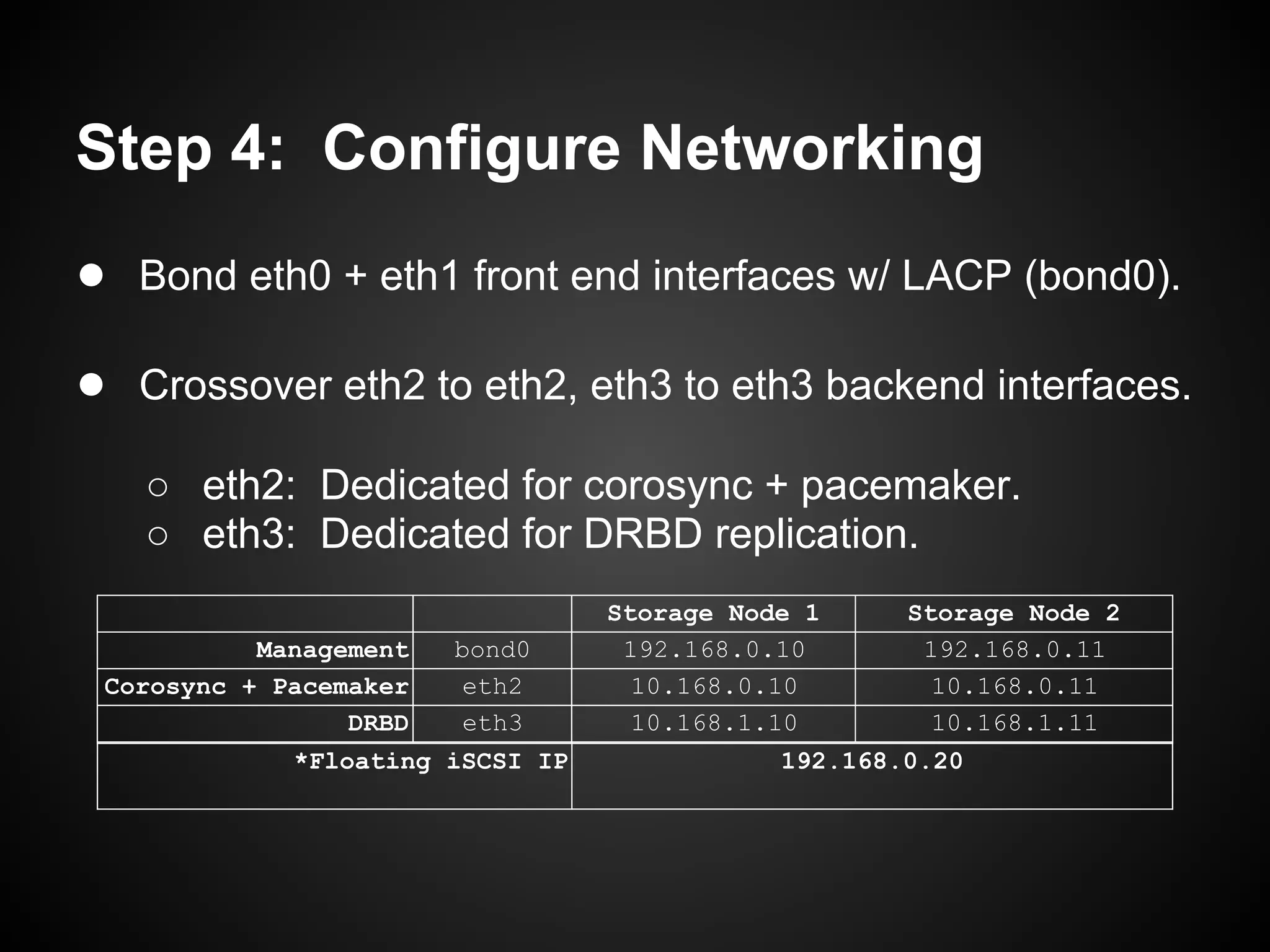

![Step 4: Configure Networking (diagram: stacked switches; XCP Storage Node 1 and XCP Storage Node 2 with interfaces eth0, eth1, eth2 and eth3; addresses 192.168.0.20, 10.168.0.10 & 10.168.0.11, 10.168.1.10 & 10.168.1.11)](https://image.slidesharecdn.com/oscon2012slideset-120720200537-phpapp01/75/Oscon-2012-From-Datacenter-to-the-Cloud-Featuring-Xen-and-XCP-161-2048.jpg)
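The diagram gives each storage node one address on each of two back-end networks (10.168.0.x and 10.168.1.x). One way to sanity-check such a plan before touching interface files is Python's `ipaddress` module. The /24 prefixes and the helper name `check_plan` are assumptions for illustration; only the addresses come from the slide.

```python
import ipaddress

# Addresses from the slide; the /24 prefix length is an assumption.
PLAN = {
    "XCP Storage Node 1": ["10.168.0.10/24", "10.168.1.10/24"],
    "XCP Storage Node 2": ["10.168.0.11/24", "10.168.1.11/24"],
}


def check_plan(plan):
    """Return a list of problems: reused addresses or mismatched subnets."""
    problems = []
    owner = {}
    subnets_per_node = {}
    for node, addrs in plan.items():
        ifaces = [ipaddress.ip_interface(a) for a in addrs]
        for iface in ifaces:
            if iface.ip in owner:
                problems.append("%s reused by %s and %s"
                                % (iface.ip, owner[iface.ip], node))
            owner[iface.ip] = node
        subnets_per_node[node] = {iface.network for iface in ifaces}
    # Every node should sit on the same set of back-end subnets.
    subnet_sets = list(subnets_per_node.values())
    if any(s != subnet_sets[0] for s in subnet_sets[1:]):
        problems.append("nodes are not on the same set of subnets")
    return problems


print(check_plan(PLAN) or "plan looks consistent")
```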

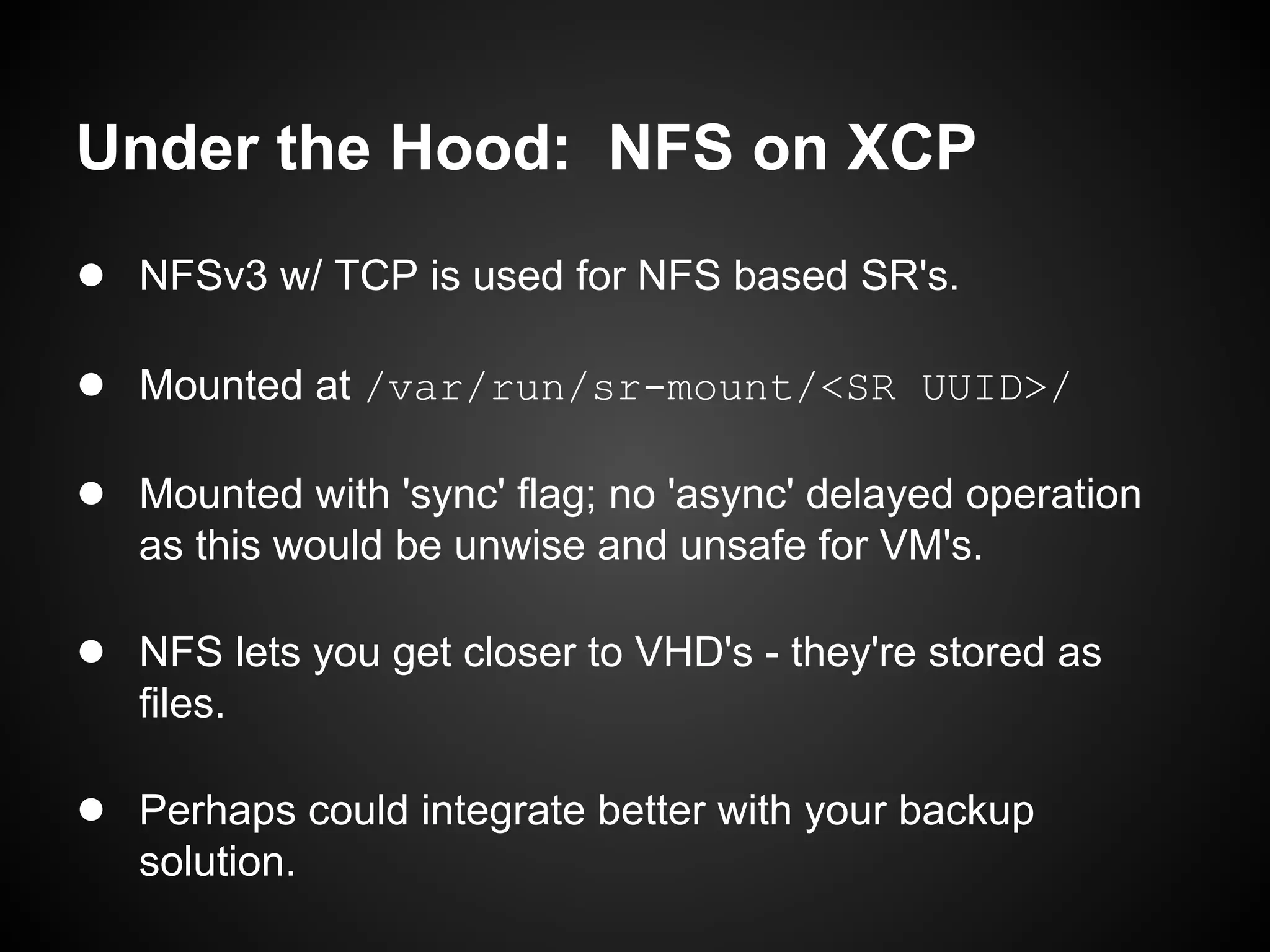

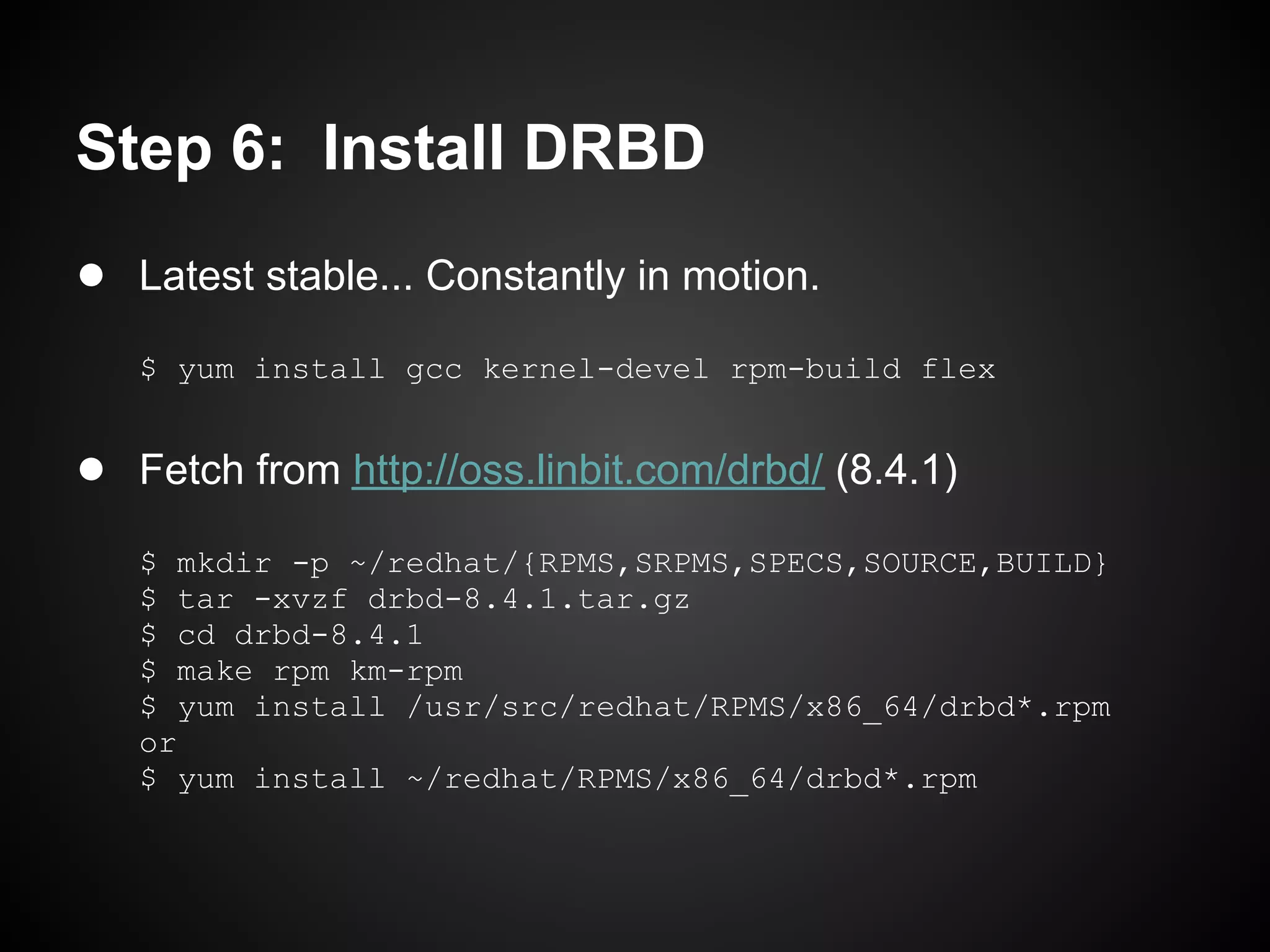

![Step 5: Configure LVM
● Set up a dedicated storage partition:
$ pvcreate /dev/sdb1
$ vgcreate vg-xcp /dev/sdb1
$ lvcreate -l 100%FREE -n lv-xcp vg-xcp
● Adjust /etc/lvm/lvm.conf filters and run vgscan:
filter = [ "a|sd.*|", "r|.*|" ]
● XCP will put LVM on top of iSCSI LUNs (LVMoISCSI).
● SAN should not scan local DRBD resource content.](https://image.slidesharecdn.com/oscon2012slideset-120720200537-phpapp01/75/Oscon-2012-From-Datacenter-to-the-Cloud-Featuring-Xen-and-XCP-162-2048.jpg)
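The `filter` line is a list of regular expressions tried in order: `a|...|` accepts a matching device, `r|...|` rejects it, and the first match decides; a device that matches no entry is accepted. The sketch below emulates that rule to show why `/dev/sdb1` is scanned while a DRBD device such as `/dev/drbd0` is ignored. It is a simplification (the real filter code also considers a device's alternative names, such as symlinks), and the device names and helper `lvm_accepts` are illustrative.

```python
import re

# The filter from the slide.
FILTER = ["a|sd.*|", "r|.*|"]


def lvm_accepts(device, patterns):
    """Simplified emulation of an lvm.conf filter: first matching entry wins."""
    for pattern in patterns:
        action, delim = pattern[0], pattern[1]
        if action not in ("a", "r") or pattern[-1] != delim:
            raise ValueError("malformed filter entry: %r" % pattern)
        regex = pattern[2:-1]
        # The regexes are unanchored, so search anywhere in the path.
        if re.search(regex, device):
            return action == "a"
    # No entry matched: the device is accepted.
    return True


for dev in ["/dev/sdb1", "/dev/drbd0"]:
    print(dev, "scanned" if lvm_accepts(dev, FILTER) else "ignored")
```

Because `sd.*` is unanchored, any path containing `sd` is accepted; the trailing `r|.*|` is what keeps everything else, including the DRBD devices, out of the scan.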

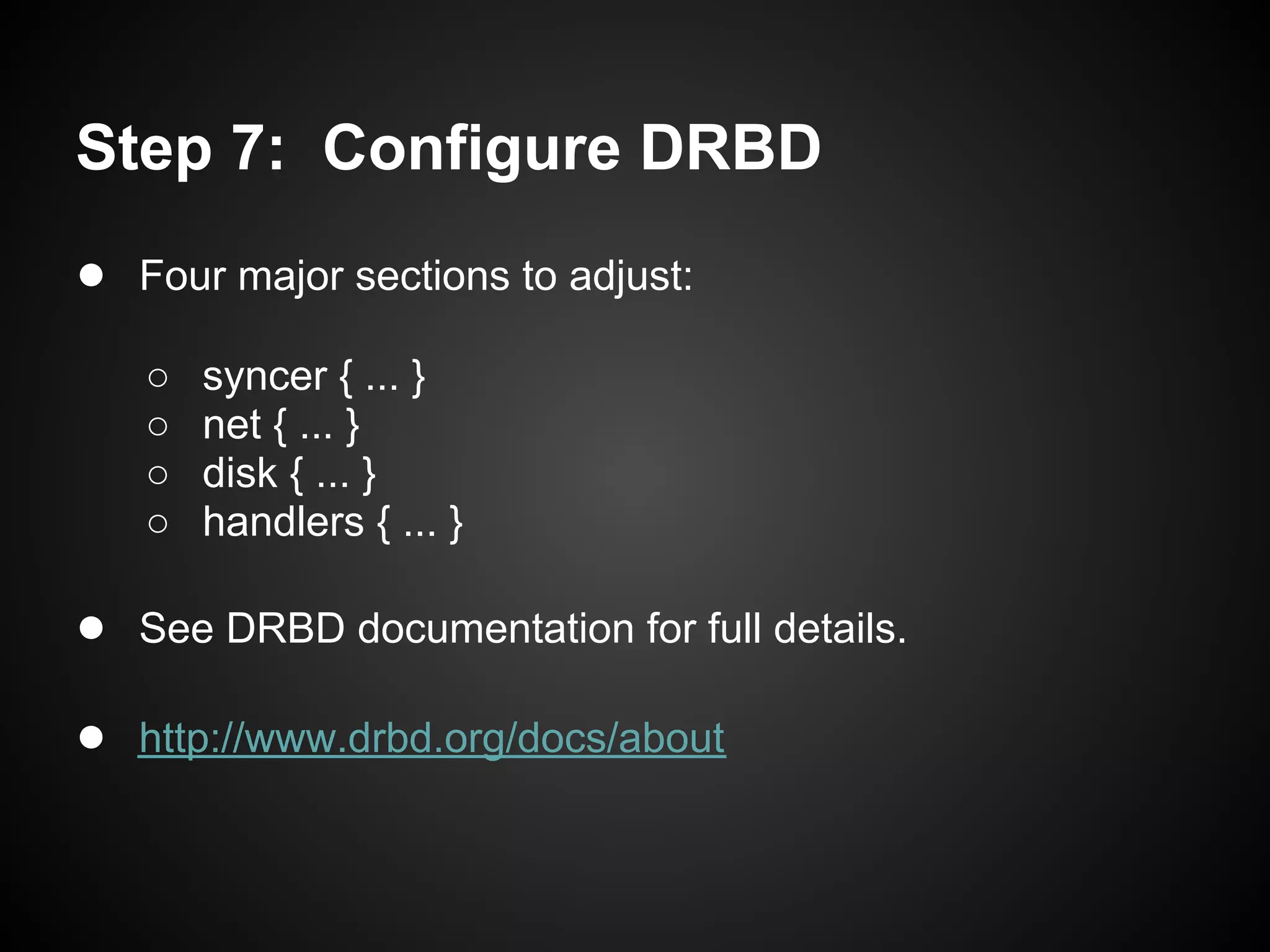

![Step 7: global_common.conf
syncer {
  rate 1G;
  verify-alg "crc32c";
  al-extents 1087;
}
handlers {
  ... [ snip ] ...
  fence-peer "/usr/lib/drbd/crm-fence-peer.sh";
  after-resync-target "/usr/lib/drbd/crm-unfence-peer.sh";
  ... [ snip ] ...
}
disk {
  on-io-error detach;
  fencing resource-only;
}
net {
  sndbuf-size 0;
  max-buffers 8000;
  max-epoch-size 8000;
  unplug-watermark 8000;
}](https://image.slidesharecdn.com/oscon2012slideset-120720200537-phpapp01/75/Oscon-2012-From-Datacenter-to-the-Cloud-Featuring-Xen-and-XCP-165-2048.jpg)
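Of these settings, `al-extents` has the most direct arithmetic. Each DRBD activity-log extent covers 4 MiB of the backing device, so the value bounds how much data can be "hot" at once, and therefore how much must be resynchronised after a primary crash. For the slide's value of 1087 that is 4348 MiB, about 4.25 GiB. A tiny sketch (hypothetical helper name):

```python
AL_EXTENT_MIB = 4  # backing-store size covered by one activity-log extent


def hot_area_mib(al_extents):
    """Area of the backing device covered by the activity log, in MiB."""
    return al_extents * AL_EXTENT_MIB


mib = hot_area_mib(1087)
print(mib, "MiB =", round(mib / 1024, 2), "GiB")
```

A larger value means fewer metadata updates during normal writes, at the cost of a longer resync after a crash.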

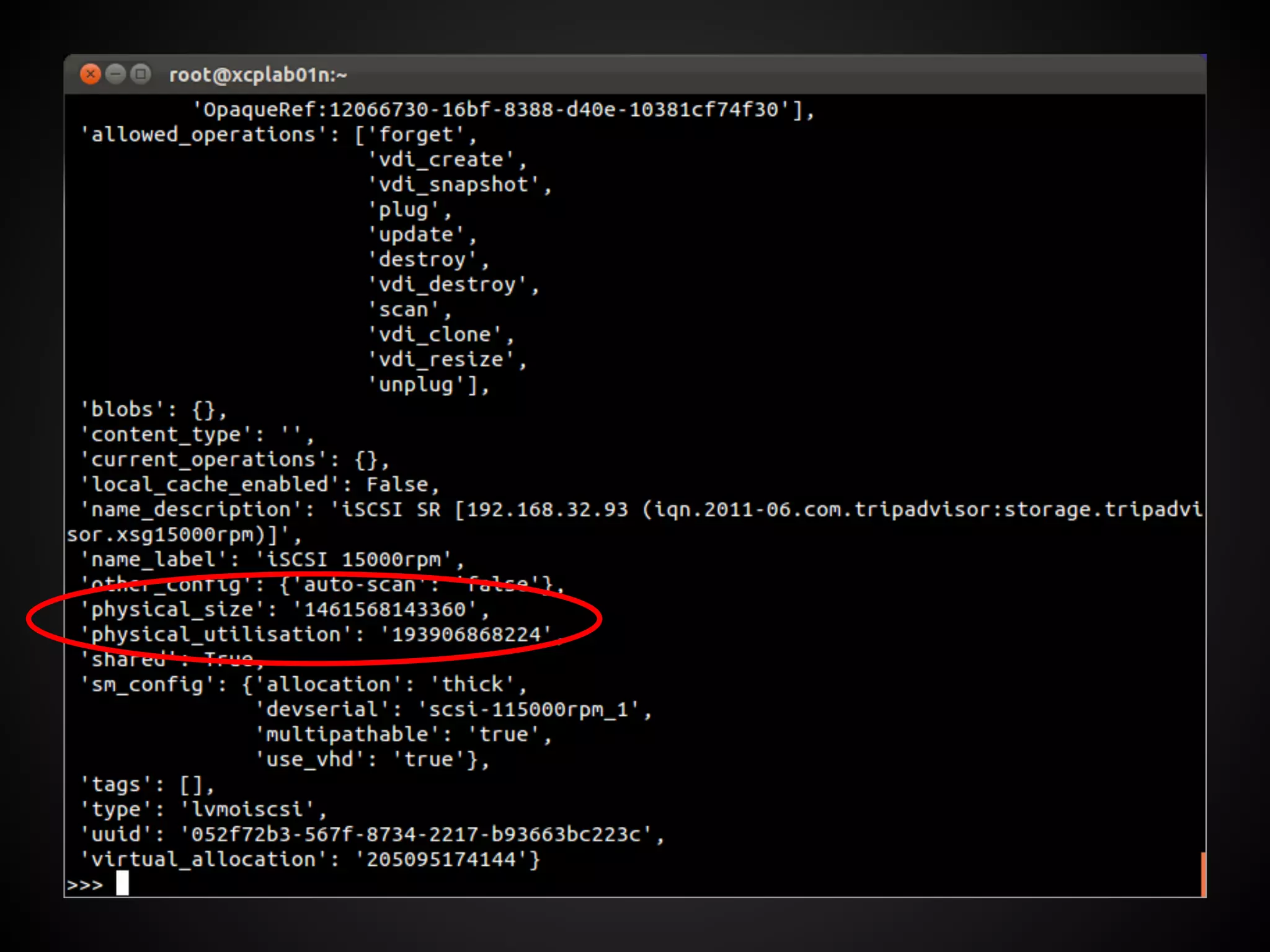

![XenAPI and SR Metrics
>>> import XenAPI
>>> from pprint import pprint
>>> session = XenAPI.Session('http://127.0.0.1')
>>> session.login_with_password('root', 'secret')
>>> session.xenapi.SR.get_all()
['OpaqueRef:18c80a5d-cef6-c2e8-59d1-a03cfbed97e5',
'OpaqueRef:94f13ac8-6d8b-9bc0-2c71-fd29c9636f4e', ...]
>>>
>>> pprint(session.xenapi.SR.get_record('OpaqueRef:18c80a5d-cef6-c2e8-59d1-a03cfbed97e5'))](https://image.slidesharecdn.com/oscon2012slideset-120720200537-phpapp01/75/Oscon-2012-From-Datacenter-to-the-Cloud-Featuring-Xen-and-XCP-216-2048.jpg)
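The record returned by `SR.get_record` includes the fields `physical_size`, `physical_utilisation` and `virtual_allocation`; over XML-RPC these 64-bit integers arrive as strings. A small sketch that turns them into a utilisation percentage and an overcommit ratio. The helper name `sr_usage` is hypothetical and the sample numbers are made up.

```python
def sr_usage(record):
    """Summarise an SR record as (percent used, overcommit ratio).

    int64 fields arrive as strings over XML-RPC, so convert explicitly.
    """
    size = int(record["physical_size"])
    used = int(record["physical_utilisation"])
    allocated = int(record["virtual_allocation"])
    if size == 0:
        return 0.0, 0.0  # guard against SRs that report a zero size
    return 100.0 * used / size, allocated / size


# Made-up values shaped like part of an SR.get_record() result.
sample = {
    "name_label": "Local storage",
    "physical_size": "107374182400",        # 100 GiB
    "physical_utilisation": "53687091200",  # 50 GiB
    "virtual_allocation": "161061273600",   # 150 GiB
}
pct, ratio = sr_usage(sample)
print("%s: %.1f%% used, %.2fx overcommitted" % (sample["name_label"], pct, ratio))
```

A ratio above 1 means the virtual disks on the SR could outgrow its physical capacity if they all filled up.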

![XenAPI and Events
>>> import XenAPI
>>> from pprint import pprint
>>> session = XenAPI.Session('http://127.0.0.1')
>>> session.login_with_password('root', 'secret')
>>> session.xenapi.event.register(["*"])
''
>>> session.xenapi.event.next()
See examples on http://community.citrix.com/](https://image.slidesharecdn.com/oscon2012slideset-120720200537-phpapp01/75/Oscon-2012-From-Datacenter-to-the-Cloud-Featuring-Xen-and-XCP-218-2048.jpg)
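`event.register` subscribes the session to the listed classes (`"*"` for all). Each `event.next()` call blocks until events are available and then returns a batch of event records with fields such as `class`, `operation` and `ref`. Below is a sketch of a dispatch loop around that call. The function name and its `next_batch` and `handle` parameters are hypothetical, chosen so the loop can be exercised without a host; against a real one you would pass `session.xenapi.event.next` as `next_batch`. The lower-case class names (for example `"vm"`) are an assumption.

```python
def dispatch_events(next_batch, handle, classes=("vm",), max_batches=None):
    """Pull event batches and pass matching events to `handle`.

    next_batch: callable returning a list of event dicts (e.g. event.next).
    handle: callable invoked as handle(operation, ref, snapshot).
    Stops after max_batches batches if given; otherwise loops forever.
    """
    batches = 0
    while max_batches is None or batches < max_batches:
        for ev in next_batch():
            # 'operation' is e.g. 'add', 'mod' or 'del'; 'ref' names the object.
            if ev.get("class") in classes:
                handle(ev.get("operation"), ev.get("ref"), ev.get("snapshot"))
        batches += 1
```

Filtering on the client keeps the example simple; registering for specific classes instead of `"*"` would reduce traffic.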