Lesson 11: Markov Chains

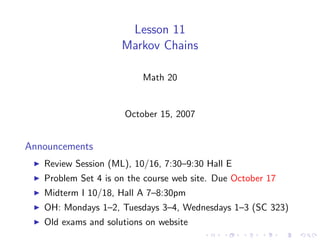

- 1. Lesson 11: Markov Chains. Math 20, October 15, 2007. Announcements:
  - Review Session (ML), 10/16, 7:30–9:30, Hall E
  - Problem Set 4 is on the course web site. Due October 17
  - Midterm I, 10/18, Hall A, 7–8:30pm
  - OH: Mondays 1–2, Tuesdays 3–4, Wednesdays 1–3 (SC 323)
  - Old exams and solutions on website

- 5. The Markov Dance. Divide the class into three groups A, B, and C. Upon my signal:
  - 1/3 of group A goes to group B, and 1/3 of group A goes to group C.
  - 1/4 of group B goes to group A, and 1/4 of group B goes to group C.
  - 1/2 of group C goes to group B.

- 9. Another Example. Suppose on any given class day you wake up and decide whether to come to class. If you went to class the time before, you’re 70% likely to go today, and if you skipped the last class, you’re 80% likely to go today. Some questions you might ask are:
  - If I go to class on Monday, how likely am I to go to class on Friday?
  - Assuming the class is infinitely long (the horror!), approximately what portion of class will I attend?

- 11. Many times we are interested in the transition of something between certain “states” over discrete time steps. Examples are:
  - movement of people between regions
  - states of the weather
  - movement between positions on a Monopoly board
  - your score in blackjack

  Definition. A Markov chain or Markov process is a process in which the probability of the system being in a particular state at a given observation period depends only on its state at the immediately preceding observation period.

- 12. Common questions about a Markov chain are:
  - What is the probability of transitions from state to state over multiple observations?
  - Are there any “equilibria” in the process?
  - Is there a long-term stability to the process?

- 14. Definition. Suppose the system has $n$ possible states. For each $i$ and $j$, let $t_{ij}$ be the probability of switching from state $j$ to state $i$. The matrix $T$ whose $ij$th entry is $t_{ij}$ is called the transition matrix.

  Example. The transition matrix for the skipping class example (state 1 is going to class, state 2 is skipping) is

  $$T = \begin{pmatrix} 0.7 & 0.8 \\ 0.3 & 0.2 \end{pmatrix}$$

- 17. The transition matrix reflects two important facts about probabilities: all entries are nonnegative, and each column adds up to one. Such a matrix is called a stochastic matrix.
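
The two conditions can be checked mechanically. The following is a minimal sketch, not part of the original slides: the helper name `is_stochastic` is hypothetical, and exact fractions are used (an assumption made here) so that the column sums can be compared to 1 without rounding error.

```python
from fractions import Fraction as F

def is_stochastic(T):
    """Return True if T is square, has nonnegative entries, and every column sums to 1."""
    n = len(T)
    if any(len(row) != n for row in T):
        return False
    if any(entry < 0 for row in T for entry in row):
        return False
    return all(sum(T[i][j] for i in range(n)) == 1 for j in range(n))

# Skipping-class example: column j holds the probabilities of leaving state j.
T_class = [[F(7, 10), F(8, 10)],
           [F(3, 10), F(2, 10)]]

# Markov Dance: columns correspond to groups A, B, C.
T_dance = [[F(1, 3), F(1, 4), F(0)],
           [F(1, 3), F(1, 2), F(1, 2)],
           [F(1, 3), F(1, 4), F(1, 2)]]

print(is_stochastic(T_class))
print(is_stochastic(T_dance))
```

Note that it is the columns, not the rows, that must sum to one under the convention used here ($t_{ij}$ is the probability of moving from state $j$ to state $i$).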

- 21. Definition. The state vector of a Markov process with $n$ states at time step $k$ is the vector

  $$x^{(k)} = \begin{pmatrix} p_1^{(k)} \\ p_2^{(k)} \\ \vdots \\ p_n^{(k)} \end{pmatrix}$$

  where $p_j^{(k)}$ is the probability that the system is in state $j$ at time step $k$.

  Example. Suppose we start out with 20 students in group A and 10 students each in groups B and C. Then the initial state vector is

  $$x^{(0)} = \begin{pmatrix} 0.5 \\ 0.25 \\ 0.25 \end{pmatrix}.$$

- 25. Example. Suppose after three weeks of class I am equally likely to come to class or skip. Then my state vector would be

  $$x^{(10)} = \begin{pmatrix} 0.5 \\ 0.5 \end{pmatrix}.$$

  State vectors reflect two important facts about probabilities: all entries are nonnegative, and the entries add up to one. Such a vector is called a probability vector.

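- 27. Lemma. Let $T$ be an $n \times n$ stochastic matrix and $x$ an $n \times 1$ probability vector. Then $Tx$ is a probability vector.

  Proof. The entries of $Tx$ are nonnegative because they are sums of products of nonnegative numbers. We need to show that the entries of $Tx$ add up to one. We have

  $$\sum_{i=1}^n (Tx)_i = \sum_{i=1}^n \sum_{j=1}^n t_{ij} x_j = \sum_{j=1}^n \left( \sum_{i=1}^n t_{ij} \right) x_j = \sum_{j=1}^n 1 \cdot x_j = 1.$$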

- 29. Theorem. If $T$ is the transition matrix of a Markov process, then the state vector $x^{(k+1)}$ at the $(k+1)$th observation period can be determined from the state vector $x^{(k)}$ at the $k$th observation period, as

  $$x^{(k+1)} = T x^{(k)}.$$

  This comes from an important idea in conditional probability:

  $$P(\text{state } i \text{ at } t = k+1) = \sum_{j=1}^n P(\text{move from state } j \text{ to state } i)\, P(\text{state } j \text{ at } t = k).$$

  That is, for each $i$,

  $$p_i^{(k+1)} = \sum_{j=1}^n t_{ij}\, p_j^{(k)}.$$

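- 30. Illustration. Example. How does the probability of going to class on Wednesday depend on the probabilities of going to class on Monday?

  [Diagram: arrows from Monday's states (go, with probability $p_1^{(k)}$; skip, with probability $p_2^{(k)}$) to Wednesday's states (go, skip), labeled $t_{11}$, $t_{21}$, $t_{12}$, $t_{22}$.]

  $$p_1^{(k+1)} = t_{11}\, p_1^{(k)} + t_{12}\, p_2^{(k)}$$

  $$p_2^{(k+1)} = t_{21}\, p_1^{(k)} + t_{22}\, p_2^{(k)}$$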
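
The update rule $x^{(k+1)} = T x^{(k)}$ is just a matrix-vector product. Here is a minimal sketch applying one step to the Markov Dance, starting from the initial state vector (0.5, 0.25, 0.25). The function name `step` and the use of exact fractions are choices made for this illustration, not something from the slides.

```python
from fractions import Fraction as F

def step(T, x):
    """One observation period: return T x, i.e. p_i^(k+1) = sum_j t_ij * p_j^(k)."""
    return [sum(t_ij * p_j for t_ij, p_j in zip(row, x)) for row in T]

# Markov Dance transition matrix (columns: from A, from B, from C).
T_dance = [[F(1, 3), F(1, 4), F(0)],
           [F(1, 3), F(1, 2), F(1, 2)],
           [F(1, 3), F(1, 4), F(1, 2)]]

x0 = [F(1, 2), F(1, 4), F(1, 4)]   # 20 students in A, 10 each in B and C
x1 = step(T_dance, x0)

print(x1)        # fractions of the class in A, B, C after one signal
print(sum(x1))   # still a probability vector, as the lemma guarantees
```

Working the first entry by hand gives $\tfrac13 \cdot \tfrac12 + \tfrac14 \cdot \tfrac14 + 0 \cdot \tfrac14 = \tfrac{11}{48}$, which matches the first component of `x1`.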

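- 32. Example. If I go to class on Monday, what’s the probability I’ll go to class on Friday?

  Solution. Friday is two class days after Monday (the next class is Wednesday). We have $x^{(0)} = \begin{pmatrix} 1 \\ 0 \end{pmatrix}$. We want to know $x^{(2)}$. We have

  $$x^{(2)} = T x^{(1)} = T (T x^{(0)}) = T^2 x^{(0)} = \begin{pmatrix} 0.7 & 0.8 \\ 0.3 & 0.2 \end{pmatrix}^2 \begin{pmatrix} 1 \\ 0 \end{pmatrix} = \begin{pmatrix} 0.7 & 0.8 \\ 0.3 & 0.2 \end{pmatrix} \begin{pmatrix} 0.7 \\ 0.3 \end{pmatrix} = \begin{pmatrix} 0.73 \\ 0.27 \end{pmatrix}.$$

  So the probability of going to class on Friday is 0.73.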
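
The same computation can be sketched in code by applying the transition matrix twice. The helper names `step` and `state_after` are hypothetical, introduced only for this illustration.

```python
from fractions import Fraction as F

def step(T, x):
    """Return the matrix-vector product T x."""
    return [sum(t * p for t, p in zip(row, x)) for row in T]

def state_after(T, x0, k):
    """Return x^(k) = T^k x^(0) by applying T to the state vector k times."""
    x = list(x0)
    for _ in range(k):
        x = step(T, x)
    return x

# Skipping-class example: state 1 = go, state 2 = skip.
T = [[F(7, 10), F(8, 10)],
     [F(3, 10), F(2, 10)]]
x0 = [F(1), F(0)]              # went to class on Monday

x2 = state_after(T, x0, 2)     # Monday -> Wednesday -> Friday
print([float(p) for p in x2])  # [0.73, 0.27]
```

Applying `T` to the vector repeatedly avoids forming $T^2$ explicitly, which is usually cheaper when only one starting state matters.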

- 33. Let’s look at successive powers of the transition matrix. Do they converge? To what?

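- 36. Let’s look at successive powers of the transition matrix in the Markov Dance.

  $$T = \begin{pmatrix} 0.333333 & 0.25 & 0 \\ 0.333333 & 0.5 & 0.5 \\ 0.333333 & 0.25 & 0.5 \end{pmatrix}$$

  $$T^2 = \begin{pmatrix} 0.194444 & 0.208333 & 0.125 \\ 0.444444 & 0.458333 & 0.5 \\ 0.361111 & 0.333333 & 0.375 \end{pmatrix}$$

  $$T^3 = \begin{pmatrix} 0.175926 & 0.184028 & 0.166667 \\ 0.467593 & 0.465278 & 0.479167 \\ 0.356481 & 0.350694 & 0.354167 \end{pmatrix}$$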

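- 39. Continuing with higher powers:

  $$T^4 = \begin{pmatrix} 0.17554 & 0.177662 & 0.175347 \\ 0.470679 & 0.469329 & 0.472222 \\ 0.353781 & 0.353009 & 0.352431 \end{pmatrix}$$

  $$T^5 = \begin{pmatrix} 0.176183 & 0.176553 & 0.176505 \\ 0.470743 & 0.47039 & 0.470775 \\ 0.353074 & 0.353057 & 0.35272 \end{pmatrix}$$

  $$T^6 = \begin{pmatrix} 0.176414 & 0.176448 & 0.176529 \\ 0.470636 & 0.470575 & 0.470583 \\ 0.35295 & 0.352977 & 0.352889 \end{pmatrix}$$

  Do they converge? To what?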
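
To experiment with the powers yourself, a minimal sketch along the following lines may help. The helpers `mat_mul` and `mat_pow` are hypothetical names, and the choice of exponents to print (1, 2, 6, 20) is arbitrary. Exact fractions are used internally and converted to decimals only for display.

```python
from fractions import Fraction as F

def mat_mul(A, B):
    """Return the matrix product A B for matrices stored as lists of rows."""
    inner, cols = len(B), len(B[0])
    return [[sum(A[i][k] * B[k][j] for k in range(inner)) for j in range(cols)]
            for i in range(len(A))]

def mat_pow(T, k):
    """Return T^k for k >= 1 by repeated multiplication."""
    P = T
    for _ in range(k - 1):
        P = mat_mul(P, T)
    return P

# Markov Dance transition matrix.
T = [[F(1, 3), F(1, 4), F(0)],
     [F(1, 3), F(1, 2), F(1, 2)],
     [F(1, 3), F(1, 4), F(1, 2)]]

for k in (1, 2, 6, 20):
    print(f"T^{k}")
    for row in mat_pow(T, k):
        print("  ", " ".join(f"{float(v):.6f}" for v in row))
```

Watching the three columns of each printed power is the quickest way to form a guess about the limit before seeing the theorems.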

- 40. A transition matrix (or corresponding Markov process) is called regular if some power of the matrix has all positive entries. Equivalently, there is some number of steps $k$ for which there is a positive probability of moving from every state to every state in exactly $k$ steps.
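
Regularity can be tested by brute force: keep multiplying by $T$ until a power with no zero entries appears. The sketch below assumes an arbitrary cap `max_power=50` on how far to search, and the function name `first_positive_power` is hypothetical. Returning `None` only means no such power was found up to the cap.

```python
from fractions import Fraction as F

def mat_mul(A, B):
    """Return the matrix product A B for matrices stored as lists of rows."""
    inner, cols = len(B), len(B[0])
    return [[sum(A[i][k] * B[k][j] for k in range(inner)) for j in range(cols)]
            for i in range(len(A))]

def first_positive_power(T, max_power=50):
    """Smallest k <= max_power with every entry of T^k positive, else None."""
    P = T
    for k in range(1, max_power + 1):
        if all(entry > 0 for row in P for entry in row):
            return k
        P = mat_mul(P, T)
    return None

# Markov Dance: T itself has a zero entry (nobody moves from C to A).
T_dance = [[F(1, 3), F(1, 4), F(0)],
           [F(1, 3), F(1, 2), F(1, 2)],
           [F(1, 3), F(1, 4), F(1, 2)]]

# A two-state chain that always switches state: its powers alternate
# between this matrix and the identity, so a zero entry never goes away.
T_swap = [[F(0), F(1)],
          [F(1), F(0)]]

print(first_positive_power(T_dance))
print(first_positive_power(T_swap))
```

The always-switching chain shows why regularity is stronger than being able to reach every state eventually: every state is reachable from every other, yet no single power has all positive entries.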

- 41. Theorem 2.5. If $T$ is the transition matrix of a regular Markov process, then

  (a) As $n \to \infty$, $T^n$ approaches a matrix

  $$A = \begin{pmatrix} u_1 & u_1 & \cdots & u_1 \\ u_2 & u_2 & \cdots & u_2 \\ \vdots & \vdots & \ddots & \vdots \\ u_n & u_n & \cdots & u_n \end{pmatrix},$$

  all of whose columns are identical.

  (b) Every column $u$ is a probability vector all of whose components are positive.

- 42. Theorem 2.6. If $T$ is a regular transition matrix and $A$ and $u$ are as above, then

  (a) For any probability vector $x$, $T^n x \to u$ as $n \to \infty$, so that $u$ is a steady-state vector.

  (b) The steady-state vector $u$ is the unique probability vector satisfying the matrix equation $Tu = u$.

- 44. Finding the steady-state vector. We know the steady-state vector is unique. So we use the equation it satisfies to find it: $Tu = u$. This becomes a homogeneous linear system if you put it in the form $(T - I)u = 0$.

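- 48. Example (Skipping class). If the transition matrix is $T = \begin{pmatrix} 0.7 & 0.8 \\ 0.3 & 0.2 \end{pmatrix}$, what is the steady-state vector?

  Solution. We can combine the equations $(T - I)u = 0$ and $u_1 + u_2 = 1$ into a single linear system with augmented matrix

  $$\left(\begin{array}{cc|c} -3/10 & 8/10 & 0 \\ 3/10 & -8/10 & 0 \\ 1 & 1 & 1 \end{array}\right) \longrightarrow \left(\begin{array}{cc|c} 1 & 0 & 8/11 \\ 0 & 1 & 3/11 \\ 0 & 0 & 0 \end{array}\right).$$

  So the steady-state vector is $\begin{pmatrix} 8/11 \\ 3/11 \end{pmatrix}$. You’ll go to class about 73% of the time.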
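
A candidate steady-state vector is easy to check: apply $T$ and see whether the vector comes back unchanged. The sketch below does this exactly for (8/11, 3/11), then iterates from "went to class on Monday" to show the state vector approaching it. The helper name `step` and the choice of 10 iterations are assumptions made for illustration.

```python
from fractions import Fraction as F

def step(T, x):
    """Return the matrix-vector product T x."""
    return [sum(t * p for t, p in zip(row, x)) for row in T]

T = [[F(7, 10), F(8, 10)],
     [F(3, 10), F(2, 10)]]

u = [F(8, 11), F(3, 11)]
print(step(T, u) == u)   # exact check that T u = u
print(sum(u) == 1)       # and that u is a probability vector

# Start from "went to class" and apply T ten times.
x = [F(1), F(0)]
for _ in range(10):
    x = step(T, x)
print([float(p) for p in x])
print([float(p) for p in u])
```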

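- 50. Example (The Markov Dance). If the transition matrix is $T = \begin{pmatrix} 1/3 & 1/4 & 0 \\ 1/3 & 1/2 & 1/2 \\ 1/3 & 1/4 & 1/2 \end{pmatrix}$, what is the steady-state vector?

  Solution. Combining $(T - I)u = 0$ with $u_1 + u_2 + u_3 = 1$, we have

  $$\left(\begin{array}{ccc|c} -2/3 & 1/4 & 0 & 0 \\ 1/3 & -1/2 & 1/2 & 0 \\ 1/3 & 1/4 & -1/2 & 0 \\ 1 & 1 & 1 & 1 \end{array}\right) \longrightarrow \left(\begin{array}{ccc|c} 1 & 0 & 0 & 3/17 \\ 0 & 1 & 0 & 8/17 \\ 0 & 0 & 1 & 6/17 \\ 0 & 0 & 0 & 0 \end{array}\right).$$

  So the steady-state vector is $\begin{pmatrix} 3/17 \\ 8/17 \\ 6/17 \end{pmatrix}$.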
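
The row reduction can be automated. The following is a minimal sketch of Gauss-Jordan elimination on the augmented system $(T - I)u = 0$, $\sum_i u_i = 1$. It assumes $T$ is a regular stochastic matrix with entries given as exact fractions; the function name `steady_state` is hypothetical, and this is one straightforward way to solve the system rather than the method used in the lecture.

```python
from fractions import Fraction as F

def steady_state(T):
    """Solve (T - I) u = 0 together with sum(u) = 1 by Gauss-Jordan elimination.

    Assumes T is a regular stochastic matrix with Fraction entries,
    so that the solution exists and is unique.
    """
    n = len(T)
    # Augmented matrix: n rows of (T - I | 0), then one row (1 ... 1 | 1).
    M = [[T[i][j] - (1 if i == j else 0) for j in range(n)] + [F(0)]
         for i in range(n)]
    M.append([F(1)] * n + [F(1)])

    pivot_row = 0
    for col in range(n):
        # Find a row at or below pivot_row with a nonzero entry in this column.
        r = next((r for r in range(pivot_row, n + 1) if M[r][col] != 0), None)
        if r is None:
            raise ValueError("the steady-state vector is not unique")
        M[pivot_row], M[r] = M[r], M[pivot_row]
        # Scale the pivot row so the pivot entry becomes 1.
        pivot = M[pivot_row][col]
        M[pivot_row] = [v / pivot for v in M[pivot_row]]
        # Clear this column in every other row.
        for i in range(n + 1):
            if i != pivot_row and M[i][col] != 0:
                factor = M[i][col]
                M[i] = [a - factor * b for a, b in zip(M[i], M[pivot_row])]
        pivot_row += 1
    # The first n rows are now (I | u); the last row is all zeros.
    return [M[i][n] for i in range(n)]

T_dance = [[F(1, 3), F(1, 4), F(0)],
           [F(1, 3), F(1, 2), F(1, 2)],
           [F(1, 3), F(1, 4), F(1, 2)]]
T_class = [[F(7, 10), F(8, 10)],
           [F(3, 10), F(2, 10)]]

print(steady_state(T_dance))
print(steady_state(T_class))
```

Using exact fractions keeps the answers in the same form as the hand computation. With floating-point entries the `!= 0` pivot tests would need a tolerance.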