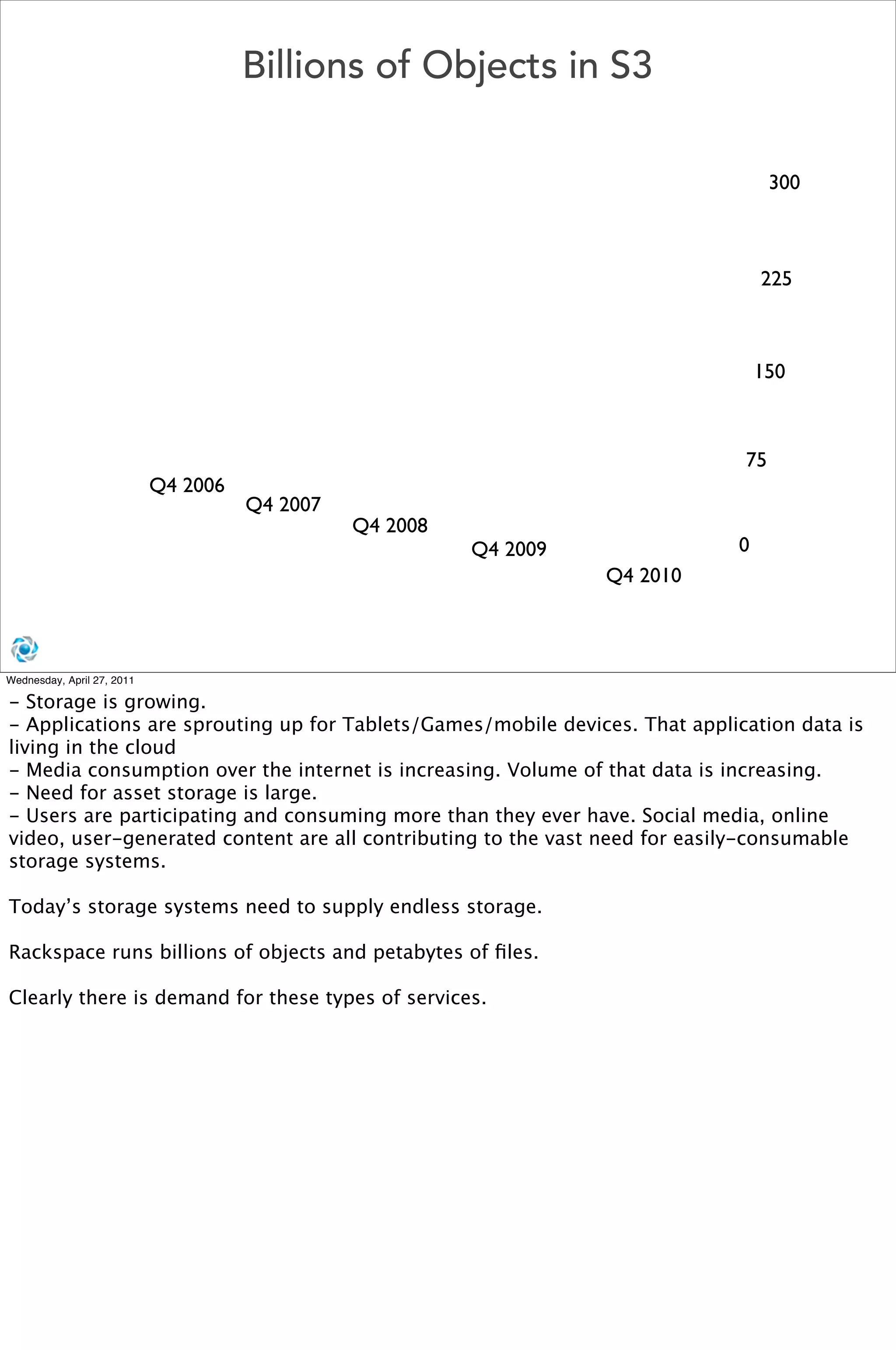

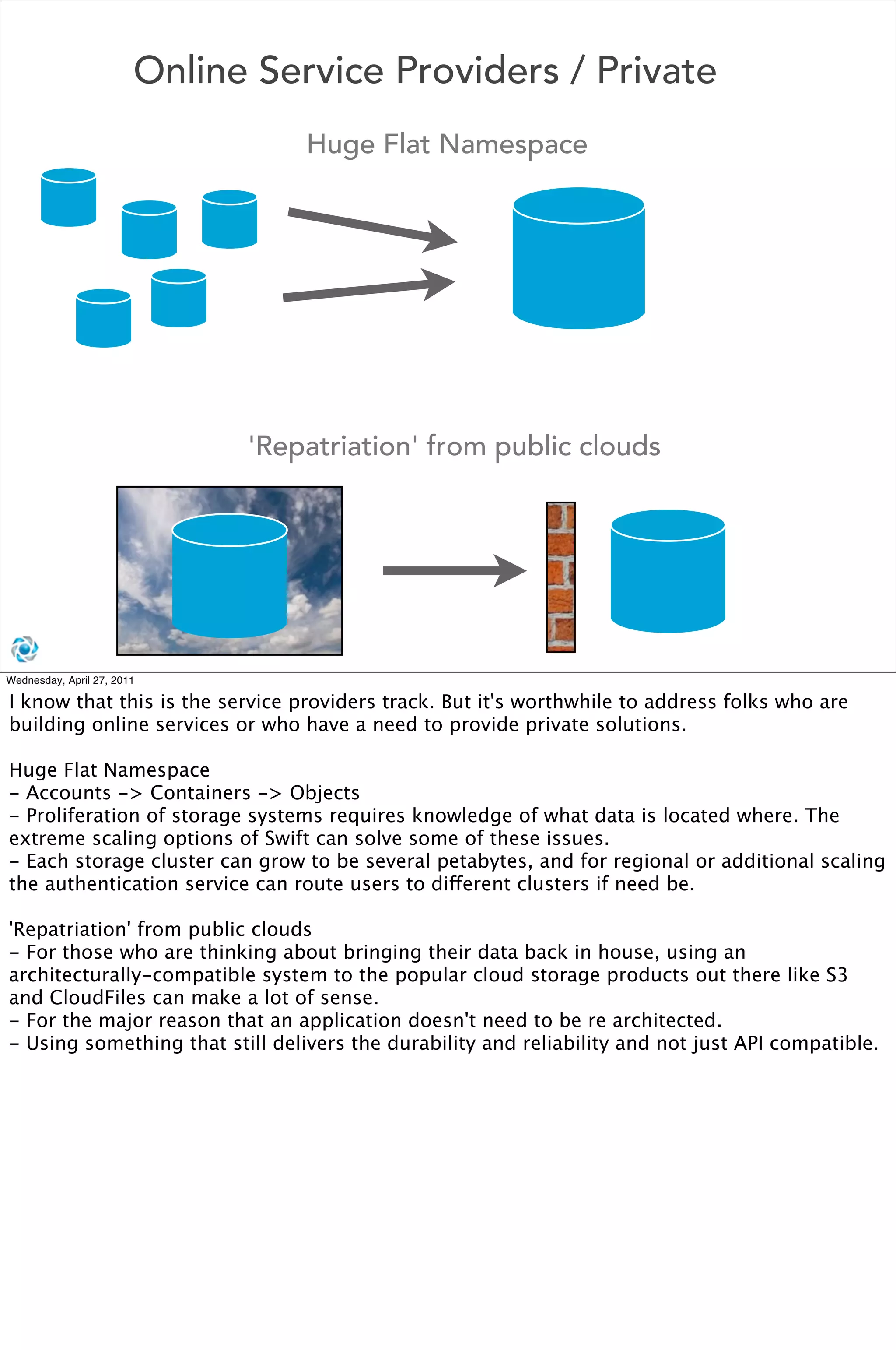

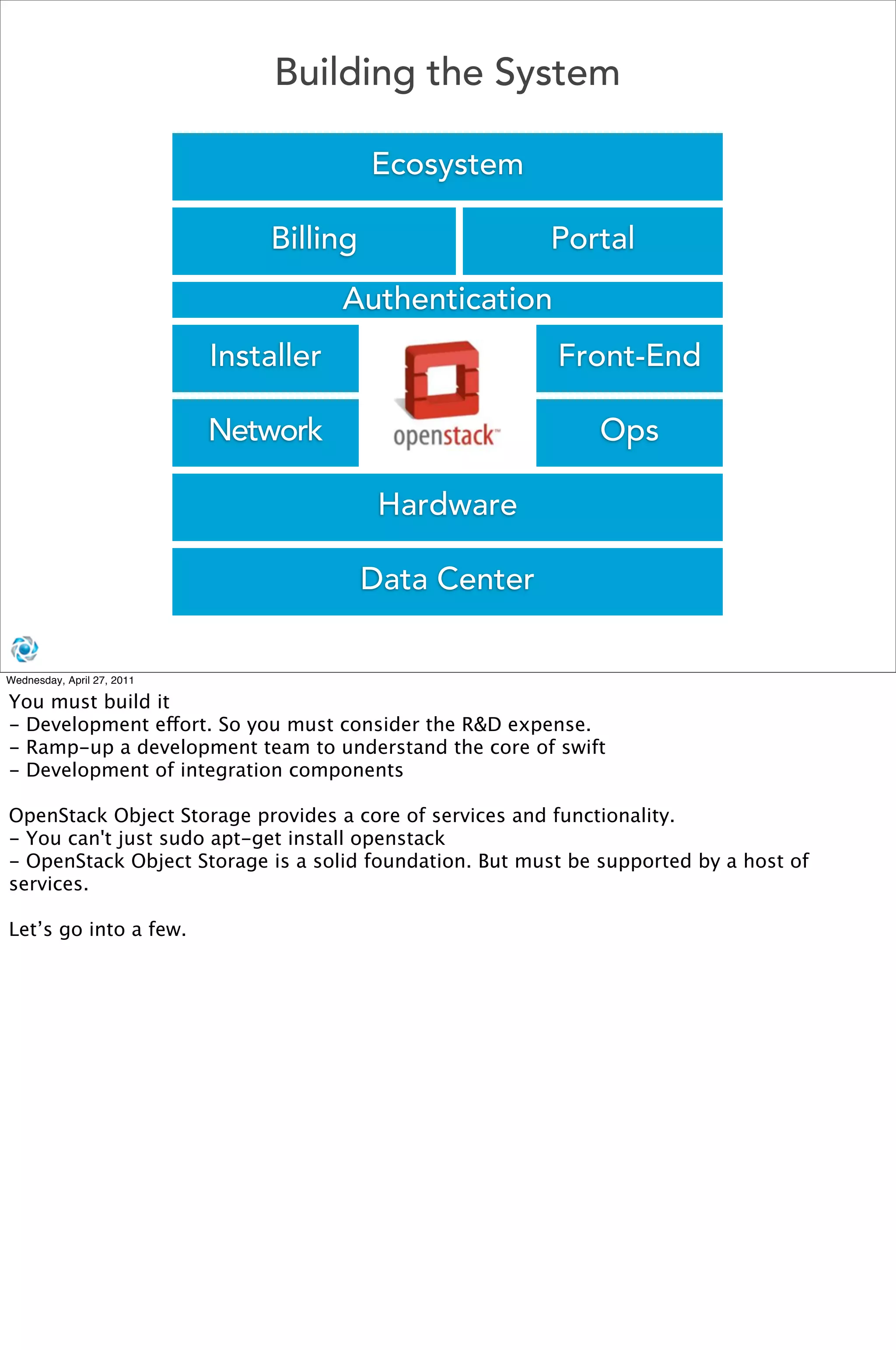

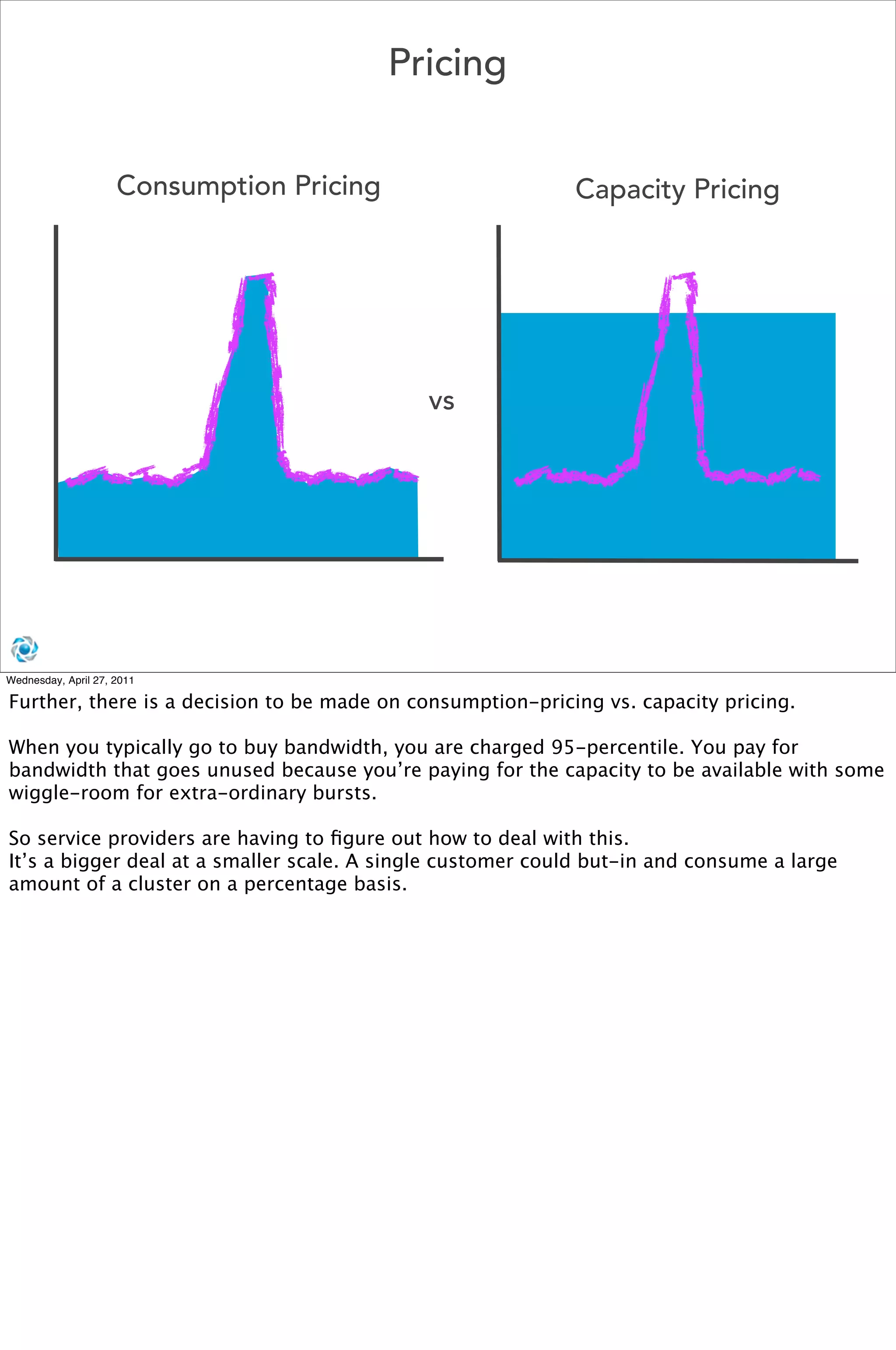

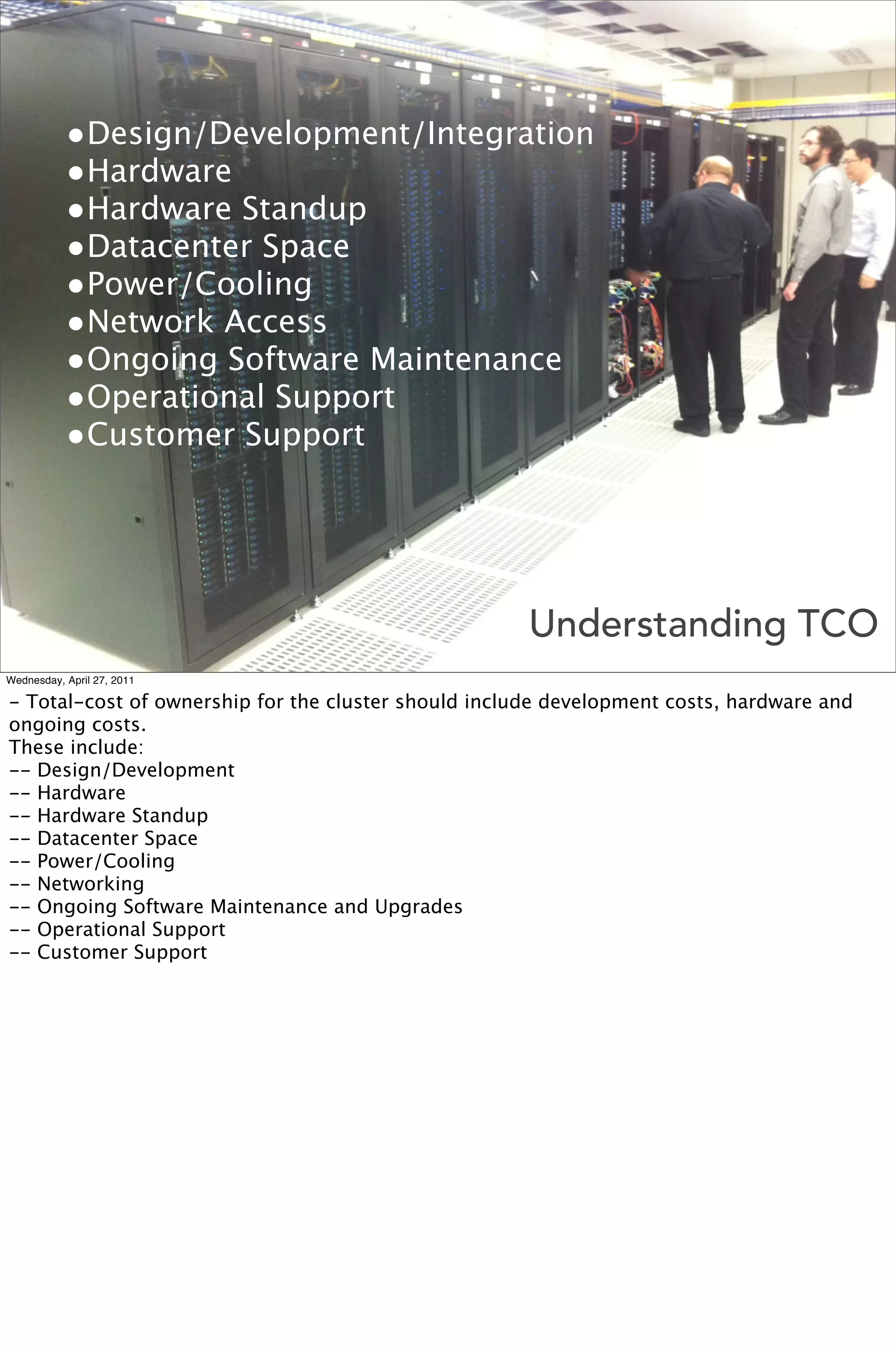

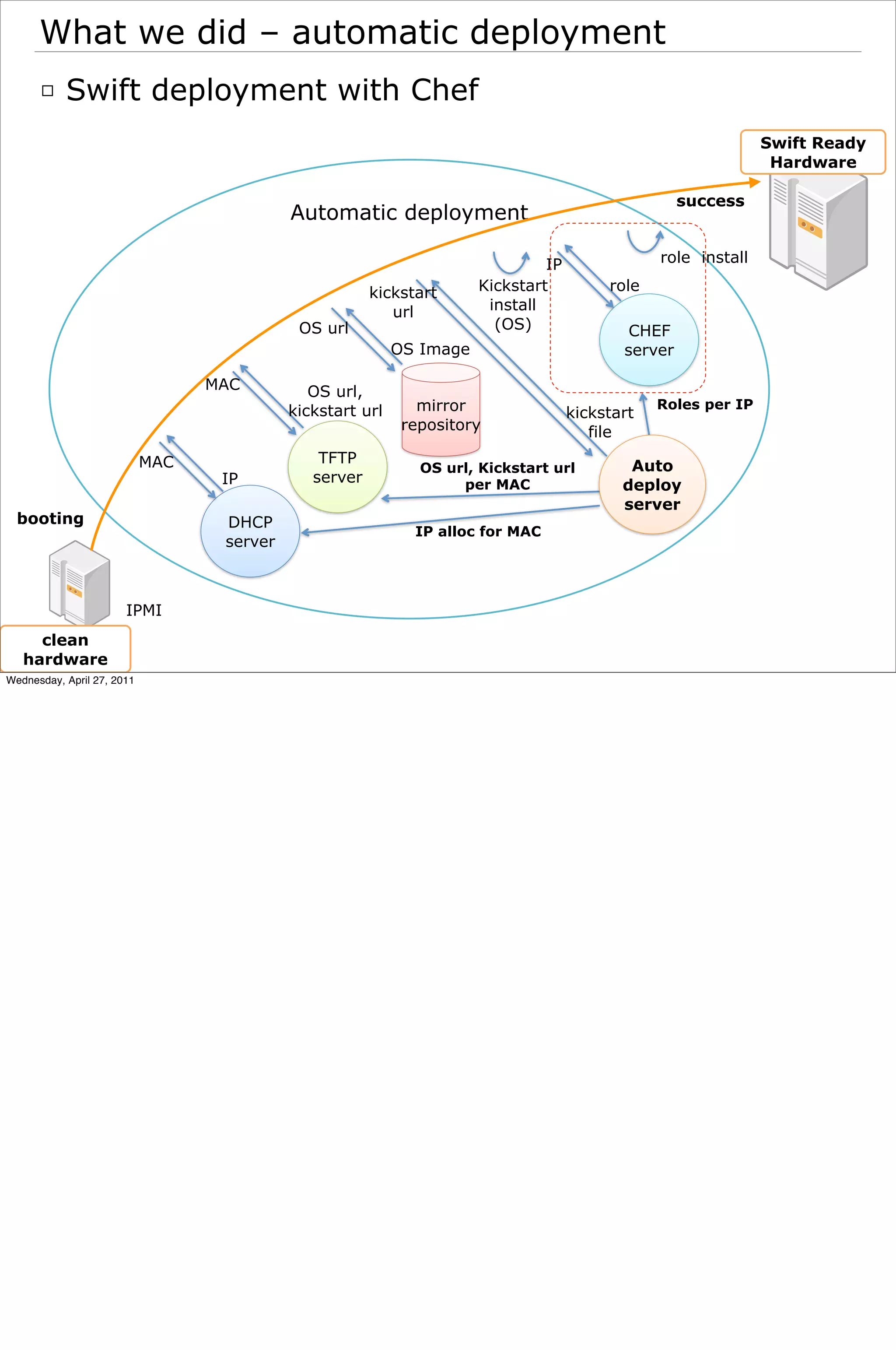

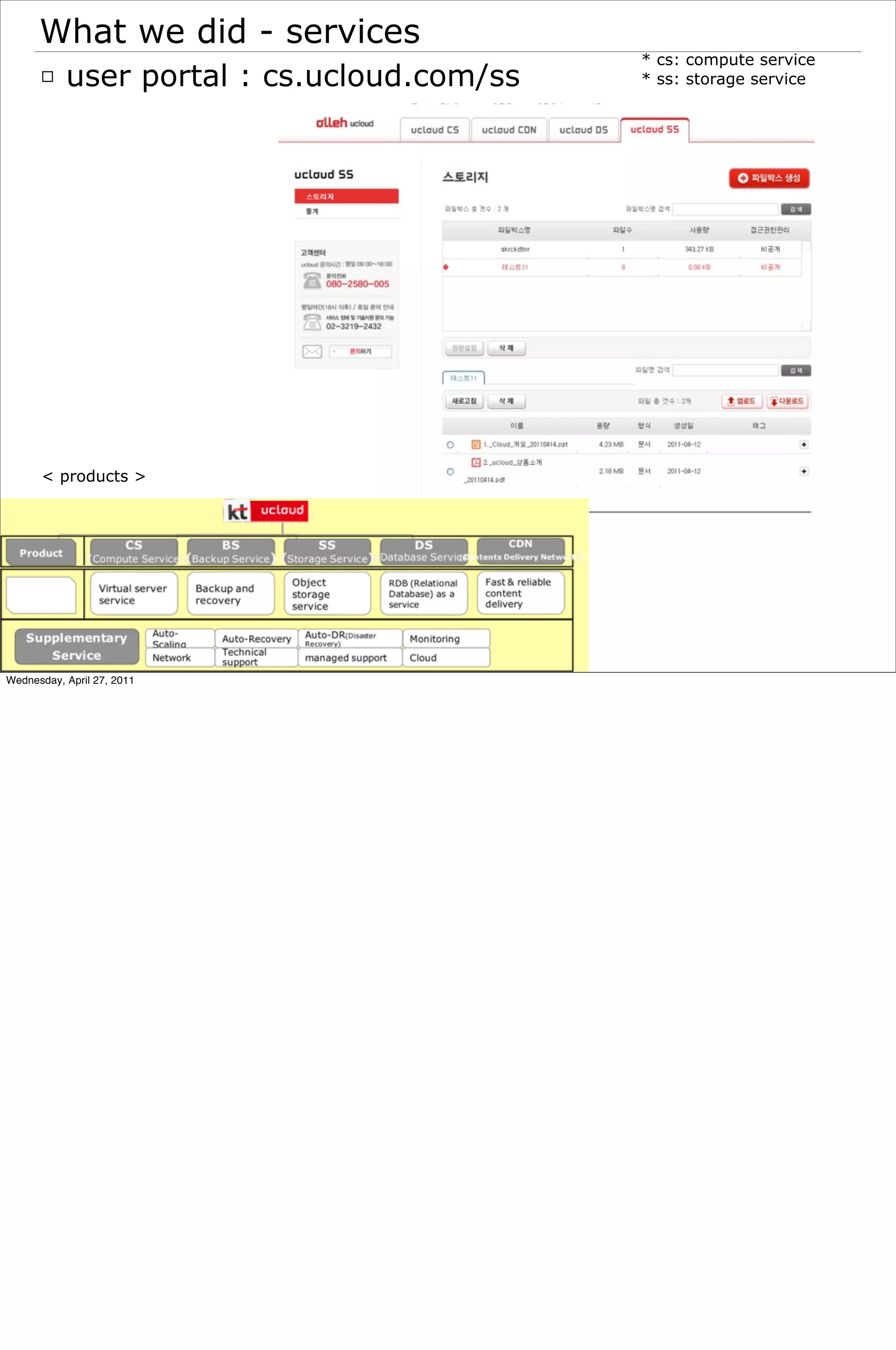

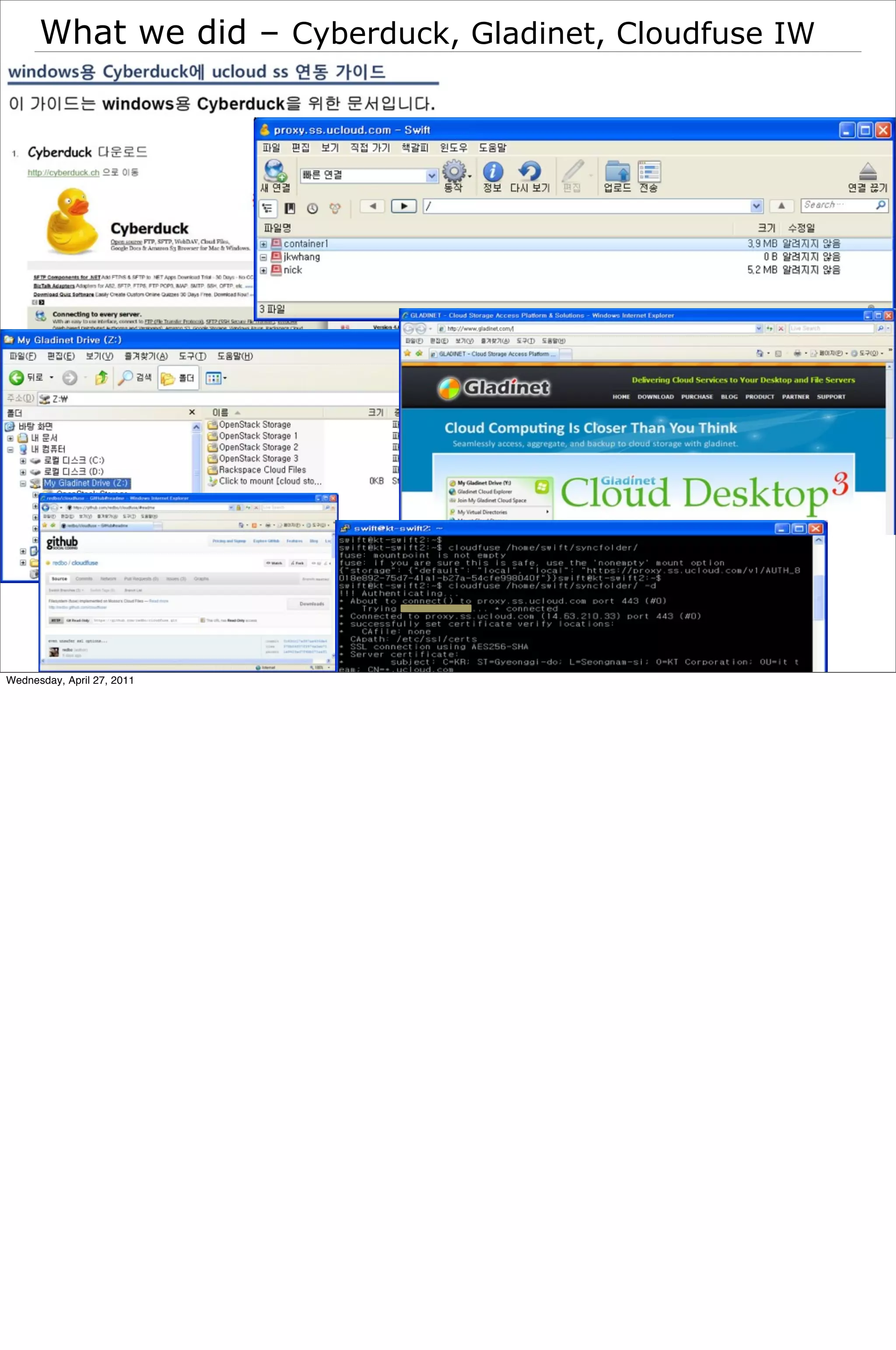

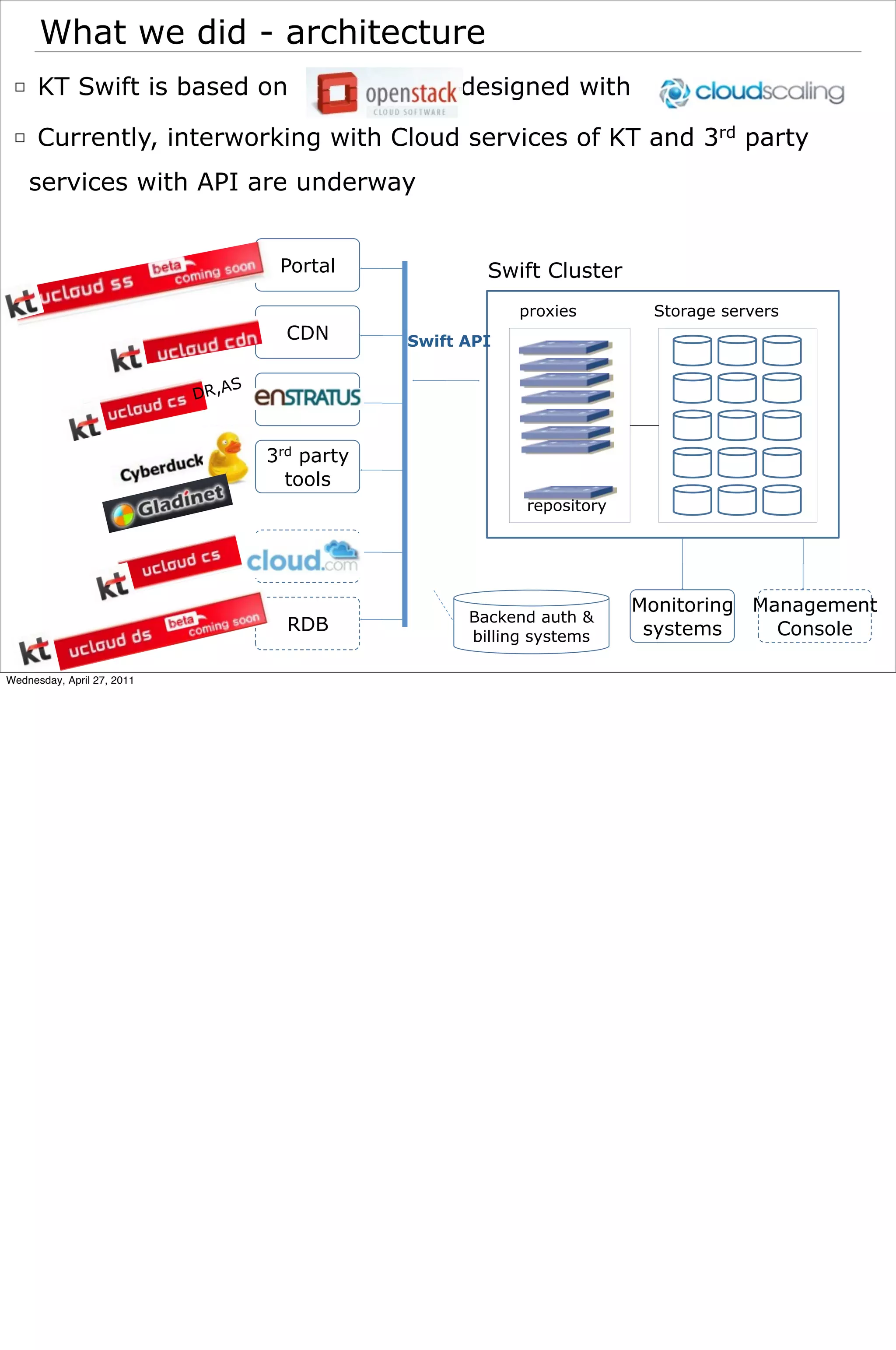

Cloudscaling has been working with KT to build infrastructure cloud services for telcos and service providers using OpenStack Object Storage. Cloudscaling has helped KT launch an object storage system based on Swift, along with end-user cloud products. Building these infrastructure services means integrating hardware, software, and operational components, and addressing concerns such as billing, authentication, load balancing, and networking. OpenStack Object Storage provides a solid foundation, but additional services must be developed to fully support customers.