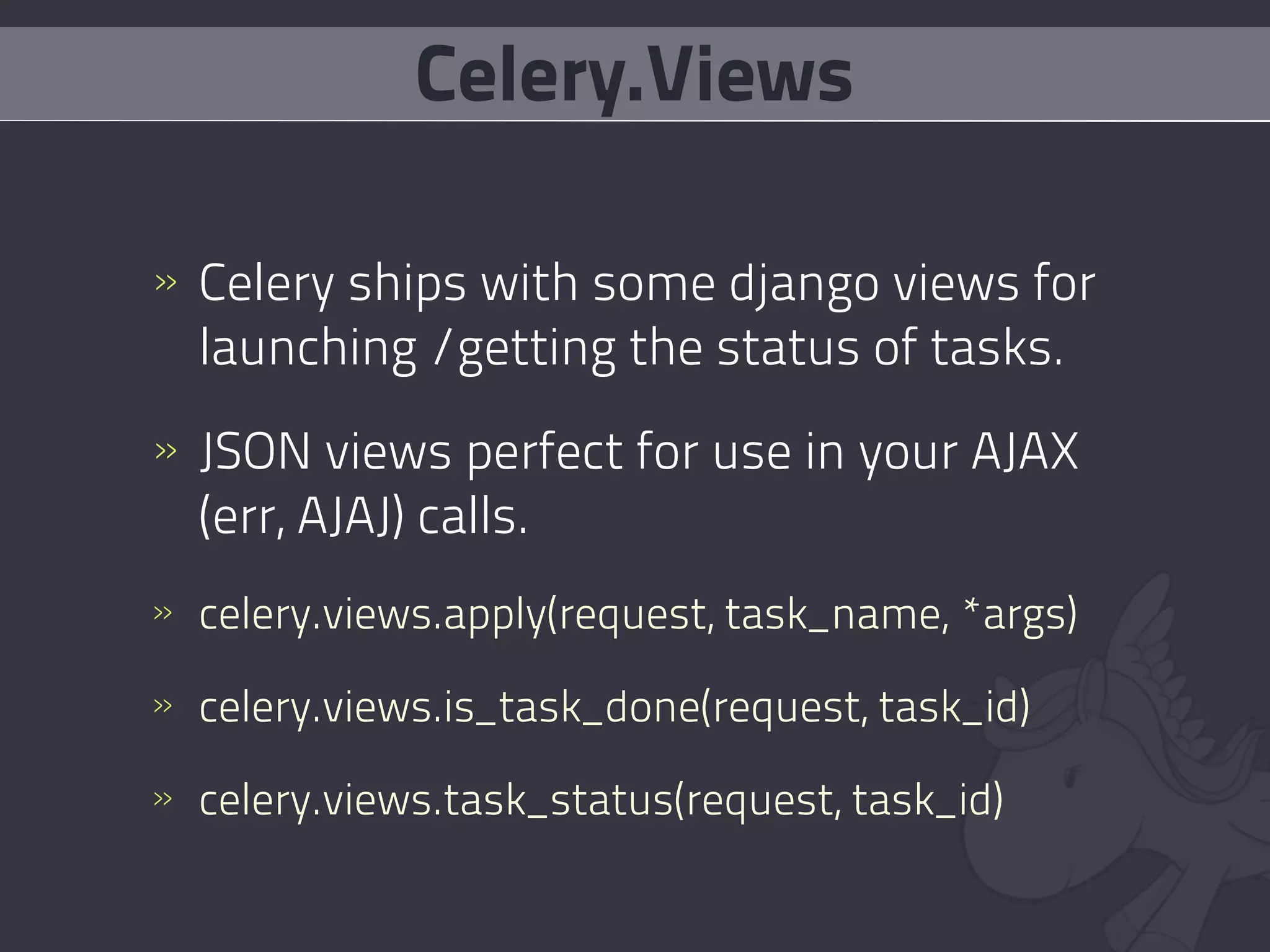

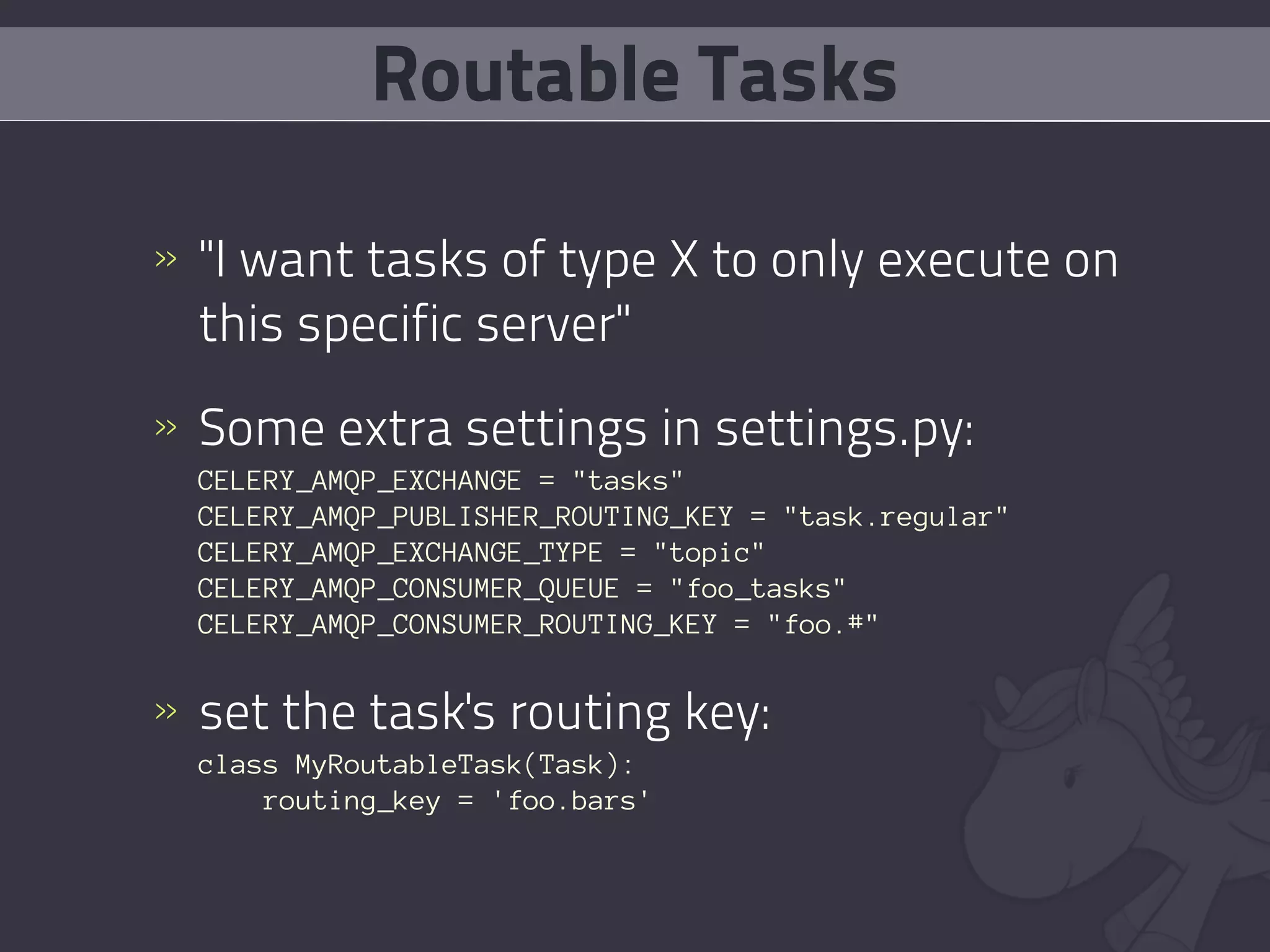

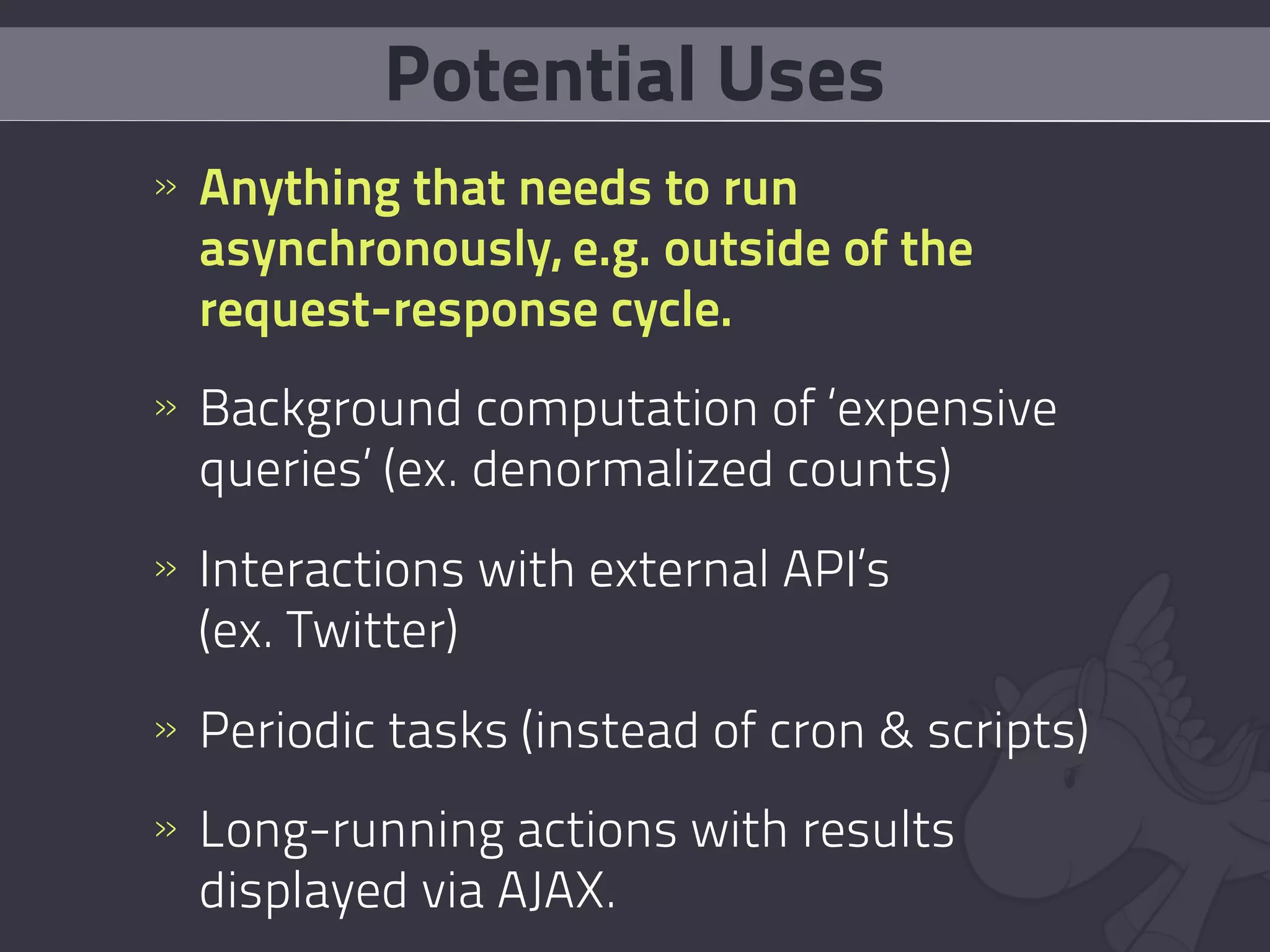

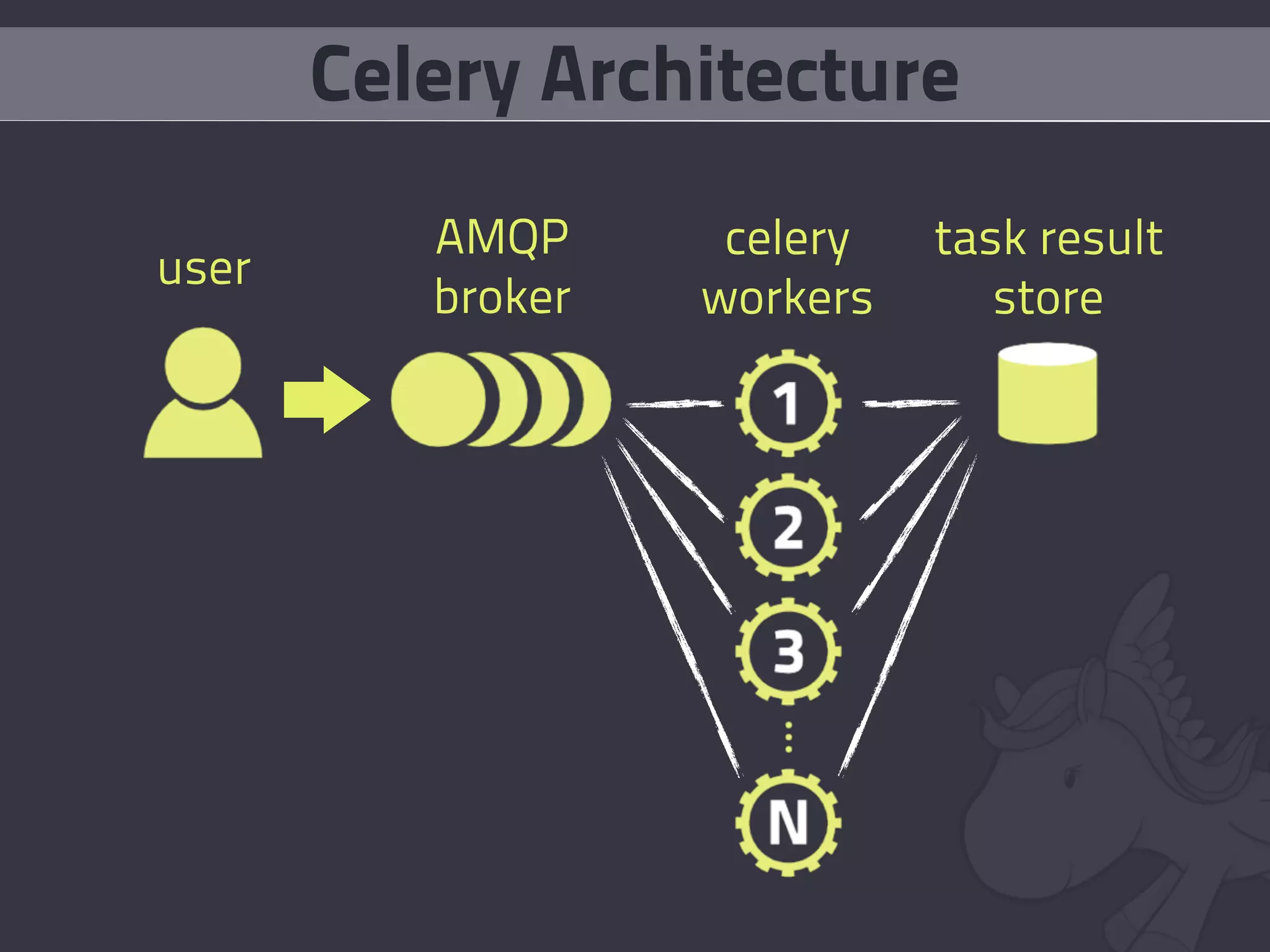

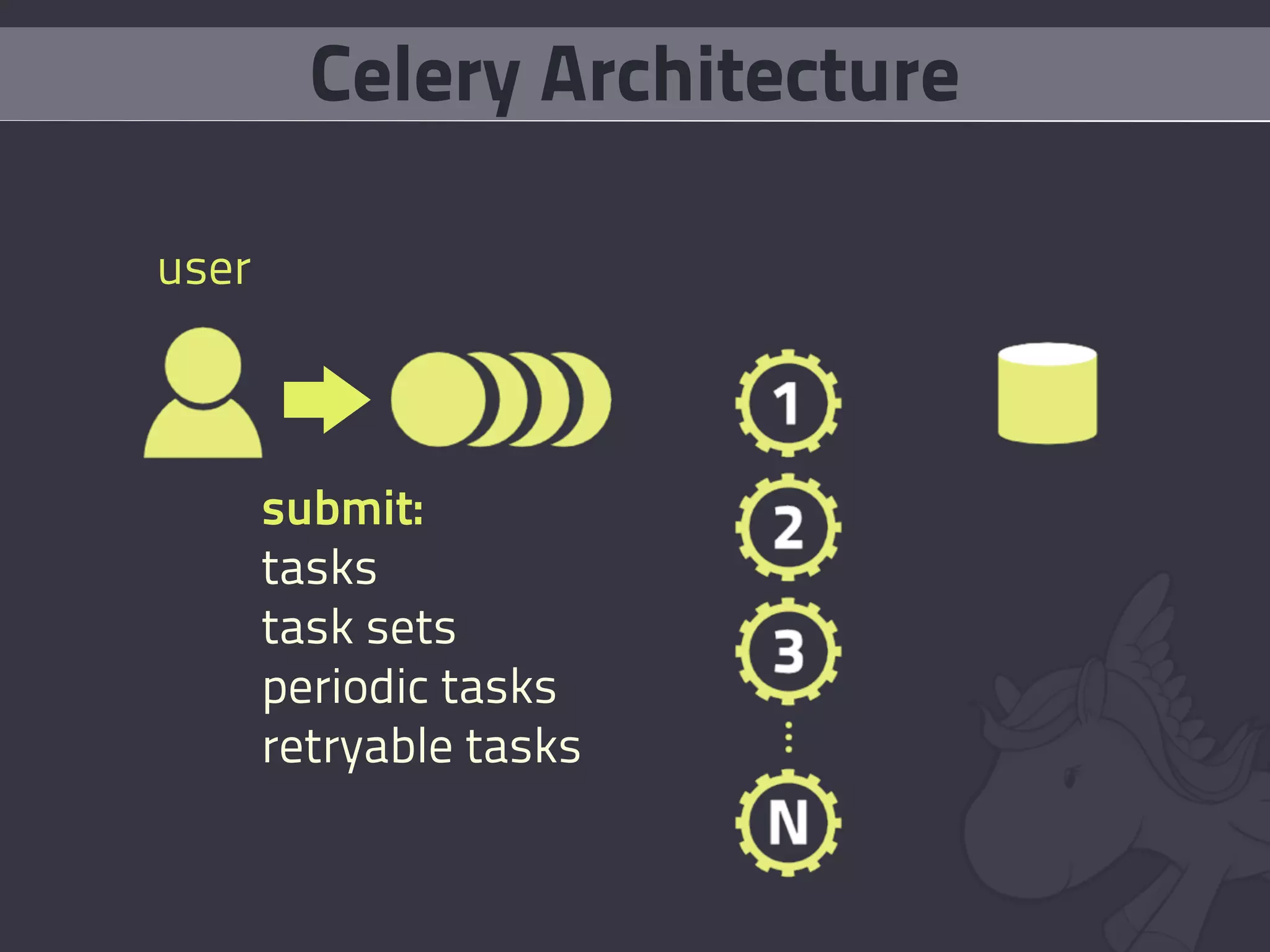

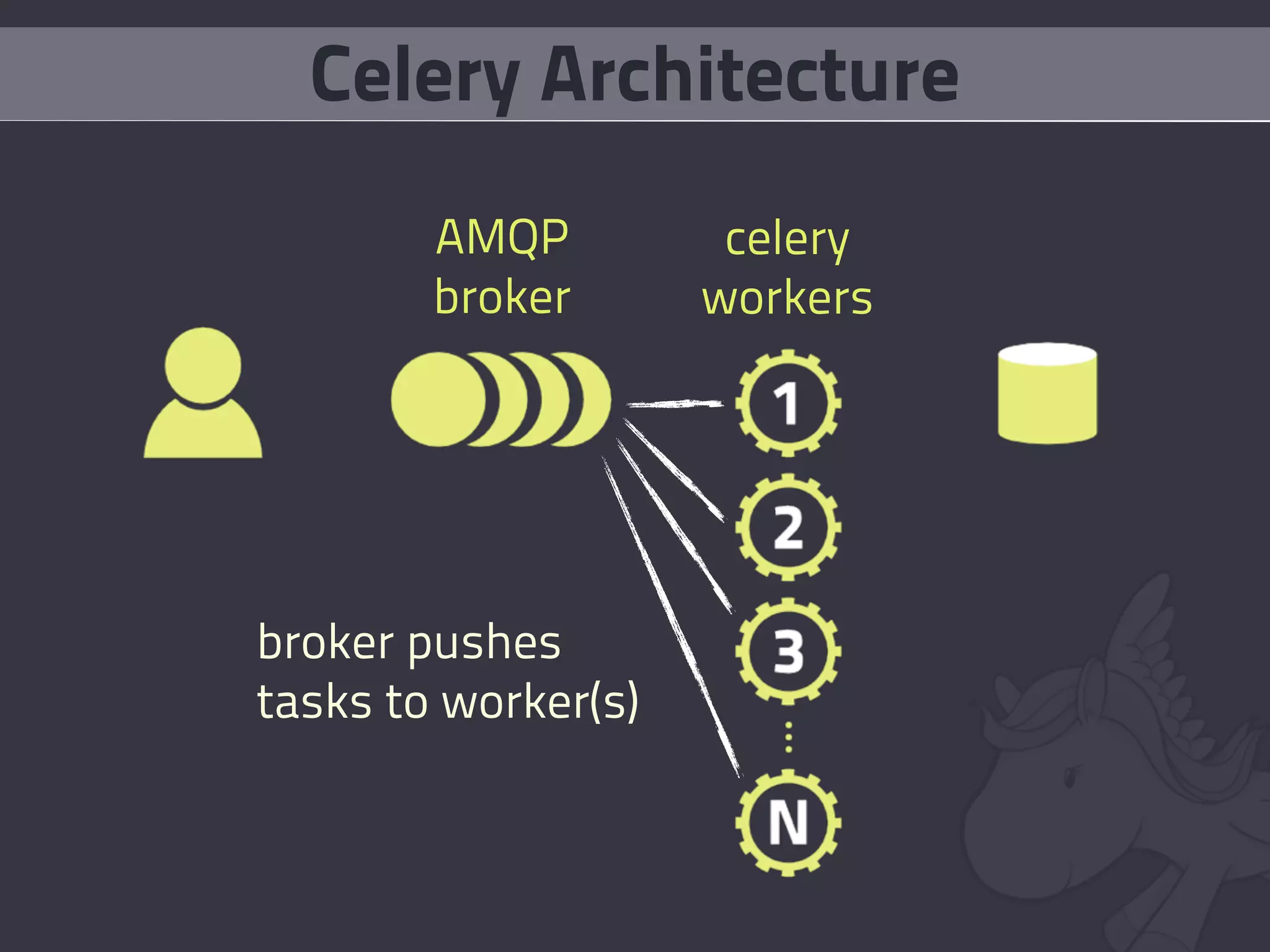

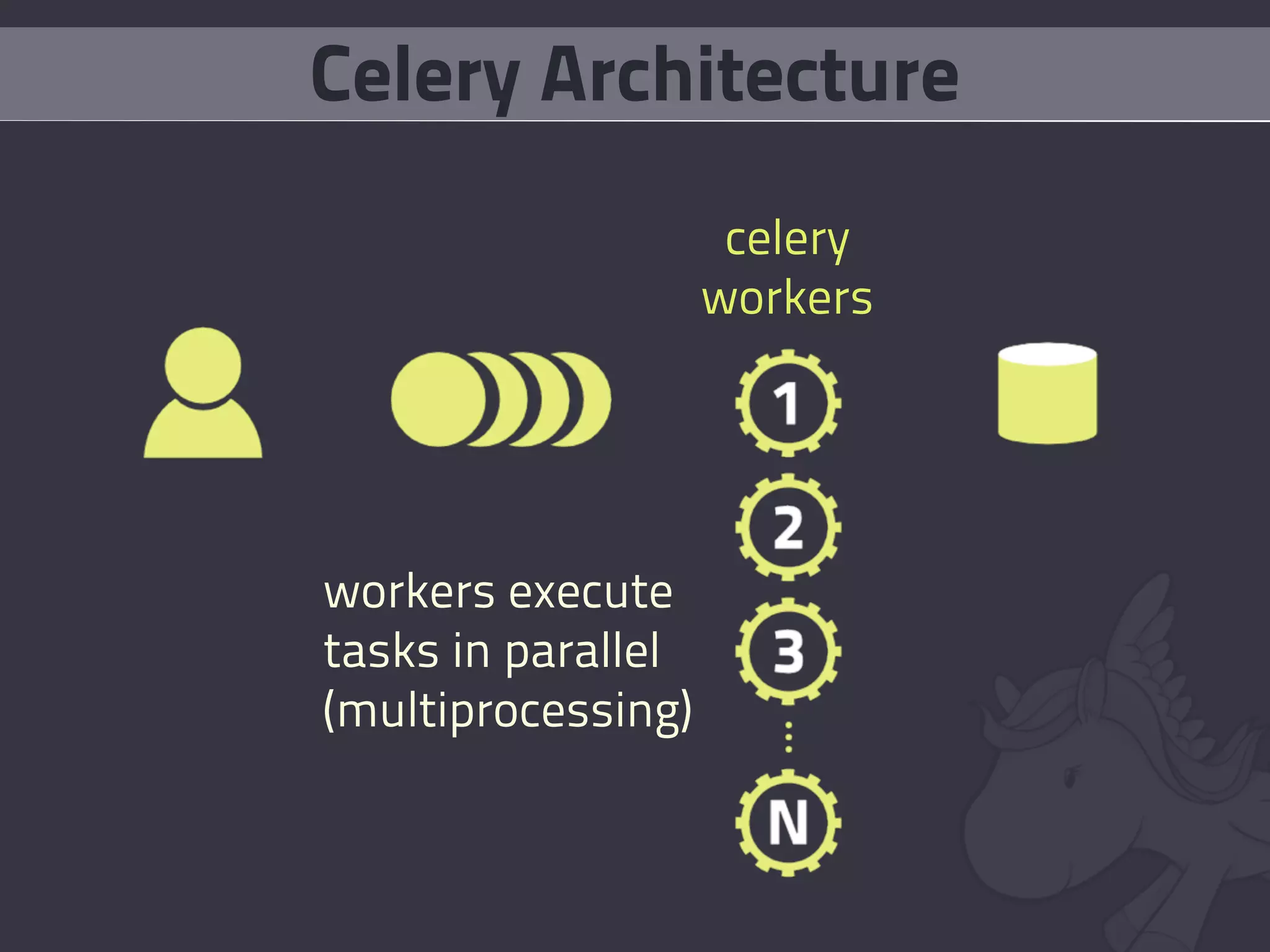

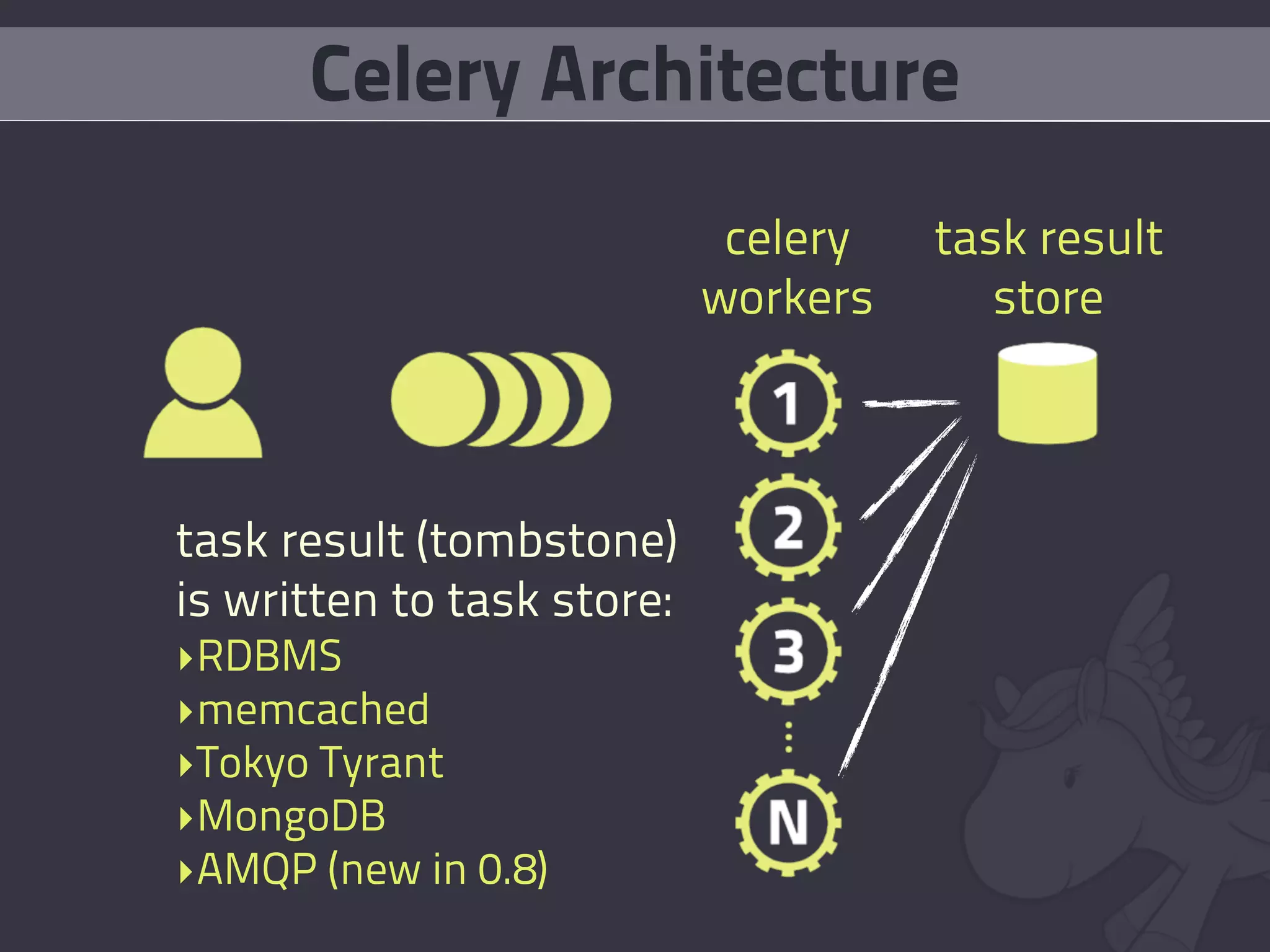

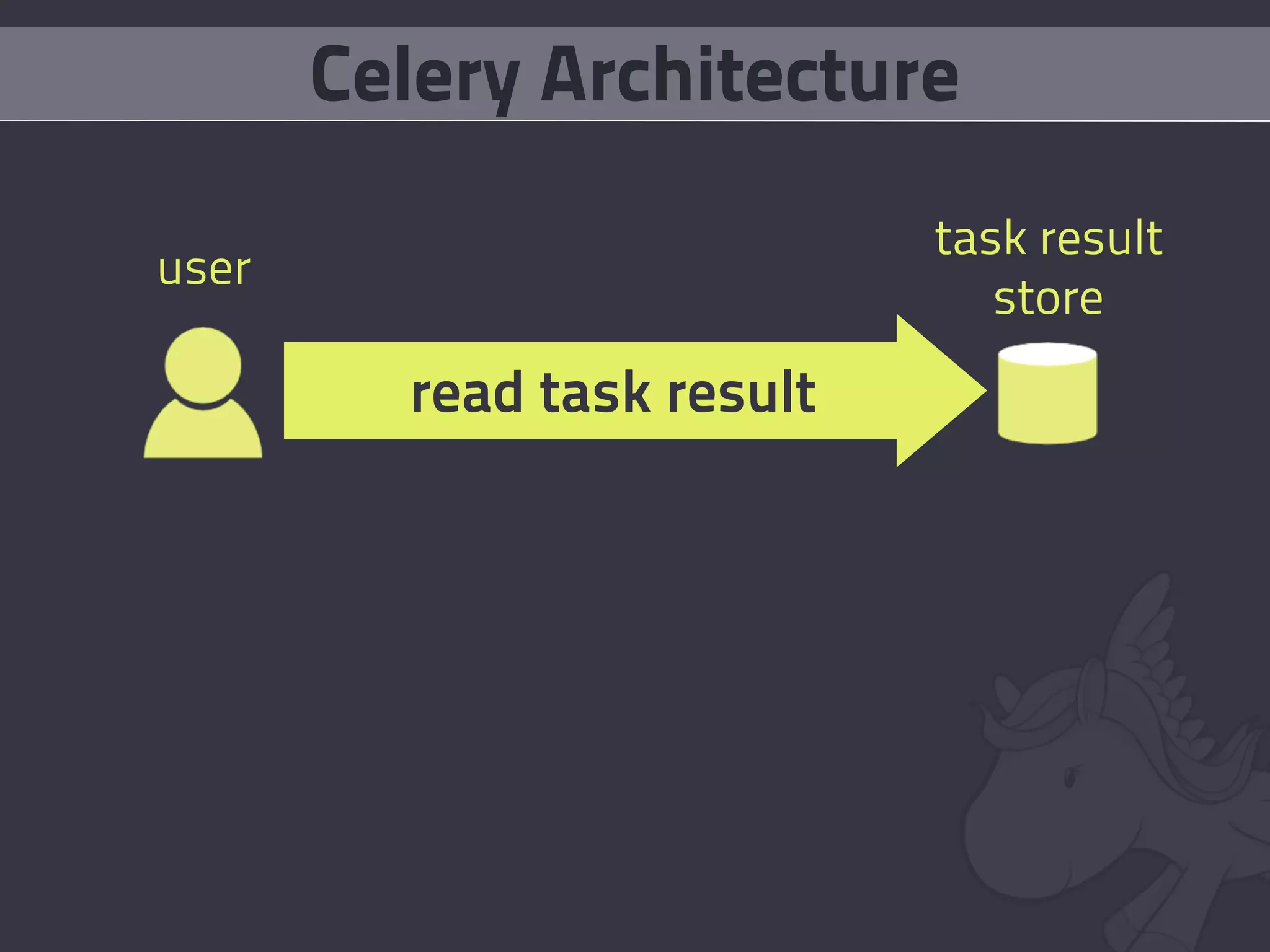

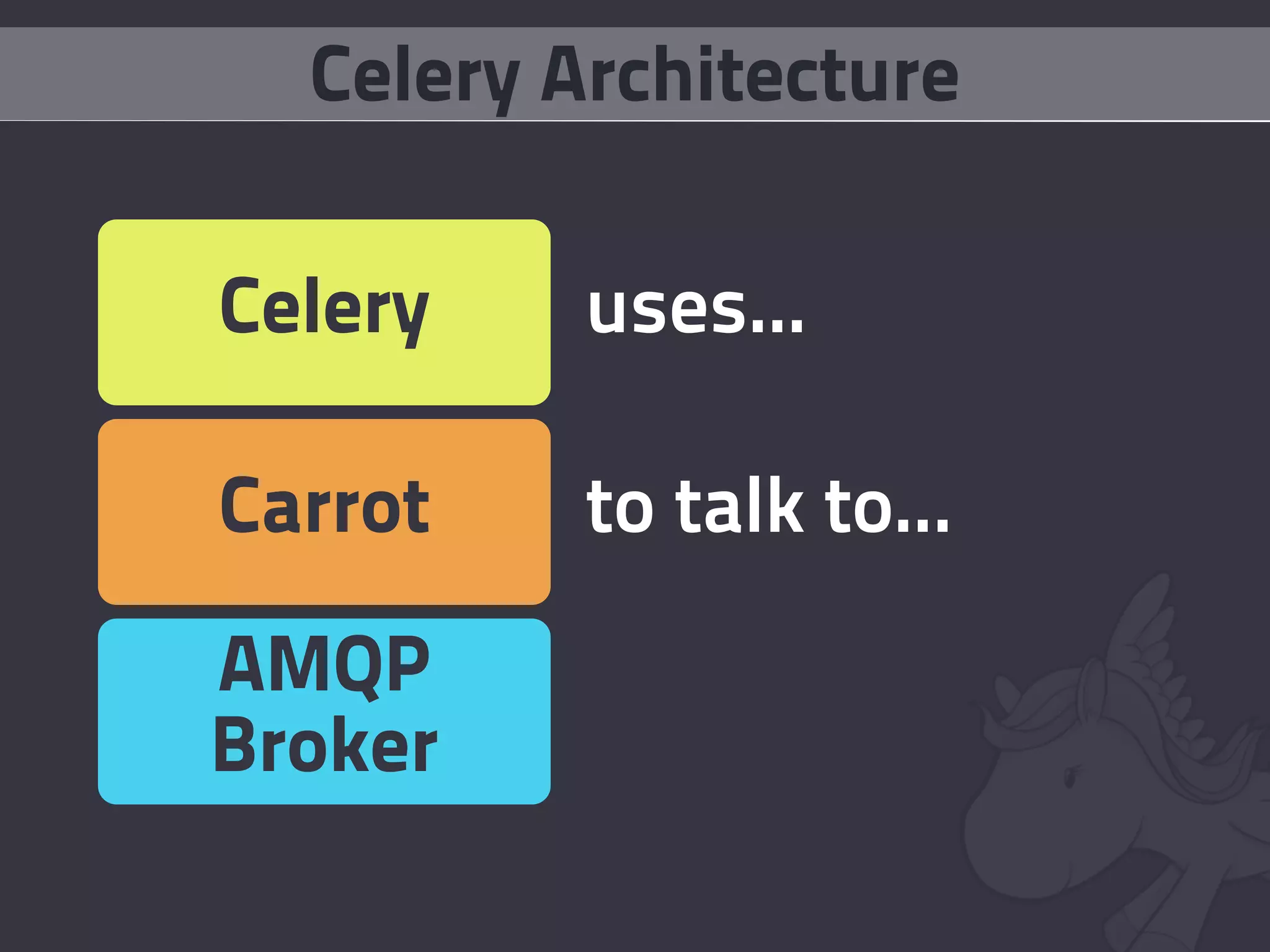

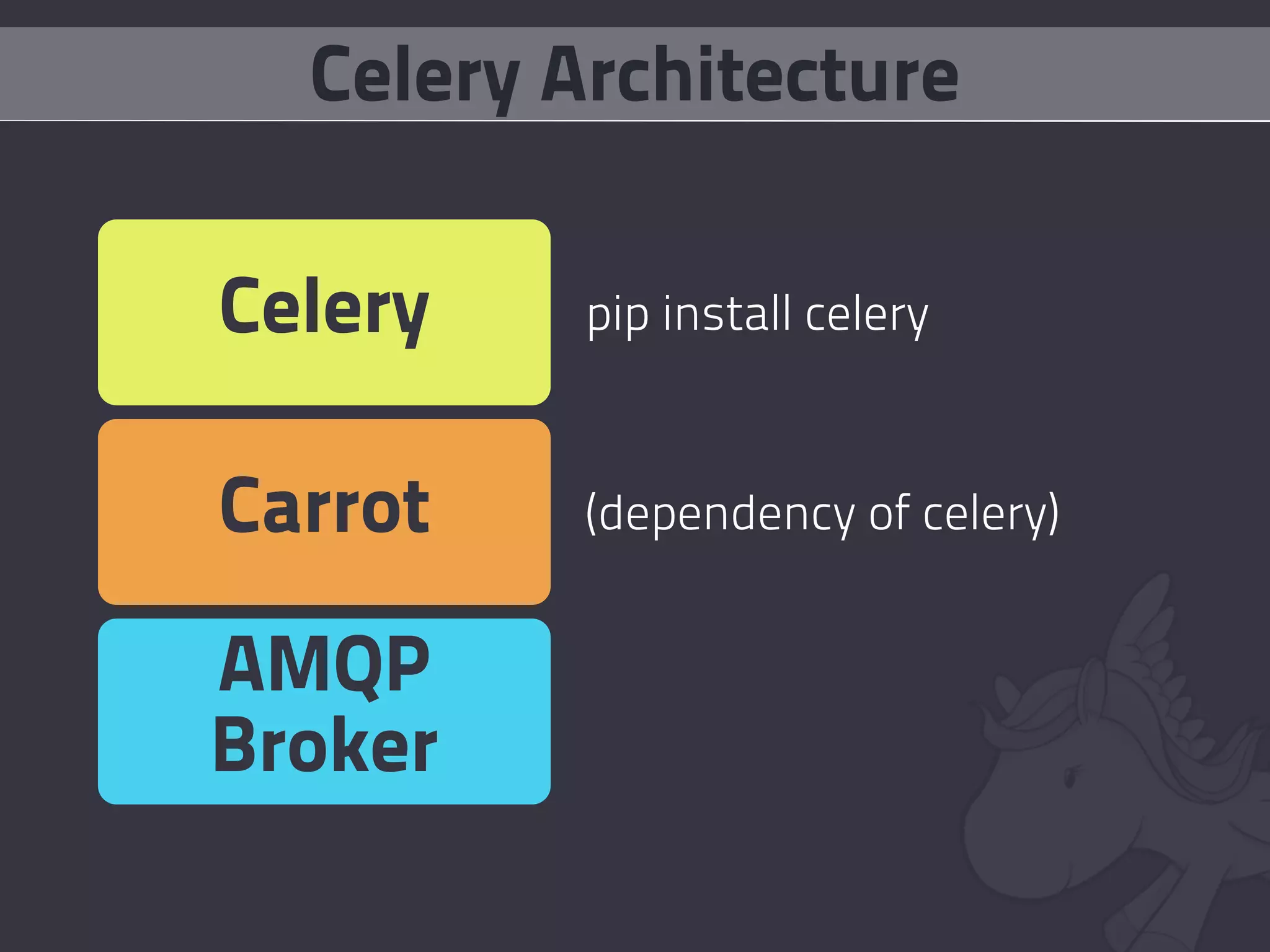

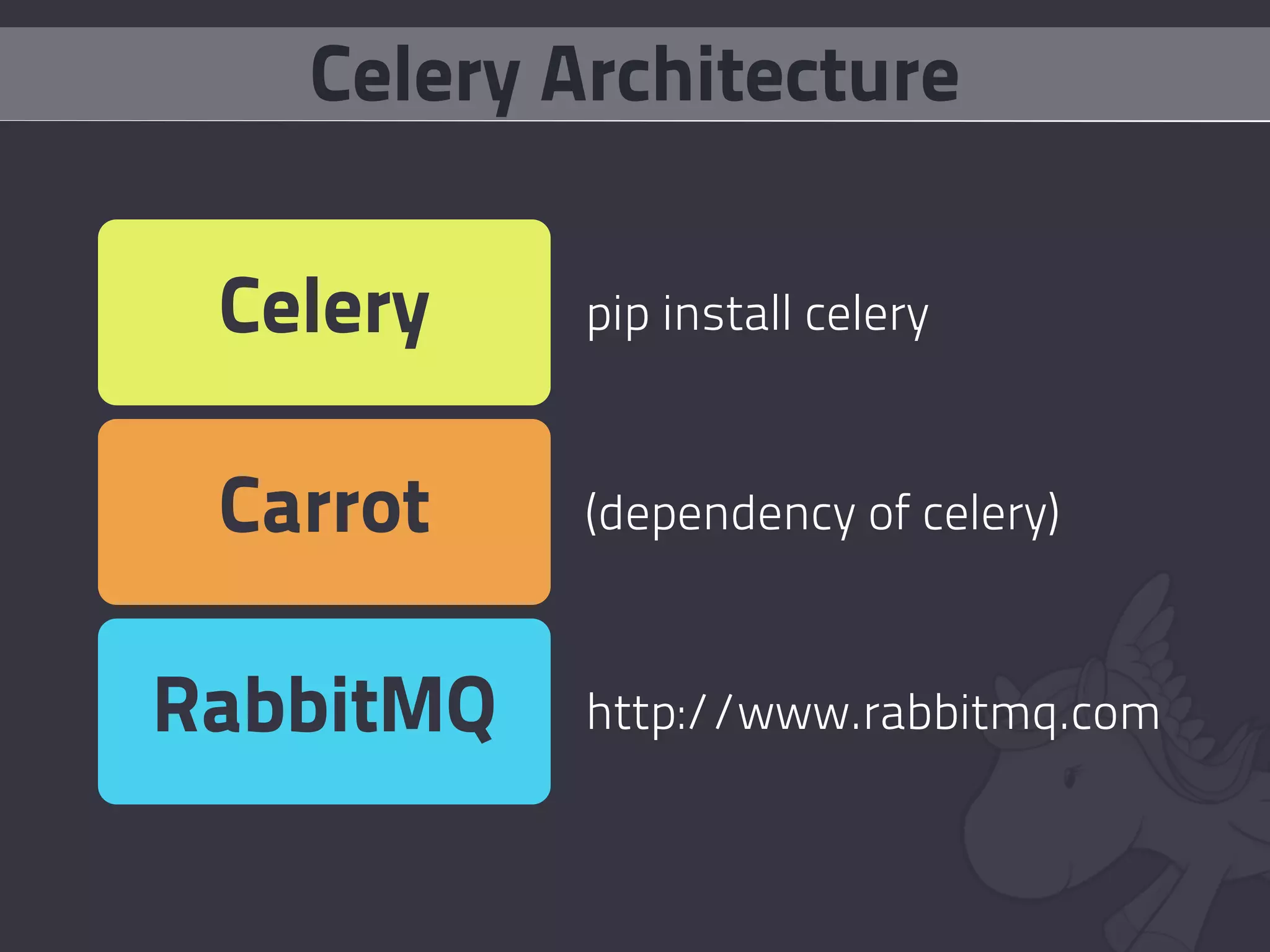

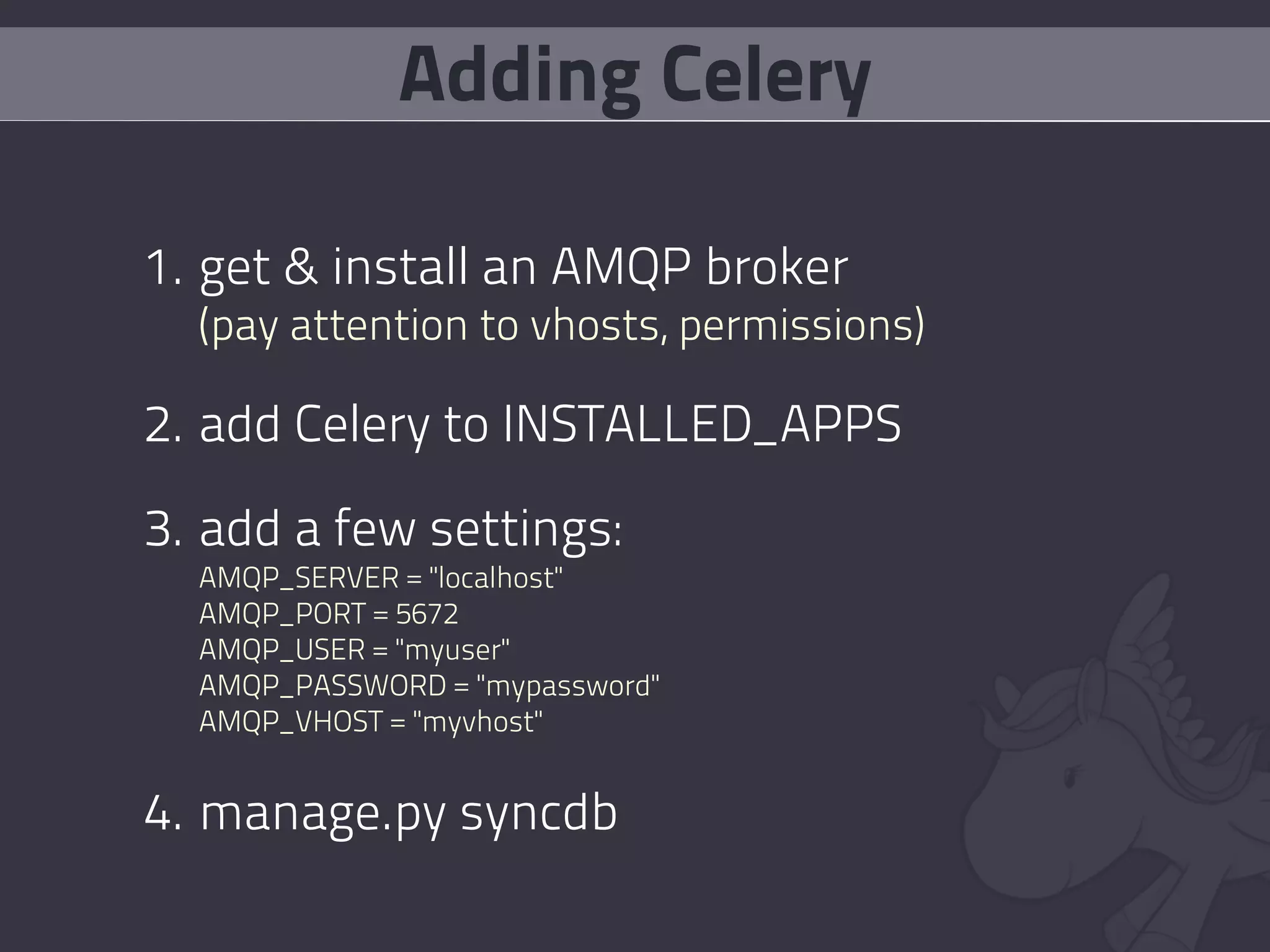

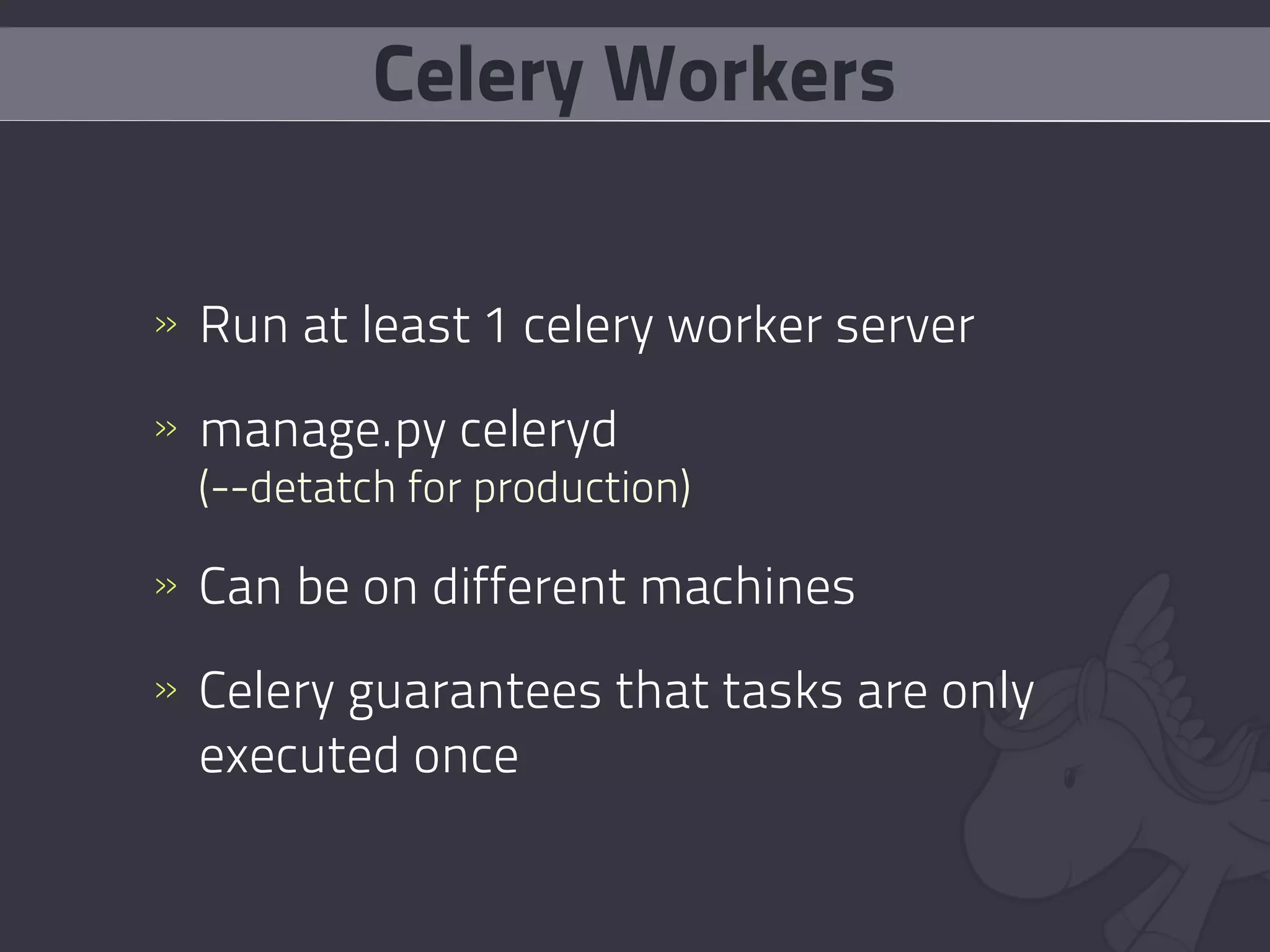

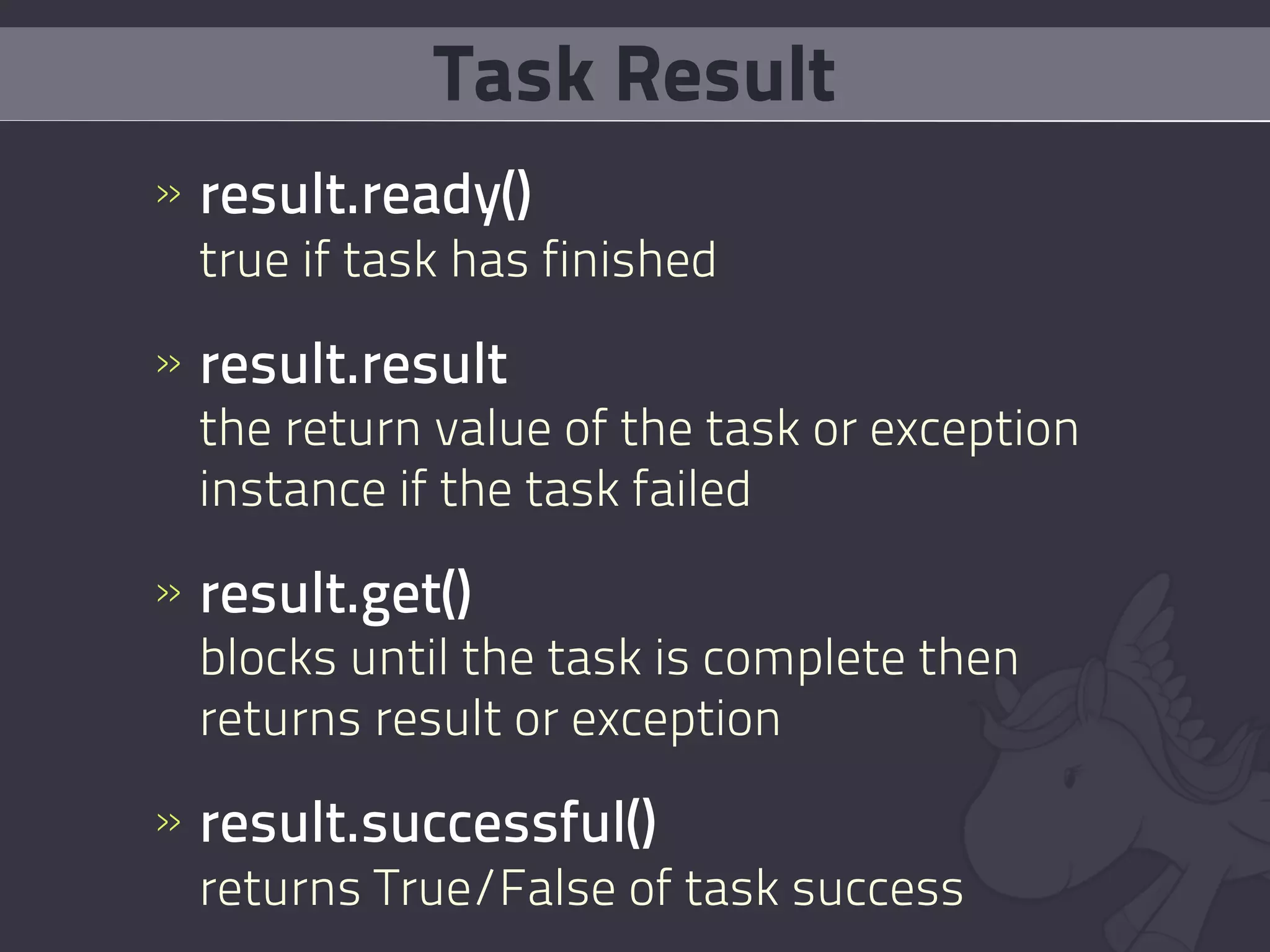

Celery is an asynchronous task queue (job queue) based on distributed message passing. Worker servers receive tasks over a message protocol such as AMQP and execute them asynchronously. This makes Celery useful for processing work in the background, outside the HTTP request/response cycle, as well as for running periodic tasks, interacting with external APIs, and more. A broker such as RabbitMQ delivers tasks to the workers, which execute them in parallel, and task results are stored so they can be retrieved later. Celery provides features such as task retries, task sets, periodic tasks, and Django views for interacting with tasks.
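The broker, worker, and result-store roles described above can be illustrated with a minimal sketch that uses only the Python standard library. This is not Celery's API: `run_tasks`, the in-process `queue.Queue` standing in for the broker, and the `results` dictionary standing in for the result store are all assumptions made for illustration.

```python
import queue
import threading


def run_tasks(jobs, num_workers=3):
    """Run (func, args) jobs on worker threads; return results keyed by job id."""
    broker = queue.Queue()   # stands in for a message broker such as RabbitMQ
    results = {}             # stands in for the stored task results
    lock = threading.Lock()

    def worker():
        while True:
            item = broker.get()
            if item is None:          # sentinel: no more work for this worker
                return
            job_id, func, args = item
            outcome = func(*args)
            with lock:
                results[job_id] = outcome

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for thread in threads:
        thread.start()

    # The "client" side: publish jobs, then one sentinel per worker.
    for job_id, (func, args) in enumerate(jobs):
        broker.put((job_id, func, args))
    for _ in threads:
        broker.put(None)

    for thread in threads:
        thread.join()
    return results
```

Calling `run_tasks([(pow, (2, 3)), (len, ("abc",))])` hands both jobs to the worker threads and returns their results keyed by job id. In a real deployment the broker and workers run as separate processes, often on separate machines, which this single-process sketch does not attempt to model.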

![Task Retries

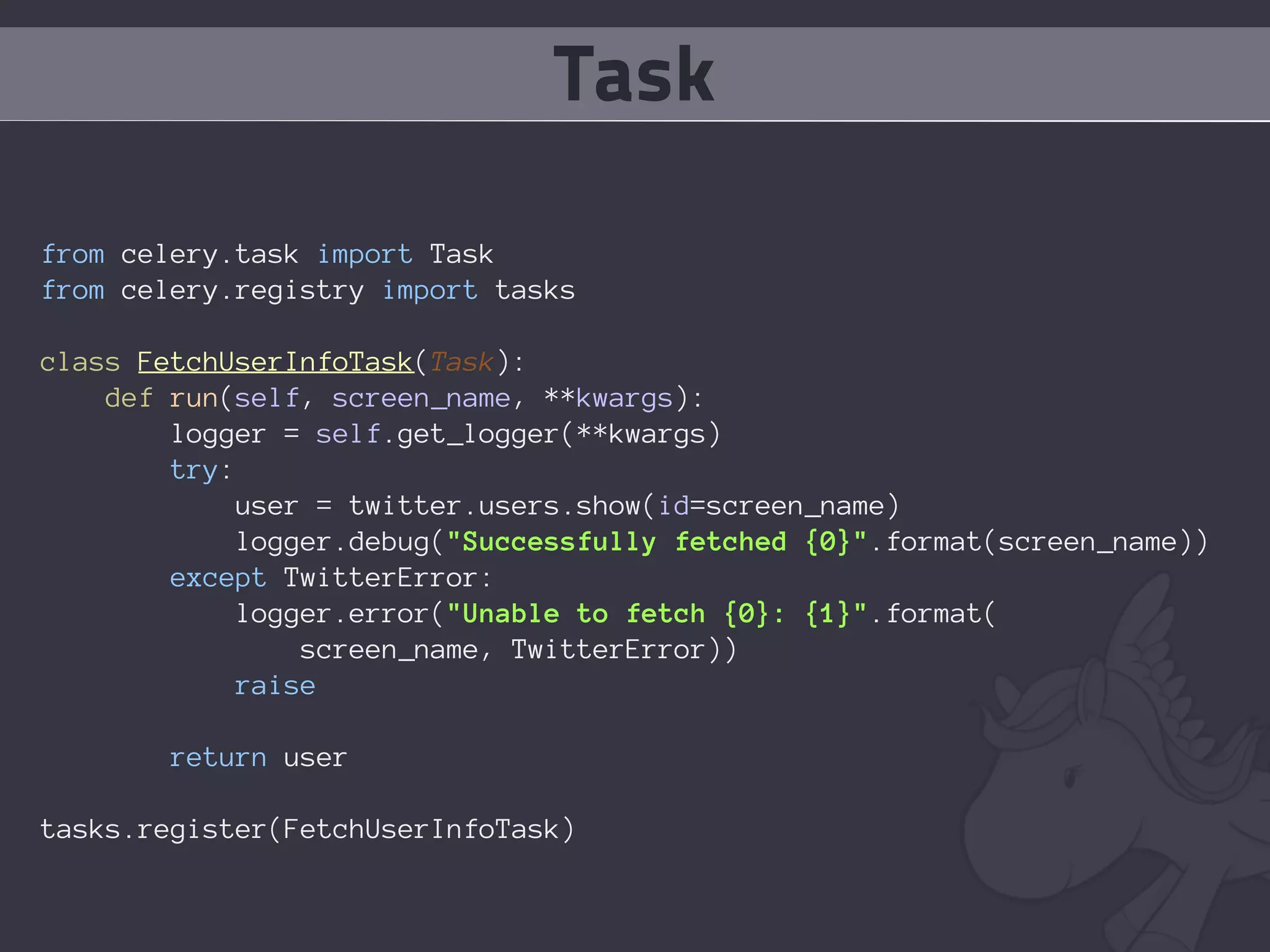

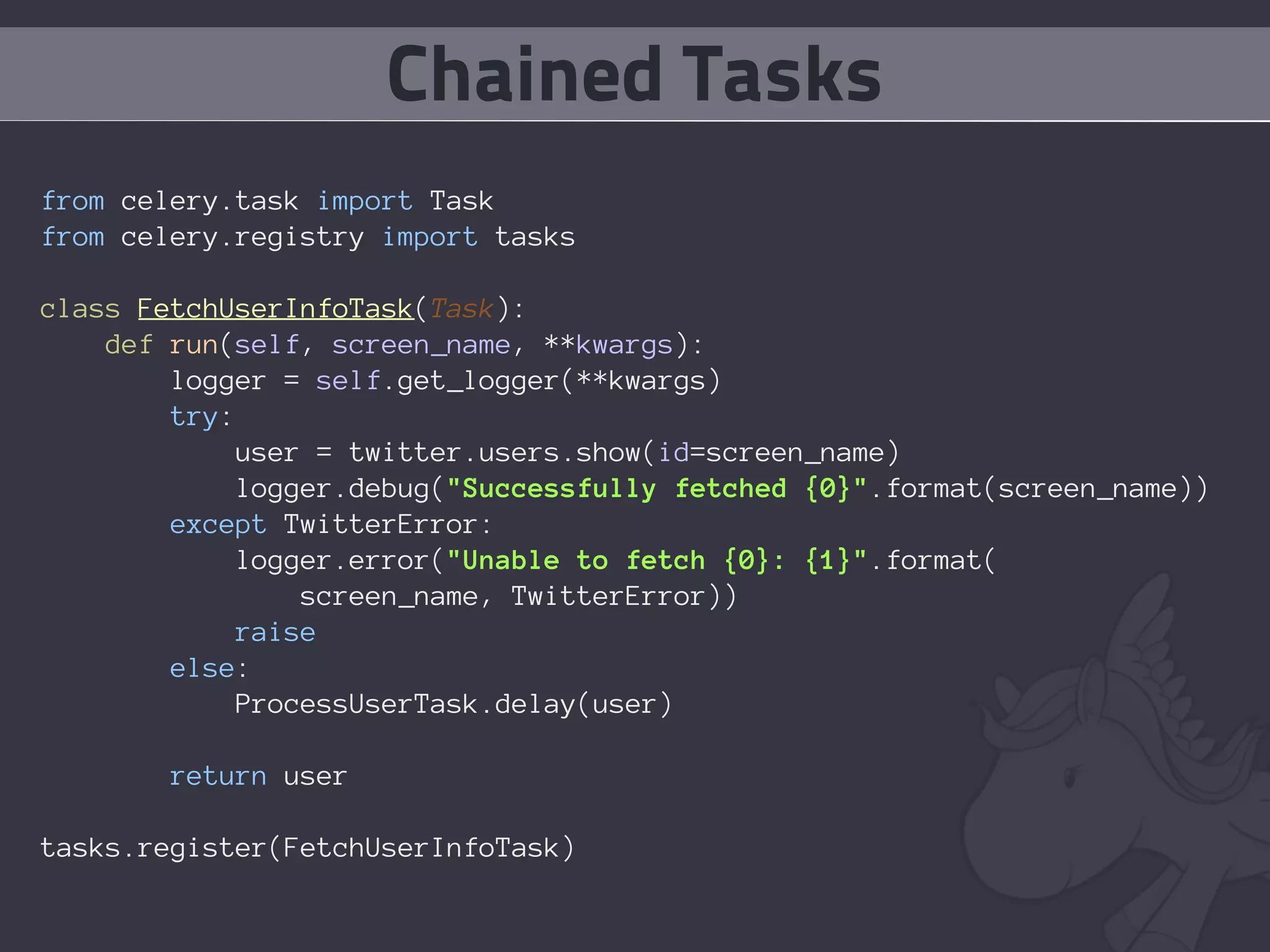

from celery.task import Task

from celery.registry import tasks

class FetchUserInfoTask(Task):

default_retry_delay = 5 * 60 # retry in 5 minutes

max_retries = 5

def run(self, screen_name, **kwargs):

logger = self.get_logger(**kwargs)

try:

user = twitter.users.show(id=screen_name)

logger.debug("Successfully fetched {0}".format(screen_name))

except TwitterError, exc:

self.retry(args=[screen_name,], kwargs=**kwargs, exc)

else:

ProcessUserTask.delay(user)

return user

tasks.register(FetchUserInfoTask)](https://image.slidesharecdn.com/pyweb-celery-090929081406-phpapp01/75/An-Introduction-to-Celery-34-2048.jpg)
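To make the meaning of `max_retries` and `default_retry_delay` concrete, here is a minimal sketch of the same retry policy in plain Python. It is an illustration under stated assumptions, not Celery's implementation: `call_with_retries` and `MaxRetriesExceeded` are hypothetical names, and the sketch sleeps in-process, whereas Celery re-queues the task so the worker is free in the meantime.

```python
import time


class MaxRetriesExceeded(Exception):
    """Hypothetical error raised once every allowed attempt has failed."""


def call_with_retries(func, args=(), max_retries=5, retry_delay=0.0,
                      retry_on=(Exception,)):
    """Call func(*args), retrying up to max_retries times on failure."""
    retries = 0
    while True:
        try:
            return func(*args)
        except retry_on as exc:
            if retries >= max_retries:
                # Out of retries: surface the failure, keeping the cause.
                raise MaxRetriesExceeded(
                    "gave up after {0} retries".format(retries)) from exc
            retries += 1
            time.sleep(retry_delay)  # wait before the next attempt
```

With `max_retries=5` the function is called at most six times: the initial attempt plus five retries.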

![Task Sets](https://image.slidesharecdn.com/pyweb-celery-090929081406-phpapp01/75/An-Introduction-to-Celery-38-2048.jpg)

    >>> from myapp.tasks import FetchUserInfoTask
    >>> from celery.task import TaskSet
    >>> # Each entry pairs one task's positional args with its keyword args.
    >>> ts = TaskSet(FetchUserInfoTask, args=(
    ...     (['ahikman'], {}),
    ...     (['idangazit'], {}),
    ...     (['daonb'], {}),
    ...     (['dibau_naum_h'], {})))
    >>> ts_result = ts.run()
    >>> # Wait for every task to finish and collect the return values.
    >>> list_of_return_values = ts_result.join()
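A task set applies one task to many argument sets and then gathers the results. The same fan-out and join pattern can be sketched with the standard library's `concurrent.futures`. This is only an analogy, not Celery's API: `run_task_set` is a hypothetical helper, and it uses local threads where Celery would use remote workers.

```python
from concurrent.futures import ThreadPoolExecutor


def run_task_set(func, arg_sets, max_workers=4):
    """Call func(*args, **kwargs) for each (args, kwargs) pair in parallel.

    Returns the results as a list in the same order as arg_sets.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Fan out: submit one call per argument set.
        futures = [pool.submit(func, *args, **kwargs)
                   for args, kwargs in arg_sets]
        # Join: block until each call finishes, preserving input order.
        return [future.result() for future in futures]
```

For example, `run_task_set(str.upper, [(["ahikman"], {}), (["daonb"], {})])` runs both calls on the pool and returns their results in submission order.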