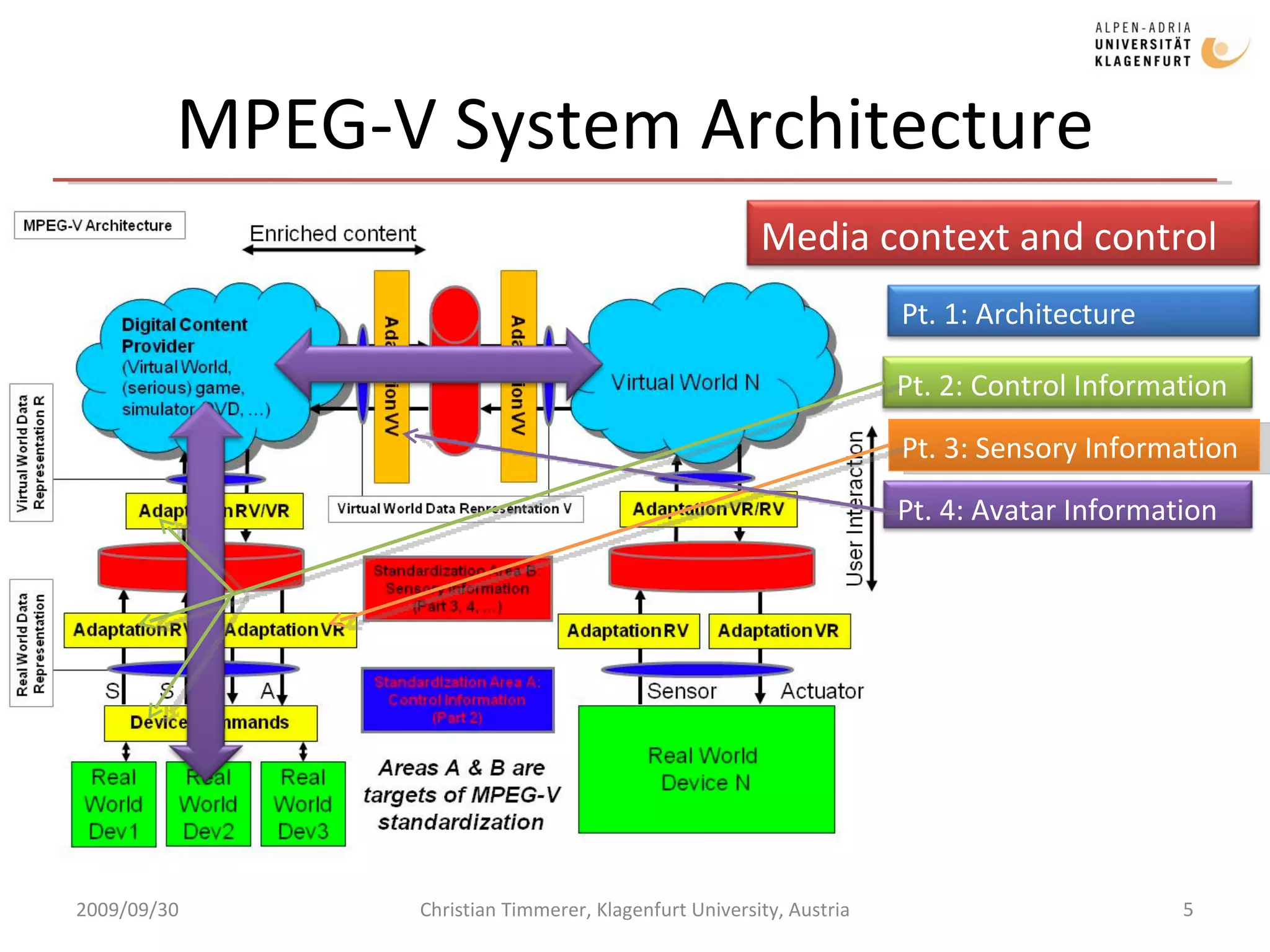

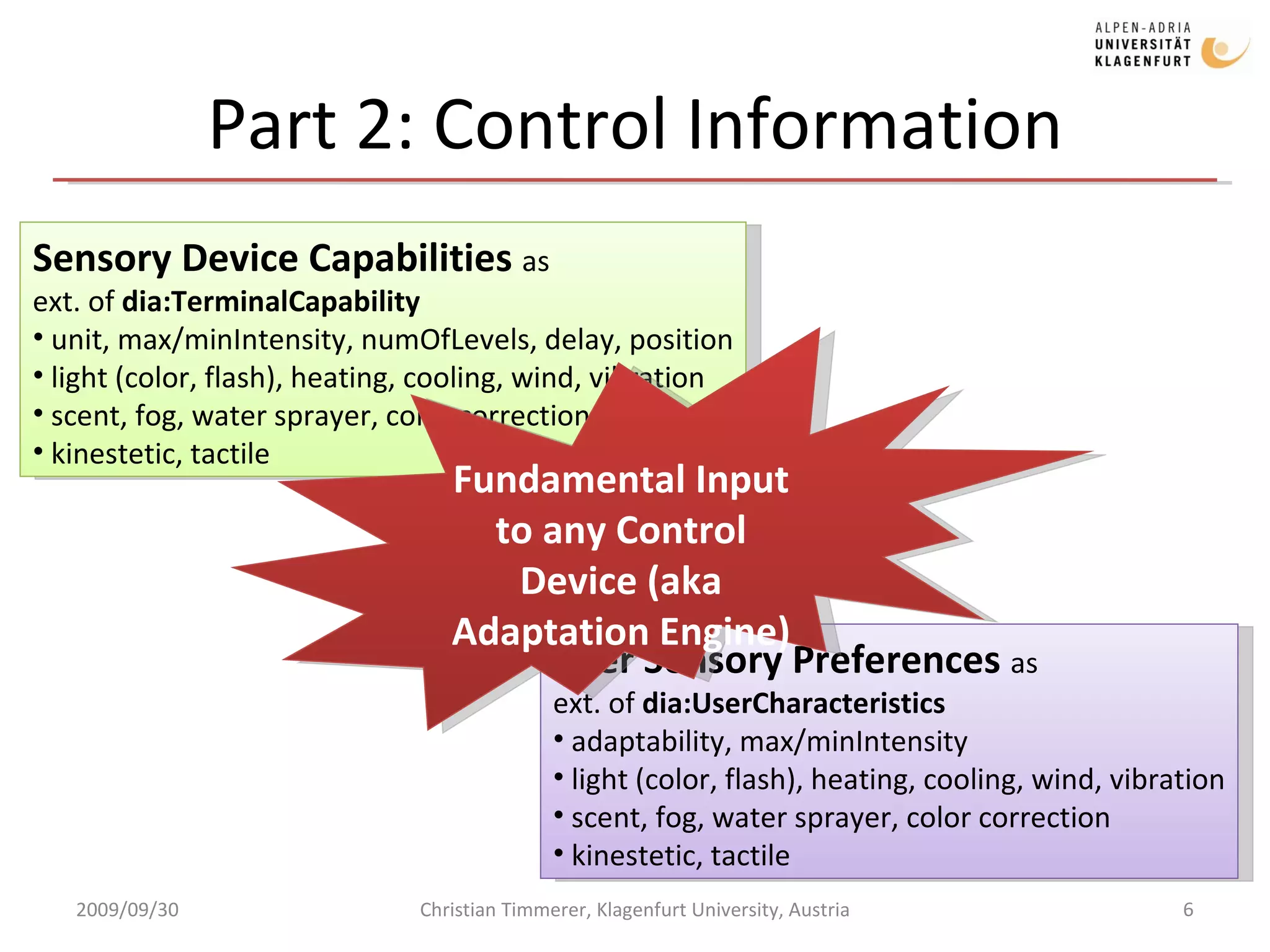

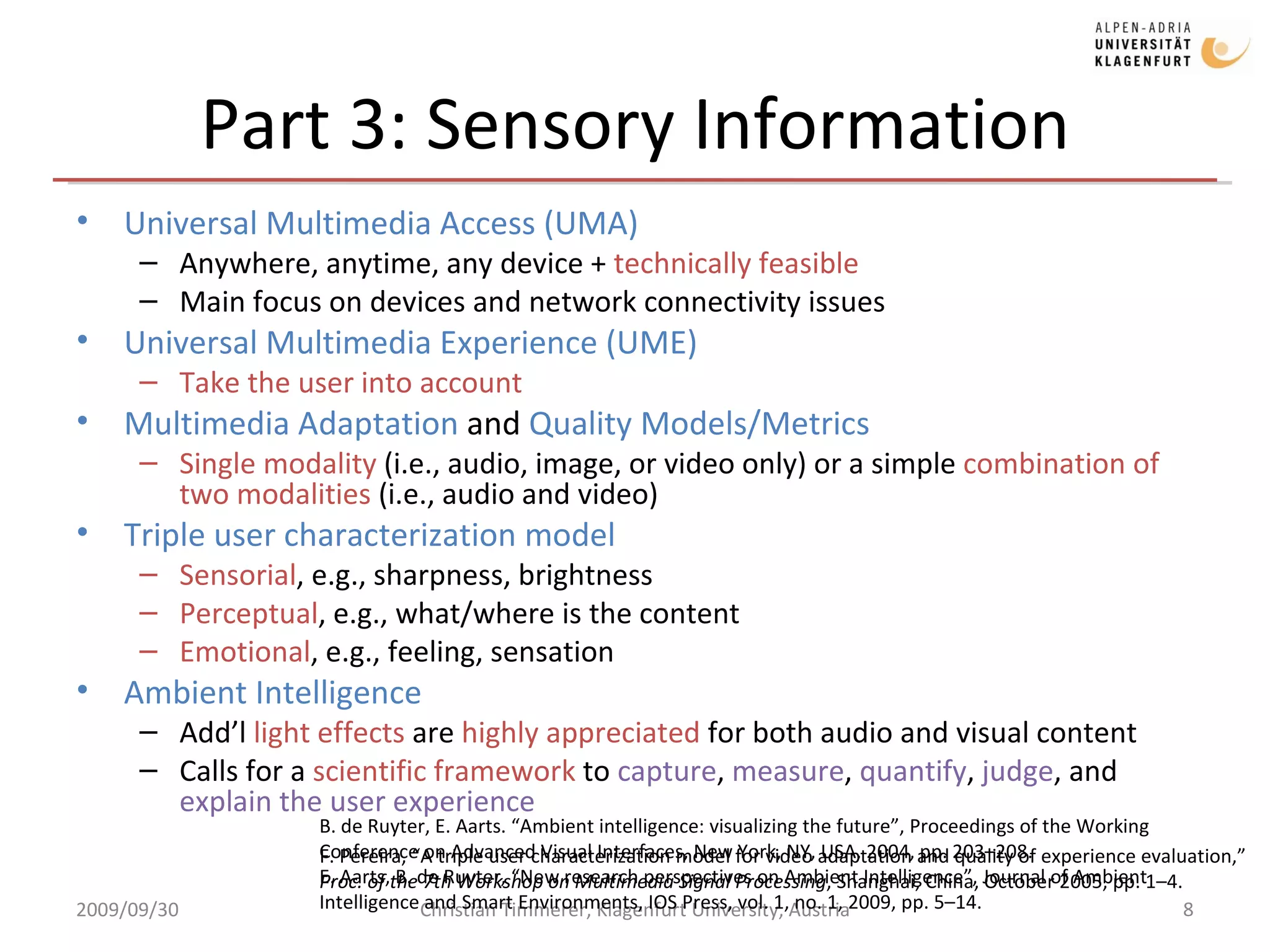

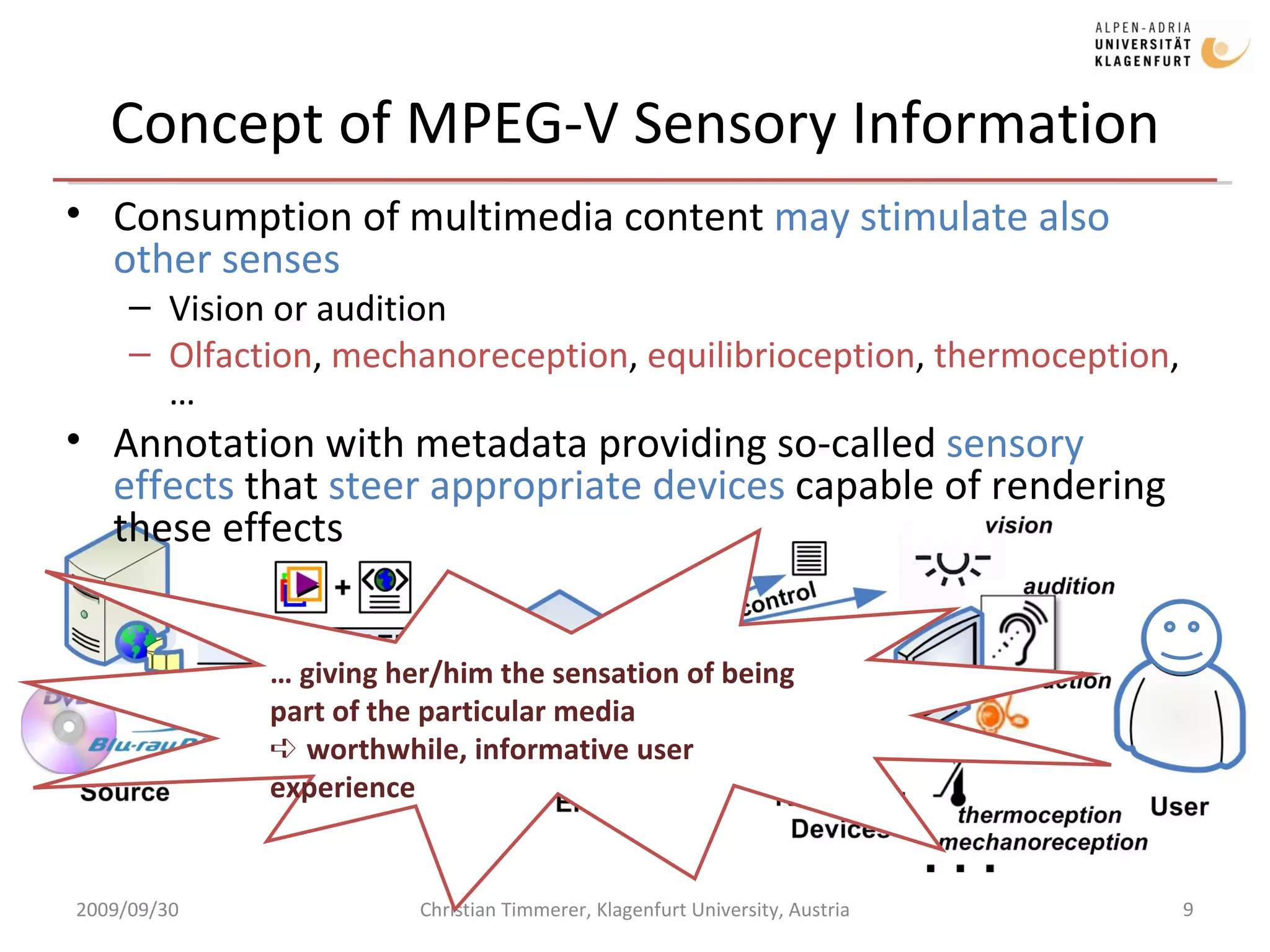

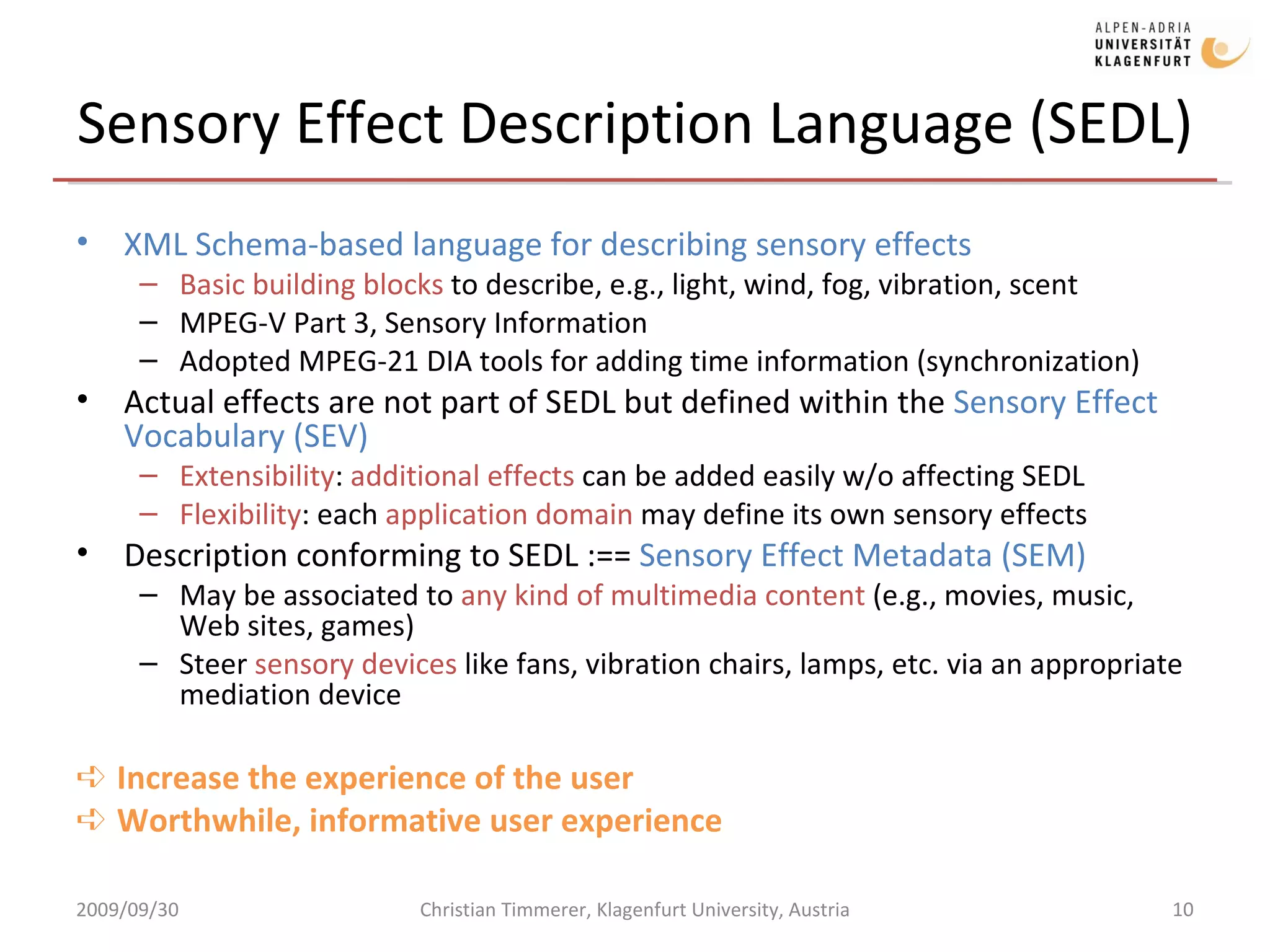

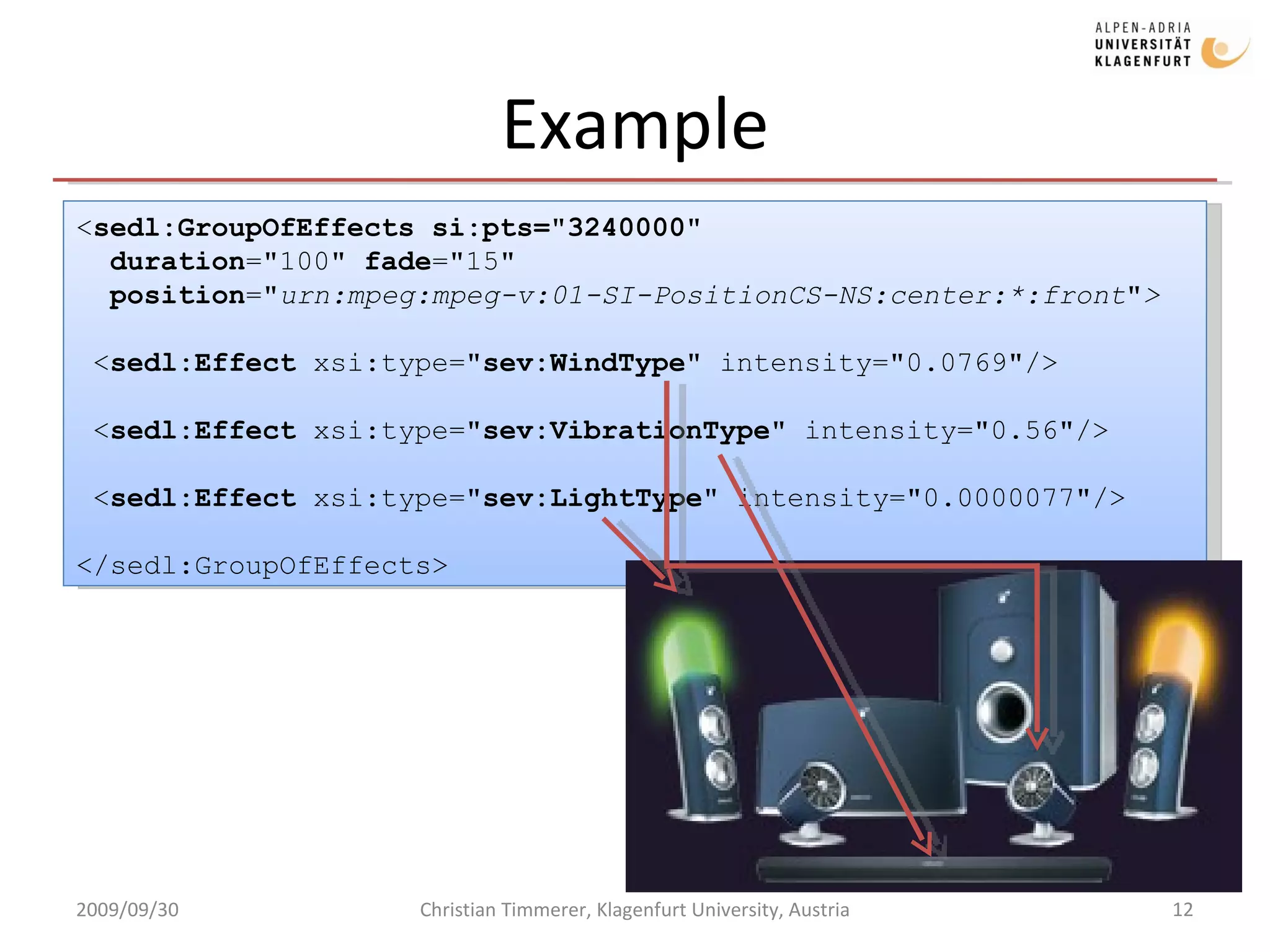

This presentation introduces MPEG-V, a standard for interfacing with virtual worlds. MPEG-V comprises four parts that define the system architecture and the representations used to exchange information between virtual worlds, and between virtual worlds and the real world. Part 3, Sensory Information, defines the Sensory Effect Description Language (SEDL). SEDL annotates multimedia content with sensory effect metadata, which controls sensory devices and so enhances the user experience. The presentation gives an overview of MPEG-V and its two goals: interoperability, and a better user experience through sensory stimulation tied to multimedia content.

![Sensory Effect Description Language (cont’d)](https://image.slidesharecdn.com/qomex2009final-090930090331-phpapp02/75/Interfacing-with-Virtual-Worlds-11-2048.jpg)

Slide: Sensory Effect Description Language (cont’d), 2009/09/30, Christian Timmerer, Klagenfurt University, Austria. The slide gives the grammar of a Sensory Effect Metadata (SEM) description:

- `SEM ::= [DescriptionMetadata] (Declarations|GroupOfEffects|Effect|ReferenceEffect)+`
- `Declarations ::= (GroupOfEffects|Effect|Parameter)+`
- `GroupOfEffects ::= timestamp EffectDefinition EffectDefinition (EffectDefinition)*`
- `Effect ::= timestamp EffectDefinition`
- `EffectDefinition ::= [activate][duration][fade][alt][priority][intensity][position][adaptability]`
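The SEM grammar on the slide above maps naturally onto a few data types. The Python sketch below is an illustrative model only, not the normative MPEG-V representation (the standard defines SEDL as XML Schema). It enforces the grammar's cardinalities: `+` means at least one item, and a `GroupOfEffects` needs at least two `EffectDefinition`s. The attribute value types and units, the shapes of `Parameter`, `ReferenceEffect`, and `DescriptionMetadata`, and the `timeline()` helper are all hypothetical, because the grammar only names these elements.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class EffectDefinition:
    # Every attribute is optional ("[...]" in the grammar).
    # Value types and units are assumptions for illustration only.
    activate: Optional[bool] = None
    duration: Optional[float] = None   # assumed: seconds
    fade: Optional[float] = None       # assumed: seconds
    alt: Optional[str] = None          # assumed: id of an alternative effect
    priority: Optional[int] = None
    intensity: Optional[float] = None  # assumed: normalized to 0..1
    position: Optional[str] = None
    adaptability: Optional[str] = None


@dataclass
class Effect:
    # Effect ::= timestamp EffectDefinition
    timestamp: float
    definition: EffectDefinition


@dataclass
class GroupOfEffects:
    # GroupOfEffects ::= timestamp EffectDefinition EffectDefinition (EffectDefinition)*
    timestamp: float
    definitions: list[EffectDefinition]

    def __post_init__(self) -> None:
        if len(self.definitions) < 2:
            raise ValueError("GroupOfEffects needs at least two EffectDefinitions")


@dataclass
class Parameter:
    # Hypothetical shape: a named value that declared effects may reuse.
    name: str
    value: object


@dataclass
class ReferenceEffect:
    # Hypothetical shape: a timestamped reference to a declared effect by id.
    timestamp: float
    ref: str


@dataclass
class Declarations:
    # Declarations ::= (GroupOfEffects | Effect | Parameter)+
    items: list[Union[GroupOfEffects, Effect, Parameter]]

    def __post_init__(self) -> None:
        if not self.items:
            raise ValueError("Declarations needs at least one item")


@dataclass
class SEM:
    # SEM ::= [DescriptionMetadata] (Declarations|GroupOfEffects|Effect|ReferenceEffect)+
    items: list[Union[Declarations, GroupOfEffects, Effect, ReferenceEffect]]
    description_metadata: Optional[dict[str, str]] = None  # hypothetical shape

    def __post_init__(self) -> None:
        if not self.items:
            raise ValueError("SEM needs at least one item")

    def timeline(self) -> list[tuple[float, EffectDefinition]]:
        """Hypothetical helper: flatten top-level Effects and GroupOfEffects
        into (timestamp, definition) pairs sorted by time. Declarations and
        ReferenceEffects are skipped; resolving them is not modeled here."""
        out: list[tuple[float, EffectDefinition]] = []
        for item in self.items:
            if isinstance(item, Effect):
                out.append((item.timestamp, item.definition))
            elif isinstance(item, GroupOfEffects):
                out.extend((item.timestamp, d) for d in item.definitions)
        return sorted(out, key=lambda pair: pair[0])


# Usage: a group of two effects at t=5.0 and a single effect at t=1.0.
sem = SEM(items=[
    GroupOfEffects(timestamp=5.0, definitions=[
        EffectDefinition(activate=True, intensity=0.8),
        EffectDefinition(activate=True, duration=2.0),
    ]),
    Effect(timestamp=1.0, definition=EffectDefinition(activate=True, fade=0.5)),
])
print([t for t, _ in sem.timeline()])  # [1.0, 5.0, 5.0]
```

Validating cardinalities in `__post_init__` rejects descriptions the grammar would not derive, such as a one-member group, at construction time rather than when a device tries to render them.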

![Thank you for your attention](https://image.slidesharecdn.com/qomex2009final-090930090331-phpapp02/75/Interfacing-with-Virtual-Worlds-16-2048.jpg)

Thank you for your attention. Questions, comments, etc. are welcome.

Contact: Ass.-Prof. Dipl.-Ing. Dr. Christian Timmerer, Klagenfurt University, Department of Information Technology (ITEC), Universitätsstrasse 65-67, A-9020 Klagenfurt, Austria; [email_address]; http://research.timmerer.com/; Tel: +43/463/2700 3621; Fax: +43/463/2700 3699.

© Copyright: Christian Timmerer