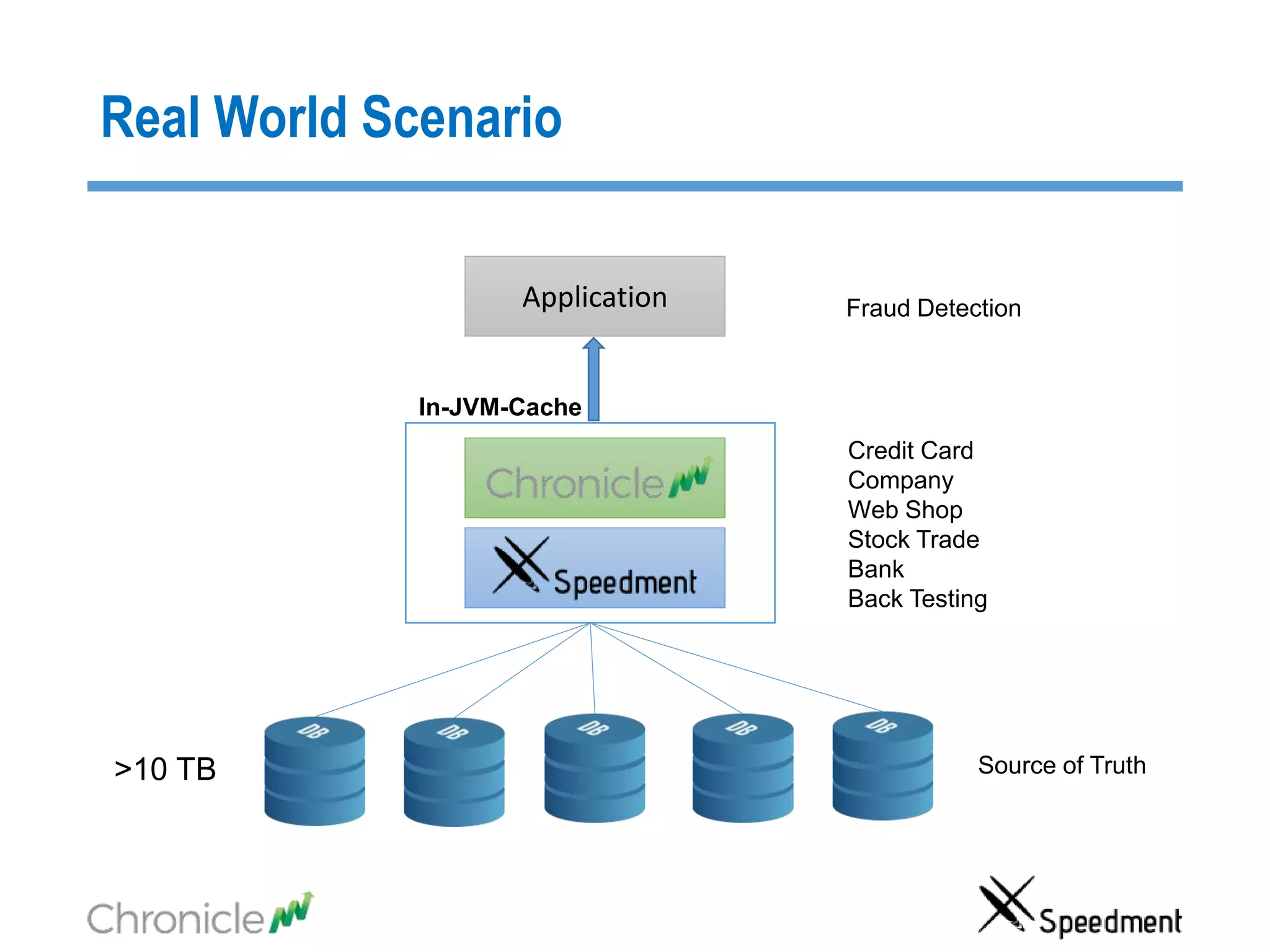

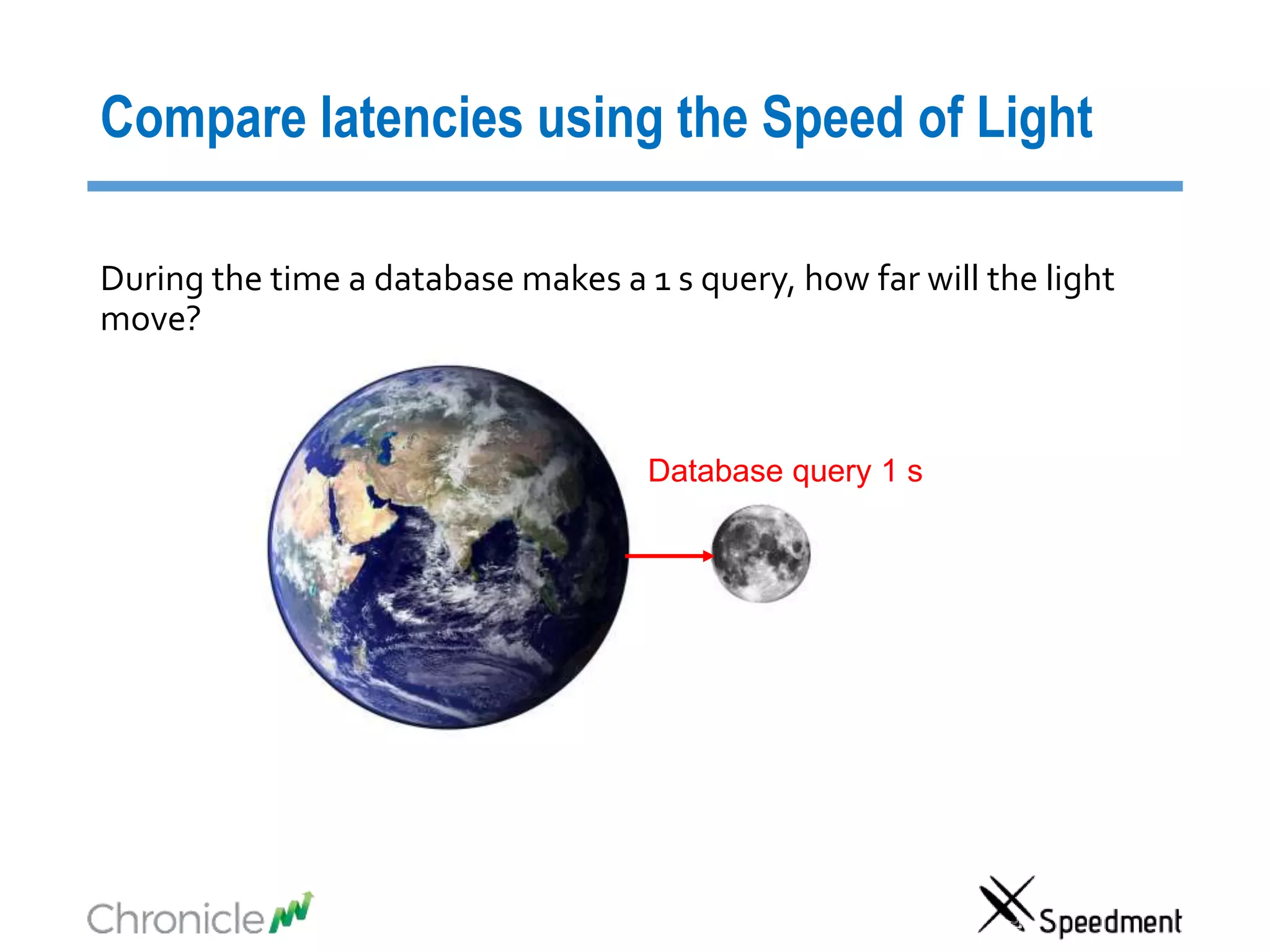

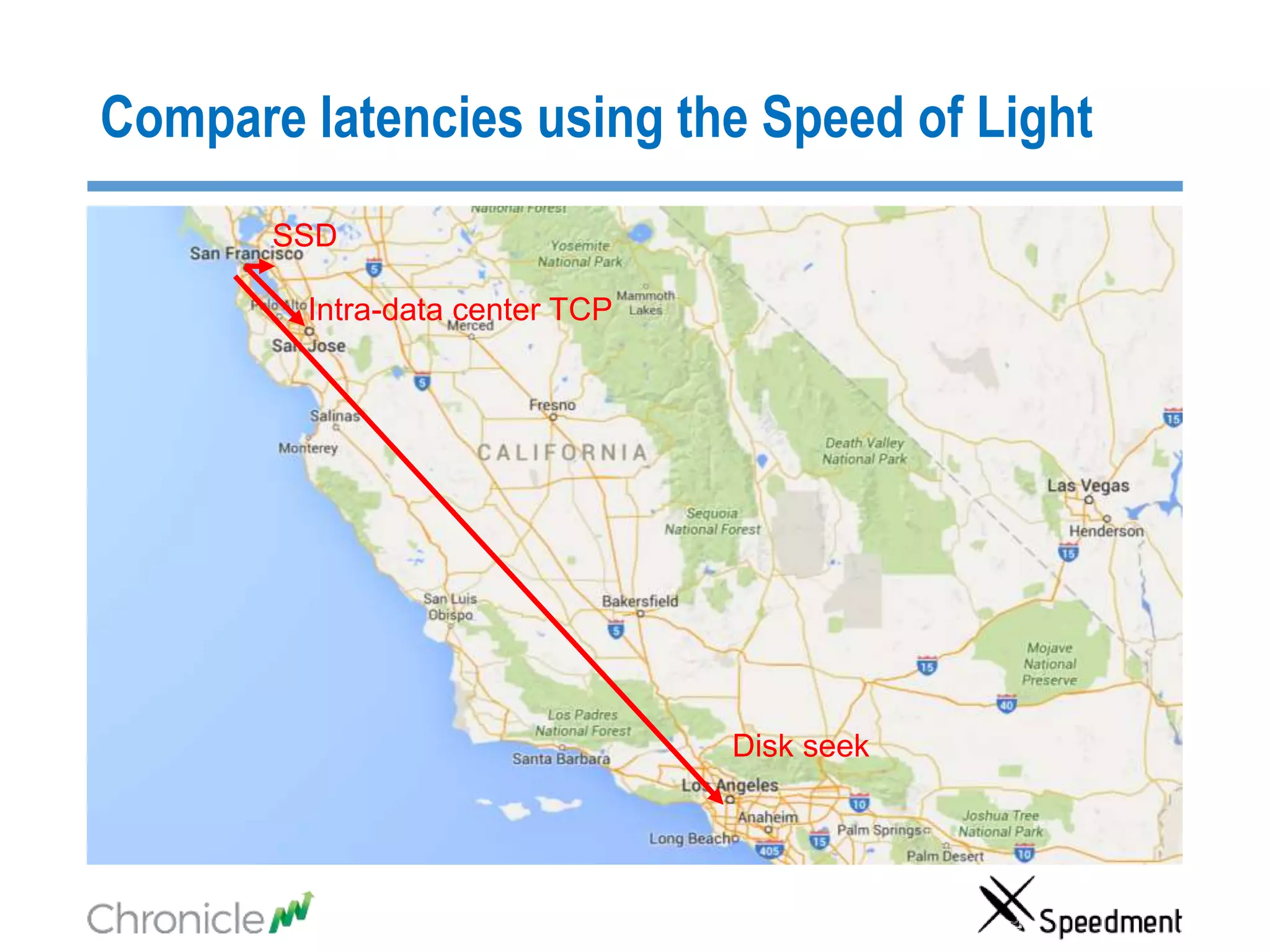

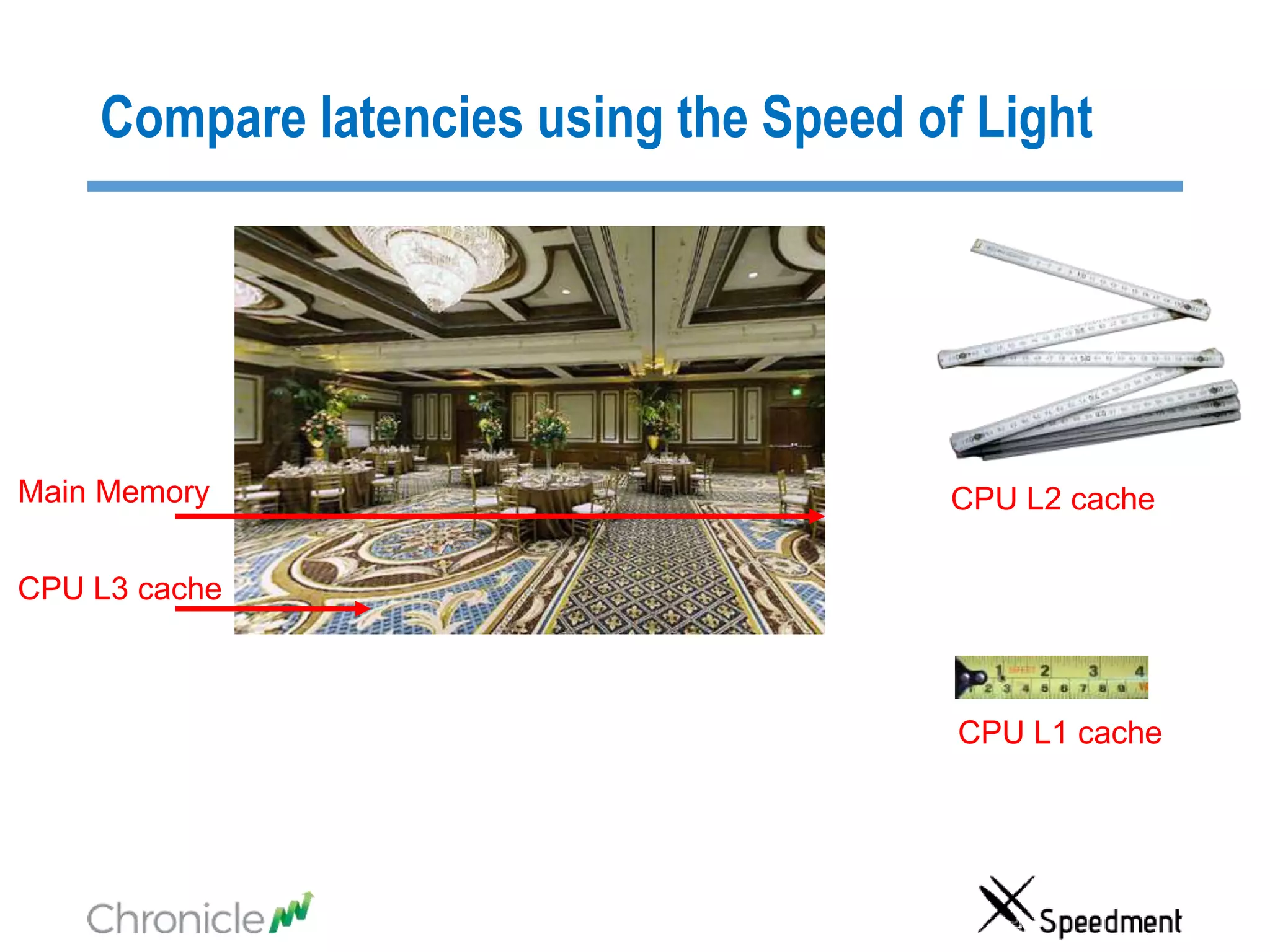

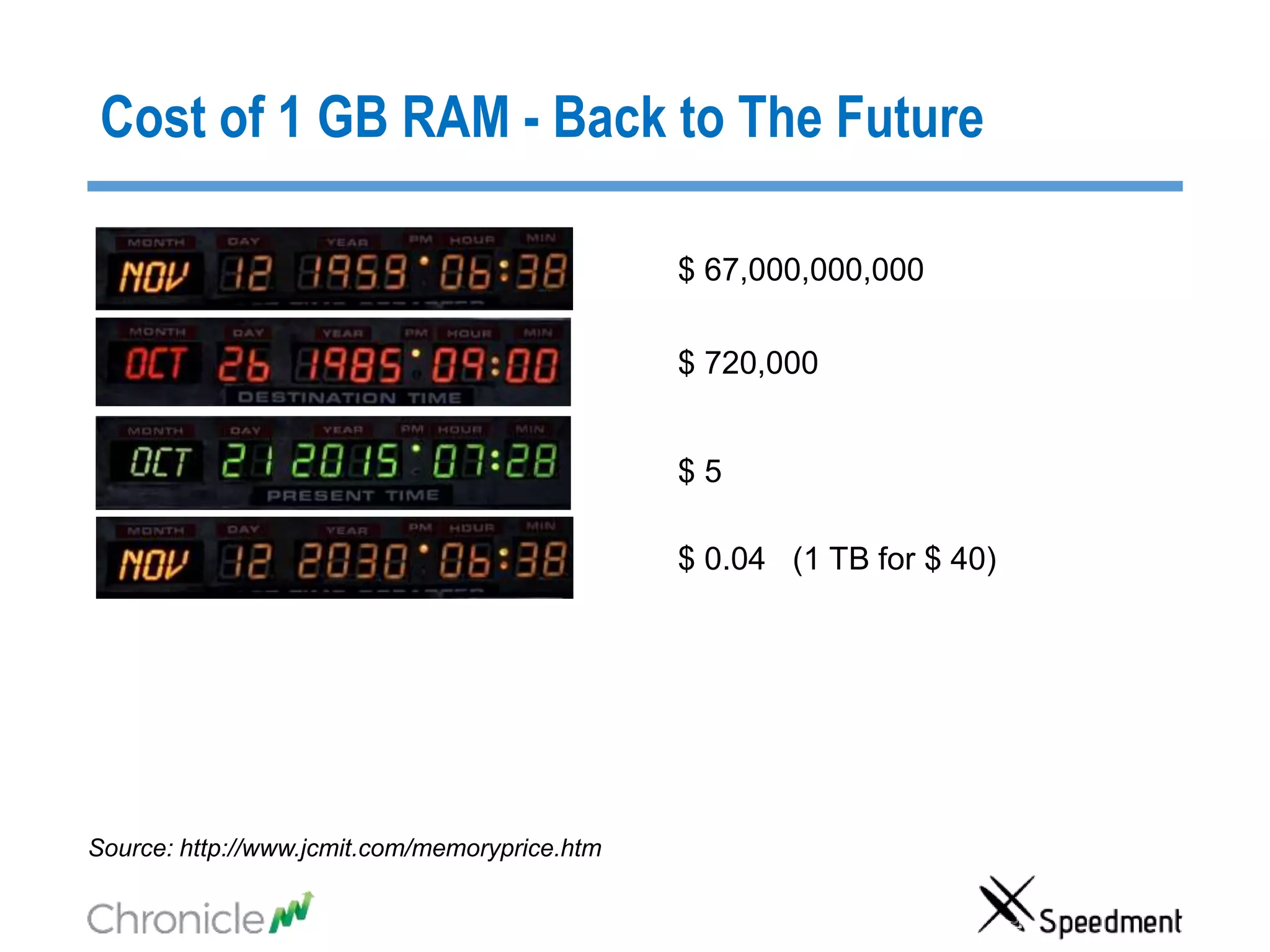

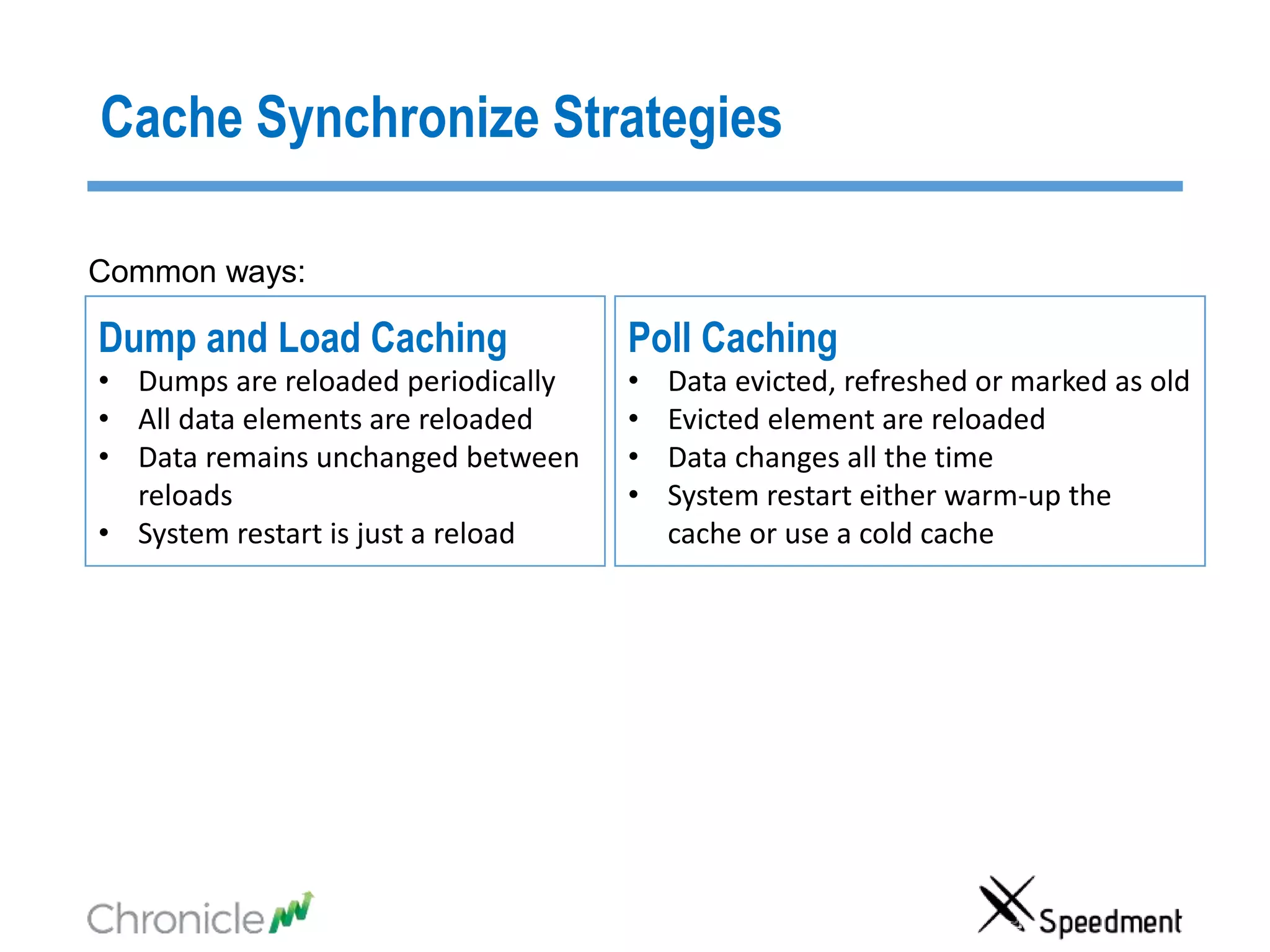

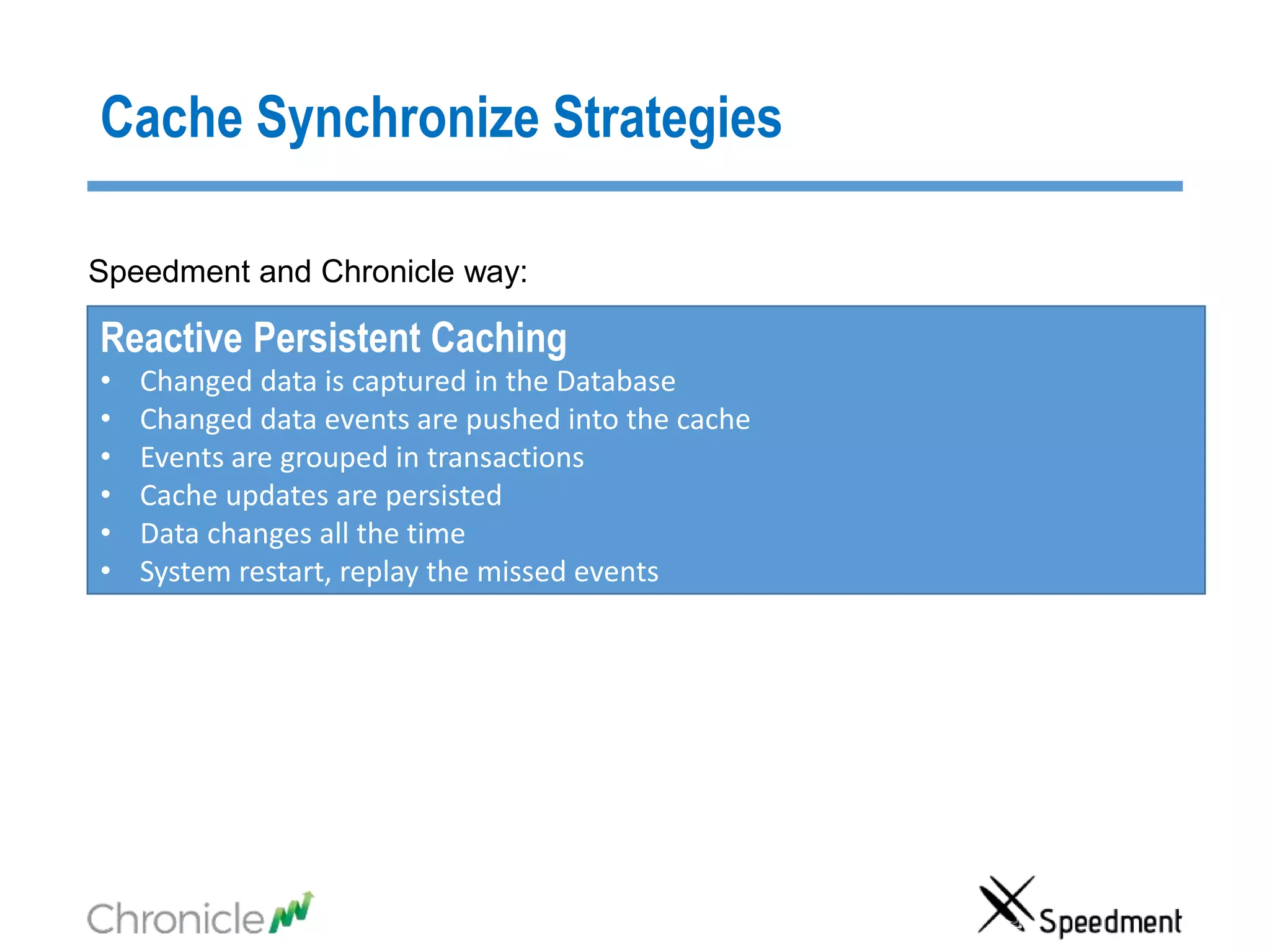

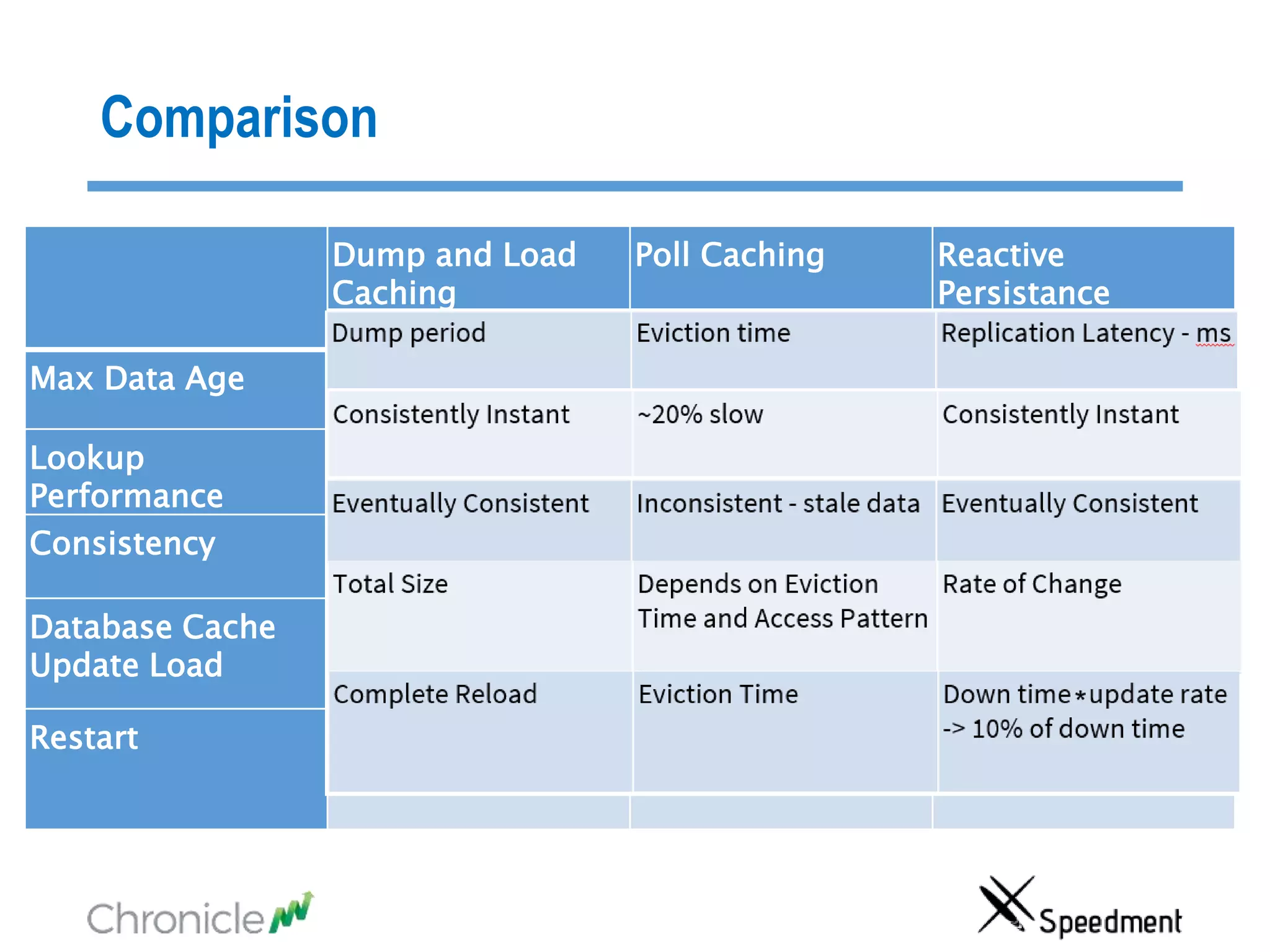

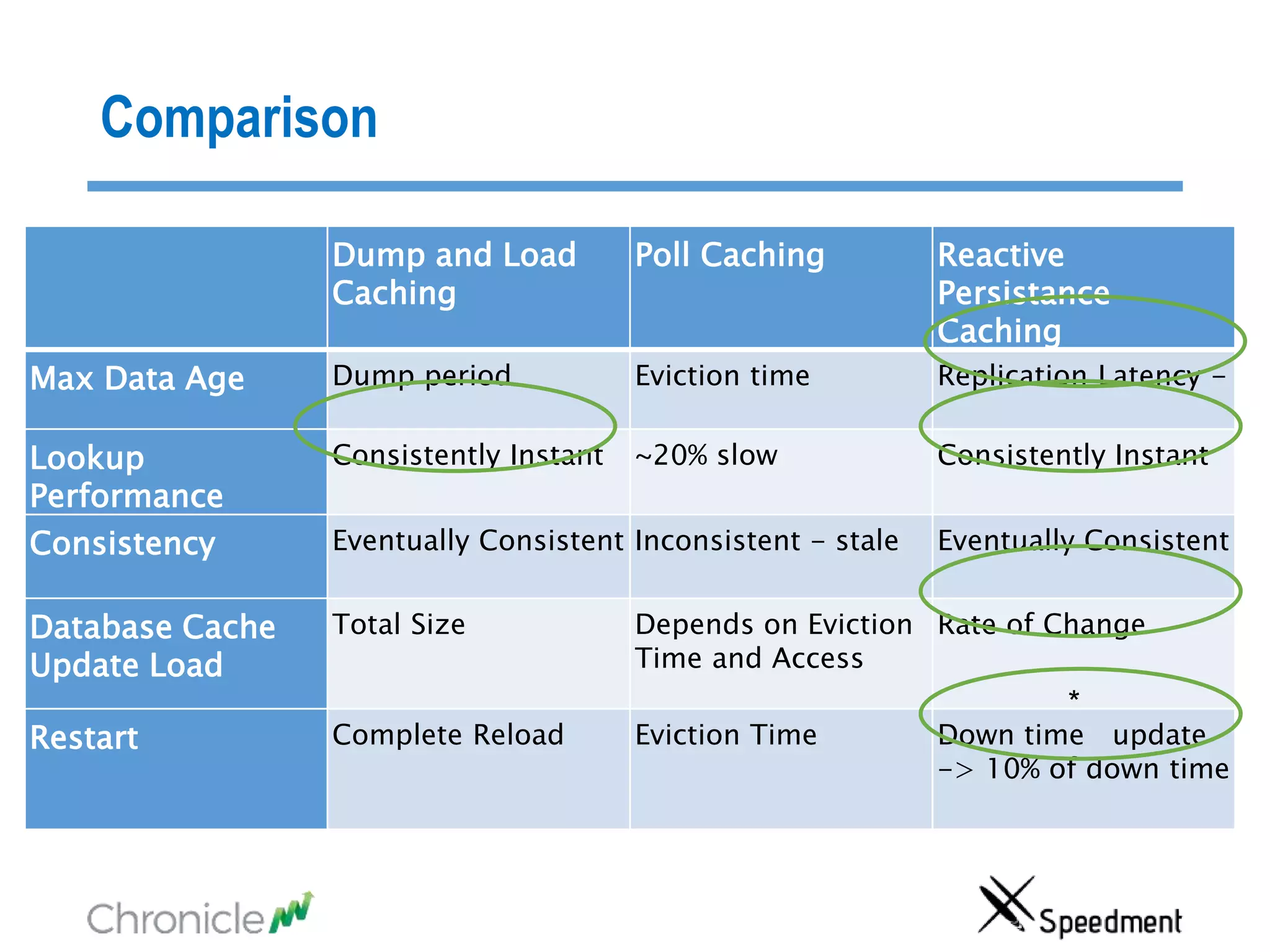

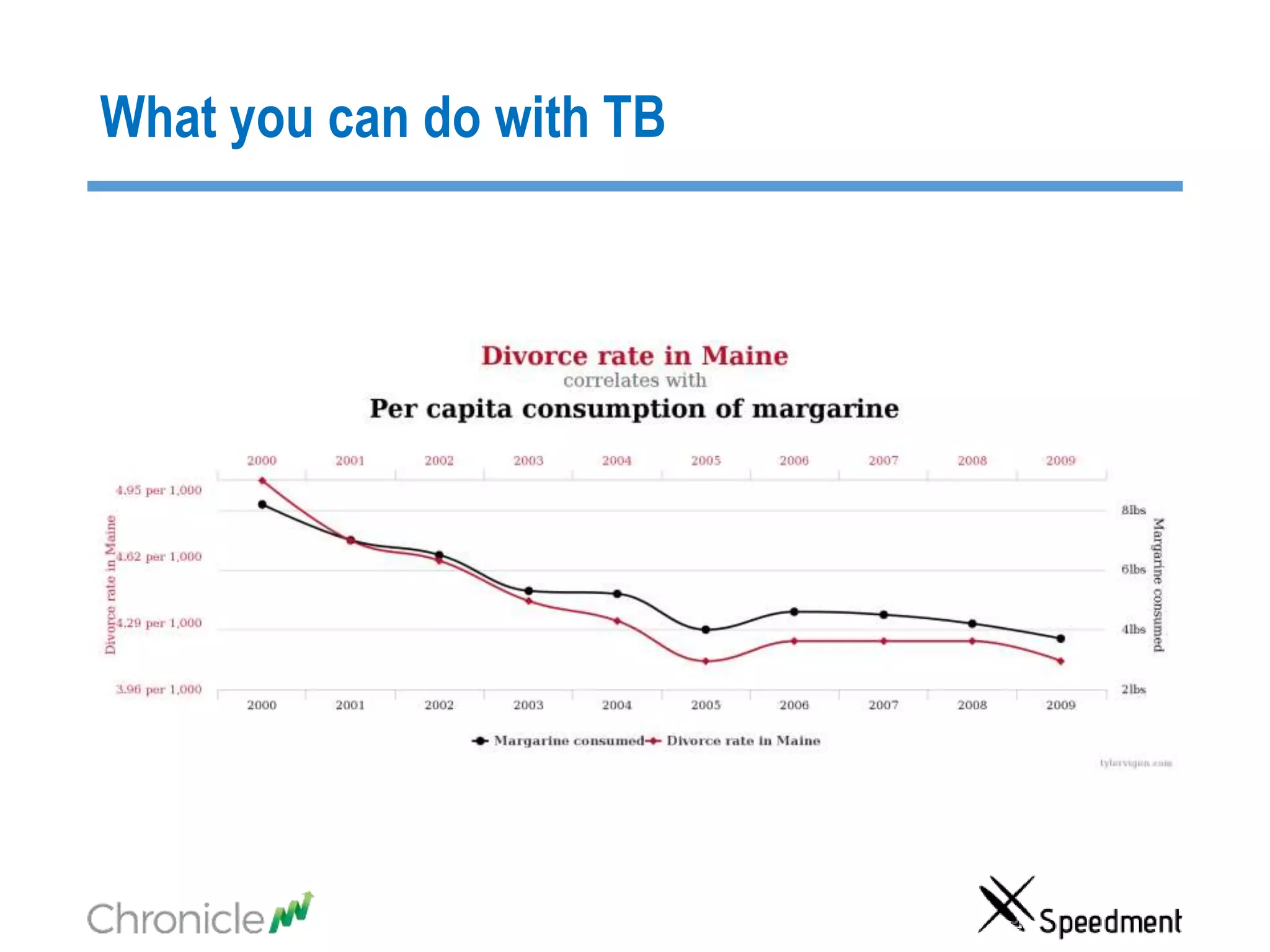

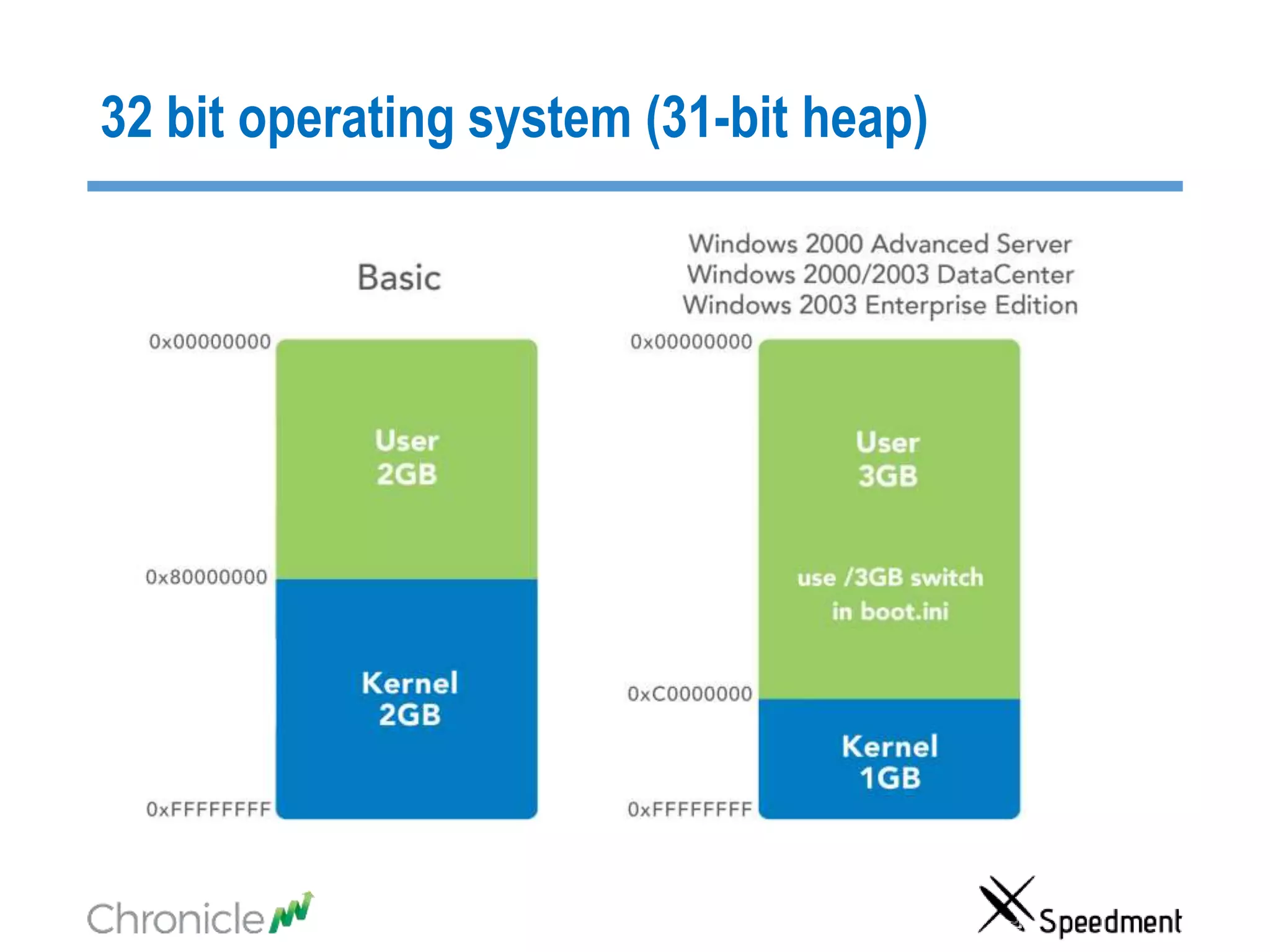

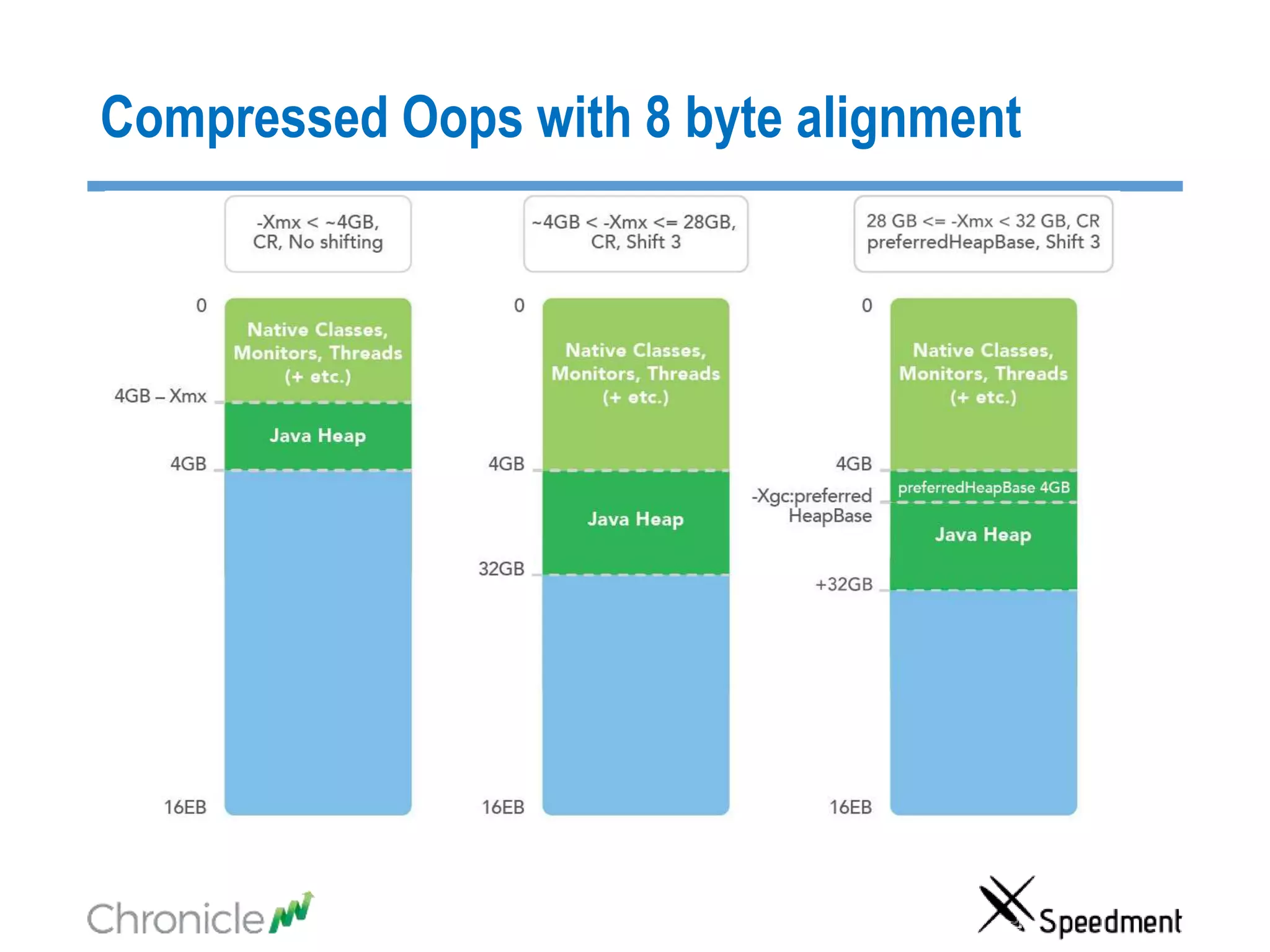

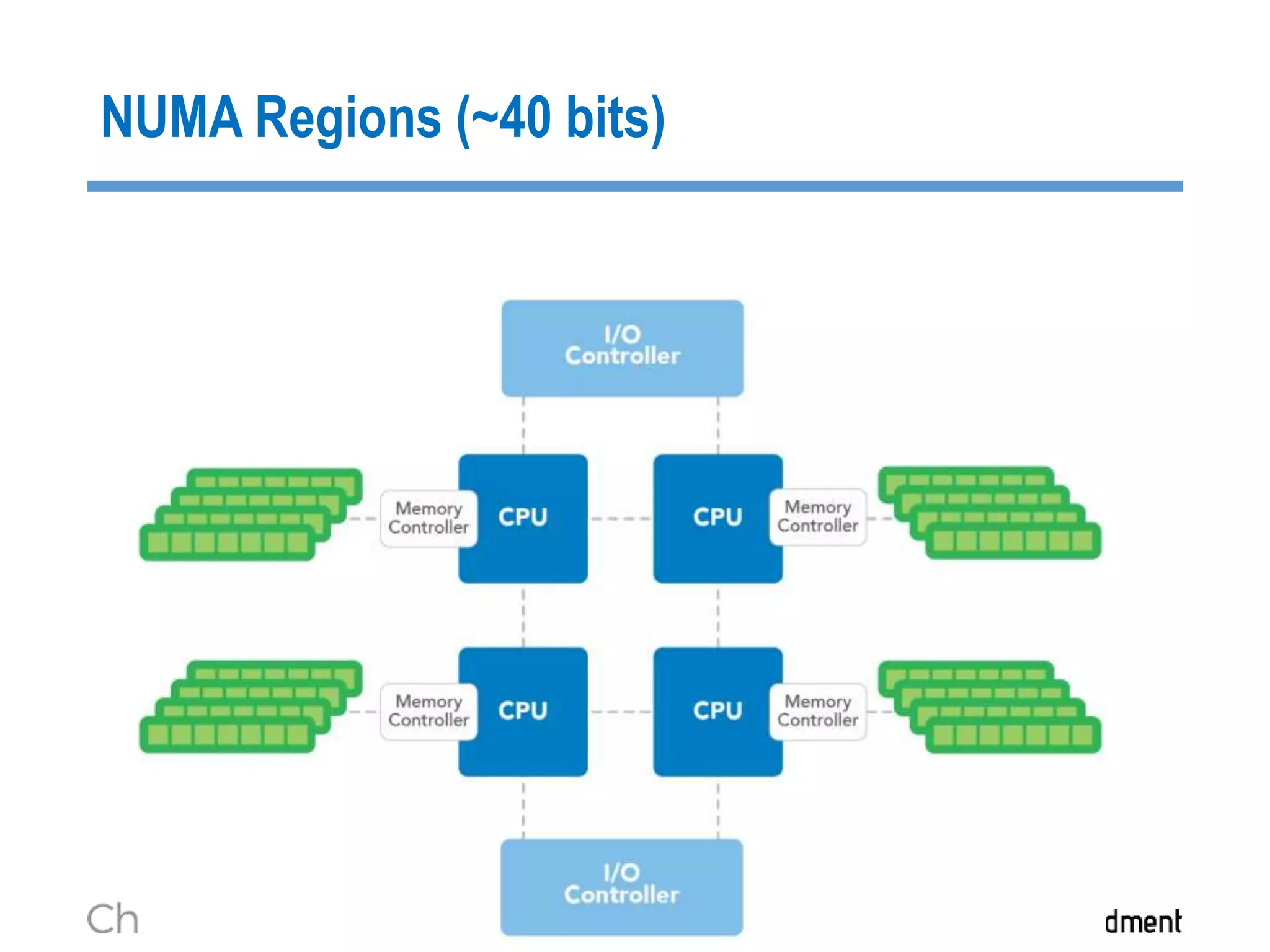

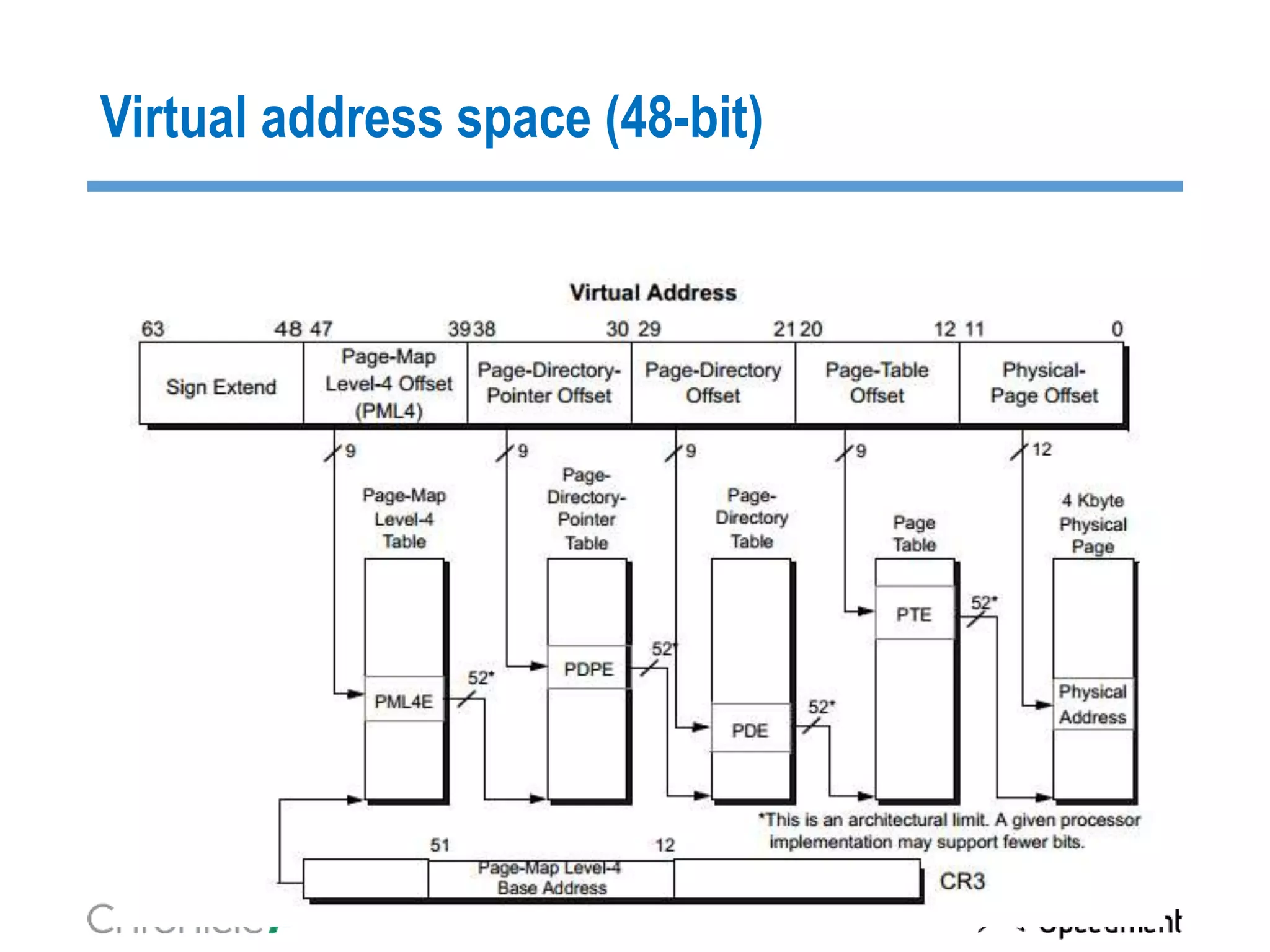

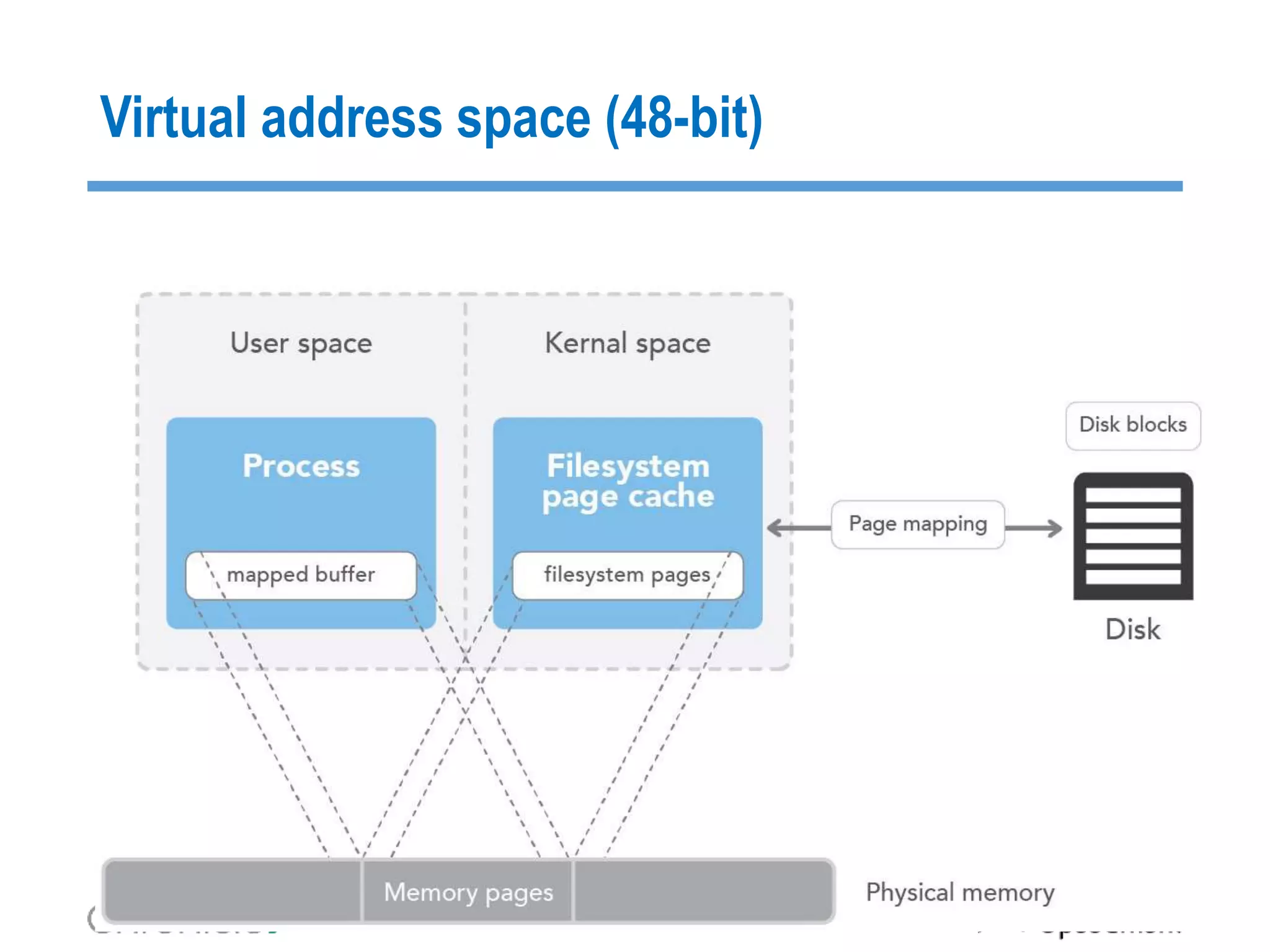

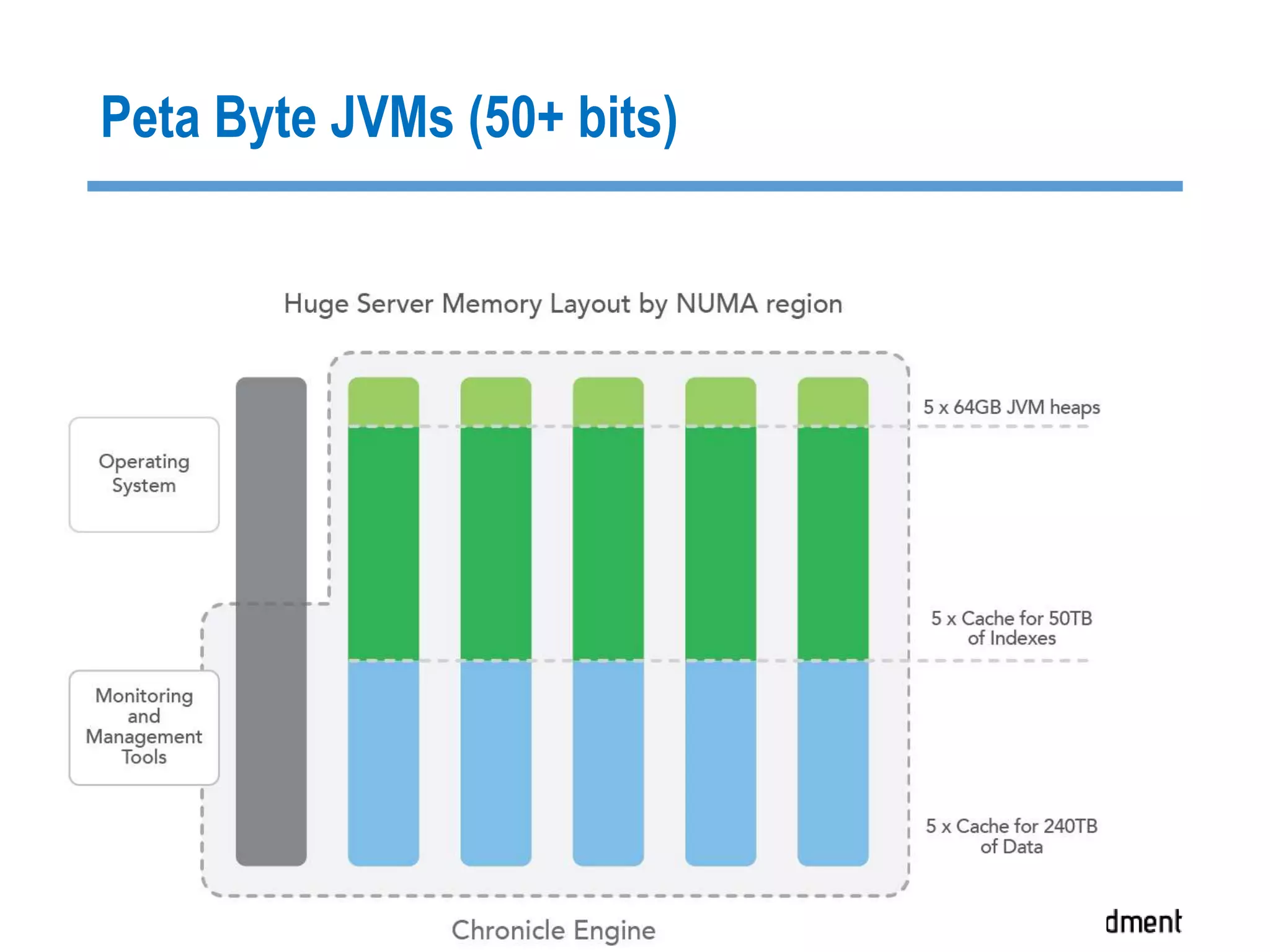

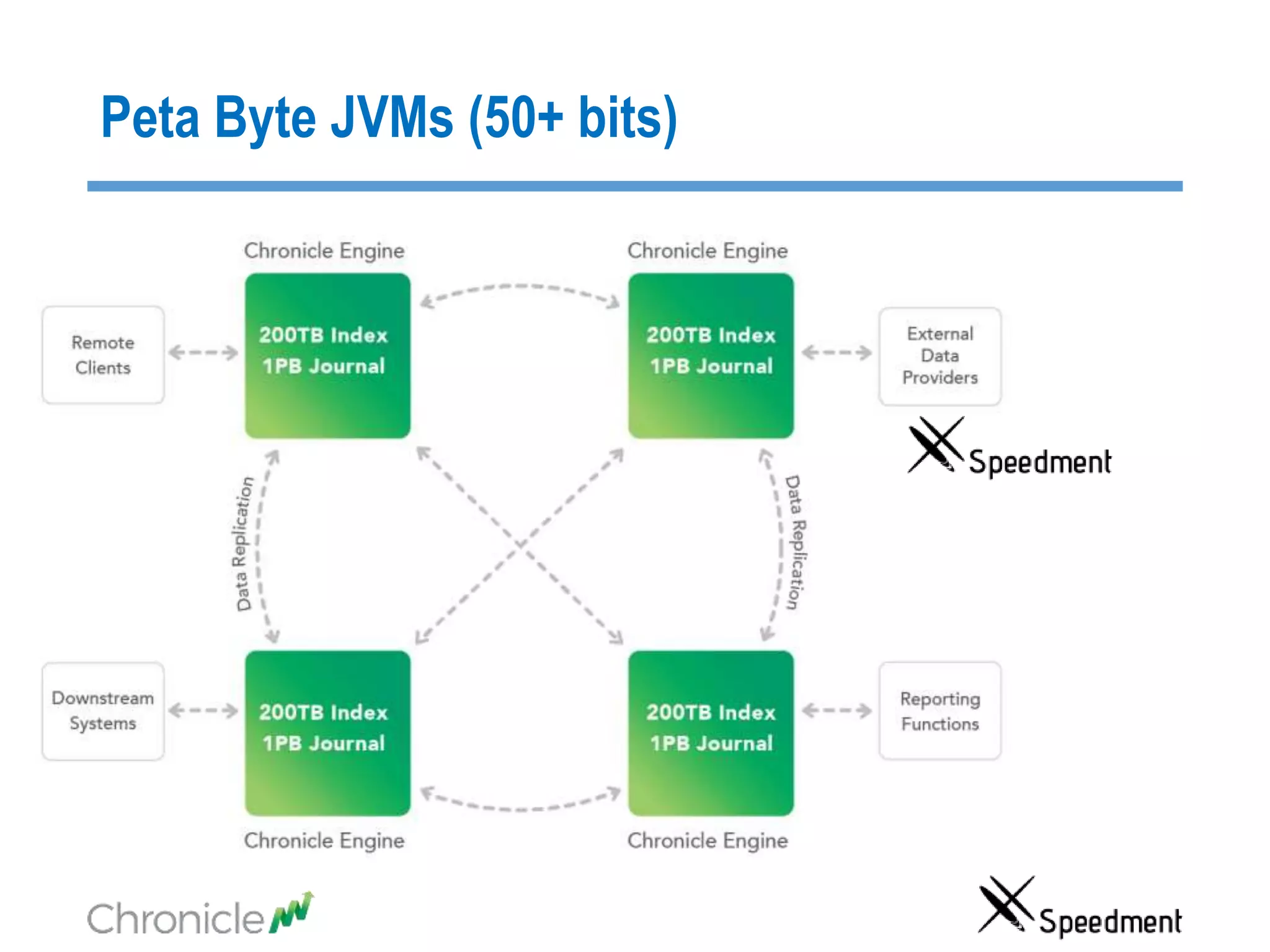

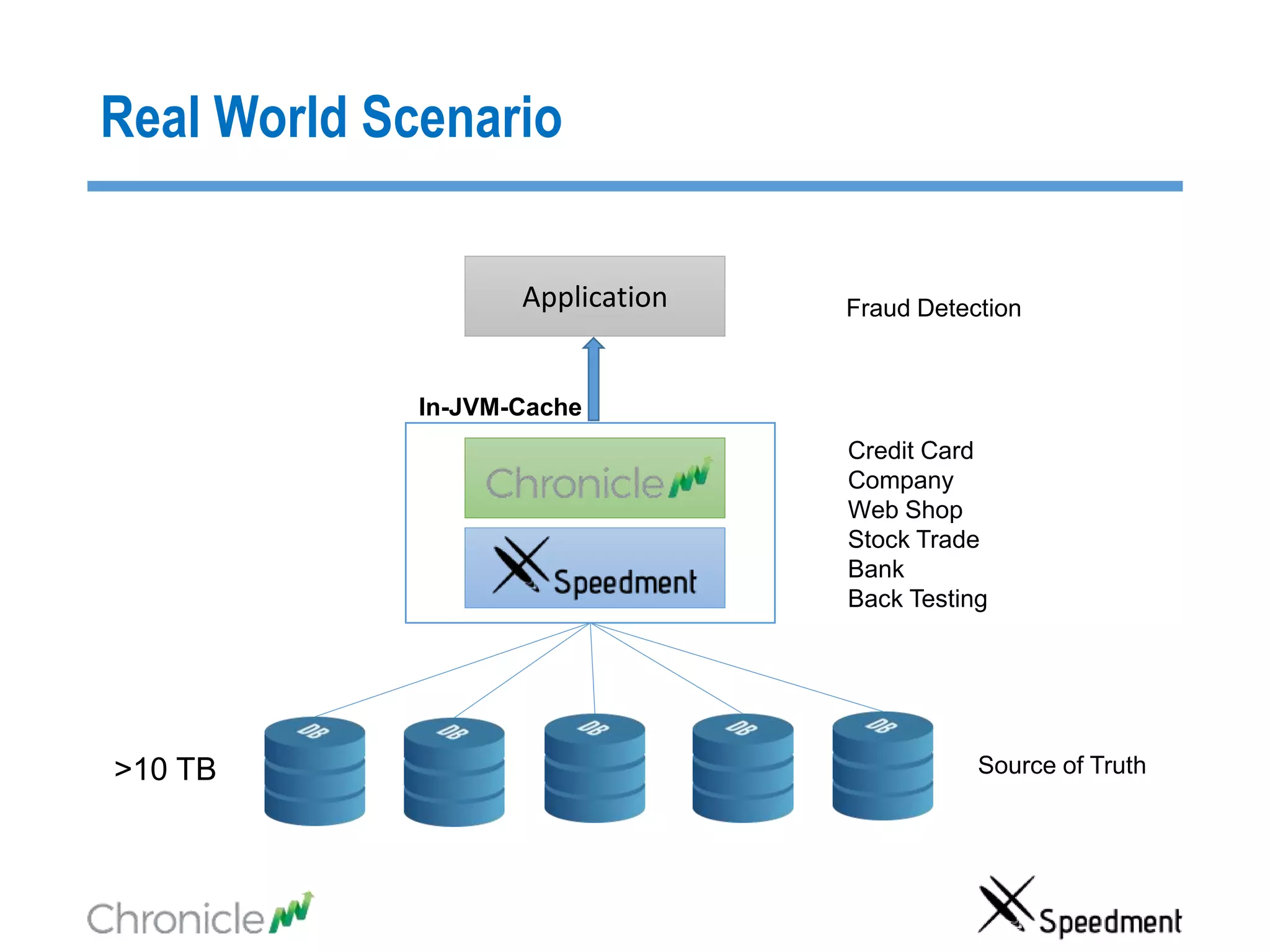

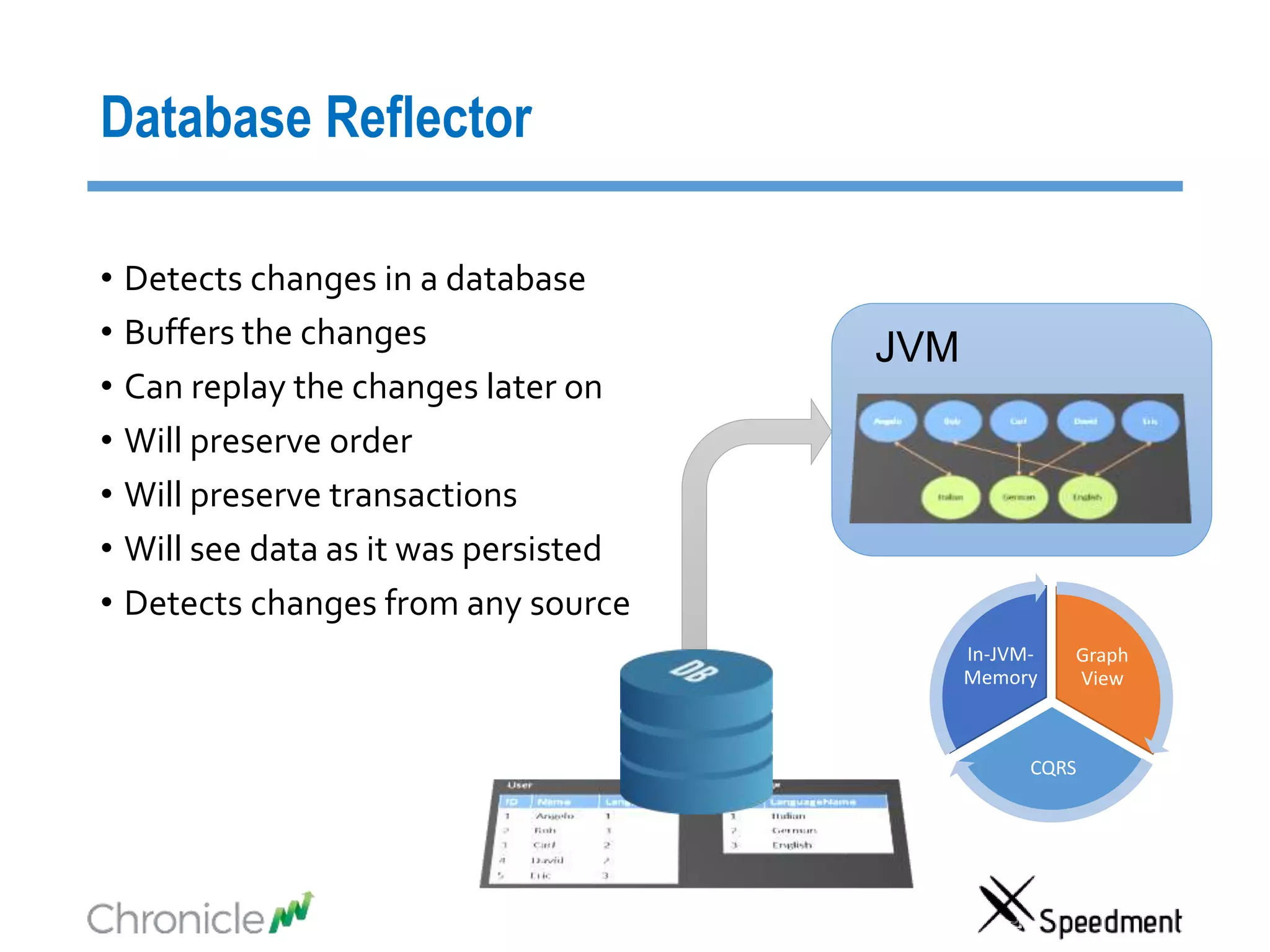

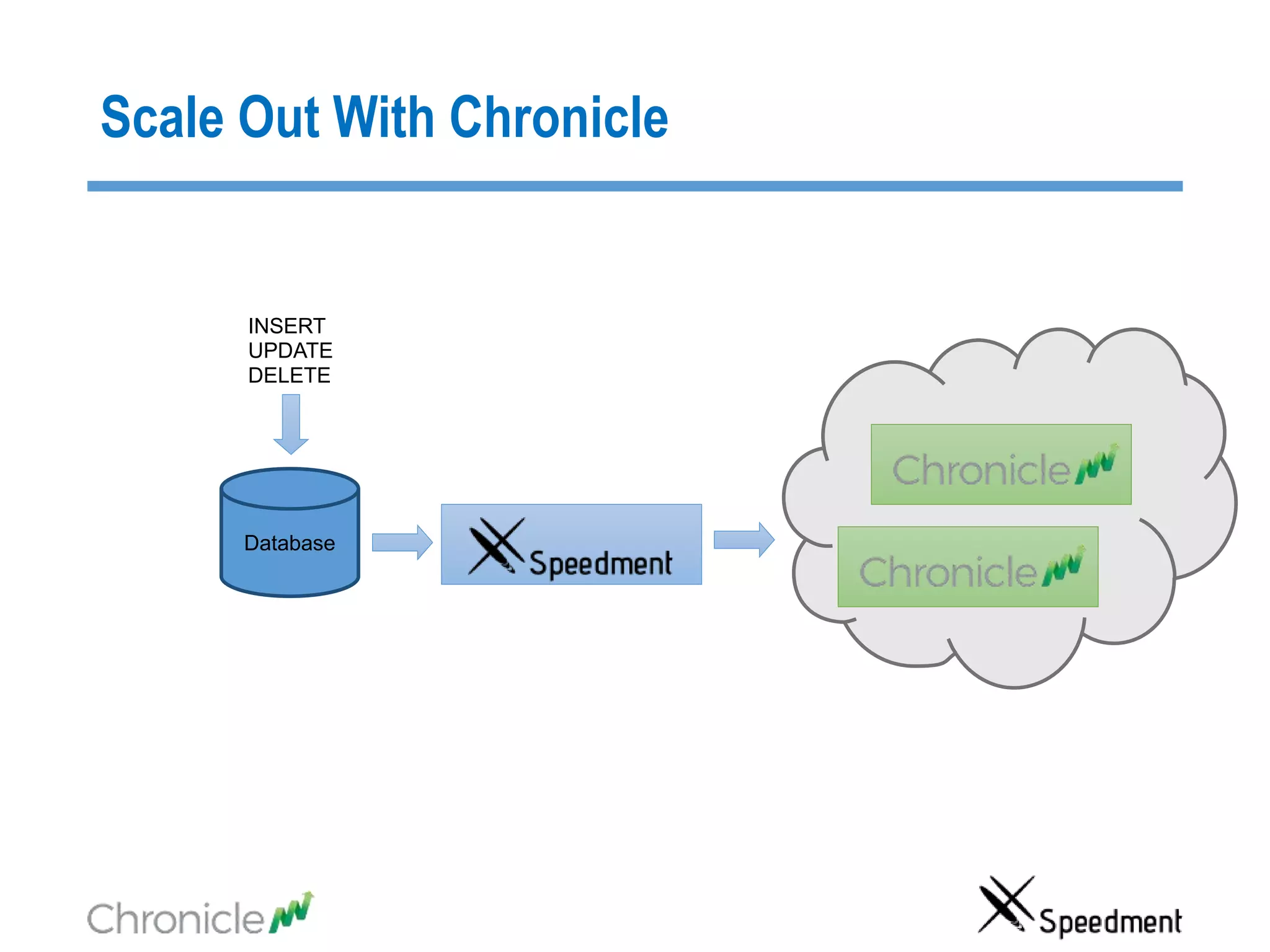

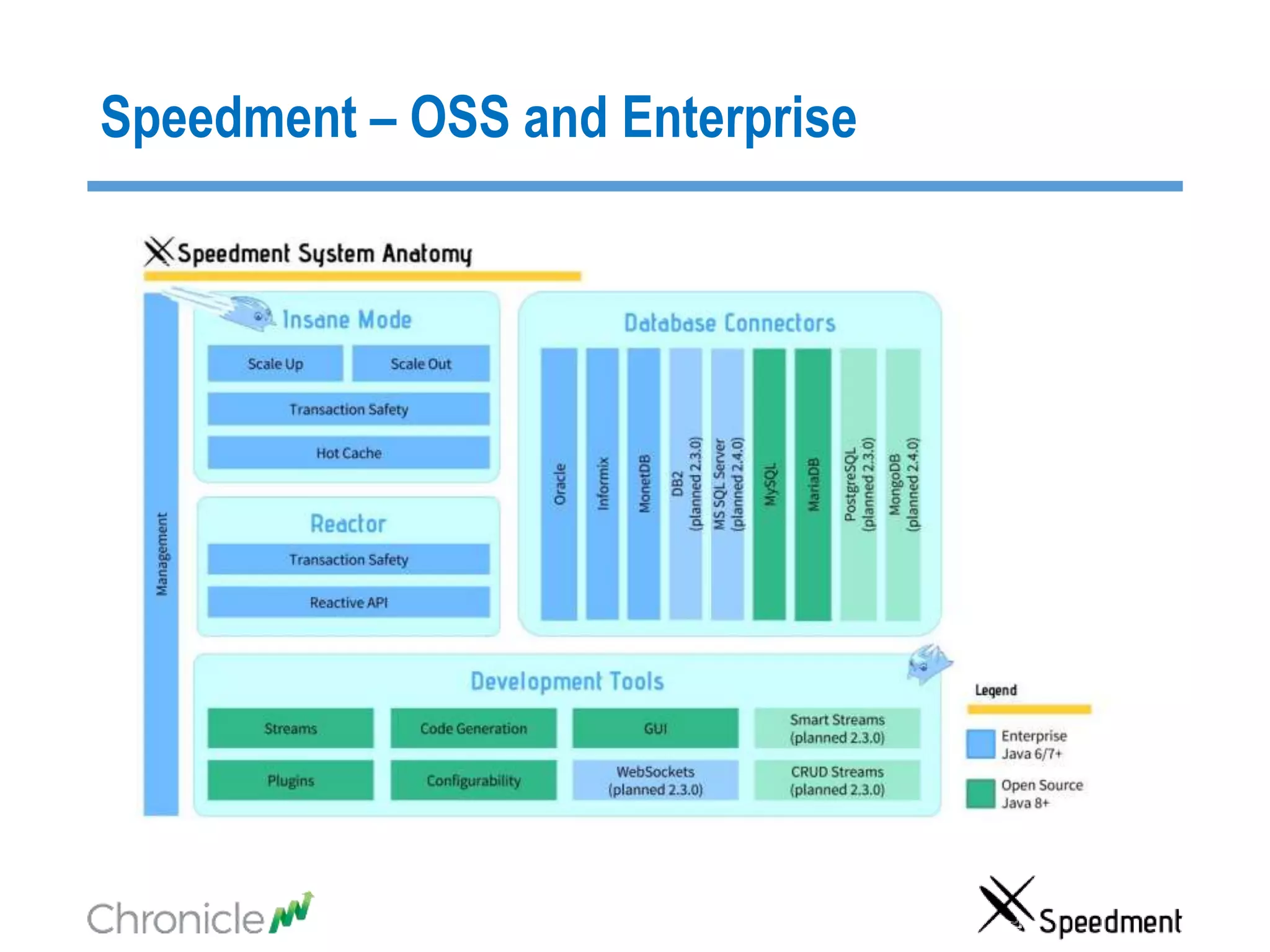

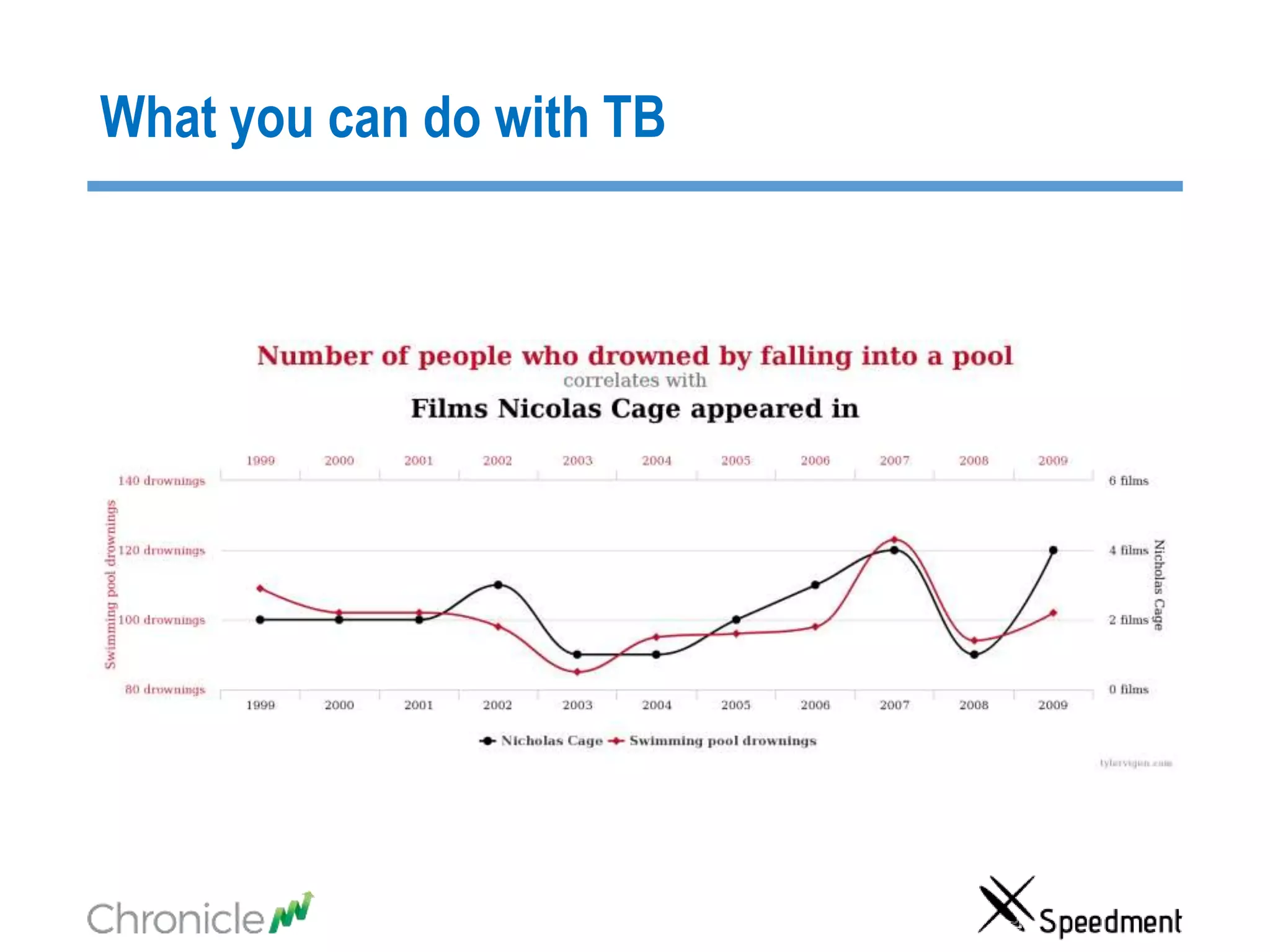

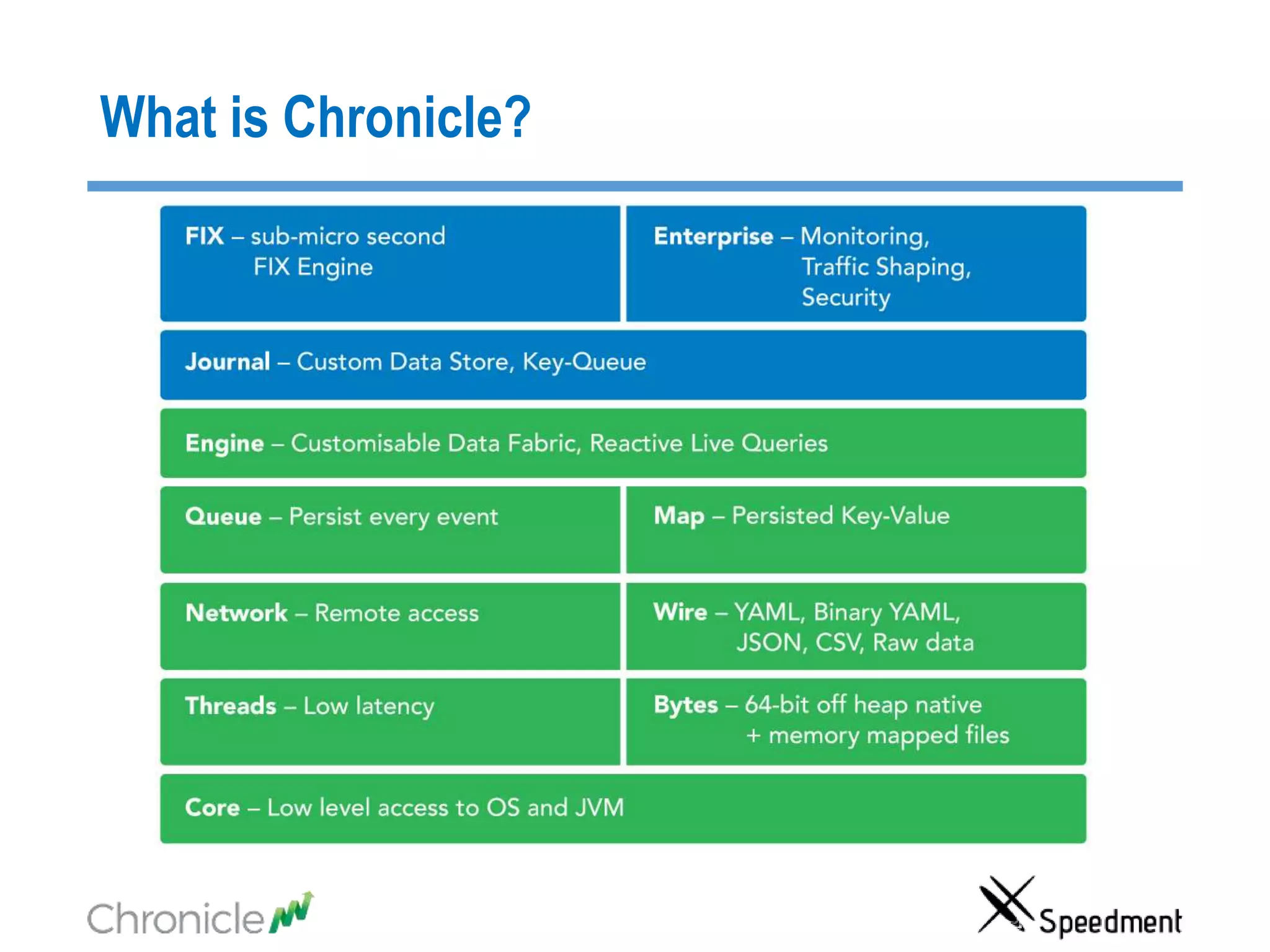

The document discusses strategies for managing big data latency and cache synchronization in JVMs, focusing on in-memory technologies such as Speedment and Chronicle. It emphasizes data locality, the falling cost of RAM, and techniques for efficient caching and for propagating data updates in high-frequency trading environments. It also highlights the complexity of running petabyte-scale JVMs and the need for robust replication and recovery strategies in large systems.
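To make the idea of keeping an in-JVM cache synchronized with a data source more concrete, here is a minimal sketch. It assumes, hypothetically, that updates arrive as a single ordered log of change events, each carrying a monotonically increasing sequence number. The names `ChangeEvent`, `ChangeType` and `ChangeApplyingCache` are illustrative only and are not the Speedment or Chronicle APIs. Reads are served from local memory. Events at or below the last applied sequence are ignored, so a node recovering from a crash can safely replay the log from an earlier position and then resume from `resumeFrom()`.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical change-event kinds, e.g. as read from a database change log.
enum ChangeType { INSERT, UPDATE, DELETE }

// Hypothetical change event: ordered sequence number, operation, row key,
// and new value (null for DELETE).
record ChangeEvent(long sequence, ChangeType type, long key, String value) {}

// Minimal in-JVM cache kept in sync by applying ordered change events.
// Assumes events arrive in order; a real system would also detect gaps.
final class ChangeApplyingCache {
    private final Map<Long, String> rows = new ConcurrentHashMap<>();
    private long lastAppliedSequence = 0;

    // Applies one event. Duplicate or stale events (sequence <= last applied)
    // are skipped, which makes replaying the log after a restart idempotent.
    synchronized boolean apply(ChangeEvent e) {
        if (e.sequence() <= lastAppliedSequence) {
            return false;
        }
        switch (e.type()) {
            case INSERT, UPDATE -> rows.put(e.key(), e.value()); // value must be non-null
            case DELETE -> rows.remove(e.key());
        }
        lastAppliedSequence = e.sequence();
        return true;
    }

    // Reads hit local memory only, with no round trip to the database.
    Optional<String> get(long key) {
        return Optional.ofNullable(rows.get(key));
    }

    // Log position from which to resume reading after recovery.
    synchronized long resumeFrom() {
        return lastAppliedSequence + 1;
    }
}
```

The writer path is serialized with `synchronized` so the sequence check and the map update happen together. Readers use the `ConcurrentHashMap` directly without taking the lock, trading strict read-your-latest-sequence guarantees for lower read latency.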