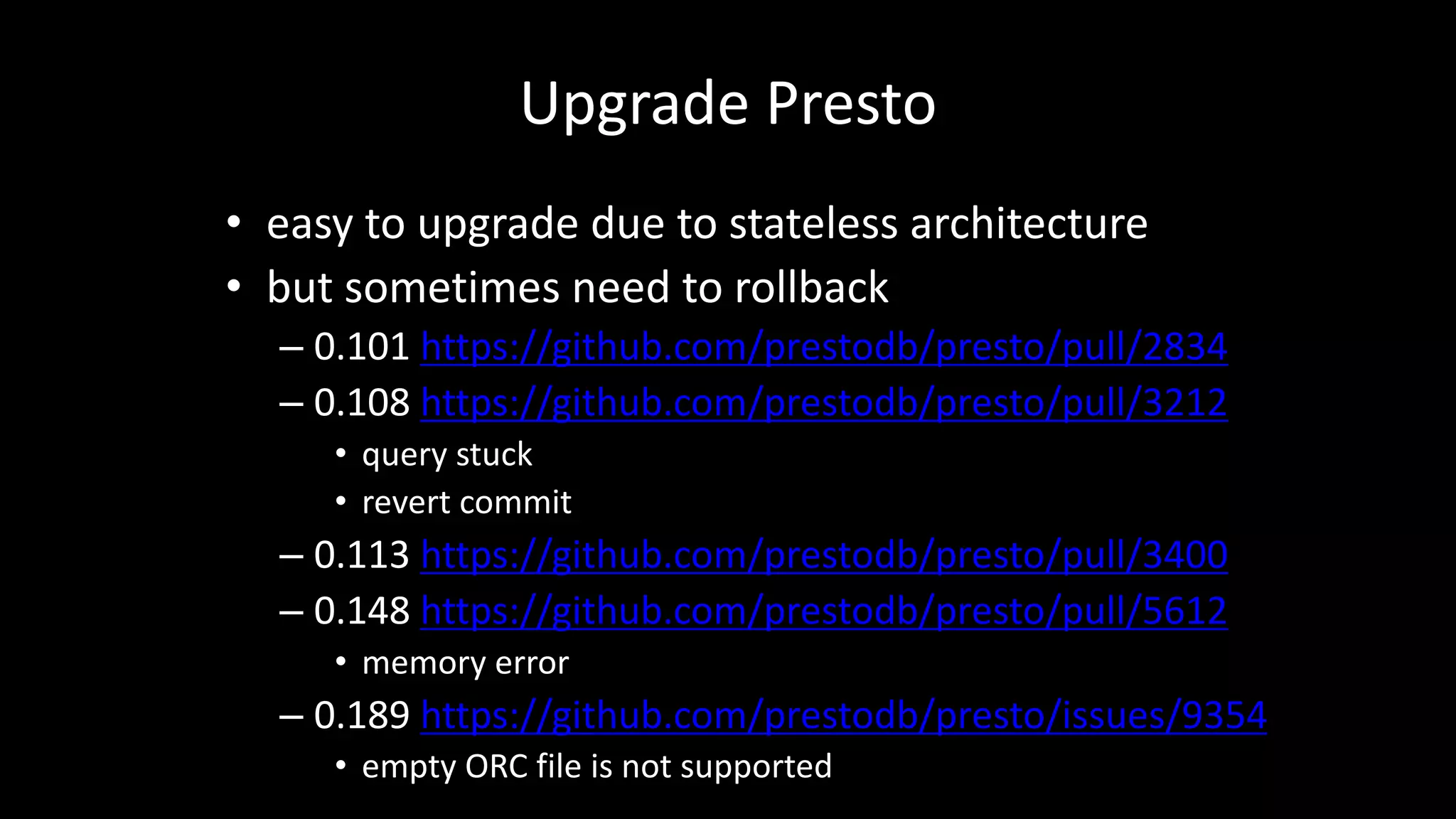

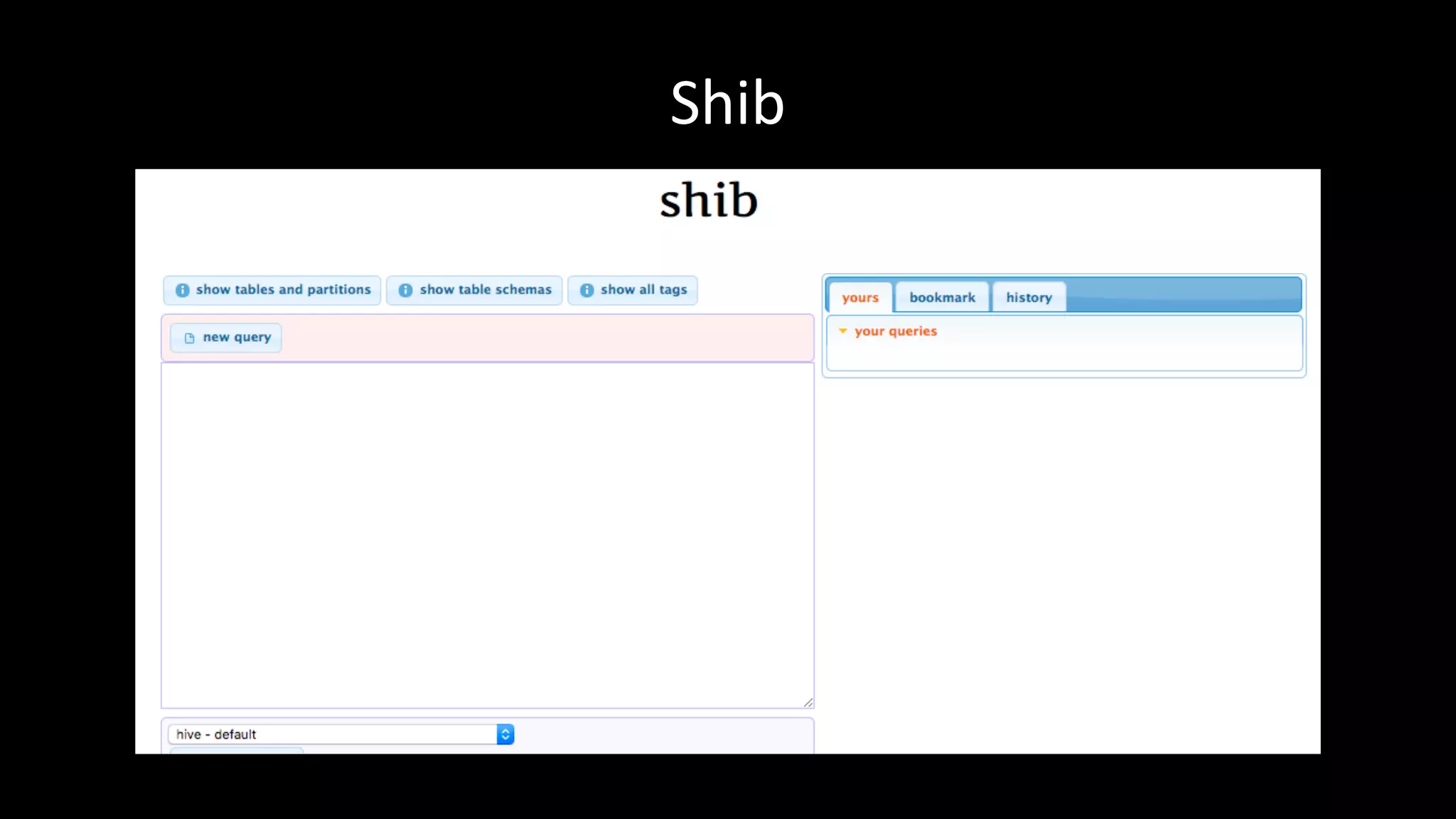

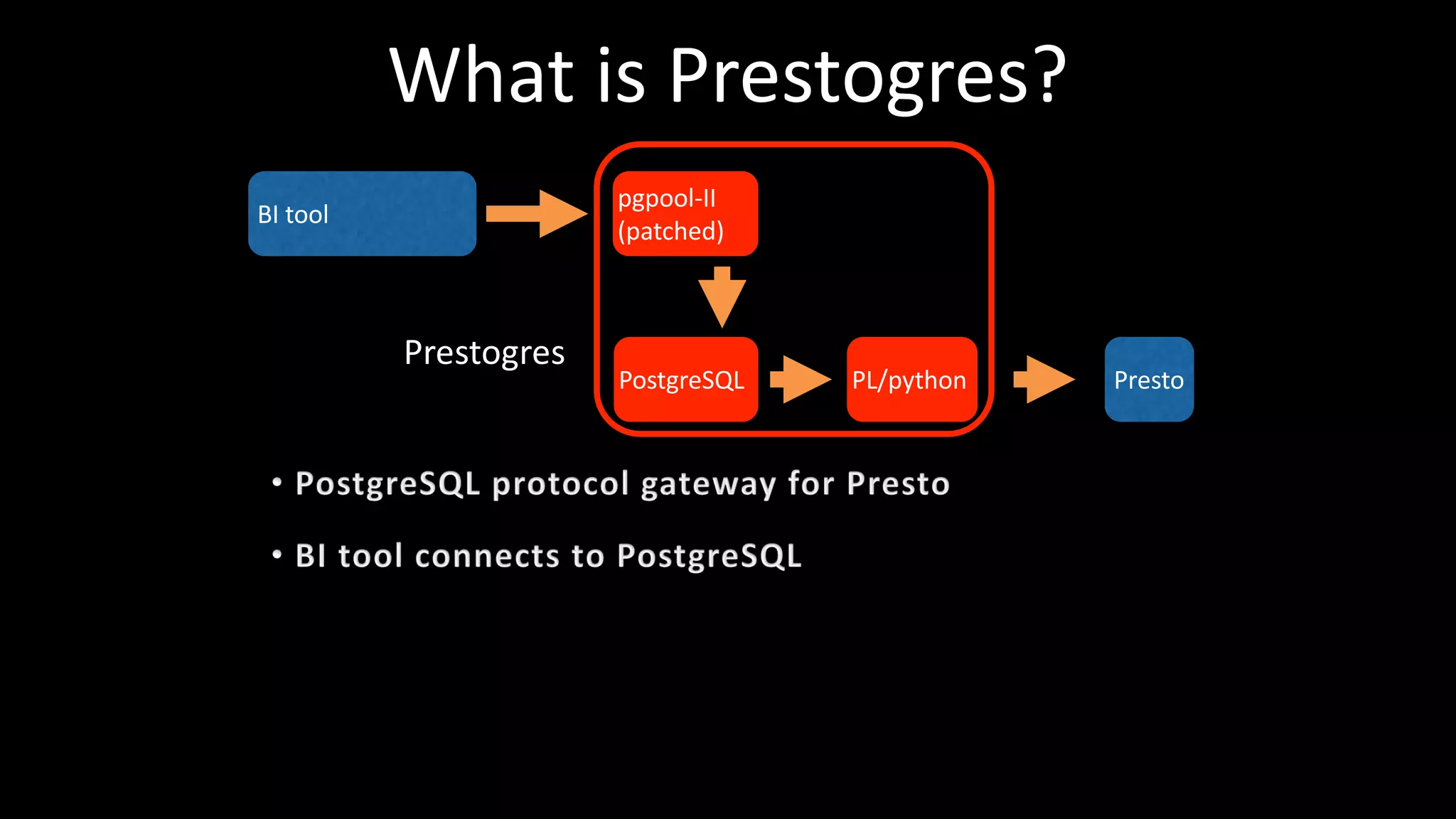

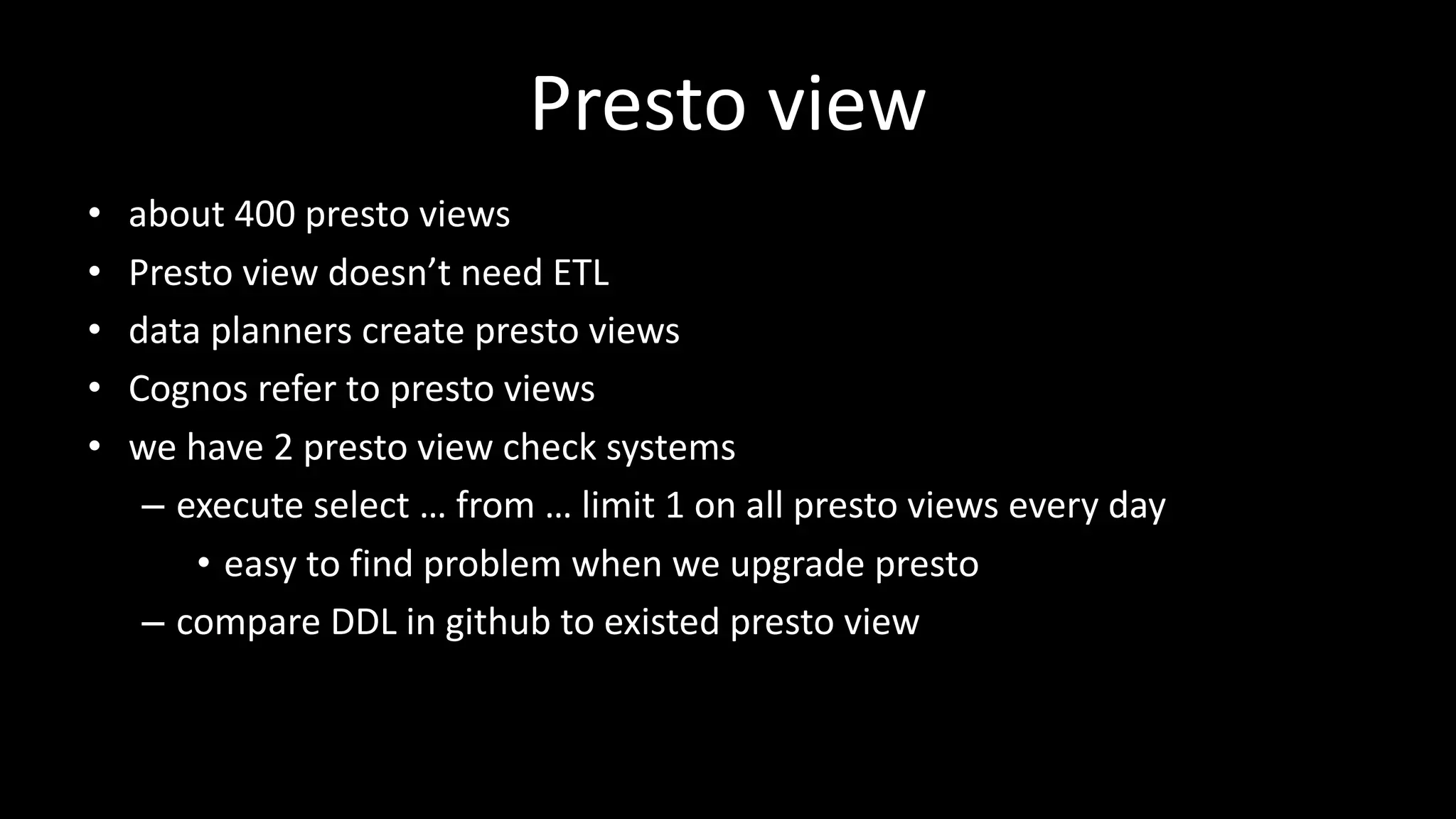

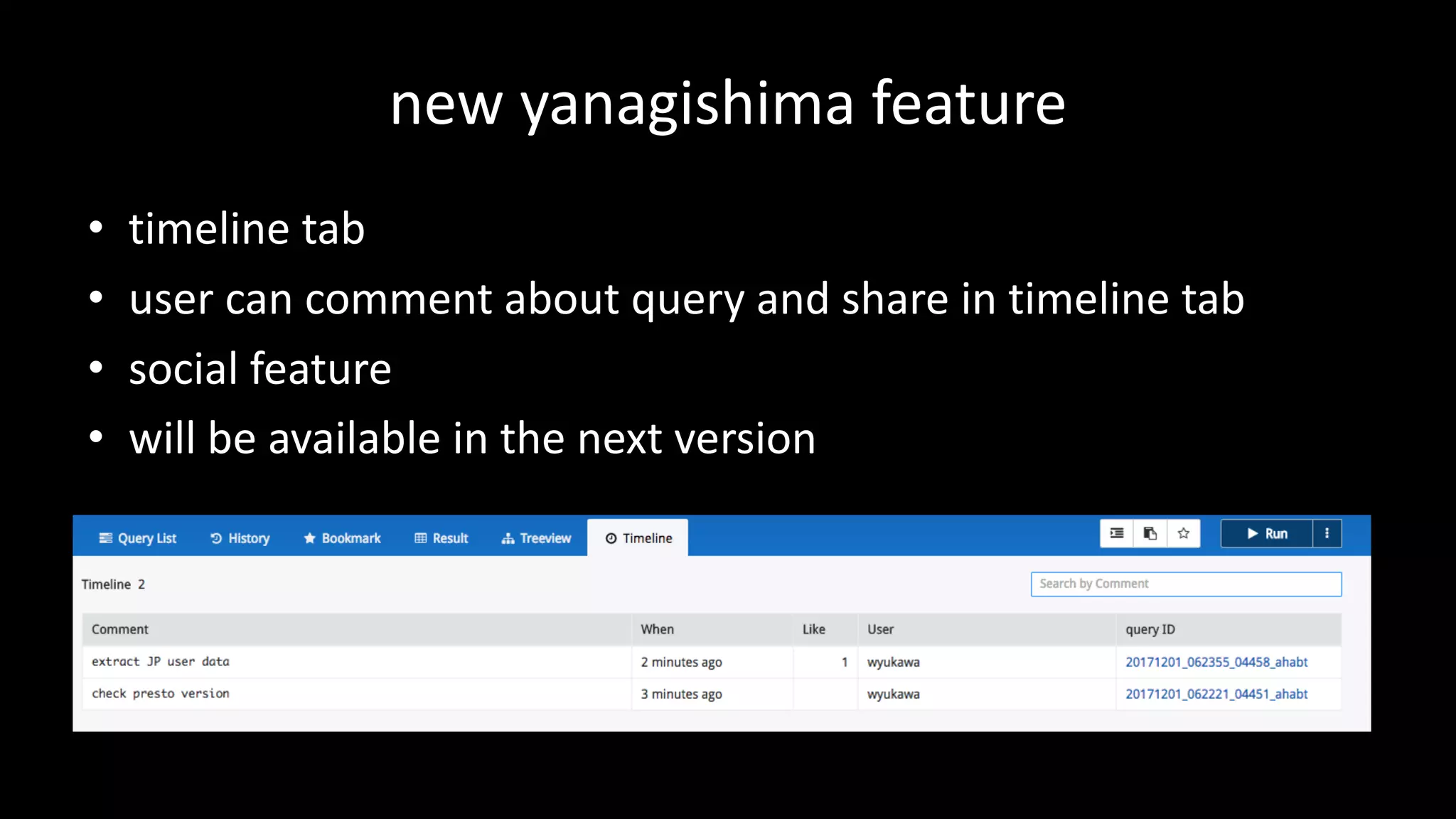

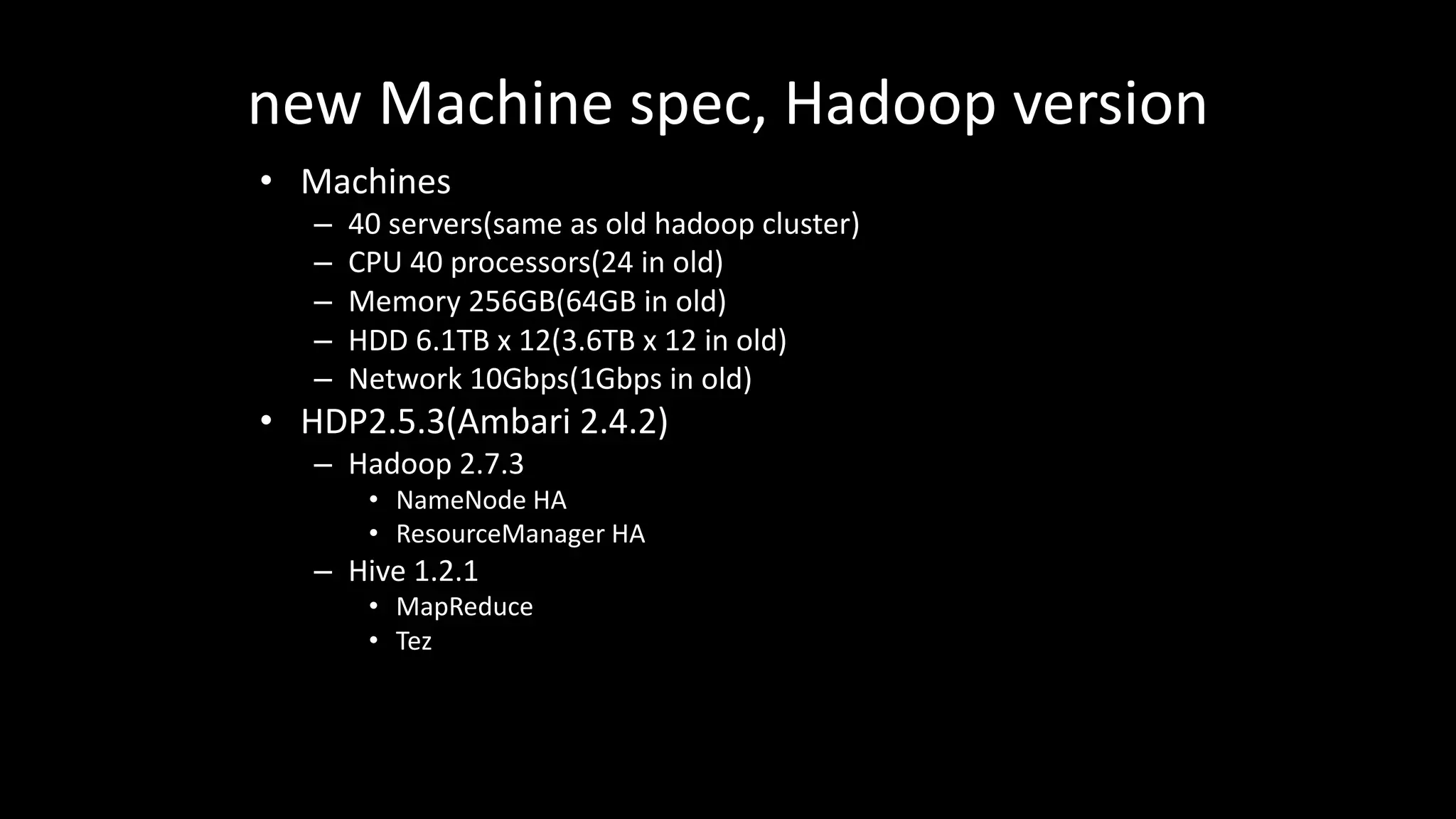

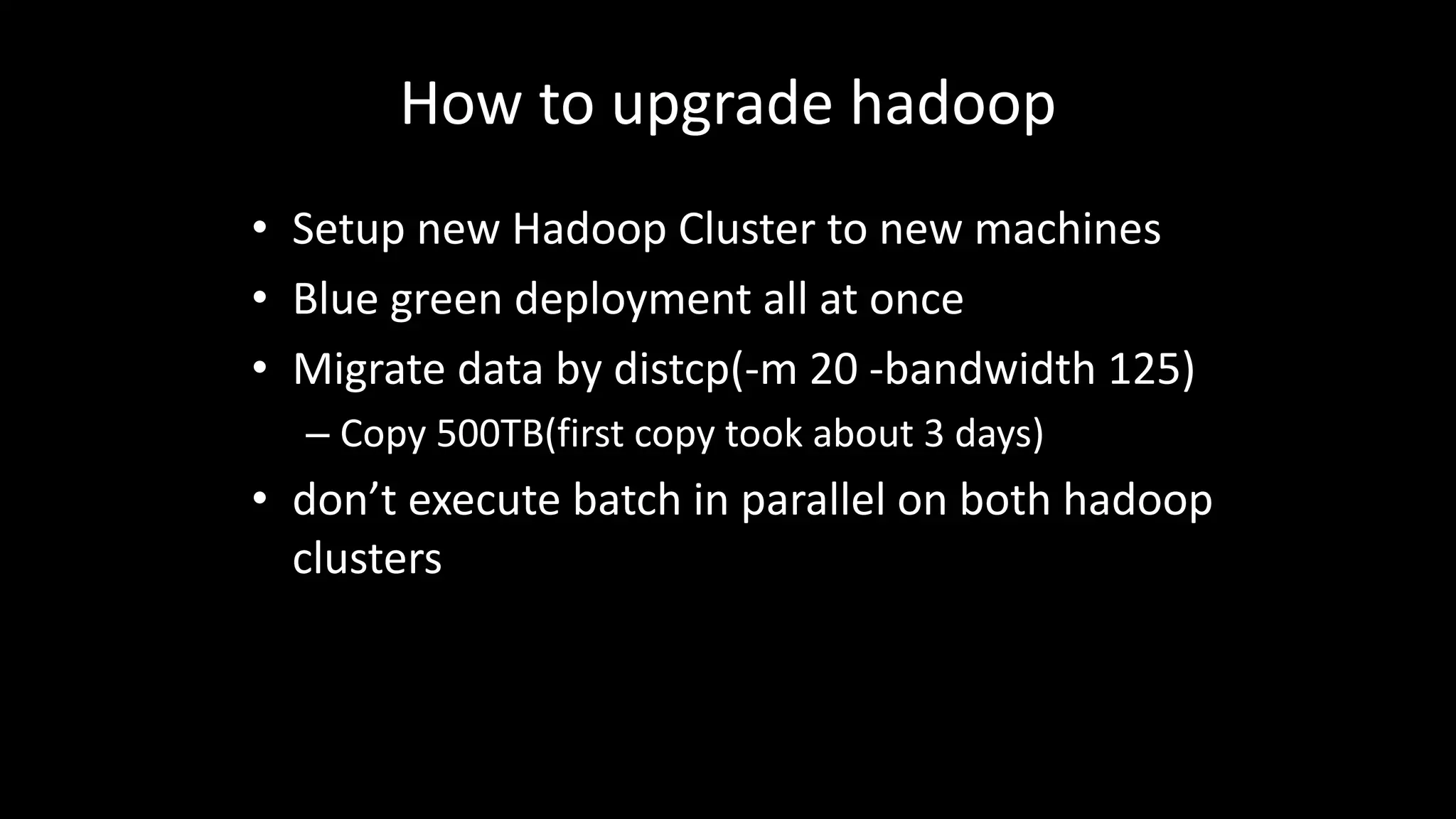

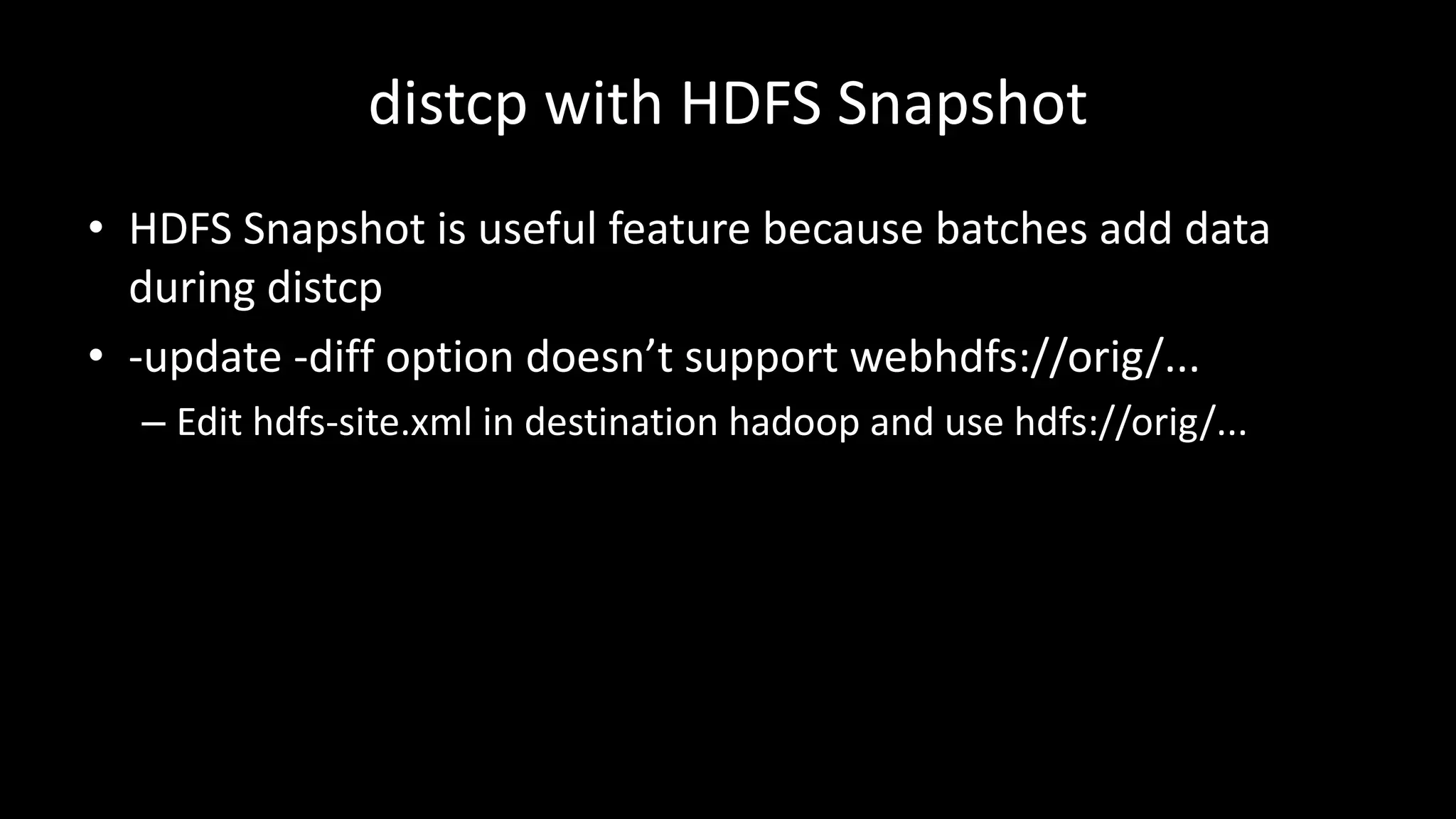

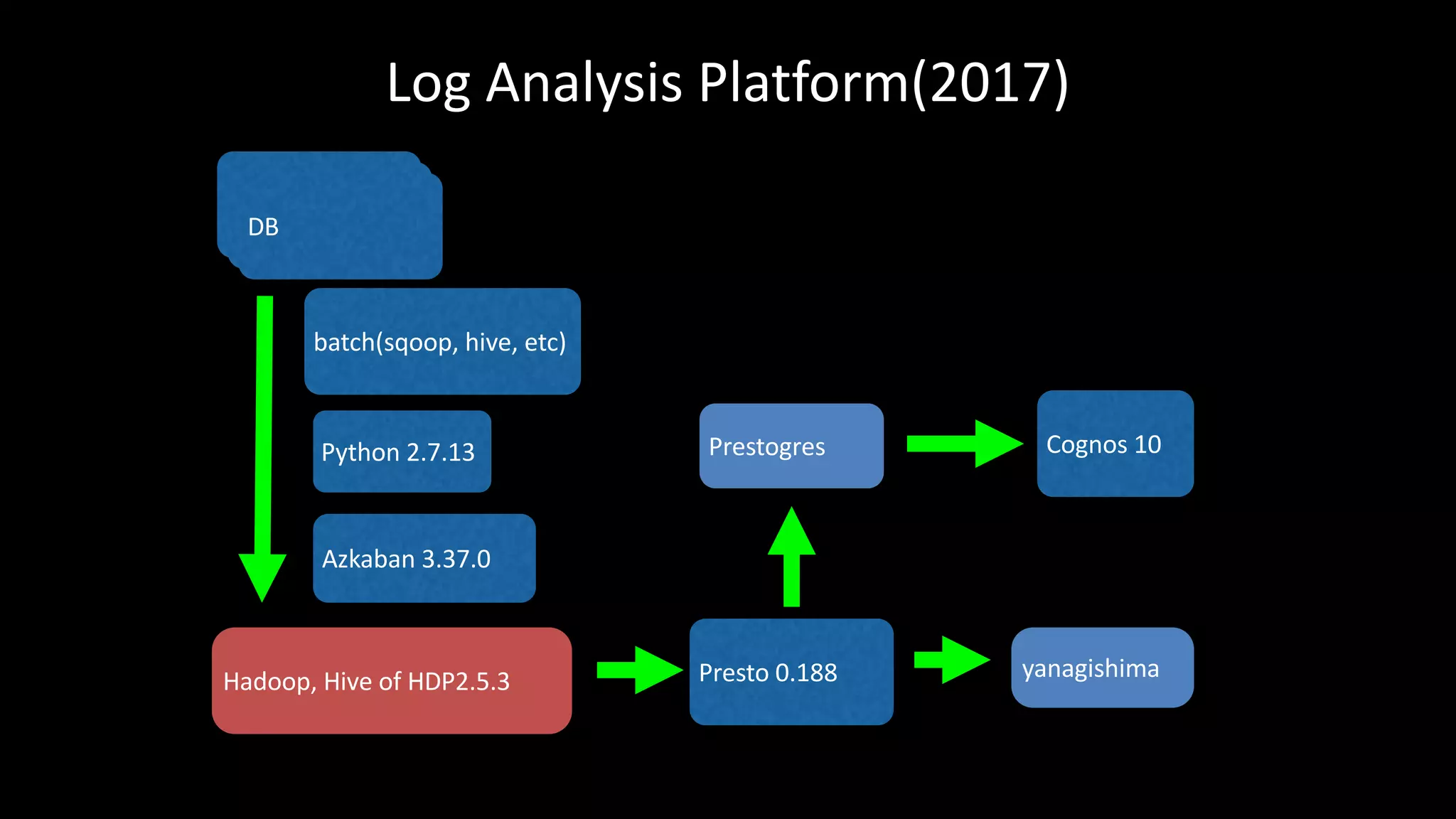

This document summarizes Wataru Yukawa's presentation on the evolution of LINE's log analysis platform over the past three years. It first describes the platform as originally built in 2014 on Hadoop, Hive, Presto, Azkaban, and Cognos. It then covers the addition of Prestogres, which allowed Cognos to connect to Presto, and the development of yanagishima, a web UI for Presto. Next, it outlines the 2016 upgrade of the Hadoop cluster to a newer version with more resources, including how data and applications were migrated. Finally, it gives an overview of the platform as it stood in 2017.