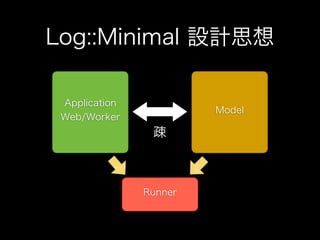

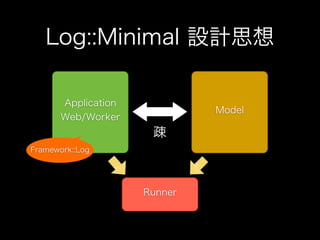

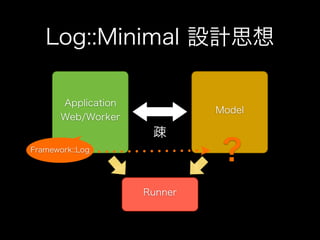

The document shows how to log at different severity levels in Perl with the Log::Minimal module. It calls critf(), warnf(), infoff(), and debugff() to emit messages tagged CRITICAL, WARN, INFO, and DEBUG, then shows how to customize the log format and filter messages by level. It also covers logging from a Plack application with Log::Minimal and defining named SQL queries with DBIx::Sunny::Schema.

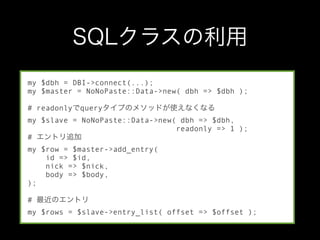

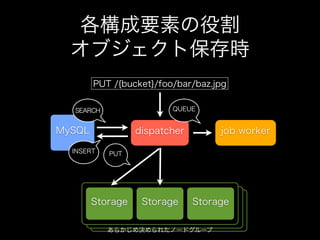

![$ LM_DEBUG=1 perl /tmp/logminimal.pl

2011-05-11T15:57:49 [CRITICAL] foo at /tmp/logminimal.pl line 7

2011-05-11T15:57:49 [WARN] 1 foo at /tmp/logminimal.pl line 8

2011-05-11T15:57:49 [INFO] foo at /tmp/logminimal.pl line 11 ,/tmp/logminimal.pl line 14

2011-05-11T15:57:49 [DEBUG] bar at /tmp/logminimal.pl line 12 ,/tmp/logminimal.pl line 14

2011-05-11T15:57:49 [INFO] {'key' => 'val'} at /tmp/logminimal.pl line 17

2011-05-11T15:57:49 [WARN] data is {'key' => 'val'} at /tmp/logminimal.pl line 18](https://image.slidesharecdn.com/yokohamapm7-110513110339-phpapp02/85/Operation-Oriented-Web-Applications-Yokohama-pm7-8-320.jpg)
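The script behind the output above is not shown on the slide. The following is a hypothetical sketch of calls that would produce records of the same shape, assuming Log::Minimal is installed from CPAN. The `inner` sub and the in-memory `@records` list are illustrative only; the records are collected through `$Log::Minimal::PRINT` instead of being written to STDERR.

```perl
use strict;
use warnings;
use Log::Minimal;

# Collect log records in memory instead of printing to STDERR,
# so the result is easy to inspect.
my @records;
local $Log::Minimal::PRINT = sub {
    my ($time, $type, $message, $trace) = @_;
    push @records, { type => $type, message => $message, trace => $trace };
};

# debugf/debugff only emit when the LM_DEBUG environment variable is true.
local $ENV{LM_DEBUG} = 1;

critf('%s', 'foo');          # CRITICAL
warnf('%d %s', 1, 'foo');    # WARN, message "1 foo"

# Hypothetical helper: the ...ff variants record every caller frame.
sub inner {
    infoff('foo');           # INFO with a multi-frame trace
    debugff('bar');          # DEBUG with a multi-frame trace
}
inner();

# ddf() serializes a data structure into a one-line string.
infof(ddf({ key => 'val' }));
warnf('data is %s', ddf({ key => 'val' }));

printf "%-8s %s at %s\n", @{$_}{qw(type message trace)} for @records;
```

Running it with `LM_DEBUG` unset inside the script (remove the `local $ENV{LM_DEBUG}` line) drops the DEBUG record, which mirrors the `LM_DEBUG=1` prefix on the command line above.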

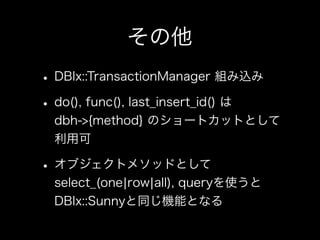

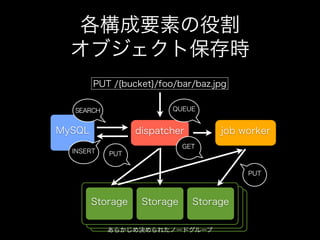

![local $Log::Minimal::PRINT = sub {

my ( $time, $type, $message, $trace) = @_;

print STDERR "[$type] $message $trace";

};

local $Log::Minimal::LOG_LEVEL = "WARN";

infof("foo"); # prints nothing

warnf("xaicron++");](https://image.slidesharecdn.com/yokohamapm7-110513110339-phpapp02/85/Operation-Oriented-Web-Applications-Yokohama-pm7-9-320.jpg)
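To make the filtering visible, here is a minimal sketch that combines a custom `$Log::Minimal::PRINT` with `$Log::Minimal::LOG_LEVEL`, assuming Log::Minimal is installed. The `@lines` buffer and the message texts are made up for illustration; the slide itself prints to STDERR.

```perl
use strict;
use warnings;
use Log::Minimal;

my @lines;
{
    # Both settings are scoped with local, so they revert at the end of the block.
    local $Log::Minimal::PRINT = sub {
        my ($time, $type, $message, $trace) = @_;
        push @lines, "[$type] $message";    # skip time and trace
    };
    local $Log::Minimal::LOG_LEVEL = 'WARN';

    infof('below threshold');    # dropped: INFO ranks below WARN
    warnf('at threshold');       # kept
    critf('above threshold');    # kept
}

print "$_\n" for @lines;
```

Because both variables are set with `local`, a stricter level can be applied to one code path without affecting logging elsewhere in the process.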

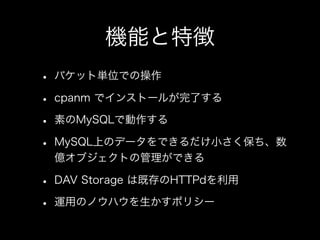

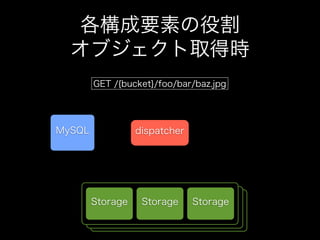

![local $Log::Minimal::AUTODUMP = 1;

warnf("response => %s",[ 200, ['Content-Type','text/plain'],['OK']]);

# 2011-05-11T15:56:14 [WARN] response => [200,['Content-Type','text/plain'],['OK']] at ..

sub myerror {

local $Log::Minimal::TRACE_LEVEL = 1;

infof(@_);

}

myerror("foo");](https://image.slidesharecdn.com/yokohamapm7-110513110339-phpapp02/85/Operation-Oriented-Web-Applications-Yokohama-pm7-10-320.jpg)
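The effect of `$Log::Minimal::TRACE_LEVEL` is easier to see when the reported location is captured. The sketch below assumes Log::Minimal is installed; `log_info`, `@records`, and `$call_line` are hypothetical names. With `TRACE_LEVEL` set to 1 inside the wrapper, the trace should point at the line that called the wrapper rather than at the `infof` line inside it.

```perl
use strict;
use warnings;
use Log::Minimal;

# Capture records so the message and trace can be inspected.
my @records;
local $Log::Minimal::PRINT = sub {
    my ($time, $type, $message, $trace) = @_;
    push @records, { message => $message, trace => $trace };
};

{
    # With AUTODUMP on, reference arguments are serialized into the message.
    local $Log::Minimal::AUTODUMP = 1;
    warnf('response => %s', [ 200, [ 'Content-Type', 'text/plain' ], ['OK'] ]);
}

# Hypothetical wrapper: skip one stack frame so the caller's line is reported.
sub log_info {
    local $Log::Minimal::TRACE_LEVEL = 1;
    infof(@_);
}

my $call_line = __LINE__ + 1;    # line number of the call below
log_info('foo');

print "$_->{message} at $_->{trace}\n" for @records;
```

This is the pattern to use when application code wraps the logging functions in its own helpers: without the adjustment, every record would report the helper's own line.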

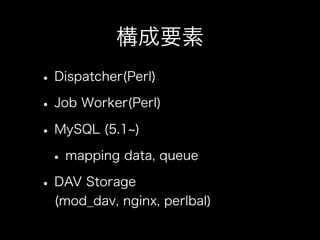

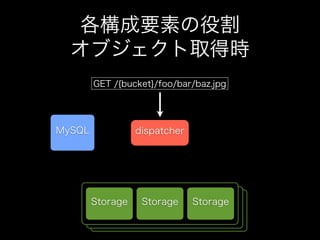

![use Log::Minimal;

use Plack::Builder;

builder {

enable "Log::Minimal", autodump => 1;

sub {

my $env = shift;

warnf("warn message");

debugf("debug message");

...

}

};

$ plackup -a demo.psgi

HTTP::Server::PSGI: Accepting connections at http://0:5000/

2011-05-11T16:32:24 [WARN] [/foo/bar/baz] warn message at /tmp/demo.psgi line 8

2011-05-11T16:32:24 [DEBUG] [/foo/bar/baz] debug message at /tmp/demo.psgi line 9](https://image.slidesharecdn.com/yokohamapm7-110513110339-phpapp02/85/Operation-Oriented-Web-Applications-Yokohama-pm7-16-320.jpg)

![package NoNoPaste::Data;

use parent qw/DBIx::Sunny::Schema/;

__PACKAGE__->query(

'add_entry',

id => 'Str',

nick => { isa => 'Str', default => 'anonymouse' },

body => 'Str',

q{INSERT INTO entries ( id, nick, body, ctime )

values ( ?, ?, ?, NOW() )},

);

__PACKAGE__->select_row(

'entry',

'id' => 'Uint',

q{SELECT id,nick,body,ctime FROM entries WHERE id =?},

);

__PACKAGE__->select_all(

'entries_multi',

'id' => { isa => 'ArrayRef[Uint]' },

q{SELECT id,nick,body,ctime FROM entries WHERE id IN (?)}

);](https://image.slidesharecdn.com/yokohamapm7-110513110339-phpapp02/85/Operation-Oriented-Web-Applications-Yokohama-pm7-28-320.jpg)

![use parent qw/DBIx::Sunny::Schema/;

__PACKAGE__-> (

' ',

=> / ,

[ => / ,[..]],

‘ ’,

);](https://image.slidesharecdn.com/yokohamapm7-110513110339-phpapp02/85/Operation-Oriented-Web-Applications-Yokohama-pm7-29-320.jpg)

![prepare && execute && fetchrow_arrayref->[0];

prepare && execute && fetchrow_hashref;

prepare && execute && push @result, $_ while fetchrow_hashref;

prepare && execute](https://image.slidesharecdn.com/yokohamapm7-110513110339-phpapp02/85/Operation-Oriented-Web-Applications-Yokohama-pm7-30-320.jpg)
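The four shorthand lines above can be spelled out with plain DBI calls. This is a sketch only, assuming DBI and DBD::SQLite are installed; the in-memory database, the reduced `entries` table, and the sample rows are made up for illustration and are not taken from the slides. It shows the underlying DBI patterns, not how DBIx::Sunny::Schema implements its methods.

```perl
use strict;
use warnings;
use DBI;

# In-memory SQLite database; schema and rows are hypothetical.
my $dbh = DBI->connect('dbi:SQLite:dbname=:memory:', '', '',
    { RaiseError => 1, PrintError => 0 });
$dbh->do('CREATE TABLE entries (id INTEGER PRIMARY KEY, nick TEXT, body TEXT)');

# prepare && execute: a statement that returns no rows.
my $ins = $dbh->prepare('INSERT INTO entries (id, nick, body) VALUES (?, ?, ?)');
$ins->execute(1, 'alice', 'first');
$ins->execute(2, 'bob',   'second');

# prepare && execute && fetchrow_arrayref->[0]: a single value.
my $sth = $dbh->prepare('SELECT COUNT(*) FROM entries');
$sth->execute;
my $count = $sth->fetchrow_arrayref->[0];
$sth->finish;

# prepare && execute && fetchrow_hashref: a single row as a hash reference.
$sth = $dbh->prepare('SELECT id, nick, body FROM entries WHERE id = ?');
$sth->execute(2);
my $row = $sth->fetchrow_hashref;
$sth->finish;

# prepare && execute && push ... while fetchrow_hashref: every row.
$sth = $dbh->prepare('SELECT id, nick, body FROM entries ORDER BY id');
$sth->execute;
my @rows;
while (my $r = $sth->fetchrow_hashref) {
    push @rows, $r;
}

print "count=$count nick=$row->{nick} rows=", scalar(@rows), "\n";
```

Each pattern repeats the same prepare and execute steps and differs only in how results are fetched, which is the repetition that a declarative query definition removes.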